
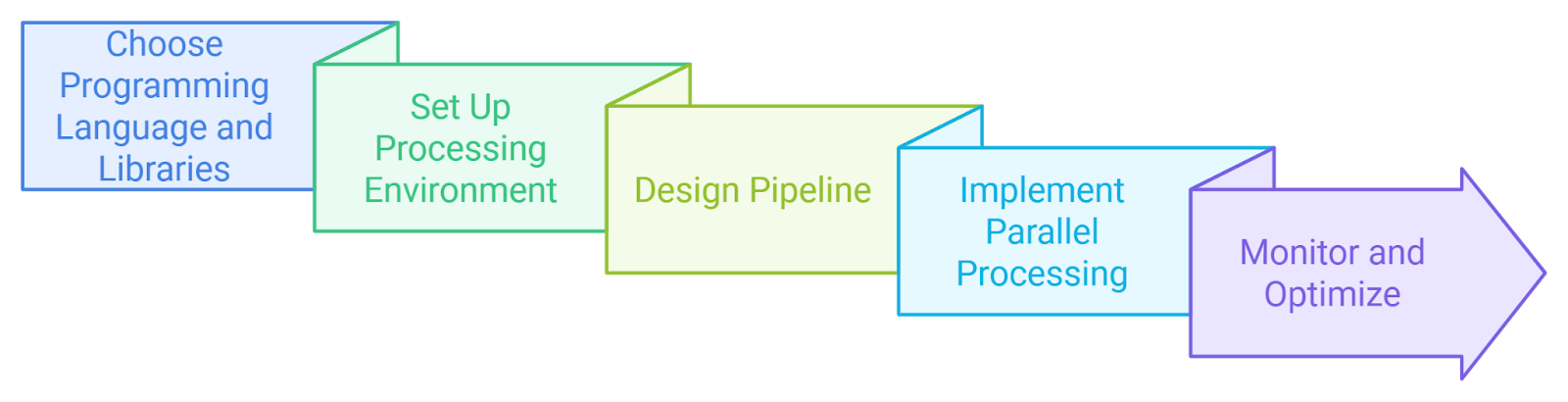

1. Introduction to Concurrency and Parallelism

Concurrency and parallelism are two fundamental concepts in programming that are often confused. Understanding the distinction between them is crucial for effective software development, especially in a systems programming language like Rust.

1.1. Understanding Concurrency vs. Parallelism

Concurrency refers to the ability of a program to manage multiple tasks at the same time. It does not necessarily mean that these tasks are being executed simultaneously; rather, they can be interleaved. This is particularly useful in scenarios where tasks are I/O-bound, such as reading from a file or waiting for network responses.

- Concurrency allows for better resource utilization.

- It can improve responsiveness in applications.

- It is often implemented using threads, async/await patterns, or event loops.

Parallelism, on the other hand, involves executing multiple tasks simultaneously, typically on multiple CPU cores. This is beneficial for CPU-bound tasks that require significant computational power.

- Parallelism can significantly reduce execution time for large computations.

- It requires careful management of shared resources to avoid race conditions.

- It is often implemented using multi-threading or distributed computing.

In Rust, both concurrency and parallelism are supported through its powerful type system and ownership model, which help prevent common pitfalls like data races and memory safety issues.

Key Differences Between Concurrency and Parallelism

- Execution:

- Concurrency is about dealing with lots of things at once (interleaving).

- Parallelism is about doing lots of things at once (simultaneously).

- Use Cases:

- Concurrency is ideal for I/O-bound tasks.

- Parallelism is suited for CPU-bound tasks.

- Implementation:

- Concurrency can be achieved with threads, async programming, or event-driven models.

- Parallelism typically requires multi-threading or distributed systems.

Rust's Approach to Concurrency and Parallelism

Rust provides several features that make it an excellent choice for concurrent and parallel programming:

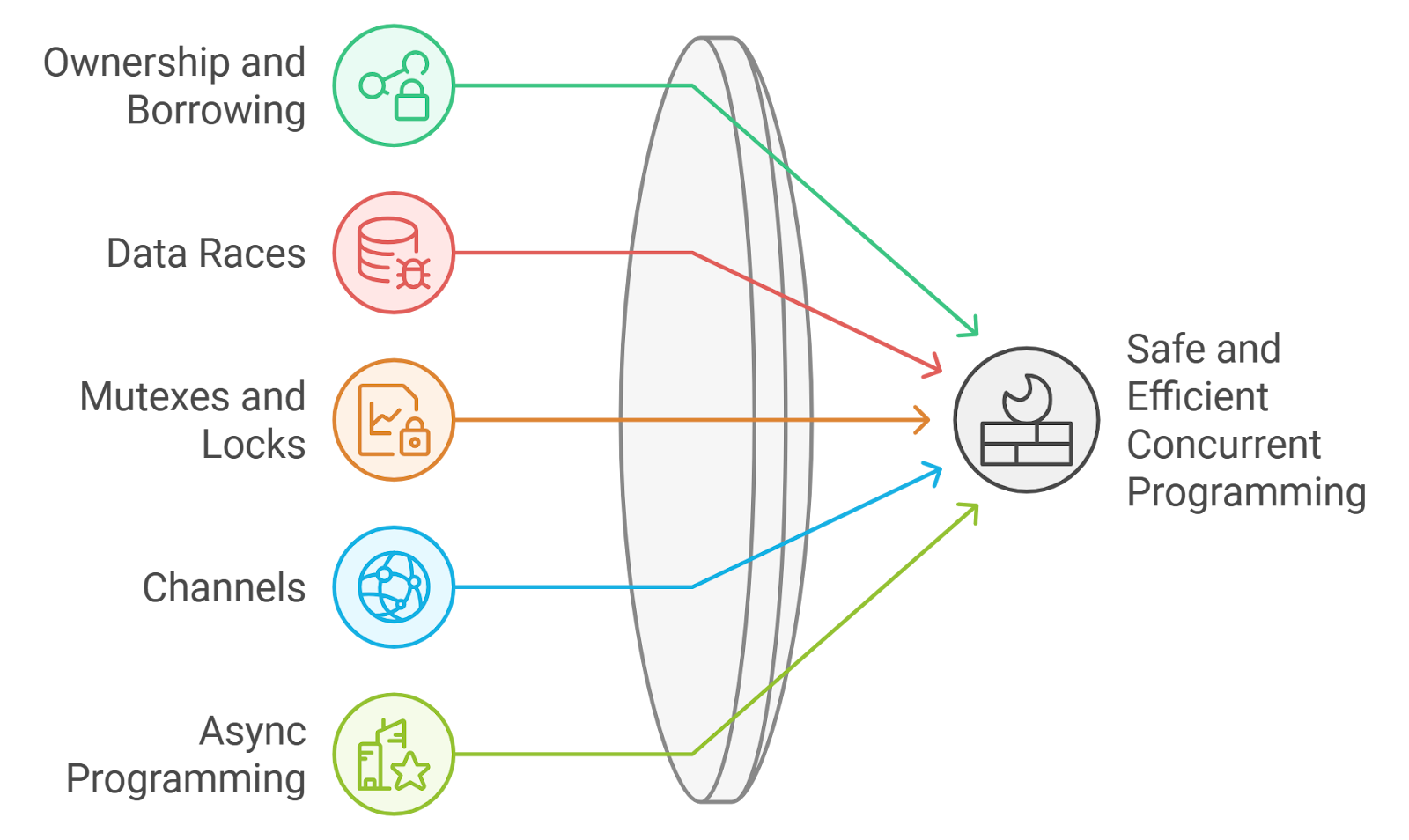

- Ownership and Borrowing: Rust's ownership model ensures that data is safely shared between threads without the risk of data races.

- Fearless Concurrency: Rust's type system enforces rules at compile time, allowing developers to write concurrent code without fear of common pitfalls.

- Concurrency Primitives: Rust offers various concurrency primitives, such as threads, channels, and async/await, making it easier to write concurrent applications.

Steps to Implement Concurrency in Rust

- Set up a new Rust project using Cargo:

```bash
cargo new my_concurrent_app
cd my_concurrent_app
```

- Add dependencies for async programming in `Cargo.toml`:

```toml
[dependencies]
tokio = { version = "1", features = ["full"] }
```

- Write an asynchronous function:

```rust
use tokio::time::{sleep, Duration};

async fn perform_task() {
    println!("Task started");
    // Non-blocking sleep: the task yields to the runtime while it waits.
    sleep(Duration::from_secs(2)).await;
    println!("Task completed");
}
```

- Create a main function to run the async tasks concurrently:

```rust
#[tokio::main]
async fn main() {
    let task1 = perform_task();
    let task2 = perform_task();
    // join! polls both futures concurrently, so the two 2-second
    // sleeps overlap and the program finishes in about 2 seconds.
    tokio::join!(task1, task2);
}
```

Steps to Implement Parallelism in Rust

- Set up a new Rust project using Cargo:

```bash
cargo new my_parallel_app
cd my_parallel_app
```

- Add dependencies for parallelism in `Cargo.toml`:

```toml
[dependencies]
rayon = "1.5"
```

- Use Rayon to perform parallel computations:

```rust
use rayon::prelude::*;

fn main() {
    let numbers: Vec<i32> = (1..=10).collect();
    // par_iter() splits the summation across Rayon's global thread pool.
    let sum: i32 = numbers.par_iter().sum();
    println!("Sum: {}", sum); // Sum: 55
}
```

By leveraging Rust's features, developers can effectively implement both concurrency and parallelism in Rust, leading to more efficient and safer applications. At Rapid Innovation, we specialize in harnessing these programming paradigms to help our clients achieve greater ROI through optimized software solutions. Partnering with us means you can expect improved performance, reduced time-to-market, and enhanced scalability for your applications. Let us guide you in navigating the complexities of Rust Blockchain development, ensuring your projects are executed efficiently and effectively.

1.2. Why Rust for Concurrent and Parallel Programming?

At Rapid Innovation, we see Rust increasingly recognized as a powerful language for concurrent and parallel programming, thanks to several key features that can significantly enhance your development processes:

- Performance: Rust is designed for high performance, comparable to C and C++. It allows developers to write low-level code while maintaining high-level abstractions, making it suitable for performance-critical applications. This means that your applications can run faster and more efficiently, leading to a greater return on investment (ROI).

- Concurrency without Data Races: In safe Rust, the ownership and borrowing rules, together with the `Send` and `Sync` traits, catch data races at compile time. If two threads could access the same data at the same time without synchronization, and at least one of them writes to it, the compiler rejects the program instead of letting it crash or corrupt data at runtime. By minimizing errors, we help you save time and resources, allowing you to focus on innovation. This guarantee is a key part of what makes concurrency in Rust so effective.

- Threads and Lightweight Tasks: Each `std::thread` in Rust's standard library maps to a native operating-system thread; Rust removed its early "green thread" runtime before version 1.0. For lightweight, runtime-managed concurrency, async runtimes such as Tokio multiplex many tasks onto a small pool of OS threads, which keeps context switching cheap and improves resource utilization. This lets your applications handle many more tasks concurrently without compromising performance, and it is essential for achieving concurrency in Rust at scale.

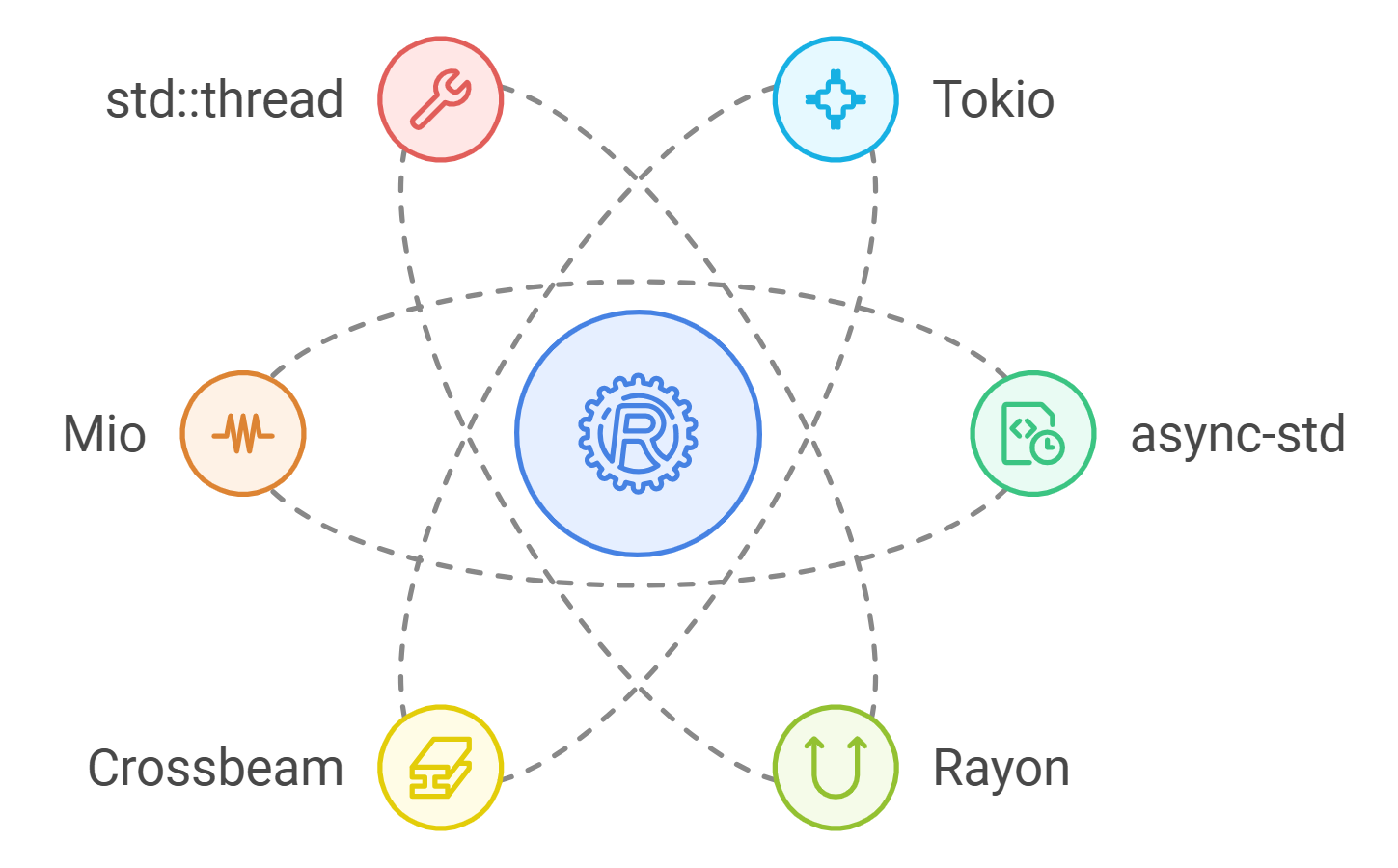

- Ecosystem Support: The Rust ecosystem includes libraries like Rayon for data parallelism and Tokio for asynchronous programming, making it easier to implement concurrent and parallel solutions. Our team can leverage these tools to create tailored solutions that meet your specific needs, including those that rely on Rust's "fearless concurrency."

- Community and Documentation: Rust has a vibrant community and extensive documentation, which helps developers learn and implement concurrent programming techniques effectively. By partnering with us, you gain access to our expertise and resources, ensuring that your projects are executed smoothly and efficiently. This community support is invaluable for anyone exploring concurrent programming in Rust.

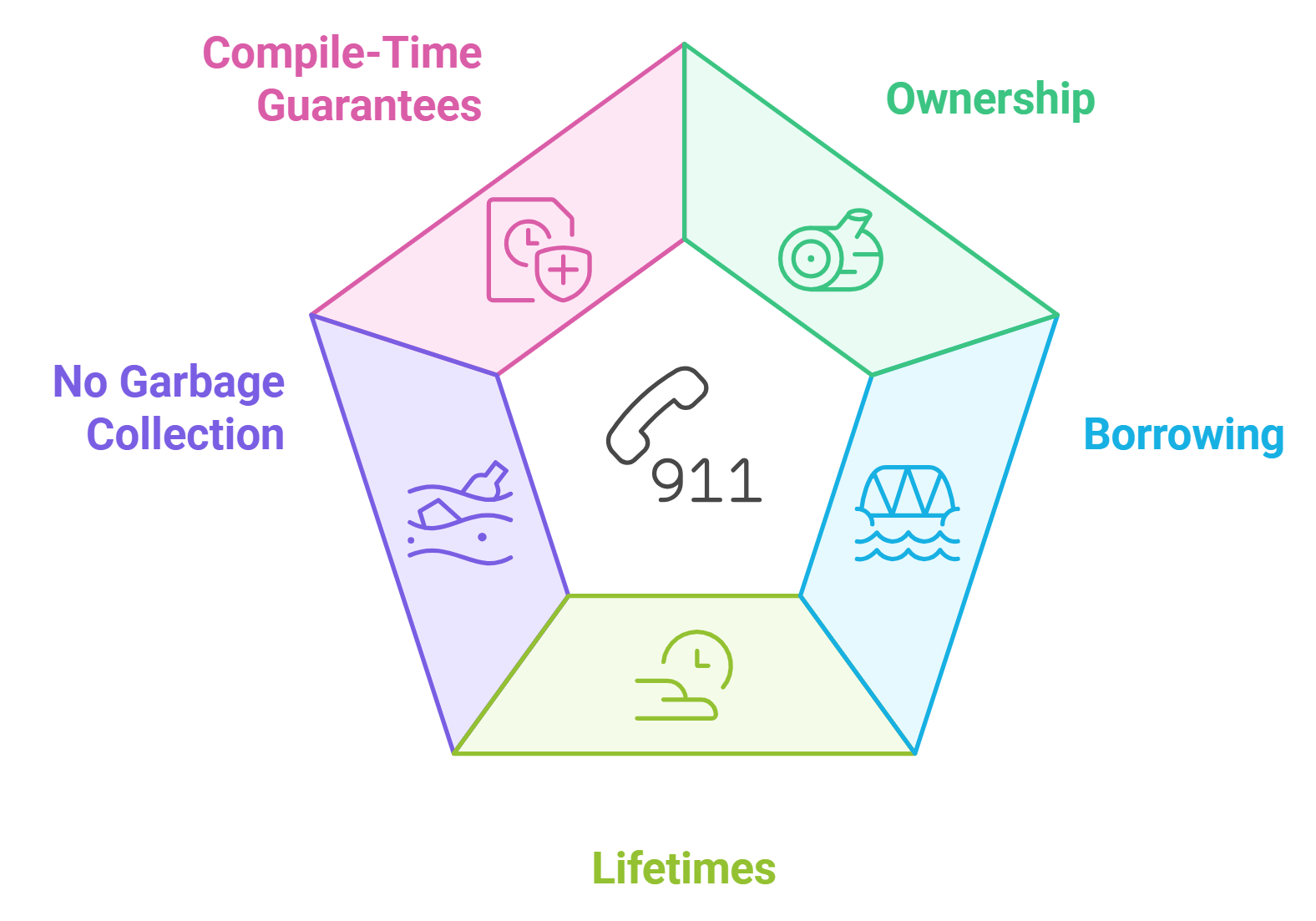

1.3. Rust's Memory Safety and Ownership Model

Rust's memory safety and ownership model are foundational to its approach to concurrent programming, providing several benefits that can enhance your project's success:

- Ownership: Every piece of data in Rust has a single owner, which is responsible for its memory. When the owner goes out of scope, the memory is automatically freed. This prevents dangling pointers and double frees and makes memory leaks much less likely (leaks are still possible, for example through reference cycles with `Rc`), ensuring that your applications run reliably.

- Borrowing: Rust allows references to data without transferring ownership. Borrowing can be mutable or immutable, but Rust enforces rules that prevent data from being modified while it is borrowed immutably, ensuring thread safety. This leads to fewer bugs and a more stable product.

- Lifetimes: Rust uses lifetimes to track how long references are valid. This helps the compiler ensure that references do not outlive the data they point to, preventing use-after-free errors. By ensuring memory safety, we help you avoid costly downtime and maintenance.

- No Garbage Collection: Unlike languages that rely on garbage collection, Rust's ownership model eliminates the need for a garbage collector, leading to predictable performance and lower latency. This can result in a more responsive user experience for your applications.

- Compile-Time Guarantees: The ownership and borrowing rules are enforced at compile time, which means that many concurrency issues are resolved before the code even runs, leading to safer and more reliable concurrent applications. This proactive approach minimizes risks and enhances your project's overall success.

2. Basics of Rust for Concurrent Programming

To get started with concurrent programming in Rust, you need to understand some basic concepts and tools that we can help you implement effectively:

- Threads: Rust provides a simple way to create threads using the `std::thread` module. You can spawn a new thread with the `thread::spawn` function, allowing your applications to perform multiple tasks simultaneously.

- Mutexes: To safely share data between threads, Rust uses `Mutex` from the `std::sync` module. A `Mutex` allows only one thread to access the data at a time, preventing data races. Our expertise ensures that your data remains secure and consistent.

- Channels: Rust provides channels for communication between threads. The `std::sync::mpsc` module allows you to create channels to send messages between threads safely. This facilitates efficient inter-thread communication, enhancing your application's performance.

- Example Code: Here’s a simple example demonstrating the use of threads and a mutex:

```rust
use std::sync::{Arc, Mutex};
use std::thread;

fn main() {
    // Arc gives each thread shared ownership; Mutex serializes access to the counter.
    let counter = Arc::new(Mutex::new(0));
    let mut handles = vec![];

    for _ in 0..10 {
        let counter = Arc::clone(&counter);
        let handle = thread::spawn(move || {
            let mut num = counter.lock().unwrap();
            *num += 1;
        });
        handles.push(handle);
    }

    for handle in handles {
        handle.join().unwrap();
    }

    println!("Result: {}", *counter.lock().unwrap()); // Result: 10
}
```

- Steps to Run the Code:

- Install Rust using `rustup`.

- Create a new Rust project with `cargo new my_project`.

- Replace the contents of `src/main.rs` with the code above.

- Run the project using `cargo run`.

By leveraging Rust's features, our team at Rapid Innovation can help you write safe and efficient concurrent programs that take full advantage of modern multi-core processors, ultimately leading to greater ROI and success for your projects. Partner with us to unlock the full potential of your development initiatives.

2.1. Ownership and Borrowing

Ownership is a core concept in Rust that ensures memory safety without needing a garbage collector. Each value in Rust has a single owner, which is responsible for cleaning up the value when it goes out of scope. This ownership model prevents data races and ensures that memory is managed efficiently.

- Key principles of ownership:

- Each value has a single owner.

- When the owner goes out of scope, the value is dropped.

- Ownership can be transferred (moved) to another variable.
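A minimal sketch of the three rules above: assigning a `String` or passing it by value moves ownership, and the value is dropped when its final owner goes out of scope. The helper names `takes_ownership` and `make_greeting` are hypothetical, chosen only for illustration.

```rust
// Takes ownership of `s`; the String is dropped when this function returns.
fn takes_ownership(s: String) -> usize {
    s.len()
}

// Builds a new String and moves ownership of it out to the caller.
fn make_greeting(name: &str) -> String {
    format!("Hello, {}", name)
}

fn main() {
    let s1 = make_greeting("Rust"); // s1 owns the String
    let s2 = s1;                    // ownership moves to s2; s1 can no longer be used
    // println!("{}", s1);          // error[E0382]: borrow of moved value: `s1`
    let len = takes_ownership(s2);  // ownership moves into the function
    // s2 is unusable here too; the String was dropped inside takes_ownership.
    println!("length was {}", len);
}
```

Uncommenting the `println!` line shows the compiler enforcing the single-owner rule.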

Borrowing allows references to a value without taking ownership. This is crucial for allowing multiple parts of a program to access data without duplicating it.

- Types of borrowing:

- Immutable borrowing: Multiple references can be created, but none can modify the value.

- Mutable borrowing: Only one mutable reference can exist at a time, preventing data races.

Example of ownership and borrowing:

```rust
fn main() {
    let mut s1 = String::from("Hello"); // `mut` so the commented-out mutable borrow is meaningful
    let s2 = &s1; // Immutable borrow
    // let s3 = &mut s1; // Error: cannot borrow `s1` as mutable because it is also borrowed as immutable
    println!("{}", s2); // s2 is used here, so the immutable borrow is still active above
}
```

2.2. Lifetimes

Lifetimes are a way of expressing the scope of references in Rust. They ensure that references are valid as long as they are used, preventing dangling references and ensuring memory safety.

- Key concepts of lifetimes:

- Every reference has a lifetime, which is the scope for which that reference is valid.

- The Rust compiler uses lifetimes to check that references do not outlive the data they point to.

Lifetimes are often annotated in function signatures to clarify how long references are valid. The Rust compiler can usually infer lifetimes, but explicit annotations may be necessary in complex scenarios.

Example of lifetimes:

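```rust
// The returned reference is valid only as long as both inputs are valid.
fn longest<'a>(s1: &'a str, s2: &'a str) -> &'a str {
    if s1.len() > s2.len() {
        s1
    } else {
        s2
    }
}

fn main() {
    let a = String::from("concurrency");
    let b = String::from("rust");
    println!("{}", longest(&a, &b)); // concurrency
}
```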

In this example, the function longest takes two string slices with the same lifetime 'a and returns a reference with the same lifetime.

2.3. Smart Pointers (Box, Rc, Arc)

Smart pointers are data structures that provide more functionality than regular pointers. They manage memory automatically and help with ownership and borrowing.

- Box:

- A smart pointer that allocates memory on the heap.

- Provides ownership of the data it points to.

- Useful for large data structures or recursive types.

Example of Box:

```rust
fn main() {
    let b = Box::new(5); // the i32 lives on the heap; b owns it
    println!("{}", b);
} // b goes out of scope here and the heap allocation is freed
```
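The bullets above mention recursive types. A cons-list sketch shows why `Box` is needed there: without it, `List` would contain itself directly and have infinite size, so the compiler would reject it. The `List` type and `sum` function are illustrative names, not part of any library.

```rust
// A recursive list: each Cons cell owns the rest of the list through a Box,
// which gives the enum a known, finite size (one pointer) at compile time.
enum List {
    Cons(i32, Box<List>),
    Nil,
}

use List::{Cons, Nil};

// Walk the list recursively and add up its values.
fn sum(list: &List) -> i32 {
    match list {
        Cons(value, rest) => value + sum(rest),
        Nil => 0,
    }
}

fn main() {
    let list = Cons(1, Box::new(Cons(2, Box::new(Cons(3, Box::new(Nil))))));
    println!("sum = {}", sum(&list));
}
```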

- Rc (Reference Counted):

- A smart pointer that enables multiple ownership of data.

- Keeps track of the number of references to the data.

- When the last reference goes out of scope, the data is dropped.

Example of Rc:

```rust
use std::rc::Rc;

fn main() {
    let a = Rc::new(5);
    let b = Rc::clone(&a); // increments the reference count; does not copy the data
    println!("{}", b);
}
```
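To make the reference counting described above visible, the sketch below records `Rc::strong_count` at each step. The helper name `observe_counts` is hypothetical; `Rc::clone` and `Rc::strong_count` are standard library functions.

```rust
use std::rc::Rc;

// Record the strong reference count after each step so the changes are visible.
fn observe_counts() -> Vec<usize> {
    let mut counts = Vec::new();
    let a = Rc::new(String::from("shared"));
    counts.push(Rc::strong_count(&a)); // only `a` owns the value
    let b = Rc::clone(&a); // bumps the count; the String itself is not copied
    counts.push(Rc::strong_count(&a));
    {
        let _c = Rc::clone(&b);
        counts.push(Rc::strong_count(&a)); // a, b, and _c
    } // _c goes out of scope here and the count drops back
    counts.push(Rc::strong_count(&a));
    counts
}

fn main() {
    println!("{:?}", observe_counts()); // [1, 2, 3, 2]
}
```

The `String` is freed only when the count reaches zero, which here happens when `a` and `b` go out of scope at the end of `observe_counts`.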

- Arc (Atomic Reference Counted):

- Similar to Rc but thread-safe.

- Allows safe sharing of data across threads.

- Uses atomic operations to manage reference counts.

Example of Arc:

```rust
use std::sync::Arc;
use std::thread;

fn main() {
    let a = Arc::new(5);
    let a_clone = Arc::clone(&a); // atomic increment; safe to move to another thread

    thread::spawn(move || {
        println!("{}", a_clone);
    })
    .join()
    .unwrap();
}
```

Smart pointers in Rust provide powerful tools for managing memory and ownership, making it easier to write safe and efficient code. Rust's ownership and borrowing rules are fundamental to understanding how the language achieves memory safety and safe concurrency without a garbage collector.

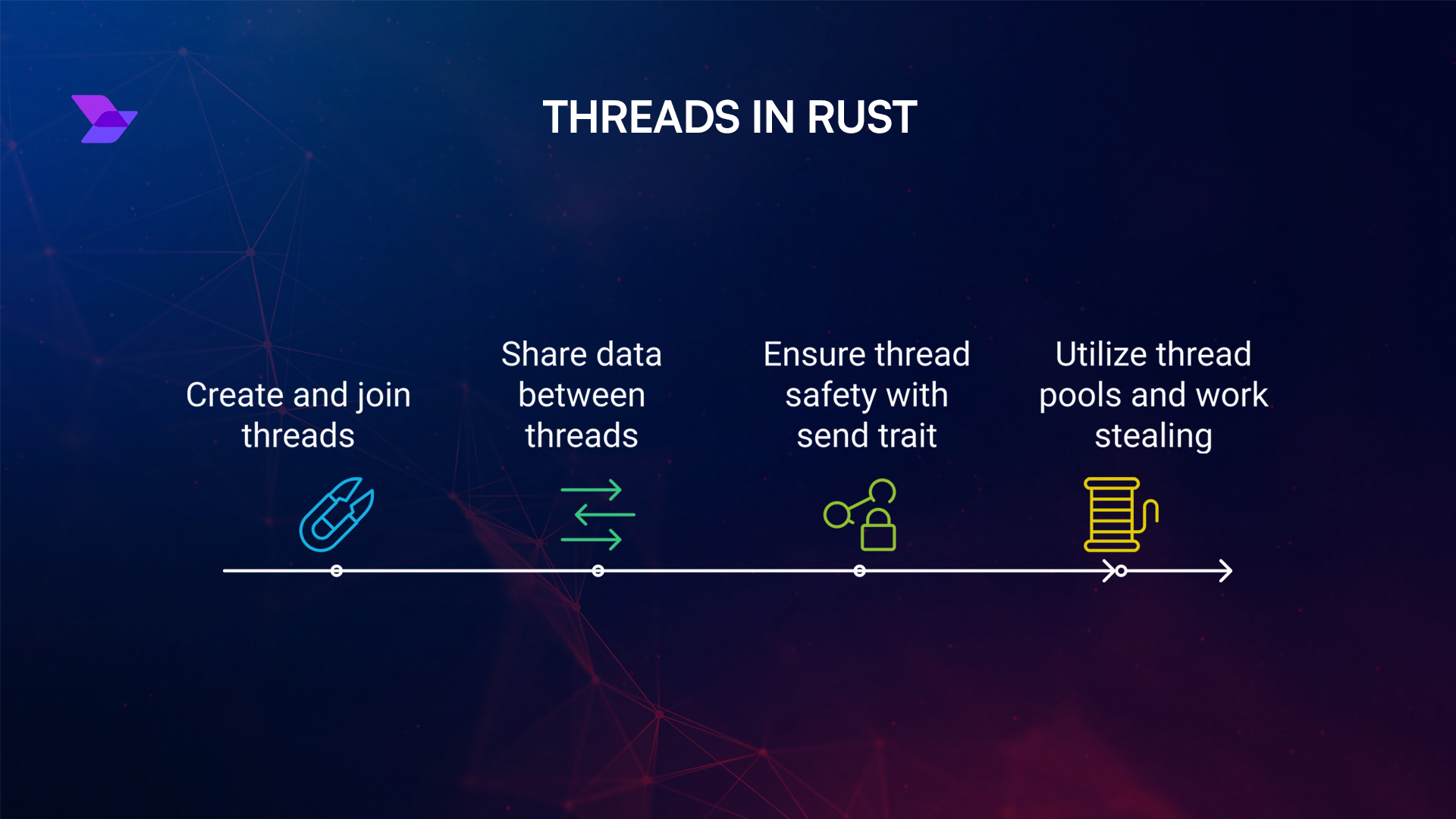

3. Threads in Rust

At Rapid Innovation, we understand that leveraging the right technology can significantly enhance your operational efficiency. Rust provides a powerful and safe way to handle concurrency through its threading model. Threads allow multiple tasks to run simultaneously, making efficient use of system resources, and Rust's ownership model prevents data races, promoting safe concurrent programming.

3.1. Creating and Joining Threads

Creating and managing threads in Rust is straightforward, thanks to the standard library's std::thread module. Here’s how you can create and join threads:

- Creating a Thread: Use the `thread::spawn` function to create a new thread. This function takes a closure as an argument, which contains the code to be executed in the new thread.

- Joining a Thread: After spawning a thread, you can call the `join` method on the thread handle to wait for the thread to finish execution. This ensures that the main thread does not exit before the spawned thread completes.

Example code to create and join threads:

```rust
use std::thread;

fn main() {
    let handle = thread::spawn(|| {
        for i in 1..5 {
            println!("Thread: {}", i);
        }
    });

    // Main thread work (its output may interleave with the spawned thread's)
    for i in 1..3 {
        println!("Main: {}", i);
    }

    // Wait for the thread to finish
    handle.join().unwrap();
}
```

- Key Points:

- The `thread::spawn` function returns a `JoinHandle`, which can be used to join the thread.

- The `join` method blocks the calling thread until the thread represented by the handle terminates.

- If the spawned thread panics, the `join` method will return an error.
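The key points above can be checked with a short sketch: a `JoinHandle<T>` hands the thread's return value back through `join`, and a panic in the spawned thread surfaces as an `Err`. The function names are hypothetical; note that the panicking thread still prints its panic message to stderr.

```rust
use std::thread;

// Spawn a thread that computes a value; join() returns that value in Ok(..).
fn sum_in_thread(limit: u64) -> u64 {
    let handle = thread::spawn(move || (1..=limit).sum::<u64>());
    handle.join().unwrap()
}

// Spawn a thread that panics; join() reports the panic as an Err.
fn panicking_thread_is_err() -> bool {
    let handle = thread::spawn(|| {
        panic!("worker failed");
    });
    handle.join().is_err()
}

fn main() {
    println!("sum = {}", sum_in_thread(100)); // sum = 5050
    println!("panic reported: {}", panicking_thread_is_err()); // panic reported: true
}
```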

3.2. Sharing Data Between Threads

When working with threads, sharing data safely is crucial to avoid data races. Rust provides several mechanisms to share data between threads, primarily through the use of Arc (Atomic Reference Counted) and Mutex (Mutual Exclusion).

- Arc: This is a thread-safe reference-counting pointer that allows multiple threads to own the same data. It ensures that the data is deallocated only when all references are dropped.

- Mutex: This is a synchronization primitive that allows only one thread to access the data at a time. It provides a lock mechanism to ensure that data is accessed safely.

Example code to share data between threads using Arc and Mutex:

```rust
use std::sync::{Arc, Mutex};
use std::thread;

fn main() {
    let counter = Arc::new(Mutex::new(0));
    let mut handles = vec![];

    for _ in 0..10 {
        let counter = Arc::clone(&counter);
        let handle = thread::spawn(move || {
            let mut num = counter.lock().unwrap();
            *num += 1;
        });
        handles.push(handle);
    }

    for handle in handles {
        handle.join().unwrap();
    }

    println!("Result: {}", *counter.lock().unwrap());
}
```

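- Key Points:

- Use `Arc` to share ownership of data across threads.

- Use `Mutex` to ensure that only one thread can access the data at a time.

- Handle the result of `lock()` deliberately: it returns an `Err` if another thread panicked while holding the lock (the mutex is then "poisoned"). Calling `unwrap()`, as the example above does, turns a poisoned lock into a panic in the current thread as well.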
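When a poisoned lock should not bring the whole program down, the `PoisonError` can be unwrapped into the guard and the data used anyway. This is a minimal sketch, assuming the default `panic = "unwind"` setting (poisoning does not occur with `panic = "abort"`); `recover_from_poison` is a hypothetical name, and whether continuing with the data is safe depends on your own invariants.

```rust
use std::sync::{Arc, Mutex};
use std::thread;

// Poison a mutex on purpose, then recover the data instead of panicking.
fn recover_from_poison() -> i32 {
    let data = Arc::new(Mutex::new(1));

    let poisoner = Arc::clone(&data);
    let _ = thread::spawn(move || {
        let _guard = poisoner.lock().unwrap();
        panic!("panicking while holding the lock"); // this poisons the mutex
    })
    .join();

    // lock() now returns Err(PoisonError); into_inner() still yields the guard.
    let mut guard = match data.lock() {
        Ok(guard) => guard,
        Err(poisoned) => poisoned.into_inner(),
    };
    *guard += 1;
    *guard
}

fn main() {
    println!("value after recovery: {}", recover_from_poison()); // value after recovery: 2
}
```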

By leveraging Rust's threading capabilities, developers can create efficient and safe concurrent applications. The combination of Arc and Mutex allows for safe data sharing, while the straightforward thread creation and joining process simplifies concurrent programming. At Rapid Innovation, we are committed to helping you harness these powerful features to achieve greater ROI and operational excellence. Partnering with us means you can expect enhanced productivity, reduced time-to-market, and a robust framework for your development needs.

3.3. Thread Safety and the Send Trait

Thread safety is a crucial concept in concurrent programming, ensuring that shared data is accessed and modified safely by multiple threads. In Rust, the Send trait plays a vital role in achieving thread safety.

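- The `Send` trait indicates that ownership of a type can be transferred across thread boundaries.

- Types that implement `Send` can be safely sent to another thread, allowing for concurrent execution without data races.

- `Send` is an auto trait: the compiler implements it automatically for any type whose components are all `Send`. This covers primitive types such as integers and booleans, as well as types like `String` and `Vec<T>` (when `T` is `Send`).

- Some types, like `Rc<T>`, do not implement `Send` because their reference count is updated without atomic operations, so using them from several threads would be unsafe. `Arc<T>` (atomic reference counting) is used for shared ownership across threads instead.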

To ensure thread safety in your Rust applications, consider the following:

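- Use `Arc<T>` for shared ownership of data across threads.

- Leverage synchronization primitives like `Mutex<T>` or `RwLock<T>` to manage access to shared data.

- Rely on the compiler to check `Send`: `thread::spawn` requires its closure and the closure's return value to be `Send`, so passing a non-`Send` value such as an `Rc<T>` to a thread is rejected at compile time.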

The `Send` trait is therefore central to thread safety in Rust: safety comes from careful design combined with types whose `Send` implementations accurately reflect whether they can cross thread boundaries.
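Because `Send` is checked at compile time, a common trick is a generic function with a `T: Send` bound that does nothing at runtime. The sketch below uses that trick alongside an ordinary `thread::spawn`; `assert_send` and `sum_on_other_thread` are hypothetical helper names.

```rust
use std::sync::Arc;
use std::thread;

// Compiles only when T implements Send; the empty body is intentional,
// because the trait bound itself is the check.
fn assert_send<T: Send>() {}

// Moves an Arc into a new thread; accepted because Arc<Vec<i32>> is Send.
fn sum_on_other_thread(values: Vec<i32>) -> i32 {
    let shared = Arc::new(values);
    let worker_copy = Arc::clone(&shared);
    thread::spawn(move || worker_copy.iter().sum::<i32>())
        .join()
        .unwrap()
}

fn main() {
    assert_send::<i32>();
    assert_send::<String>();
    assert_send::<Arc<Vec<i32>>>();
    // assert_send::<std::rc::Rc<i32>>(); // error[E0277]: `Rc<i32>` cannot be sent between threads safely

    println!("sum = {}", sum_on_other_thread(vec![1, 2, 3]));
}
```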

3.4. Thread Pools and Work Stealing

Thread pools are a powerful concurrency model that allows for efficient management of multiple threads. They help reduce the overhead of thread creation and destruction by reusing a fixed number of threads to execute tasks.

- A thread pool maintains a collection of worker threads that wait for tasks to execute.

- When a task is submitted, it is assigned to an available worker thread, which processes it and then returns to the pool.

- Work stealing is a technique used in thread pools to balance the workload among threads. If a worker thread finishes its tasks and becomes idle, it can "steal" tasks from other busy threads.
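The worker-pool mechanics described above can be sketched with the standard library alone. This is a simplified illustration, not how Rayon or Tokio are built: all workers pull from one shared FIFO queue (a channel behind a `Mutex`), so there is no work stealing, which those libraries add with per-worker queues. `ThreadPool`, `execute`, and `sum_squares_with_pool` are hypothetical names.

```rust
use std::sync::mpsc::{self, Receiver, Sender};
use std::sync::{Arc, Mutex};
use std::thread::{self, JoinHandle};

// A job is any closure that can run once on another thread.
type Job = Box<dyn FnOnce() + Send + 'static>;

// Hypothetical minimal pool: fixed workers, one shared queue, no work stealing.
struct ThreadPool {
    sender: Option<Sender<Job>>,
    workers: Vec<JoinHandle<()>>,
}

impl ThreadPool {
    fn new(size: usize) -> Self {
        let (sender, receiver) = mpsc::channel::<Job>();
        let receiver: Arc<Mutex<Receiver<Job>>> = Arc::new(Mutex::new(receiver));
        let workers = (0..size)
            .map(|_| {
                let receiver = Arc::clone(&receiver);
                thread::spawn(move || loop {
                    // The lock is held only while taking the next job, not while running it.
                    let next = receiver.lock().unwrap().recv();
                    match next {
                        Ok(job) => job(),
                        Err(_) => break, // channel closed: the pool is shutting down
                    }
                })
            })
            .collect();
        ThreadPool { sender: Some(sender), workers }
    }

    fn execute<F: FnOnce() + Send + 'static>(&self, f: F) {
        self.sender.as_ref().unwrap().send(Box::new(f)).unwrap();
    }
}

impl Drop for ThreadPool {
    fn drop(&mut self) {
        drop(self.sender.take()); // closing the channel lets idle workers exit
        for worker in self.workers.drain(..) {
            worker.join().unwrap();
        }
    }
}

// Usage: compute 1^2 + 2^2 + ... + n^2 with each square as a separate job.
fn sum_squares_with_pool(n: u64) -> u64 {
    let (tx, rx) = mpsc::channel();
    {
        let pool = ThreadPool::new(4);
        for i in 1..=n {
            let tx = tx.clone();
            pool.execute(move || tx.send(i * i).unwrap());
        }
    } // dropping the pool waits for every queued job to finish
    drop(tx);
    rx.iter().sum()
}

fn main() {
    println!("{}", sum_squares_with_pool(10)); // 385
}
```

Reusing four threads for all jobs is the overhead saving the text describes; a work-stealing pool would additionally let an idle worker take jobs queued for a busy one.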

Benefits of using thread pools and work stealing include:

- Improved performance by reducing the overhead of thread management.

- Better resource utilization, as threads are reused rather than created and destroyed frequently.

- Enhanced responsiveness in applications, as tasks can be executed concurrently.

To implement a thread pool with work stealing in Rust, follow these steps:

- Use a library like `rayon` or `tokio` that provides built-in support for thread pools and work stealing.

- Define the tasks you want to execute concurrently.

- Submit tasks to the thread pool for execution.

Example code snippet using rayon:

```rust
use rayon::prelude::*;

fn main() {
    let data = vec![1, 2, 3, 4, 5];

    // Rayon splits the work across its work-stealing thread pool;
    // collect() preserves the original element order.
    let results: Vec<_> = data.par_iter()
        .map(|&x| x * 2)
        .collect();

    println!("{:?}", results); // [2, 4, 6, 8, 10]
}
```

4. Synchronization Primitives

Synchronization primitives are essential tools in concurrent programming, allowing threads to coordinate their actions and manage access to shared resources. In Rust, several synchronization primitives are available:

- `Mutex<T>`: A mutual exclusion primitive that provides exclusive access to the data it wraps. Only one thread can access the data at a time, preventing data races. To use a `Mutex`, wrap your data in it and lock it when accessing:

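```rust
use std::sync::{Arc, Mutex};
use std::thread;

fn main() {
    let data = Arc::new(Mutex::new(0));

    let data_clone = Arc::clone(&data);
    let handle = thread::spawn(move || {
        // The guard unlocks the mutex automatically when it goes out of scope.
        let mut num = data_clone.lock().unwrap();
        *num += 1;
    });

    // Wait for the thread so its increment is visible before reading.
    handle.join().unwrap();
    println!("{}", *data.lock().unwrap()); // 1
}
```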

- `RwLock<T>`: A read-write lock that allows multiple readers or one writer at a time. This is useful when read operations are more frequent than write operations.

- `Condvar`: A condition variable that allows threads to wait for certain conditions to be met before proceeding. It is often used in conjunction with `Mutex`.

When using synchronization primitives, consider the following:

- Minimize the scope of locks to reduce contention.

- Avoid holding locks while performing long-running operations.

- Be cautious of deadlocks by ensuring a consistent locking order.
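The last two guidelines can be illustrated together. In this sketch (the `transfer` and `run_transfers` names are hypothetical), threads move money in both directions between two accounts; because every transfer locks the lower-indexed mutex first, two opposite transfers can never each hold one lock while waiting for the other, and both guards are released as soon as the short update finishes.

```rust
use std::sync::{Arc, Mutex};
use std::thread;

// Move `amount` between two accounts, always locking in index order.
fn transfer(accounts: &[Mutex<i64>; 2], from: usize, to: usize, amount: i64) {
    if from == to {
        return; // locking the same Mutex twice in one thread would deadlock or panic
    }
    let (first, second) = if from < to { (from, to) } else { (to, from) };
    let mut a = accounts[first].lock().unwrap(); // lower index first, every time
    let mut b = accounts[second].lock().unwrap();
    if from < to {
        *a -= amount;
        *b += amount;
    } else {
        *b -= amount;
        *a += amount;
    }
    // Both guards drop here, so the locks are held only for this short update.
}

fn run_transfers() -> (i64, i64) {
    let accounts = Arc::new([Mutex::new(100), Mutex::new(100)]);
    let handles: Vec<_> = (0..4)
        .map(|i| {
            let accounts = Arc::clone(&accounts);
            // Even threads move 0 -> 1, odd threads move 1 -> 0.
            thread::spawn(move || {
                for _ in 0..1000 {
                    if i % 2 == 0 {
                        transfer(&accounts, 0, 1, 1);
                    } else {
                        transfer(&accounts, 1, 0, 1);
                    }
                }
            })
        })
        .collect();
    for handle in handles {
        handle.join().unwrap();
    }
    let a = *accounts[0].lock().unwrap();
    let b = *accounts[1].lock().unwrap();
    (a, b)
}

fn main() {
    println!("{:?}", run_transfers()); // (100, 100)
}
```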

4.1. Mutex and RwLock

Mutex (Mutual Exclusion) and RwLock (Read-Write Lock) are synchronization primitives used in concurrent programming to manage access to shared resources.

- Mutex:

- A mutex allows only one thread to access a resource at a time.

- It is simple and effective for protecting shared data.

- When a thread locks a mutex, other threads attempting to lock it will block until the mutex is unlocked.

- RwLock:

- An RwLock allows multiple readers or a single writer at any given time.

- This is beneficial when read operations are more frequent than write operations.

- It improves performance by allowing concurrent reads while still ensuring exclusive access for writes.

Example of using Mutex in Rust:

```rust
use std::sync::{Arc, Mutex};
use std::thread;

fn main() {
    let counter = Arc::new(Mutex::new(0));
    let mut handles = vec![];

    for _ in 0..10 {
        let counter = Arc::clone(&counter);
        let handle = thread::spawn(move || {
            // Blocks until no other thread holds the lock.
            let mut num = counter.lock().unwrap();
            *num += 1;
        });
        handles.push(handle);
    }

    for handle in handles {
        handle.join().unwrap();
    }

    println!("Result: {}", *counter.lock().unwrap());
}
```

Example of using RwLock in Rust:

```rust
use std::sync::{Arc, RwLock};
use std::thread;

fn main() {
    let data = Arc::new(RwLock::new(0));
    let mut handles = vec![];

    for _ in 0..10 {
        let data = Arc::clone(&data);
        let handle = thread::spawn(move || {
            // write() takes the lock exclusively, like Mutex::lock().
            let mut num = data.write().unwrap();
            *num += 1;
        });
        handles.push(handle);
    }

    for handle in handles {
        handle.join().unwrap();
    }

    // read() takes a shared lock; other readers could hold one at the same time.
    let read_num = data.read().unwrap();
    println!("Result: {}", *read_num);
}
```
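The example above only writes, so it does not show the read-heavy case where `RwLock` helps. The sketch below, with the hypothetical name `read_then_write`, spawns several readers that each take a shared `read()` guard, then takes an exclusive `write()` guard once they are done.

```rust
use std::sync::{Arc, RwLock};
use std::thread;

// Readers share access; the writer gets exclusive access afterwards.
// Returns what each reader saw and the length after the write.
fn read_then_write(readers: usize) -> (Vec<usize>, usize) {
    let config = Arc::new(RwLock::new(vec![1, 2, 3]));

    let handles: Vec<_> = (0..readers)
        .map(|_| {
            let config = Arc::clone(&config);
            thread::spawn(move || {
                // Many read guards may be held at the same time.
                let guard = config.read().unwrap();
                guard.len()
            })
        })
        .collect();
    let seen: Vec<usize> = handles.into_iter().map(|h| h.join().unwrap()).collect();

    // write() waits until no read guards remain, then grants exclusive access.
    let final_len = {
        let mut guard = config.write().unwrap();
        guard.push(4);
        guard.len()
    };
    (seen, final_len)
}

fn main() {
    println!("{:?}", read_then_write(4)); // ([3, 3, 3, 3], 4)
}
```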

4.2. Atomic Types

Atomic types are special data types that provide lock-free synchronization. They allow safe concurrent access to shared data without the need for mutexes.

- Characteristics of Atomic Types:

- Operations on atomic types are guaranteed to be atomic, meaning they complete in a single step relative to other threads.

- They are typically used for counters, flags, and other simple data types.

- Common Atomic Types:

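- `AtomicBool`: Represents a boolean value.

- `AtomicIsize` and `AtomicUsize`: Represent pointer-sized signed and unsigned integers, respectively. Fixed-width variants such as `AtomicI32` and `AtomicU64` are also available on most platforms.

- `AtomicPtr`: Represents a pointer.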

Example of using Atomic Types in Rust:

```rust
use std::sync::atomic::{AtomicUsize, Ordering};
use std::sync::Arc;
use std::thread;

fn main() {
    // Arc gives every thread an owned handle to the same counter; a plain local
    // borrowed by the closure would not satisfy thread::spawn's 'static bound.
    let counter = Arc::new(AtomicUsize::new(0));
    let mut handles = vec![];

    for _ in 0..10 {
        let counter = Arc::clone(&counter);
        let handle = thread::spawn(move || {
            counter.fetch_add(1, Ordering::SeqCst);
        });
        handles.push(handle);
    }

    for handle in handles {
        handle.join().unwrap();
    }

    println!("Result: {}", counter.load(Ordering::SeqCst)); // Result: 10
}
```
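Atomics are also mentioned above for flags. A minimal sketch: several threads race to claim a one-time task, and `compare_exchange` flips an `AtomicBool` from `false` to `true` for exactly one of them. The function name `count_winners` is hypothetical.

```rust
use std::sync::atomic::{AtomicBool, Ordering};
use std::sync::Arc;
use std::thread;

// Returns how many threads succeeded in claiming the flag (always 1 when threads > 0).
fn count_winners(threads: usize) -> usize {
    let claimed = Arc::new(AtomicBool::new(false));
    let handles: Vec<_> = (0..threads)
        .map(|_| {
            let claimed = Arc::clone(&claimed);
            thread::spawn(move || {
                // Succeeds only if the flag is still false, setting it to true atomically.
                claimed
                    .compare_exchange(false, true, Ordering::AcqRel, Ordering::Acquire)
                    .is_ok()
            })
        })
        .collect();
    handles
        .into_iter()
        .map(|h| h.join().unwrap())
        .filter(|&won| won)
        .count()
}

fn main() {
    println!("winners: {}", count_winners(8)); // winners: 1
}
```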

4.3. Barriers and Semaphores

Barriers and semaphores are synchronization mechanisms that help coordinate the execution of threads in concurrent programs.

- Barriers:

- A barrier makes a fixed number of threads wait until all of them have reached the same point before any of them proceeds.

- It is useful for synchronizing phases of computation among threads.

- Semaphores:

- A semaphore is a signaling mechanism that controls access to a shared resource.

- It maintains a count that represents the number of available resources.

- Threads can acquire or release the semaphore, allowing for controlled access.
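The barrier behavior described above is available in the standard library as `std::sync::Barrier`. In this sketch (the `run_phases` name is hypothetical), each worker logs "phase 1", waits at the barrier, then logs "phase 2". Because no thread passes `wait()` until all of them have arrived, every "phase 1" entry precedes every "phase 2" entry.

```rust
use std::sync::{Arc, Barrier, Mutex};
use std::thread;

// Each worker finishes phase 1, waits at the barrier, then starts phase 2.
fn run_phases(workers: usize) -> Vec<&'static str> {
    let barrier = Arc::new(Barrier::new(workers));
    let log = Arc::new(Mutex::new(Vec::new()));
    let handles: Vec<_> = (0..workers)
        .map(|_| {
            let barrier = Arc::clone(&barrier);
            let log = Arc::clone(&log);
            thread::spawn(move || {
                log.lock().unwrap().push("phase 1");
                barrier.wait(); // blocks until all `workers` threads reach this point
                log.lock().unwrap().push("phase 2");
            })
        })
        .collect();
    for handle in handles {
        handle.join().unwrap();
    }
    let entries = log.lock().unwrap().clone();
    entries
}

fn main() {
    println!("{:?}", run_phases(3));
}
```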

Example of using a Semaphore in Rust: the standard library does not include a semaphore type (there is no `std::sync::Semaphore`), so this example uses `tokio::sync::Semaphore` from the Tokio dependency added earlier, with async tasks in place of OS threads:

```rust
use std::sync::Arc;
use tokio::sync::Semaphore;

#[tokio::main]
async fn main() {
    let semaphore = Arc::new(Semaphore::new(2)); // Allow 2 concurrent tasks
    let mut handles = vec![];

    for i in 0..5 {
        let semaphore = Arc::clone(&semaphore);
        let handle = tokio::spawn(async move {
            // Waits until a permit is free; the permit is released when dropped.
            let _permit = semaphore.acquire().await.unwrap();
            // Critical section: at most 2 tasks run here at the same time
            println!("task {} holds a permit", i);
        });
        handles.push(handle);
    }

    for handle in handles {
        handle.await.unwrap();
    }
}
```

These synchronization primitives are essential for ensuring data integrity and preventing race conditions in concurrent programs. By leveraging these tools, Rapid Innovation can help clients optimize their applications, ensuring efficient resource management and improved performance. Our expertise in AI and Blockchain development allows us to implement these advanced programming techniques, ultimately leading to greater ROI for our clients. When you partner with us, you can expect enhanced operational efficiency, reduced time-to-market, and a robust framework for your projects, all tailored to meet your specific business goals.

4.4. Condition Variables

Condition variables are synchronization primitives that enable threads to wait for certain conditions to be true before proceeding. They are particularly useful in scenarios where threads need to wait for resources to become available or for specific states to be reached.

- Purpose: Condition variables allow threads to sleep until a particular condition is met, which helps in avoiding busy-waiting and reduces CPU usage.

- Usage: Typically used in conjunction with mutexes to protect shared data. A thread will lock a mutex, check a condition, and if the condition is not met, it will wait on the condition variable.

- Signaling: When the condition changes (e.g., a resource becomes available), another thread can signal the condition variable, waking up one or more waiting threads.

Example of using condition variables in C++:

```cpp
#include <iostream>
#include <thread>
#include <mutex>
#include <condition_variable>
#include <chrono>

std::mutex mtx;
std::condition_variable cv;
bool ready = false;

void worker() {
    std::unique_lock<std::mutex> lock(mtx);
    // The predicate overload re-checks `ready`, which also handles spurious wakeups.
    cv.wait(lock, [] { return ready; });
    std::cout << "Worker thread proceeding\n";
}

void signalWorker() {
    std::lock_guard<std::mutex> lock(mtx);
    ready = true;
    cv.notify_one();
}

int main() {
    std::thread t(worker);
    std::this_thread::sleep_for(std::chrono::seconds(1));
    signalWorker();
    t.join();
    return 0;
}
```

- Key Functions:

- `wait()`: Blocks the thread until notified.

- `notify_one()`: Wakes up one waiting thread.

- `notify_all()`: Wakes up all waiting threads.
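Since the rest of this guide uses Rust, here is a sketch of the same worker/signal pattern with `std::sync::Condvar`. The flag lives inside the `Mutex`, and `Condvar::wait_while` plays the role of C++'s predicate overload of `cv.wait`, re-checking the condition after every wakeup. The function name `wait_for_signal` is hypothetical.

```rust
use std::sync::{Arc, Condvar, Mutex};
use std::thread;
use std::time::Duration;

// Returns the flag value the worker observed once it was allowed to proceed.
fn wait_for_signal() -> bool {
    let pair = Arc::new((Mutex::new(false), Condvar::new()));
    let worker_pair = Arc::clone(&pair);

    let worker = thread::spawn(move || {
        let (lock, cvar) = &*worker_pair;
        let ready = lock.lock().unwrap();
        // Sleeps while the closure returns true; also guards against spurious wakeups.
        let ready = cvar.wait_while(ready, |ready| !*ready).unwrap();
        *ready
    });

    thread::sleep(Duration::from_millis(100));
    {
        let (lock, cvar) = &*pair;
        *lock.lock().unwrap() = true; // change the condition under the lock
        cvar.notify_one(); // then wake the waiting worker
    }
    worker.join().unwrap()
}

fn main() {
    println!("worker proceeded: {}", wait_for_signal()); // worker proceeded: true
}
```

If the signal arrives before the worker starts waiting, `wait_while` sees that the flag is already `true` and returns immediately, so no wakeup is lost.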

5. Message Passing

Message passing is a method of communication between threads or processes where data is sent as messages. This approach is often used in concurrent programming to avoid shared state and reduce the complexity of synchronization.

- Advantages:

- Decoupling: Threads or processes do not need to share memory, which reduces the risk of race conditions.

- Scalability: Easier to scale applications across multiple machines or processes.

- Simplicity: Simplifies the design of concurrent systems by using messages to communicate state changes.

- Types of Message Passing:

- Synchronous: The sender waits for the receiver to acknowledge receipt of the message.

- Asynchronous: The sender sends the message and continues without waiting for an acknowledgment.

5.1. Channels (mpsc)

Channels are a common implementation of message passing, found in languages such as Go and Rust. In Rust's standard library they live in the `mpsc` module, which stands for "multiple producers, single consumer": a channel that allows multiple threads to send messages to a single receiver.

- Characteristics:

- Thread Safety: Channels are designed to be safe for concurrent use, allowing multiple producers to send messages without additional synchronization.

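- Buffering: Channels can be unbounded or bounded. In Rust, `mpsc::channel()` creates an unbounded channel whose sender never blocks, while `mpsc::sync_channel(n)` creates a bounded channel that buffers up to `n` messages and blocks the sender when full; `sync_channel(0)` is a rendezvous channel in which each send waits for the receiver.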

- Implementation:

- In Rust, channels can be created using the `std::sync::mpsc` module.

Example of using channels in Rust:

```rust
use std::sync::mpsc;
use std::thread;

fn main() {
    // tx is the sending half, rx the receiving half.
    let (tx, rx) = mpsc::channel();

    thread::spawn(move || {
        let val = String::from("Hello from thread");
        tx.send(val).unwrap(); // ownership of `val` moves into the channel
    });

    let received = rx.recv().unwrap(); // blocks until a message arrives
    println!("Received: {}", received);
}
```

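- Key Functions:

- `send()`: Sends a message through the channel.

- `recv()`: Receives a message from the channel, blocking until one is available; it returns an `Err` once every sender has been dropped and the channel is empty.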
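To show the "multiple producers" half of mpsc, the sketch below clones the sender for each producer thread and uses a bounded `sync_channel(2)`, so a producer blocks in `send()` whenever two messages are already waiting. The function name `collect_from_producers` is hypothetical.

```rust
use std::sync::mpsc;
use std::thread;

// Several producers share one bounded channel; the single receiver collects everything.
fn collect_from_producers(producers: u32) -> Vec<u32> {
    let (tx, rx) = mpsc::sync_channel(2); // buffers at most two messages
    for id in 0..producers {
        let tx = tx.clone(); // each producer gets its own SyncSender
        thread::spawn(move || {
            for n in 0..3 {
                tx.send(id * 10 + n).unwrap(); // blocks while the buffer is full
            }
        });
    }
    drop(tx); // drop the original so the channel closes when producers finish
    // iter() yields messages until every sender has been dropped.
    let mut received: Vec<u32> = rx.iter().collect();
    received.sort(); // arrival order across producers is not deterministic
    received
}

fn main() {
    println!("{:?}", collect_from_producers(2)); // [0, 1, 2, 10, 11, 12]
}
```

Dropping the original `tx` matters: if it stayed alive, `rx.iter()` would wait forever for a message that never comes.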

By utilizing condition variables and message passing, developers can create efficient and safe concurrent applications that minimize the risks associated with shared state and synchronization.

5.2. Crossbeam Channels

Crossbeam is a Rust library that provides powerful concurrency tools, including channels for message passing between threads. Channels are essential for building concurrent applications, allowing threads to communicate safely and efficiently.

- Types of Channels: Crossbeam offers two main types of channels:

- Unbounded Channels: These channels can hold an unlimited number of messages. They are useful when you don't want to block the sender.

- Bounded Channels: These channels have a fixed capacity. If the channel is full, the sender will block until space is available.

- Creating a Channel:

- Use `crossbeam::channel::unbounded()` for an unbounded channel.

- Use `crossbeam::channel::bounded(size)` for a bounded channel.

- Sending and Receiving Messages:

- Use the `send()` method to send messages.

- Use the `recv()` method to receive messages.

Example code snippet:

```rust
use crossbeam::channel;
use std::thread;

fn main() {
    let (sender, receiver) = channel::unbounded();

    thread::spawn(move || {
        sender.send("Hello, World!").unwrap();
    });

    // Blocks until the spawned thread has sent its message.
    let message = receiver.recv().unwrap();
    println!("{}", message);
}
```

- Benefits of Crossbeam Channels:

- Performance: Crossbeam channels are designed for high performance and low latency.

- Safety: They ensure thread safety, preventing data races.

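- Flexibility: Besides blocking `send`/`recv`, they offer non-blocking `try_send`/`try_recv`, timeout variants such as `recv_timeout`, and a `select!` macro for waiting on several channels at once. Both senders and receivers can be cloned, so one channel can have multiple producers and multiple consumers.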
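Crossbeam's `Receiver` implements `Clone`, so several consumer threads can receive from one channel directly. The standard library's `mpsc::Receiver` does not, so for comparison here is a std-only sketch of the usual workaround: sharing the receiver behind `Arc<Mutex<...>>`. The function name `share_receiver` is hypothetical. Consumers take turns on the mutex while they wait, one of the costs a true multi-consumer channel avoids.

```rust
use std::sync::mpsc;
use std::sync::{Arc, Mutex};
use std::thread;

/// Sends `jobs` numbers to `workers` consumer threads that share one std Receiver.
/// Returns how many jobs each worker handled.
fn share_receiver(workers: usize, jobs: u32) -> Vec<usize> {
    let (tx, rx) = mpsc::channel::<u32>();
    // std's Receiver is not Clone, so the consumers share it behind a Mutex.
    let rx = Arc::new(Mutex::new(rx));

    let mut handles = Vec::new();
    for _ in 0..workers {
        let rx = Arc::clone(&rx);
        handles.push(thread::spawn(move || {
            let mut handled: usize = 0;
            loop {
                // The consumer holding the lock waits in recv(); the others wait for the lock.
                let next = rx.lock().unwrap().recv();
                match next {
                    Ok(_job) => handled += 1,
                    Err(_) => break, // every sender is gone and the channel is empty
                }
            }
            handled
        }));
    }

    for job in 0..jobs {
        tx.send(job).unwrap();
    }
    drop(tx); // lets each consumer's recv() fail once the queue drains

    handles.into_iter().map(|h| h.join().unwrap()).collect()
}

fn main() {
    let per_worker = share_receiver(4, 100);
    println!("jobs per worker: {:?}", per_worker);
}
```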

5.3. Actor Model with Actix

The Actor Model is a conceptual model used for designing concurrent systems. Actix is a powerful actor framework for Rust that allows developers to build concurrent applications using the Actor Model.

- Key Concepts:

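- Actors: Independent units of computation that encapsulate state and behavior. Each actor processes the messages in its mailbox one at a time, so its internal state needs no locks.

- Messages: Actors communicate by sending messages to each other. A message is an ordinary Rust type that implements Actix's `Message` trait, which declares the type of the reply.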

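- Creating an Actor:

- Define a struct to represent the actor.

- Implement the `Actor` trait for the struct.

- For each message type `M` the actor accepts, implement `Message` for `M` and `Handler<M>` for the actor.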

Example code snippet:

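```rust
use actix::prelude::*;

// The actor: owns its state and handles messages one at a time.
struct MyActor;

impl Actor for MyActor {
    type Context = Context<Self>;
}

// A separate message type; `Result` declares the type of the reply.
struct Greet;

impl Message for Greet {
    type Result = String;
}

impl Handler<Greet> for MyActor {
    type Result = String;

    fn handle(&mut self, _msg: Greet, _ctx: &mut Self::Context) -> Self::Result {
        "Hello from MyActor!".to_string()
    }
}

#[actix::main]
async fn main() {
    // Start the actor and obtain its address.
    let addr = MyActor.start();
    // `send` returns a future that resolves to the handler's reply.
    match addr.send(Greet).await {
        Ok(reply) => println!("{}", reply),
        Err(err) => eprintln!("mailbox error: {}", err),
    }
}
```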

- Benefits of Using Actix:

- Concurrency: Actix allows for high levels of concurrency, making it suitable for building scalable applications.

- Fault Tolerance: Because actors share no state, a failed actor can be restarted without affecting the others; Actix supports this through its `Supervisor` type and `Supervised` trait.

- Ease of Use: Actix provides a straightforward API for defining actors and handling messages.
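Actix takes care of mailboxes, scheduling, and supervision. To show what the Actor Model itself boils down to, here is a rough std-only sketch with no Actix involved: the actor is a thread that owns its state and a mailbox channel, and replies travel back on a channel carried inside the message. `CounterActor` and `Msg` are hypothetical names for this illustration.

```rust
use std::sync::mpsc::{self, Sender};
use std::thread::{self, JoinHandle};

/// Messages the hypothetical counter actor understands.
enum Msg {
    Add(i64),
    /// Carries a channel on which the actor sends its reply.
    Get(Sender<i64>),
}

/// Handle to a running actor; its state is reachable only through messages.
struct CounterActor {
    mailbox: Sender<Msg>,
    worker: JoinHandle<i64>,
}

impl CounterActor {
    fn start() -> Self {
        let (mailbox, inbox) = mpsc::channel::<Msg>();
        let worker = thread::spawn(move || {
            // The state lives on the actor's own thread, so it needs no lock.
            let mut total: i64 = 0;
            // Messages are handled one at a time, in the order they arrive.
            for msg in inbox {
                match msg {
                    Msg::Add(n) => total += n,
                    Msg::Get(reply) => {
                        let _ = reply.send(total);
                    }
                }
            }
            total // the mailbox closed: hand back the final state
        });
        CounterActor { mailbox, worker }
    }

    fn add(&self, n: i64) {
        self.mailbox.send(Msg::Add(n)).expect("actor has stopped");
    }

    fn get(&self) -> i64 {
        let (reply_tx, reply_rx) = mpsc::channel();
        self.mailbox.send(Msg::Get(reply_tx)).expect("actor has stopped");
        reply_rx.recv().expect("actor dropped the reply channel")
    }

    /// Closes the mailbox and waits for the actor thread to finish.
    fn stop(self) -> i64 {
        drop(self.mailbox);
        self.worker.join().unwrap()
    }
}

fn main() {
    let actor = CounterActor::start();
    actor.add(2);
    actor.add(3);
    println!("current total: {}", actor.get());
    println!("final total: {}", actor.stop());
}
```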

6. Async Programming in Rust

Async programming in Rust allows developers to write non-blocking code, which is essential for building responsive applications, especially in I/O-bound scenarios.

- Key Features:

- Futures: The core abstraction for asynchronous programming in Rust. A future represents a value that may not be available yet. Futures are lazy: they make no progress until an executor, such as the one in Tokio, polls them.

- Async/Await Syntax: Rust provides the `async` and `await` keywords to simplify writing asynchronous code.

- Creating an Async Function:

- Use the `async fn` syntax to define an asynchronous function.

- Use `.await` to wait for a future to resolve.

Example code snippet:

```rust
#[tokio::main]
async fn main() {
    let result = async_function().await;
    println!("{}", result);
}

async fn async_function() -> String {
    "Hello from async function!".to_string()
}
```

- Benefits of Async Programming:

- Efficiency: Non-blocking I/O operations allow for better resource utilization.

- Scalability: Async code can handle many connections concurrently on a small number of threads, making it ideal for web servers and network applications.

- Improved Responsiveness: Applications remain responsive while waiting for I/O operations to complete.

At Rapid Innovation, we leverage these advanced programming paradigms, including Rust concurrency tools, to help our clients build robust, scalable, and efficient applications. By integrating cutting-edge technologies like Rust's concurrency tools and async programming, we ensure that our clients achieve greater ROI through enhanced performance and responsiveness in their software solutions. Partnering with us means you can expect not only technical excellence but also a commitment to delivering solutions that align with your business goals.

6.1. Futures and Async/Await Syntax

Futures in Rust represent a value that may not be immediately available but will be computed at some point in the future. The async/await syntax simplifies working with these futures, making asynchronous programming in Rust more intuitive.

- Futures:

- A future is an abstraction that allows you to work with values that are not yet available.

- It can be thought of as a placeholder for a value that will be computed later.

- Async/Await:

- The `async` keyword is used to define an asynchronous function, which returns a future.

- The `await` keyword, written postfix as `.await`, suspends the async function until the awaited future is resolved, letting the executor run other tasks in the meantime.

- Example:

```rust
async fn fetch_data() -> String {
    // Simulate a network request
    "Data fetched".to_string()
}

// `main` cannot be async on its own; a runtime such as Tokio must drive it.
#[tokio::main]
async fn main() {
    let data = fetch_data().await;
    println!("{}", data);
}
```

- Benefits:

- Improved readability and maintainability of asynchronous code.

- Allows writing asynchronous code that looks similar to synchronous code.
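Because futures are lazy, something has to poll them. The sketch below is a toy single-future executor built only on the standard library (`std::task::Wake` and thread parking); it shows the mechanics under those assumptions and is not how Tokio is implemented. `YieldOnce` is a hypothetical future that stands in for a real I/O wait by returning `Pending` once.

```rust
use std::future::Future;
use std::pin::{pin, Pin};
use std::sync::Arc;
use std::task::{Context, Poll, Wake, Waker};
use std::thread::{self, Thread};

/// Waker that unparks the thread sitting in `block_on`.
struct ThreadWaker(Thread);

impl Wake for ThreadWaker {
    fn wake(self: Arc<Self>) {
        self.0.unpark();
    }
}

/// Toy executor: polls one future until it is ready, parking the thread
/// whenever the future reports `Pending`.
fn block_on<F: Future>(fut: F) -> F::Output {
    let mut fut = pin!(fut);
    let waker: Waker = Arc::new(ThreadWaker(thread::current())).into();
    let mut cx = Context::from_waker(&waker);
    loop {
        match fut.as_mut().poll(&mut cx) {
            Poll::Ready(value) => return value,
            Poll::Pending => thread::park(), // sleep until wake() is called
        }
    }
}

/// `Pending` on the first poll (after requesting a wake-up), `Ready` on the next.
struct YieldOnce {
    yielded: bool,
}

impl Future for YieldOnce {
    type Output = ();
    fn poll(mut self: Pin<&mut Self>, cx: &mut Context<'_>) -> Poll<()> {
        if self.yielded {
            Poll::Ready(())
        } else {
            self.yielded = true;
            cx.waker().wake_by_ref();
            Poll::Pending
        }
    }
}

async fn fetch_data() -> String {
    YieldOnce { yielded: false }.await; // pretend we waited on the network
    "Data fetched".to_string()
}

async fn fetch_len() -> usize {
    let data = fetch_data().await;
    data.len()
}

fn main() {
    // Calling an async fn only creates a future; none of its body has run yet.
    let fut = fetch_len();
    println!("{}", block_on(fut));
}
```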

6.2. Tokio Runtime

Tokio is an asynchronous runtime for Rust that provides the tools needed to write non-blocking applications. It executes futures built on the standard library's `Future` trait and is designed to work seamlessly with async/await syntax.

- Key Features:

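- Event Loop: Tokio's reactor waits for readiness events from the operating system (epoll, kqueue, or IOCP, via the `mio` crate) and wakes the tasks whose I/O can make progress.

- Task Scheduling: It schedules tasks to run concurrently; the default multi-threaded runtime spreads them over a pool of worker threads and uses work stealing to balance the load.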

- Timers and I/O: Provides utilities for working with timers and asynchronous I/O operations.

- Setting Up Tokio:

- Add Tokio to your `Cargo.toml`:

```toml
[dependencies]
tokio = { version = "1", features = ["full"] }
```

- Creating a Tokio Runtime:

```rust
#[tokio::main]
async fn main() {
    // Your async code here
}
```

- Benefits:

- High performance due to its non-blocking nature.

- Extensive ecosystem with libraries for various asynchronous tasks.
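To illustrate what "schedules tasks to run concurrently" means, the following toy executor keeps a FIFO run queue on a single thread and re-queues a task whenever its waker fires. It is a simplified sketch using only the standard library; Tokio's real scheduler is multi-threaded and far more sophisticated, and the names `MiniExecutor`, `Task`, and `YieldOnce` are hypothetical.

```rust
use std::future::Future;
use std::pin::Pin;
use std::sync::mpsc::{self, Receiver, Sender};
use std::sync::{Arc, Mutex};
use std::task::{Context, Poll, Wake, Waker};

type BoxFuture = Pin<Box<dyn Future<Output = ()> + Send>>;

/// A spawned task: its future plus a way to put itself back on the run queue.
struct Task {
    future: Mutex<Option<BoxFuture>>,
    run_queue: Mutex<Sender<Arc<Task>>>,
}

impl Wake for Task {
    fn wake(self: Arc<Self>) {
        // Waking a task just re-queues it; the executor polls it again later.
        let queue = self.run_queue.lock().unwrap().clone();
        let _ = queue.send(self);
    }
}

/// Single-threaded FIFO executor (a toy, far simpler than Tokio's scheduler).
struct MiniExecutor {
    ready: Receiver<Arc<Task>>,
    spawner: Sender<Arc<Task>>,
}

impl MiniExecutor {
    fn new() -> Self {
        let (spawner, ready) = mpsc::channel();
        MiniExecutor { ready, spawner }
    }

    fn spawn(&self, fut: impl Future<Output = ()> + Send + 'static) {
        let fut: BoxFuture = Box::pin(fut);
        let task = Arc::new(Task {
            future: Mutex::new(Some(fut)),
            run_queue: Mutex::new(self.spawner.clone()),
        });
        self.spawner.send(task).unwrap();
    }

    /// Polls queued tasks until every task has finished.
    fn run(self) {
        let MiniExecutor { ready, spawner } = self;
        drop(spawner); // only tasks hold senders now; recv() fails once all are gone
        while let Ok(task) = ready.recv() {
            let mut slot = task.future.lock().unwrap();
            if let Some(mut fut) = slot.take() {
                let waker = Waker::from(Arc::clone(&task));
                let mut cx = Context::from_waker(&waker);
                if fut.as_mut().poll(&mut cx).is_pending() {
                    *slot = Some(fut); // not done yet: keep it until the next wake-up
                }
            }
        }
    }
}

/// Hypothetical future: `Pending` once (after requesting a wake-up), then `Ready`.
struct YieldOnce {
    yielded: bool,
}

impl Future for YieldOnce {
    type Output = ();
    fn poll(mut self: Pin<&mut Self>, cx: &mut Context<'_>) -> Poll<()> {
        if self.yielded {
            Poll::Ready(())
        } else {
            self.yielded = true;
            cx.waker().wake_by_ref();
            Poll::Pending
        }
    }
}

/// Two tasks that each log a step and then yield, twice.
fn interleaving_demo() -> Vec<String> {
    let log = Arc::new(Mutex::new(Vec::<String>::new()));
    let executor = MiniExecutor::new();
    for name in ["a", "b"] {
        let log = Arc::clone(&log);
        executor.spawn(async move {
            for step in 0..2 {
                log.lock().unwrap().push(format!("{name}{step}"));
                YieldOnce { yielded: false }.await; // let the other task run
            }
        });
    }
    executor.run();
    let entries = log.lock().unwrap().clone();
    entries
}

fn main() {
    println!("{:?}", interleaving_demo());
}
```

Because both tasks yield after each step, their log entries interleave (`a0`, `b0`, `a1`, `b1`) even though a single thread runs them: concurrency without parallelism.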

6.3. Async I/O Operations

Async I/O operations allow for non-blocking input and output, enabling applications to handle many tasks concurrently instead of waiting for each operation to complete.

- Key Concepts:

- Non-blocking I/O: Operations that do not block the execution of the program while waiting for data.

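- Stream and Sink: Streams represent a series of values produced over time, while sinks are used to send values. Both are traits from the `futures` crate rather than the standard library (Tokio offers stream utilities in `tokio-stream`).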

- Example of Async File Read:

```rust
use tokio::fs::File;
use tokio::io::{self, AsyncReadExt};

#[tokio::main]
async fn main() -> io::Result<()> {
    let mut file = File::open("example.txt").await?;
    let mut contents = vec![];
    file.read_to_end(&mut contents).await?;
    println!("{:?}", contents);
    Ok(())
}
```

- Benefits:

- Improved application responsiveness by allowing other tasks to run while waiting for I/O operations.

- Efficient resource utilization, especially in networked applications.
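Under the hood, async runtimes rely on sockets in non-blocking mode, where an operation that cannot complete returns `WouldBlock` instead of waiting. A small std-only sketch of that behavior, assuming a loopback TCP socket can be opened; the retry loop with `sleep` stands in for the readiness notification a runtime like Tokio would use, and `nonblocking_accept_demo` is a hypothetical name.

```rust
use std::io;
use std::net::{TcpListener, TcpStream};
use std::thread;
use std::time::Duration;

/// Returns (first accept reported WouldBlock, a later accept succeeded).
fn nonblocking_accept_demo() -> io::Result<(bool, bool)> {
    // Port 0 lets the OS pick any free port on the loopback interface.
    let listener = TcpListener::bind("127.0.0.1:0")?;
    listener.set_nonblocking(true)?;

    // No client has connected yet, so accept() returns at once with WouldBlock.
    let first_would_block = matches!(
        listener.accept(),
        Err(e) if e.kind() == io::ErrorKind::WouldBlock
    );

    // Connect a client, then retry accept() until the connection is ready.
    let _client = TcpStream::connect(listener.local_addr()?)?;
    for _ in 0..100 {
        match listener.accept() {
            Ok((_stream, _addr)) => return Ok((first_would_block, true)),
            Err(e) if e.kind() == io::ErrorKind::WouldBlock => {
                // The thread is not stuck here; it could do other work instead of sleeping.
                thread::sleep(Duration::from_millis(10));
            }
            Err(e) => return Err(e),
        }
    }
    Ok((first_would_block, false))
}

fn main() -> io::Result<()> {
    let (would_block, accepted) = nonblocking_accept_demo()?;
    println!("first accept would block: {would_block}; accepted later: {accepted}");
    Ok(())
}
```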

By leveraging futures, async/await syntax, and the Tokio runtime, developers can create highly efficient and responsive applications in Rust. At Rapid Innovation, we harness these advanced async programming techniques in Rust to deliver robust solutions that drive greater ROI for our clients. Partnering with us means you can expect enhanced performance, reduced time-to-market, and a significant competitive edge in your industry. Let us help you achieve your goals efficiently and effectively.

6.4. Error Handling in Async Code

Error handling in asynchronous code is crucial for maintaining the stability and reliability of applications. Unlike synchronous code, where errors can be caught in a straightforward manner, async code requires a more nuanced approach. Here are some key strategies for effective error handling in async programming:

- Use Try-Catch Blocks: Wrap your async calls in try-catch blocks to handle exceptions gracefully.

```javascript
async function fetchData() {
  try {
    const response = await fetch('https://api.example.com/data');
    // fetch() rejects only on network failure, so check the HTTP status too.
    if (!response.ok) {
      throw new Error(`HTTP error: ${response.status}`);
    }
    return await response.json();
  } catch (error) {
    console.error('Error fetching data:', error);
    throw error; // rethrow so callers (e.g. a .catch() handler) can react too
  }
}
```

- Promise Rejection Handling: Always handle promise rejections using `.catch()` to avoid unhandled promise rejections.

```javascript
fetchData()
  .then(data => console.log(data))
  .catch(error => console.error('Error:', error));
```

- Centralized Error Handling: Implement a centralized error handling mechanism to manage errors across your application. This can be done using middleware in frameworks like Express.js.

- Logging: Use logging libraries to capture error details for debugging purposes. This can help in identifying issues in production environments.

- User Feedback: Provide meaningful feedback to users when an error occurs. This can enhance user experience and help them understand what went wrong.
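The strategies above are written for JavaScript. In Rust, async functions report failures through `Result`, and the `?` operator works across `.await` just as in synchronous code. A minimal sketch, with a tiny std-only `block_on` standing in for a runtime such as Tokio; `fetch_raw`, `fetch_number`, and `AppError` are hypothetical names.

```rust
use std::future::Future;
use std::num::ParseIntError;
use std::pin::pin;
use std::sync::Arc;
use std::task::{Context, Poll, Wake, Waker};
use std::thread::{self, Thread};

// Tiny std-only executor so the example runs without a runtime crate.
struct ThreadWaker(Thread);

impl Wake for ThreadWaker {
    fn wake(self: Arc<Self>) {
        self.0.unpark();
    }
}

fn block_on<F: Future>(fut: F) -> F::Output {
    let mut fut = pin!(fut);
    let waker: Waker = Arc::new(ThreadWaker(thread::current())).into();
    let mut cx = Context::from_waker(&waker);
    loop {
        if let Poll::Ready(value) = fut.as_mut().poll(&mut cx) {
            return value;
        }
        thread::park();
    }
}

#[derive(Debug, PartialEq)]
enum AppError {
    Fetch(String),
    Parse(ParseIntError),
}

// Lets `?` convert parse failures into AppError automatically.
impl From<ParseIntError> for AppError {
    fn from(e: ParseIntError) -> Self {
        AppError::Parse(e)
    }
}

/// Hypothetical stand-in for a network call that can fail.
async fn fetch_raw(input: &str) -> Result<String, String> {
    if input.is_empty() {
        Err("empty response".to_string())
    } else {
        Ok(input.trim().to_string())
    }
}

/// `?` propagates errors across `.await` exactly as in synchronous code.
async fn fetch_number(input: &str) -> Result<i32, AppError> {
    let raw = fetch_raw(input).await.map_err(AppError::Fetch)?;
    let n: i32 = raw.parse()?;
    Ok(n)
}

fn main() {
    for input in [" 42 ", "", "abc"] {
        match block_on(fetch_number(input)) {
            Ok(n) => println!("{input:?} -> {n}"),
            Err(e) => eprintln!("{input:?} failed: {e:?}"),
        }
    }
}
```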

7. Parallel Programming Techniques

Parallel programming techniques allow developers to execute multiple computations simultaneously, improving performance and efficiency. Here are some common techniques:

- Thread-Based Parallelism: This involves using multiple threads to perform tasks concurrently. Each thread can run on a separate core, making it suitable for CPU-bound tasks.

- Process-Based Parallelism: This technique uses multiple processes to achieve parallelism. Each process has its own memory space, which can help avoid issues related to shared state.

- Asynchronous Programming: This allows tasks to run in the background while the main thread continues executing. It is particularly useful for I/O-bound tasks.

- Task Parallelism: This involves breaking down a task into smaller sub-tasks that can be executed in parallel. This is often used in data processing applications.

7.1. Data Parallelism

Data parallelism is a specific type of parallel programming that focuses on distributing data across multiple processors or cores. It is particularly effective for operations that can be performed independently on different pieces of data. Here are some key aspects:

- Vectorization: This technique involves applying the same operation to multiple data points simultaneously. Many programming languages and libraries support vectorized operations, which can significantly speed up computations.

- Map-Reduce: This programming model allows for processing large data sets with a distributed algorithm. The "Map" function processes data in parallel, while the "Reduce" function aggregates the results.

- GPU Computing: Graphics Processing Units (GPUs) are designed for parallel processing and can handle thousands of threads simultaneously. This makes them ideal for data parallelism in applications like machine learning and scientific computing.

- Libraries and Frameworks: Utilize libraries such as OpenMP, MPI, or TensorFlow for implementing data parallelism in your applications. These tools provide abstractions that simplify the development of parallel algorithms.

- Performance Considerations: When implementing data parallelism, consider factors such as data transfer overhead, load balancing, and memory access patterns to optimize performance.
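A minimal sketch of data parallelism in the map-reduce shape described above: every worker squares the elements of its own chunk (map), and the partial sums are combined at the end (reduce). It uses only `std::thread::scope`; the function name and the one-chunk-per-worker split are illustrative assumptions, and libraries such as Rayon automate this splitting.

```rust
use std::thread;

/// Data parallelism: every worker runs the same map step (square) on its own
/// chunk, then the partial sums are combined (reduce step).
fn parallel_sum_of_squares(data: &[u64], workers: usize) -> u64 {
    let workers = workers.max(1);
    // Round up so that every element falls into one of at most `workers` chunks.
    let chunk_len = ((data.len() + workers - 1) / workers).max(1);
    thread::scope(|s| {
        let mut handles = Vec::new();
        for chunk in data.chunks(chunk_len) {
            // Scoped threads may borrow `data` because they finish before scope() returns.
            handles.push(s.spawn(move || chunk.iter().map(|&x| x * x).sum::<u64>()));
        }
        handles.into_iter().map(|h| h.join().unwrap()).sum::<u64>()
    })
}

fn main() {
    let data: Vec<u64> = (1..=1_000).collect();
    println!("{}", parallel_sum_of_squares(&data, 4));
}
```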

By understanding and applying these error handling techniques and parallel programming strategies, developers can create more robust and efficient applications. At Rapid Innovation, we leverage these methodologies to ensure that our clients' applications are not only high-performing but also resilient to errors, ultimately leading to greater ROI and enhanced user satisfaction. Partnering with us means you can expect tailored solutions that drive efficiency and effectiveness in achieving your business goals.

7.2. Task Parallelism

Task parallelism is a programming model that allows multiple tasks to be executed simultaneously. This approach is particularly useful in scenarios where tasks are independent and can be performed concurrently, leading to improved performance and resource utilization.

- Key characteristics of task parallelism:

- Tasks can be executed in any order.

- Each task may have different execution times.

- Ideal for workloads that can be divided into smaller, independent units.

- Benefits of task parallelism:

- Improved performance by utilizing multiple CPU cores.

- Better resource management, as tasks can be distributed across available resources.

- Enhanced responsiveness in applications, especially in user interfaces.

- Common use cases:

- Web servers handling multiple requests.

- Data processing applications that can split workloads.

- Scientific simulations that can run independent calculations.
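Where data parallelism applies the same operation to many pieces of data, task parallelism runs different independent computations at once. A minimal std-only sketch with scoped threads; the three tasks (word count, sum, maximum) are hypothetical stand-ins for real work.

```rust
use std::thread;

/// Task parallelism: three unrelated computations run at the same time.
fn analyze(text: &str, numbers: &[i64]) -> (usize, i64, Option<i64>) {
    thread::scope(|s| {
        let words = s.spawn(|| text.split_whitespace().count());
        let total = s.spawn(|| numbers.iter().sum::<i64>());
        let largest = s.spawn(|| numbers.iter().copied().max());
        // The tasks may finish in any order; join() waits for each result.
        (
            words.join().unwrap(),
            total.join().unwrap(),
            largest.join().unwrap(),
        )
    })
}

fn main() {
    let report = analyze("the quick brown fox", &[3, -1, 7]);
    println!("{:?}", report);
}
```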

7.3. Rayon for Easy Parallelism

Rayon is a data parallelism library for Rust that simplifies the process of writing parallel code. It abstracts away the complexities of thread management, allowing developers to focus on the logic of their applications.

- Features of Rayon:

- Easy-to-use API that integrates seamlessly with Rust's iterator patterns.

- Automatic load balancing through work stealing: idle threads take queued work from busy ones.

- Safe concurrency, leveraging Rust's ownership model to prevent data races.

- Steps to use Rayon:

- Add Rayon to your project by including it in your `Cargo.toml`:

```toml
[dependencies]
rayon = "1.5"
```

- Import Rayon in your Rust file:

```rust
use rayon::prelude::*;
```

- Use parallel iterators to process collections:

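```rust
use rayon::prelude::*;

fn main() {
    let numbers: Vec<i32> = (1..100).collect();
    // par_iter() processes the elements on Rayon's thread pool; sum() combines the results.
    let sum: i32 = numbers.par_iter().map(|&x| x * 2).sum();
    println!("{}", sum);
}
```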

- Advantages of using Rayon:

- Simplifies parallel programming, reducing boilerplate code.

- Adapts to the available hardware: by default, the global thread pool uses one thread per logical CPU.

- Encourages a functional programming style, making code easier to read and maintain.

7.4. SIMD (Single Instruction, Multiple Data)

SIMD (Single Instruction, Multiple Data) is a parallel computing paradigm that allows a single instruction to process multiple data points simultaneously. This technique is particularly effective for tasks that involve large datasets, such as image processing or scientific computations.

- Key aspects of SIMD:

- Operates on vectors of data, applying the same operation to multiple elements.

- Utilizes specialized CPU instructions to enhance performance.

- Can significantly reduce the number of instructions executed, leading to faster processing times.

- Benefits of SIMD:

- Increased throughput for data-intensive applications.

- Reduced power consumption compared to executing multiple instructions sequentially.

- Improved cache utilization, as data is processed in larger chunks.

- Common SIMD implementations:

- Intel's SSE (Streaming SIMD Extensions) and AVX (Advanced Vector Extensions).

- ARM's NEON technology for mobile devices.

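- Rust's `packed_simd` crate for SIMD operations in Rust. Note that it requires a nightly toolchain and has been superseded by the `std::simd` module (also nightly-only); on stable Rust, platform-specific intrinsics are available in `std::arch`.

- Example of SIMD in Rust:

- Add the `packed_simd` crate to your `Cargo.toml`: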

```toml
[dependencies]
packed_simd = "0.3"
```

- Use SIMD types for parallel operations:

```rust
use packed_simd::f32x4;

fn main() {
    let a = f32x4::from_slice_unaligned(&[1.0, 2.0, 3.0, 4.0]);
    let b = f32x4::from_slice_unaligned(&[5.0, 6.0, 7.0, 8.0]);
    let result = a + b; // SIMD addition: all four lanes in one operation
    println!("{:?}", result);
}
```
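Because `packed_simd` needs a nightly compiler, here is a sketch of the same four-lane addition on stable Rust using the `std::arch` SSE intrinsics `_mm_loadu_ps`, `_mm_add_ps`, and `_mm_storeu_ps`. It assumes an x86_64 target (where SSE is always available) and falls back to scalar code on other architectures; `add4` is a hypothetical helper name.

```rust
/// Adds two groups of four f32 values with a single SSE add on x86_64.
#[cfg(target_arch = "x86_64")]
fn add4(a: [f32; 4], b: [f32; 4]) -> [f32; 4] {
    use std::arch::x86_64::{_mm_add_ps, _mm_loadu_ps, _mm_storeu_ps};
    let mut out = [0.0f32; 4];
    // SAFETY: SSE is part of the x86_64 baseline, and each pointer refers to
    // four valid f32 values (the unaligned load/store variants need no alignment).
    unsafe {
        let va = _mm_loadu_ps(a.as_ptr());
        let vb = _mm_loadu_ps(b.as_ptr());
        _mm_storeu_ps(out.as_mut_ptr(), _mm_add_ps(va, vb)); // four lanes at once
    }
    out
}

/// Scalar fallback for other architectures (the compiler may still vectorize it).
#[cfg(not(target_arch = "x86_64"))]
fn add4(a: [f32; 4], b: [f32; 4]) -> [f32; 4] {
    [a[0] + b[0], a[1] + b[1], a[2] + b[2], a[3] + b[3]]
}

fn main() {
    let result = add4([1.0, 2.0, 3.0, 4.0], [5.0, 6.0, 7.0, 8.0]);
    println!("{:?}", result);
}
```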

By leveraging task parallelism programming, libraries like Rayon, and SIMD techniques, developers can significantly enhance the performance of their applications, making them more efficient and responsive. At Rapid Innovation, we specialize in implementing these advanced programming models to help our clients achieve greater ROI through optimized performance and resource utilization. Partnering with us means you can expect improved application responsiveness, better resource management, and ultimately, a more effective path to achieving your business goals.

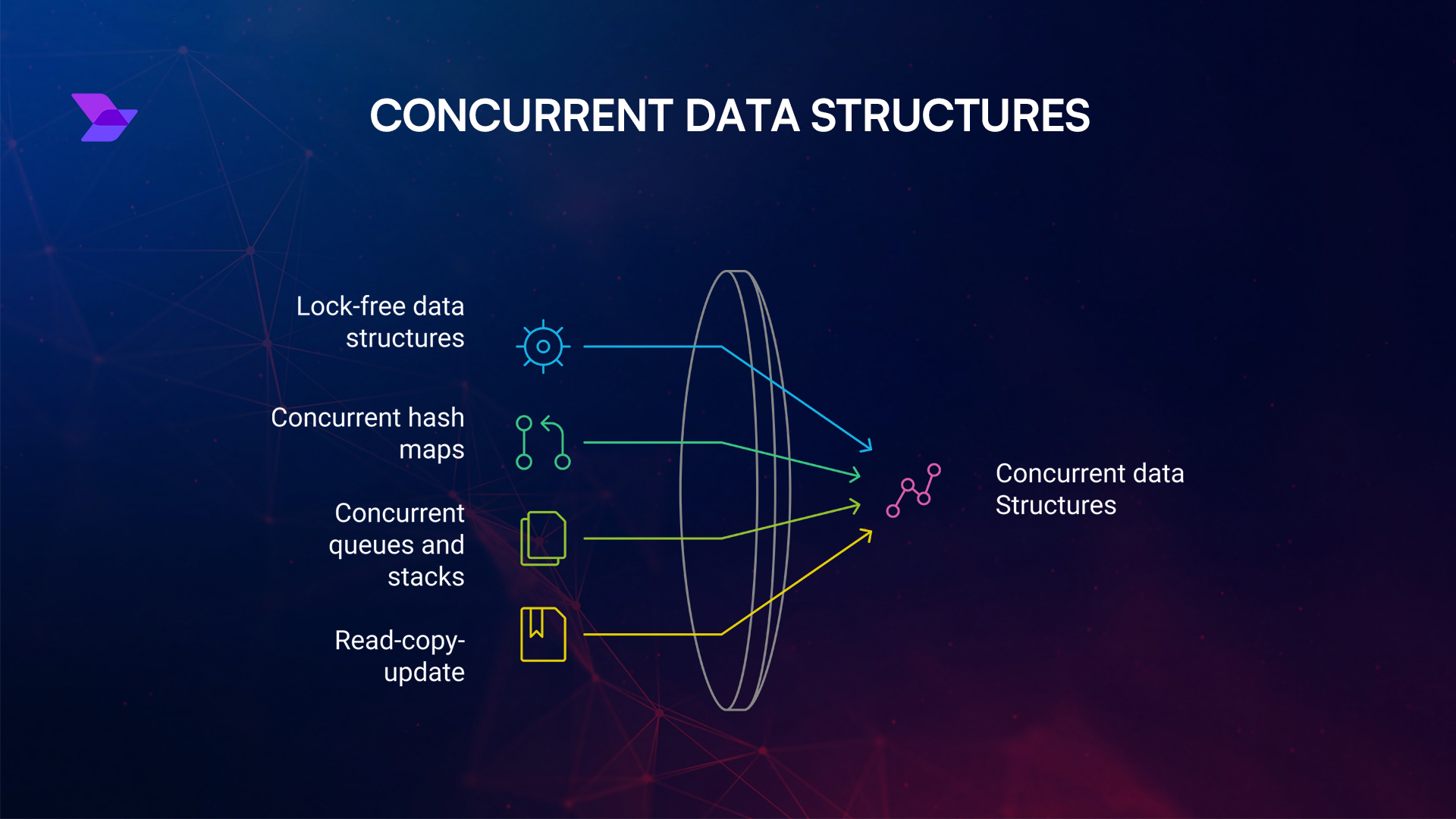

8. Concurrent Data Structures

At Rapid Innovation, we understand that concurrent data structures are crucial for enabling multiple threads to access and modify data simultaneously without causing inconsistencies or corrupting the data. These structures are essential in multi-threaded programming, where performance and data integrity are paramount. By leveraging our expertise in Rust cryptocurrency development, we can help you implement these advanced concurrent data structures to enhance your applications' efficiency and reliability.

8.1. Lock-free Data Structures

Lock-free data structures represent a significant advancement in concurrent programming. They allow threads to operate on shared data without using locks, minimizing thread contention and improving performance, especially in high-concurrency environments.

Benefits of Lock-free Data Structures:

- Increased Performance: By avoiding locks, lock-free structures avoid the cost of blocking and context switches under contention, which often leads to faster execution in highly concurrent workloads.

- Improved Responsiveness: A thread that is delayed or descheduled cannot stop the others, because lock-free algorithms guarantee that at least one thread always makes progress. This helps keep applications responsive, which is critical for user satisfaction.

- Avoidance of Deadlocks: The absence of locks eliminates the risk of deadlocks, ensuring smoother operation of your applications.

Common Lock-free Data Structures:

- Lock-free Stacks: Implemented using atomic operations to allow push and pop operations without locks.

- Lock-free Queues: Enable multiple threads to enqueue and dequeue items concurrently.

- Lock-free Lists: Allow for concurrent insertions and deletions without locks.

Implementation Steps for a Lock-free Stack:

- Use atomic pointers to represent the top of the stack.

- Implement push and pop operations using compare-and-swap (CAS) to ensure thread safety.

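- Ensure that the stack maintains its integrity during concurrent operations, including safe memory reclamation: a popped node must not be freed while another thread may still read it, and a reused address can fool the CAS (the ABA problem). Production implementations use hazard pointers, epoch-based reclamation, or a garbage collector for this.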

Example Code for a Lock-free Stack:

```cpp
#include <atomic>

class LockFreeStack {
private:
    struct Node {
        int data;
        Node* next;
    };
    std::atomic<Node*> head;

public:
    LockFreeStack() : head(nullptr) {}

    // Frees the remaining nodes; call only when no other thread uses the stack.
    ~LockFreeStack() {
        int ignored;
        while (pop(ignored)) {}
    }

    void push(int value) {
        Node* newNode = new Node{value, nullptr};
        Node* oldHead;
        do {
            oldHead = head.load();
            newNode->next = oldHead;
        } while (!head.compare_exchange_weak(oldHead, newNode)); // retry if head changed
    }

    bool pop(int& value) {
        Node* oldHead;
        do {
            oldHead = head.load();
            if (!oldHead) return false; // Stack is empty
            // Caution: without hazard pointers or epoch-based reclamation, another
            // thread may pop and delete oldHead before we read oldHead->next (ABA).
        } while (!head.compare_exchange_weak(oldHead, oldHead->next));
        value = oldHead->data;
        delete oldHead;
        return true;
    }
};
```
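The compare-and-swap retry loop at the heart of the stack can be shown in safe Rust without the memory-reclamation problem by applying it to a single atomic integer. This sketch uses `AtomicUsize::compare_exchange_weak` to implement a hypothetical "increment only while below a limit" operation, something a plain `fetch_add` cannot express.

```rust
use std::sync::atomic::{AtomicUsize, Ordering};
use std::thread;

/// Lock-free "increment if below `limit`": returns true if this call incremented.
fn try_increment_below(counter: &AtomicUsize, limit: usize) -> bool {
    // The counter guards no other data, so Relaxed ordering is enough here.
    let mut current = counter.load(Ordering::Relaxed);
    loop {
        if current >= limit {
            return false;
        }
        // CAS succeeds only if nobody changed the value since we read it.
        match counter.compare_exchange_weak(current, current + 1, Ordering::Relaxed, Ordering::Relaxed) {
            Ok(_) => return true,
            // Lost the race (or a spurious failure): retry with the fresh value.
            Err(actual) => current = actual,
        }
    }
}

/// Runs `threads` workers, each making `attempts` increment attempts.
/// Returns (final counter value, total successful increments).
fn run_bounded_counter(threads: usize, attempts: usize, limit: usize) -> (usize, usize) {
    let counter = AtomicUsize::new(0);
    let successes = thread::scope(|s| {
        let mut handles = Vec::new();
        for _ in 0..threads {
            handles.push(s.spawn(|| {
                (0..attempts)
                    .filter(|_| try_increment_below(&counter, limit))
                    .count()
            }));
        }
        handles.into_iter().map(|h| h.join().unwrap()).sum::<usize>()
    });
    (counter.load(Ordering::Relaxed), successes)
}

fn main() {
    let (value, successes) = run_bounded_counter(8, 1000, 5000);
    println!("counter = {value}, successful increments = {successes}");
}
```

No thread ever blocks: a thread whose CAS fails simply retries with the value another thread just wrote, so the counter stops exactly at the limit.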

8.2. Concurrent Hash Maps

Concurrent hash maps are specialized data structures that allow multiple threads to read and write data concurrently while maintaining data integrity. They are particularly useful in scenarios where frequent updates and lookups are required, making them ideal for applications that demand high performance.

Key Features of Concurrent Hash Maps:

- Segmented Locking: Many implementations use segmented locking, where the hash map is divided into segments, each protected by its own lock. This allows multiple threads to access different segments simultaneously, enhancing throughput.

- Lock-free Operations: Some advanced implementations provide lock-free operations for certain read and write actions, further enhancing performance.

- Dynamic Resizing: Concurrent hash maps can dynamically resize themselves to accommodate more entries without significant performance degradation, ensuring scalability.

Implementation Steps for a Concurrent Hash Map:

- Divide the hash map into multiple segments.

- Use locks for each segment to allow concurrent access.

- Implement methods for insertion, deletion, and lookup that respect the locking mechanism.

Example Code for a Simple Concurrent Hash Map:

```cpp
#include <cstddef>
#include <functional>
#include <list>
#include <mutex>
#include <string>
#include <utility>
#include <vector>

class ConcurrentHashMap {
private:
    static const std::size_t numBuckets = 10;
    std::vector<std::list<std::pair<std::string, int>>> table;
    std::vector<std::mutex> locks; // one lock per bucket

    std::size_t bucketFor(const std::string& key) const {
        return std::hash<std::string>{}(key) % numBuckets;
    }

public:
    ConcurrentHashMap() : table(numBuckets), locks(numBuckets) {}

    // Inserts a new key or updates the value of an existing one.
    void insert(const std::string& key, int value) {
        std::size_t index = bucketFor(key);
        std::lock_guard<std::mutex> guard(locks[index]);
        for (auto& pair : table[index]) {
            if (pair.first == key) {
                pair.second = value;
                return;
            }
        }
        table[index].emplace_back(key, value);
    }

    bool find(const std::string& key, int& value) {
        std::size_t index = bucketFor(key);
        std::lock_guard<std::mutex> guard(locks[index]);
        for (const auto& pair : table[index]) {
            if (pair.first == key) {
                value = pair.second;
                return true;
            }
        }
        return false;
    }
};
```
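A Rust version of the same per-segment locking idea, sketched with the standard library only. It replaces the per-bucket `std::mutex` with an `RwLock` per shard so that lookups in the same shard can proceed in parallel; `ShardedMap` and the shard count of 8 are illustrative assumptions, and unlike the structures described above it never resizes its shard list.

```rust
use std::collections::hash_map::DefaultHasher;
use std::collections::HashMap;
use std::hash::{Hash, Hasher};
use std::sync::RwLock;
use std::thread;

/// Hash map split into independently locked shards.
struct ShardedMap<V> {
    shards: Vec<RwLock<HashMap<String, V>>>,
}

impl<V: Clone> ShardedMap<V> {
    fn new(shard_count: usize) -> Self {
        let shards = (0..shard_count.max(1))
            .map(|_| RwLock::new(HashMap::new()))
            .collect();
        ShardedMap { shards }
    }

    /// Picks the shard for a key; the same key always maps to the same shard.
    fn shard(&self, key: &str) -> &RwLock<HashMap<String, V>> {
        let mut hasher = DefaultHasher::new();
        key.hash(&mut hasher);
        &self.shards[(hasher.finish() as usize) % self.shards.len()]
    }

    /// Inserts or replaces a value, write-locking only the key's shard.
    fn insert(&self, key: &str, value: V) {
        self.shard(key).write().unwrap().insert(key.to_string(), value);
    }

    /// Takes a shared read lock, so concurrent lookups in one shard don't block each other.
    fn get(&self, key: &str) -> Option<V> {
        self.shard(key).read().unwrap().get(key).cloned()
    }
}

/// Fills a map from several threads at once (illustrative workload).
fn fill_concurrently(threads: usize, per_thread: usize) -> ShardedMap<usize> {
    let map = ShardedMap::new(8);
    thread::scope(|s| {
        for t in 0..threads {
            let map = &map;
            s.spawn(move || {
                for i in 0..per_thread {
                    map.insert(&format!("t{t}-k{i}"), t * 1000 + i);
                }
            });
        }
    });
    map
}

fn main() {
    let map = fill_concurrently(4, 50);
    println!("{:?}", map.get("t3-k49"));
}
```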

In conclusion, concurrent data structures, particularly lock-free data structures and concurrent hash maps, are vital for efficient multi-threaded programming. They provide mechanisms to ensure data integrity while allowing high levels of concurrency, making them essential in modern software development. By partnering with Rapid Innovation, you can leverage our expertise in java concurrent data structures to implement these advanced structures, ultimately achieving greater ROI and enhancing the performance of your applications. Let us help you navigate the complexities of multi-threaded programming and unlock the full potential of your projects.

8.3. Concurrent Queues and Stacks

Concurrent queues and stacks are data structures designed to handle multiple threads accessing them simultaneously without causing data corruption or inconsistency. They are essential in multi-threaded programming, where threads may need to share data efficiently.

Key Characteristics:

- Thread Safety: These structures are designed to be safe for concurrent access, meaning multiple threads can read and write without causing race conditions.

- Lock-Free Operations: Many concurrent queues and stacks implement lock-free algorithms, allowing threads to operate without traditional locking mechanisms, which can lead to performance bottlenecks.

Types of Concurrent Queues:

- Blocking Queues: These queues block the calling thread when trying to dequeue from an empty queue or enqueue to a full queue. They are useful in producer-consumer scenarios.

- Non-Blocking Queues: These allow threads to attempt to enqueue or dequeue without blocking, often using atomic operations to ensure thread safety.

Implementation Steps:

- Choose a suitable concurrent data structure based on your application needs (e.g., `ConcurrentLinkedQueue` in Java).

- Use atomic operations (like compare-and-swap) to manage state changes.

- Ensure proper handling of edge cases, such as empty queues or stacks.

Example Code for a Concurrent Queue in Java:

```java
import java.util.concurrent.ConcurrentLinkedQueue;

public class ConcurrentQueueExample {
    public static void main(String[] args) {
        ConcurrentLinkedQueue<Integer> queue = new ConcurrentLinkedQueue<>();

        // Adding elements
        queue.offer(1);
        queue.offer(2);

        // Removing elements
        Integer element = queue.poll();
        System.out.println("Removed: " + element);
    }
}
```

8.4. Read-Copy-Update (RCU)

Read-Copy-Update (RCU) is a synchronization mechanism that allows multiple threads to read shared data concurrently while updates are made in a way that does not interfere with ongoing reads. This is particularly useful in scenarios where reads are more frequent than writes.

Key Features:

- Read Optimization: RCU allows readers to access data without locking, which significantly improves performance in read-heavy applications.

- Deferred Updates: Updates are made to a copy of the data, and once the update is complete, the new version is made visible to readers.

Implementation Steps:

- Create a data structure that supports RCU.

- When a thread wants to read, it accesses the current version of the data.

- For updates, create a new version of the data, update it, and then switch the pointer to the new version.

- Use a mechanism to ensure that no readers are accessing the old version before it is freed.

Example Code for RCU in C:

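```c
#include <stdatomic.h>
#include <stdio.h>
#include <stdlib.h>

typedef struct Node {
    int data;
    struct Node* next;
} Node;

/* Readers follow this pointer; writers publish new versions by replacing it. */
static _Atomic(Node*) head = NULL;

/* Placeholders: a real RCU library (e.g. the Linux kernel or liburcu) marks
   read-side critical sections here so writers can detect when readers finish. */
static void rcu_read_lock(void) {}
static void rcu_read_unlock(void) {}

/* Placeholder for a grace-period wait such as liburcu's synchronize_rcu():
   it must return only after all readers that might see the old data are done. */
static void wait_for_readers(void) {}

/* Writers are assumed to be serialized (e.g. by a mutex); RCU makes only reads lock-free. */
void update_data(int new_data) {
    Node* new_node = malloc(sizeof(Node));
    if (!new_node) return;
    new_node->data = new_data;
    new_node->next = atomic_load_explicit(&head, memory_order_relaxed);
    /* Release ordering publishes the fully initialised node before the pointer. */
    atomic_store_explicit(&head, new_node, memory_order_release);
}

void remove_first(void) {
    Node* old = atomic_load_explicit(&head, memory_order_relaxed);
    if (!old) return;
    atomic_store_explicit(&head, old->next, memory_order_release);
    wait_for_readers(); /* readers may still be traversing the old node */
    free(old);
}

void read_data(void) {
    rcu_read_lock();
    /* Acquire pairs with the writer's release, so node contents are visible. */
    Node* current = atomic_load_explicit(&head, memory_order_acquire);
    while (current) {
        printf("%d\n", current->data);
        current = current->next;
    }
    rcu_read_unlock();
}
```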
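In Rust, a rough approximation of RCU can be built from `RwLock<Arc<T>>`: readers clone the current `Arc` (holding the lock only for that instant), writers copy, modify, and swap in a new `Arc`, and reference counting frees the old version once its last reader drops it. This is a simplified std-only sketch, not true RCU, since readers still take a brief lock; `RcuCell` is a hypothetical name.

```rust
use std::sync::{Arc, RwLock};
use std::thread;

/// Read-mostly cell in the spirit of RCU.
struct RcuCell<T> {
    current: RwLock<Arc<T>>,
}

impl<T: Clone> RcuCell<T> {
    fn new(value: T) -> Self {
        RcuCell { current: RwLock::new(Arc::new(value)) }
    }

    /// Read side: the lock is held only long enough to clone the pointer.
    fn read(&self) -> Arc<T> {
        let guard = self.current.read().unwrap();
        Arc::clone(&*guard)
    }

    /// Update side: copy the current version, apply `f`, then publish the copy.
    /// Writers are serialized by the write lock; the old version is freed when
    /// the last reader's Arc is dropped, which plays the role of a grace period.
    fn update(&self, f: impl FnOnce(&mut T)) {
        let mut guard = self.current.write().unwrap();
        let mut next: T = (**guard).clone();
        f(&mut next);
        *guard = Arc::new(next);
    }
}

fn main() {
    let cell = RcuCell::new(vec![1, 2, 3]);
    thread::scope(|s| {
        s.spawn(|| {
            let snapshot = cell.read();
            println!("reader saw {} items", snapshot.len());
        });
        s.spawn(|| cell.update(|v| v.push(4)));
    });
    println!("final: {:?}", cell.read());
}
```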

9. Advanced Concurrency Patterns

Advanced concurrency patterns extend basic concurrency mechanisms to solve more complex problems in multi-threaded environments. These patterns help manage shared resources, coordinate tasks, and improve performance.

Common Patterns:

- Fork-Join: This pattern divides a task into subtasks that can be processed in parallel and then combines the results.

- Pipeline: In this pattern, data flows through a series of processing stages, with each stage potentially running in parallel.

- Actor Model: This model encapsulates state and behavior in "actors" that communicate through message passing, avoiding shared state issues.

Implementation Steps:

- Identify the concurrency pattern that best fits your problem.

- Design the architecture to support the chosen pattern.

- Implement synchronization mechanisms as needed to ensure data integrity.

Example Code for Fork-Join in Java:

```java
import java.util.concurrent.RecursiveTask;
import java.util.concurrent.ForkJoinPool;

public class ForkJoinExample extends RecursiveTask<Integer> {
    private final int start;
    private final int end;

    public ForkJoinExample(int start, int end) {
        this.start = start;
        this.end = end;
    }

    @Override
    protected Integer compute() {
        if (end - start <= 10) {
            return computeDirectly();
        }
        int mid = (start + end) / 2;
        ForkJoinExample leftTask = new ForkJoinExample(start, mid);
        ForkJoinExample rightTask = new ForkJoinExample(mid, end);
        leftTask.fork();
        return rightTask.compute() + leftTask.join();
    }

    private Integer computeDirectly() {
        // Direct computation logic
        return end - start; // Example logic
    }

    public static void main(String[] args) {
        ForkJoinPool pool = new ForkJoinPool();
        ForkJoinExample task = new ForkJoinExample(0, 100);
        int result = pool.invoke(task);
        System.out.println("Result: " + result);
    }
}
```
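The pipeline pattern maps naturally onto Rust channels: each stage is a thread that reads from one channel and writes to the next, and closing a channel (by dropping its sender) shuts the following stage down. A minimal std-only sketch with three hypothetical stages (generate, square, keep even); `run_pipeline` is an illustrative name.

```rust
use std::sync::mpsc;
use std::thread;

/// Three-stage pipeline: generate -> square -> keep even, each stage on its own thread.
fn run_pipeline(n: u64) -> Vec<u64> {
    let (to_square, square_rx) = mpsc::channel::<u64>();
    let (to_filter, filter_rx) = mpsc::channel::<u64>();
    let (to_sink, sink_rx) = mpsc::channel::<u64>();

    // Stage 1: produce the input values; dropping `to_square` at the end stops stage 2.
    let producer = thread::spawn(move || {
        for i in 1..=n {
            to_square.send(i).unwrap();
        }
    });

    // Stage 2: transform each value as soon as it arrives.
    let squarer = thread::spawn(move || {
        for x in square_rx {
            to_filter.send(x * x).unwrap();
        }
    });

    // Stage 3: keep only even results.
    let filter = thread::spawn(move || {
        for x in filter_rx {
            if x % 2 == 0 {
                to_sink.send(x).unwrap();
            }
        }
    });

    // The calling thread acts as the final consumer.
    let results: Vec<u64> = sink_rx.iter().collect();
    for handle in [producer, squarer, filter] {
        handle.join().unwrap();
    }
    results
}

fn main() {
    println!("{:?}", run_pipeline(10));
}
```

All three stages run at the same time: while stage 3 filters one value, stage 2 can already be squaring the next.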

At Rapid Innovation, we understand the complexities of multi-threaded programming and the importance of efficient data handling. By leveraging our expertise in concurrent queues and stacks and advanced concurrency patterns, we can help you optimize your applications for better performance and reliability. Partnering with us means you can expect enhanced scalability, reduced latency, and ultimately, a greater return on investment as we tailor solutions to meet your specific needs. Let us guide you in achieving your goals effectively and efficiently.

9.1. Dining Philosophers Problem

The Dining Philosophers Problem is a classic synchronization problem in computer science that illustrates the challenges of resource sharing among multiple processes. It involves five philosophers sitting around a table, where each philosopher alternates between thinking and eating. To eat, a philosopher needs two forks, which are shared with their neighbors.

Key concepts:

- Deadlock: A situation where philosophers hold one fork and wait indefinitely for the other.

- Starvation: A scenario where a philosopher is perpetually denied access to both forks.

- Concurrency: Multiple philosophers trying to eat simultaneously.

To solve the Dining Philosophers Problem, several strategies can be employed:

- Resource Hierarchy: Assign a strict order to the forks, ensuring that philosophers pick up the lower-numbered fork first.

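- Chandy/Misra Solution: Philosophers request forks from their neighbors by message. Each fork is marked clean or dirty, and a philosopher holding a dirty fork must hand it over when asked, which prevents both deadlock and starvation.

- Asymmetric Solution: Make one philosopher pick up the right fork first while all the others pick up the left fork first. This breaks the circular wait, so deadlock cannot occur.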

Example code for a simple solution using locks and the resource-hierarchy rule:

```python
import threading
import time

class Philosopher(threading.Thread):
    def __init__(self, name, first_fork, second_fork, meals=3):
        threading.Thread.__init__(self)
        self.name = name
        # Forks arrive in global order (lower-numbered first) to prevent deadlock.
        self.first_fork = first_fork
        self.second_fork = second_fork
        self.meals = meals

    def run(self):
        for _ in range(self.meals):
            self.think()
            self.eat()

    def think(self):
        print(f"{self.name} is thinking.")
        time.sleep(1)

    def eat(self):
        with self.first_fork:
            with self.second_fork:
                print(f"{self.name} is eating.")
                time.sleep(1)

forks = [threading.Lock() for _ in range(5)]
philosophers = []
for i in range(5):
    left, right = i, (i + 1) % 5
    # Resource hierarchy: always pick up the lower-numbered fork first.
    first, second = min(left, right), max(left, right)
    philosophers.append(Philosopher(f"Philosopher {i}", forks[first], forks[second]))

for philosopher in philosophers:
    philosopher.start()
for philosopher in philosophers:
    philosopher.join()
```
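The resource-hierarchy strategy looks like this in Rust, as a std-only sketch where each fork is a `Mutex<()>` and every philosopher locks the lower-numbered fork first. The function `dine` and the meal counts are illustrative; eating is reduced to incrementing a counter.

```rust
use std::sync::Mutex;
use std::thread;

/// Each of `philosophers` threads eats `meals` times; returns meals eaten per philosopher.
fn dine(philosophers: usize, meals: usize) -> Vec<usize> {
    assert!(philosophers >= 2, "need at least two forks");
    // Fork i sits between philosopher i and philosopher i + 1.
    let forks: Vec<Mutex<()>> = (0..philosophers).map(|_| Mutex::new(())).collect();

    thread::scope(|s| {
        let mut handles = Vec::new();
        for id in 0..philosophers {
            let forks = &forks;
            handles.push(s.spawn(move || {
                let left = id;
                let right = (id + 1) % philosophers;
                // Resource hierarchy: lock the lower-numbered fork first, so no cycle
                // of philosophers can each hold one fork while waiting for another.
                let (first, second) = (left.min(right), left.max(right));
                let mut eaten: usize = 0;
                for _ in 0..meals {
                    let _first = forks[first].lock().unwrap();
                    let _second = forks[second].lock().unwrap();
                    eaten += 1; // "eating" while holding both forks
                    // Both guards drop at the end of each iteration, returning the forks.
                }
                eaten
            }));
        }
        handles.into_iter().map(|h| h.join().unwrap()).collect()
    })
}

fn main() {
    println!("meals eaten: {:?}", dine(5, 3));
}
```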

9.2. Readers-Writers Problem

The Readers-Writers Problem addresses the situation where multiple processes need to read and write shared data. The challenge lies in ensuring that readers can access the data simultaneously while writers have exclusive access.

Key concepts:

- Readers: Can read the data concurrently.

- Writers: Require exclusive access to modify the data.

- Priority: Deciding whether to prioritize readers or writers can affect performance.

To solve the Readers-Writers Problem, various strategies can be implemented:

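- First Readers-Writers Solution: Allow multiple readers but block writers until all readers finish. Readers are favored, so a steady stream of readers can starve writers.

- Second Readers-Writers Solution: Prioritize writers: once a writer is waiting, new readers are held back, so the writer gets access as soon as the current readers finish.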

- Read-Write Locks: Use specialized locks that allow multiple readers or a single writer.

Example code using read-write locks:

```python
import threading

class ReadWriteLock:
    """Readers-preference lock: writers may starve while readers keep arriving."""

    def __init__(self):
        self.readers = 0
        self.lock = threading.Lock()        # protects the readers counter
        self.write_lock = threading.Lock()  # held by one writer or by the group of readers

    def acquire_read(self):
        with self.lock:
            self.readers += 1
            if self.readers == 1:
                # The first reader locks out writers.
                self.write_lock.acquire()

    def release_read(self):
        with self.lock:
            self.readers -= 1
            if self.readers == 0:
                # The last reader lets writers in; a plain Lock may be released by any thread.
                self.write_lock.release()

    def acquire_write(self):
        self.write_lock.acquire()

    def release_write(self):
        self.write_lock.release()

rw_lock = ReadWriteLock()

def reader():
    rw_lock.acquire_read()
    try:
        print("Reading data.")
    finally:
        rw_lock.release_read()

def writer():
    rw_lock.acquire_write()
    try:
        print("Writing data.")
    finally:
        rw_lock.release_write()
```
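Rust's standard library already provides a read-write lock, `std::sync::RwLock`, which allows many readers or one writer at a time. Its priority policy (reader or writer preference) depends on the operating system's implementation, so code should not rely on either. A minimal sketch with one writer and several readers; the function name and iteration counts are illustrative.

```rust
use std::sync::RwLock;
use std::thread;

/// One writer increments a shared value while `readers` threads read it.
/// Returns (final value, total number of reads performed).
fn readers_and_writer(readers: usize, writes: u64) -> (u64, usize) {
    let config = RwLock::new(0u64);
    thread::scope(|s| {
        // Writer: each update takes the lock exclusively.
        let writer = s.spawn(|| {
            for _ in 0..writes {
                *config.write().unwrap() += 1;
            }
        });
        // Readers: many threads may hold the read lock at the same time.
        let mut reader_handles = Vec::new();
        for _ in 0..readers {
            reader_handles.push(s.spawn(|| {
                let mut seen: usize = 0;
                for _ in 0..100 {
                    let value = *config.read().unwrap();
                    // Readers only ever see values a writer has fully committed.
                    assert!(value <= writes);
                    seen += 1;
                }
                seen
            }));
        }
        writer.join().unwrap();
        let reads: usize = reader_handles.into_iter().map(|h| h.join().unwrap()).sum();
        let final_value = *config.read().unwrap();
        (final_value, reads)
    })
}

fn main() {
    println!("{:?}", readers_and_writer(4, 50));
}
```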

9.3. Producer-Consumer Pattern

The Producer-Consumer Pattern is a classic synchronization problem where producers generate data and place it into a buffer, while consumers retrieve and process that data. The challenge is to ensure that the buffer does not overflow (when producers produce too quickly) or underflow (when consumers consume too quickly).

Key concepts:

- Buffer: A shared resource that holds data produced by producers.

- Synchronization: Ensuring that producers and consumers operate without conflicts.

To implement the Producer-Consumer Pattern, you can use semaphores or condition variables:

- Bounded Buffer: Use a fixed-size buffer to limit the number of items.

- Semaphores: Use semaphores to signal when the buffer is full or empty.

Example code using a bounded buffer:

```python
import random
import threading
import time

buffer = []
buffer_size = 5
buffer_lock = threading.Lock()             # protects the buffer list
empty = threading.Semaphore(buffer_size)   # counts free slots
full = threading.Semaphore(0)              # counts filled slots

def producer(count=10):
    for _ in range(count):
        item = random.randint(1, 100)
        empty.acquire()          # wait for a free slot (prevents overflow)
        with buffer_lock:
            buffer.append(item)
            print(f"Produced {item}.")
        full.release()           # signal one more filled slot
        time.sleep(random.random())

def consumer(count=10):
    for _ in range(count):
        full.acquire()           # wait for a filled slot (prevents underflow)
        with buffer_lock:
            item = buffer.pop(0)
            print(f"Consumed {item}.")
        empty.release()          # signal one more free slot
        time.sleep(random.random())

# Matching counts so that both threads finish.
threading.Thread(target=producer).start()
threading.Thread(target=consumer).start()
```
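The semaphore version above is one option. The text also mentions condition variables, and a minimal sketch of the same bounded buffer built on them could look like the following. It assumes one lock shared by two conditions (`_not_full` and `_not_empty`); the class name `BoundedBuffer` is illustrative. In practice, Python's standard `queue.Queue(maxsize=...)` already provides a blocking bounded buffer.

```python
import threading
from collections import deque

class BoundedBuffer:
    """Bounded FIFO buffer built on one lock and two condition variables."""

    def __init__(self, capacity):
        self._items = deque()
        self._capacity = capacity
        lock = threading.Lock()
        self._not_full = threading.Condition(lock)   # producers wait here
        self._not_empty = threading.Condition(lock)  # consumers wait here

    def put(self, item):
        with self._not_full:
            # Re-check in a loop: by the time wait() returns, another
            # thread may already have changed the buffer.
            while len(self._items) >= self._capacity:
                self._not_full.wait()
            self._items.append(item)
            self._not_empty.notify()  # wake one waiting consumer

    def get(self):
        with self._not_empty:
            while not self._items:
                self._not_empty.wait()
            item = self._items.popleft()
            self._not_full.notify()   # wake one waiting producer
            return item
```

Because both conditions share one lock, `put` can notify consumers, and `get` can notify producers, without acquiring a second lock.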


9.4. Implementing a Thread-Safe Singleton

A Singleton is a design pattern that restricts the instantiation of a class to a single instance. In multi-threaded applications, that instance must be created in a thread-safe manner. Otherwise, two threads can race through the creation code and each build its own instance. Here are some common approaches to implementing a thread-safe Singleton (the examples are in Java):

- Eager Initialization: The instance is created when the class is initialized, and the JVM's class-initialization guarantees make this thread-safe. The approach is simple, but it wastes resources if the instance is never used.

```java
public class Singleton {
    private static final Singleton instance = new Singleton();

    private Singleton() {}

    public static Singleton getInstance() {
        return instance;
    }
}
```

- Lazy Initialization with Synchronization: The instance is created only when it is first needed, and the synchronized method makes its creation thread-safe. The drawback is that every call to getInstance() acquires the lock, even after the instance exists.

```java
public class Singleton {
    private static Singleton instance;

    private Singleton() {}

    public static synchronized Singleton getInstance() {
        if (instance == null) {
            instance = new Singleton();
        }
        return instance;
    }
}
```

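- Double-Checked Locking: This approach reduces locking overhead. It first checks whether the instance is null without synchronization, and it acquires the lock only if the instance is null. The field must be declared volatile (Java 5 and later). Without volatile, another thread may observe a reference to a partially constructed object.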

```java
public class Singleton {
    // volatile ensures other threads never see a partially constructed instance.
    private static volatile Singleton instance;

    private Singleton() {}

    public static Singleton getInstance() {
        if (instance == null) {                  // first check, no lock
            synchronized (Singleton.class) {
                if (instance == null) {          // second check, under the lock
                    instance = new Singleton();
                }
            }
        }
        return instance;
    }
}
```

- Bill Pugh Singleton Design: This method uses a static inner helper class to hold the Singleton instance. The helper class is not initialized until getInstance() first references it. Because the JVM guarantees that class initialization is thread-safe, the instance is created lazily without explicit locking.

```java
public class Singleton {
    private Singleton() {}

    private static class SingletonHelper {
        private static final Singleton INSTANCE = new Singleton();
    }

    public static Singleton getInstance() {
        return SingletonHelper.INSTANCE;
    }
}
```
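For comparison with the Python examples earlier in this section, here is a hedged Python analogue of double-checked locking. It assumes a class-level `threading.Lock`, and the `get_instance` name is illustrative. Python has no `volatile` keyword. In this sketch, the second check runs under the lock, and the class attribute is assigned only after `cls()` has returned. A module-level instance is often the simpler choice in Python, because a module is executed once per interpreter and then cached in `sys.modules`.

```python
import threading

class Singleton:
    """Lazily created, thread-safe singleton using double-checked locking."""

    _instance = None
    _lock = threading.Lock()

    def __init__(self):
        # Hypothetical expensive setup would go here.
        self.created_by = threading.current_thread().name

    @classmethod
    def get_instance(cls):
        # Fast path: no locking once the instance exists.
        if cls._instance is None:
            with cls._lock:
                # Second check: another thread may have created the
                # instance while this one was waiting for the lock.
                if cls._instance is None:
                    cls._instance = cls()
        return cls._instance
```

Every caller, from any thread, receives the same object from `Singleton.get_instance()`.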

10. Performance Optimization and Profiling

Performance optimization is essential in software development to ensure that applications run efficiently and effectively. Profiling helps identify bottlenecks and areas for improvement. Here are some strategies for performance optimization:

- Code Optimization: Refactor code to eliminate unnecessary computations and improve algorithm efficiency.

- Memory Management: Use memory efficiently by avoiding memory leaks and unnecessary object creation.

- Concurrency: Use multi-threading to improve performance, especially in I/O-bound applications, where threads can overlap the time spent waiting on I/O.

- Caching: Implement caching mechanisms to store frequently accessed data, reducing the need for repeated calculations or database queries.

- Database Optimization: Optimize database queries and use indexing to speed up data retrieval.

- Profiling Tools: Use profiling tools like JProfiler, VisualVM, or YourKit to analyze application performance and identify bottlenecks.
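As a minimal illustration of the Caching point, Python's standard `functools.lru_cache` memoizes a function's results in memory. The `slow_lookup` function below is hypothetical and stands in for an expensive database or network call.

```python
import time
from functools import lru_cache

@lru_cache(maxsize=256)
def slow_lookup(key):
    """Hypothetical expensive call (for example, a database query)."""
    time.sleep(0.05)  # simulate latency
    return key.upper()

if __name__ == "__main__":
    slow_lookup("alpha")              # miss: pays the 50 ms cost
    slow_lookup("alpha")              # hit: served from the cache
    print(slow_lookup.cache_info())
```

`lru_cache` keeps its internal state coherent across threads. However, if two threads request the same uncached key at the same time, the wrapped function may run more than once. Cached entries also never expire on their own, so data that changes needs `cache_clear()` or a time-based cache.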

10.1. Benchmarking Concurrent Code

Benchmarking concurrent code is crucial to understand its performance under various conditions. Here are steps to effectively benchmark concurrent code:

- Define Metrics: Determine what metrics are important (e.g., response time, throughput).

- Choose a Benchmarking Framework: Use frameworks like JMH (Java Microbenchmark Harness) for Java applications.

- Set Up Test Scenarios: Create scenarios that simulate real-world usage patterns.

- Run Benchmarks: Execute the benchmarks multiple times to gather reliable data.

- Analyze Results: Review the results to identify performance bottlenecks and areas for improvement.

- Iterate: Make necessary code optimizations and re-run benchmarks to measure improvements.
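The same steps apply outside Java. The following minimal Python sketch assumes a simulated I/O-bound workload (`io_task`, which only sleeps) and uses the standard `time.perf_counter` and `statistics` modules. It runs one warm-up pass, repeats each measurement, and reports the median so that a single noisy run does not dominate. The worker counts are arbitrary.

```python
import statistics
import time
from concurrent.futures import ThreadPoolExecutor

def io_task(_):
    # Hypothetical stand-in for an I/O-bound call (network, disk).
    time.sleep(0.01)

def run_workload(num_workers, num_tasks=40):
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        list(pool.map(io_task, range(num_tasks)))

def benchmark(func, *args, repeats=5, warmup=1):
    """Return the median wall-clock time of func(*args) in seconds."""
    for _ in range(warmup):
        func(*args)  # warm-up runs are not measured
    timings = []
    for _ in range(repeats):
        start = time.perf_counter()
        func(*args)
        timings.append(time.perf_counter() - start)
    return statistics.median(timings)

if __name__ == "__main__":
    for workers in (1, 4, 16):
        elapsed = benchmark(run_workload, workers)
        print(f"{workers:>2} workers: median {elapsed:.3f}s")
```

For CPU-bound pure-Python code, the global interpreter lock (GIL) in standard CPython builds usually prevents extra threads from shortening wall-clock time. This harness would then show little improvement, which is itself a useful benchmark result.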

By following these guidelines, developers can ensure that their applications are not only functional but also optimized for performance in a concurrent environment.

At Rapid Innovation, we understand the importance of these principles in delivering high-quality software solutions. Our expertise in AI and Blockchain development allows us to implement these best practices effectively, ensuring that your applications are robust, efficient, and scalable. By partnering with us, you can expect greater ROI through optimized performance, reduced operational costs, and enhanced user satisfaction. Let us help you achieve your goals efficiently and effectively.

10.2. Identifying and Resolving Bottlenecks

Bottlenecks in a system can significantly hinder performance and efficiency. Identifying and resolving these bottlenecks is crucial for optimizing application performance, and at Rapid Innovation, we specialize in helping our clients achieve this.

- Monitor Performance Metrics: We use Application Performance Management (APM) tools to track response times, CPU usage, memory consumption, and I/O operations, ensuring that your application runs smoothly.

- Analyze Logs: Our team reviews application logs to identify slow queries, errors, or unusual patterns that may indicate a bottleneck, allowing us to proactively address issues before they escalate.

- Profile the Application: We employ profiling tools to analyze code execution and identify functions or methods that consume excessive resources, enabling targeted optimizations.

- Load Testing: By simulating high traffic, we observe how your system behaves under stress and pinpoint areas that may fail to scale, ensuring that your application can handle increased demand.

- Database Optimization: Our experts examine database queries for inefficiencies, such as missing indexes or poorly structured queries, to enhance performance.

- Code Review: We conduct regular code reviews to identify inefficient algorithms or data structures that may slow down performance, ensuring your codebase remains optimized.
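For the "Profile the Application" step, Python's built-in `cProfile` and `pstats` modules are one option. The sketch below profiles a hypothetical `workload` function and returns the top entries sorted by cumulative time. The `slow_sum` function is deliberately wasteful so that it stands out in the report.

```python
import cProfile
import io
import pstats

def slow_sum(n):
    # Deliberately inefficient: builds a full list just to sum it.
    return sum([i * i for i in range(n)])

def fast_path(n):
    return n * 2

def workload():
    total = 0
    for _ in range(50):
        total += slow_sum(10_000)
        total += fast_path(10)
    return total

def profile_top_functions(func, limit=5):
    """Run func under cProfile and return a text report of the top entries."""
    profiler = cProfile.Profile()
    profiler.enable()
    func()
    profiler.disable()
    stream = io.StringIO()
    stats = pstats.Stats(profiler, stream=stream)
    stats.sort_stats("cumulative").print_stats(limit)
    return stream.getvalue()

if __name__ == "__main__":
    print(profile_top_functions(workload))
```

Sorting by cumulative time highlights the call paths that consume the most time overall. Sorting by `"tottime"` instead highlights the functions whose own bodies are expensive.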

Resolving bottlenecks often involves:

- Refactoring Code: We optimize algorithms and data structures to improve efficiency, leading to faster application performance.

- Scaling Resources: Our team can increase server capacity or distribute load across multiple servers, ensuring your application can handle growth.

- Caching Strategies: We implement caching mechanisms to reduce load on databases and improve response times, resulting in a more responsive user experience.

10.3. Cache-Friendly Concurrent Algorithms

Cache-friendly concurrent algorithms are designed to optimize the use of CPU caches, which can significantly enhance performance in multi-threaded environments. At Rapid Innovation, we leverage these techniques to maximize efficiency for our clients.

- Data Locality: We structure data to maximize spatial and temporal locality, ensuring that frequently accessed data is stored close together in memory.

- Minimize False Sharing: Our solutions avoid situations where multiple threads modify distinct variables that happen to share a cache line, which forces unnecessary cache-line invalidations between cores.

- Use Lock-Free Data Structures: We implement data structures that allow multiple threads to operate without locks, reducing contention and improving throughput.

- Batch Processing: Our approach includes processing data in batches to minimize cache misses and improve cache utilization.

- Thread Affinity: We bind threads to specific CPU cores to take advantage of cache locality, reducing the overhead of cache misses.

Example of a cache-friendly algorithm:

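```python
def cache_friendly_sum(array):
    # Walk the sequence front to back: sequential access lets the CPU
    # prefetch the next cache line before it is needed. In CPython a list
    # stores pointers to objects, so this mainly illustrates the access
    # pattern; the benefit is largest for contiguous value arrays.
    total = 0
    for value in array:
        total += value
    return total
```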

- Use of Arrays: We prefer arrays over linked lists due to their contiguous memory allocation, which is more cache-friendly.

- Parallel Processing: Our strategies involve dividing the array into contiguous chunks and processing them in parallel. Each thread then works on its own region of memory, and threads rarely write to the same cache line.
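The chunking idea can be sketched as follows. Each worker sums its own contiguous slice into a private local total, and the partial results are combined once at the end, so workers never write to a shared accumulator. The helper names are illustrative. In CPython, the GIL prevents these threads from running Python bytecode truly in parallel, so this sketch shows the structure rather than a speedup. The same layout carries over to languages such as Rust or C, where the performance benefit applies.

```python
from concurrent.futures import ThreadPoolExecutor

def chunk_bounds(length, num_chunks):
    """Split range(length) into num_chunks contiguous [start, end) ranges."""
    base, extra = divmod(length, num_chunks)
    bounds = []
    start = 0
    for i in range(num_chunks):
        # The first `extra` chunks get one additional element.
        end = start + base + (1 if i < extra else 0)
        bounds.append((start, end))
        start = end
    return bounds

def sum_range(array, start, end):
    # Sequential walk over one contiguous slice with a private accumulator.
    total = 0
    for i in range(start, end):
        total += array[i]
    return total

def chunked_parallel_sum(array, num_workers=4):
    bounds = chunk_bounds(len(array), num_workers)
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        partials = pool.map(lambda b: sum_range(array, *b), bounds)
        # Combine the per-worker partial sums once, at the end.
        return sum(partials)

if __name__ == "__main__":
    data = list(range(1_000_000))
    print(chunked_parallel_sum(data))
```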

10.4. Scalability Analysis

Scalability analysis is essential for understanding how a system can handle increased loads and how it can be improved to accommodate growth. Rapid Innovation provides comprehensive scalability analysis to ensure your systems are future-ready.

- Vertical Scaling: We assess the potential for upgrading existing hardware (CPU, RAM) to improve performance.

- Horizontal Scaling: Our team evaluates the ability to add more machines to distribute the load effectively, ensuring your application can grow seamlessly.

- Load Balancing: We implement load balancers to distribute incoming traffic evenly across servers, preventing any single server from becoming a bottleneck.

- Microservices Architecture: We consider breaking down monolithic applications into microservices to allow independent scaling of components, enhancing flexibility and performance.

- Performance Testing: Our rigorous stress tests determine the maximum load your system can handle before performance degrades, providing valuable insights for optimization.

Key metrics to analyze include:

- Throughput: We measure the number of transactions processed in a given time frame to ensure your application meets business demands.

- Latency: We monitor how long requests take to process under varying loads, which helps identify potential performance issues.

- Resource Utilization: We track CPU, memory, and network usage to identify potential scaling issues, ensuring your infrastructure is optimized.
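The throughput and latency metrics above can be collected with a small harness. This sketch assumes a hypothetical `handle_request` that simulates I/O with a sleep, and it runs the same number of requests at increasing concurrency levels. It records only the time spent inside the handler, not the time a request waits for a free worker. A fuller harness would timestamp each request at submission.

```python
import statistics
import time
from concurrent.futures import ThreadPoolExecutor

def handle_request(_):
    """Hypothetical request handler; the sleep simulates I/O latency."""
    start = time.perf_counter()
    time.sleep(0.01)
    return time.perf_counter() - start

def measure(concurrency, num_requests=100):
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        latencies = list(pool.map(handle_request, range(num_requests)))
    elapsed = time.perf_counter() - start
    # quantiles(n=100) returns 99 cut points: index 49 is p50, index 94 is p95.
    cuts = statistics.quantiles(latencies, n=100)
    return {
        "concurrency": concurrency,
        "throughput_rps": num_requests / elapsed,
        "p50_ms": cuts[49] * 1000,
        "p95_ms": cuts[94] * 1000,
    }

if __name__ == "__main__":
    for level in (1, 8, 32):
        result = measure(level)
        print(
            f"concurrency={result['concurrency']:>2} "
            f"throughput={result['throughput_rps']:.0f} req/s "
            f"p50={result['p50_ms']:.1f} ms p95={result['p95_ms']:.1f} ms"
        )
```

If throughput stops rising while p95 latency climbs as concurrency increases, the system has probably reached a scaling limit worth investigating.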