Category

Artificial Intelligence

1. Introduction

Artificial Intelligence (AI) has been a transformative force in the 21st century, revolutionizing industries, enhancing human capabilities, and reshaping the way we interact with technology. From its early conceptual stages in the mid-20th century to the sophisticated systems we see today, AI has made significant strides. Initially, AI was limited to simple rule-based systems and basic machine learning algorithms. However, with the advent of big data, increased computational power, and advanced algorithms, AI has evolved into a powerful tool capable of performing complex tasks that were once thought to be the exclusive domain of human intelligence.

The journey of AI has been marked by several key milestones. The popularization of backpropagation for training multi-layer neural networks in the 1980s, the rise of deep learning in the 2010s, and recent breakthroughs in natural language processing and computer vision have all contributed to the rapid advancement of AI. Today, AI is not just a theoretical concept but a practical tool used in applications ranging from healthcare and finance to entertainment and transportation.

As we move into 2024, the landscape of AI continues to evolve at an unprecedented pace. The integration of AI into everyday life is becoming more seamless, and its applications are expanding into new and exciting areas. This evolution is driven by continuous technological advancements, increased investment in AI research and development, and a growing recognition of the potential benefits of AI across different sectors. In this context, it is essential to understand how AI is evolving and what technological advancements are shaping its future.

2. How is AI Evolving in 2024?

The evolution of AI in 2024 is characterized by several key trends and developments. One of the most significant trends is the increasing integration of AI into various aspects of daily life. From smart home devices and personal assistants to autonomous vehicles and advanced healthcare systems, AI is becoming an integral part of our everyday experiences. This integration is facilitated by advancements in AI technologies, increased computational power, and the proliferation of connected devices.

Another important trend is the growing emphasis on ethical AI. As AI systems become more powerful and pervasive, there is a heightened awareness of the ethical implications of AI. Issues such as bias in AI algorithms, data privacy, and the potential for AI to be used in harmful ways are becoming central to discussions about the future of AI. In response, there is a growing focus on developing ethical guidelines and frameworks to ensure that AI is used responsibly and for the benefit of all.

In addition to these trends, AI is also evolving in terms of its capabilities. Advances in machine learning, natural language processing, and computer vision are enabling AI systems to perform increasingly complex tasks with greater accuracy and efficiency. For example, AI systems are now capable of understanding and generating human language with a high degree of fluency, recognizing and interpreting images and videos, and making sophisticated predictions based on large datasets.

Furthermore, the evolution of AI is being driven by increased investment in AI research and development. Governments, corporations, and academic institutions around the world are investing heavily in AI, recognizing its potential to drive economic growth and innovation. This investment is leading to rapid advancements in AI technologies and the development of new applications and use cases.

2.1. Technological Advancements

Technological advancements are at the heart of the evolution of AI in 2024. One of the most significant advancements is in the field of machine learning. Machine learning algorithms are becoming more sophisticated, enabling AI systems to learn from data more effectively and make more accurate predictions. This is being facilitated by the development of new algorithms, the availability of large datasets, and increased computational power.

Another key advancement is in the field of natural language processing (NLP). NLP technologies have made significant strides in recent years, enabling AI systems to understand and generate human language with a high degree of accuracy. This has led to the development of advanced chatbots, virtual assistants, and other AI applications that can interact with humans in a natural and intuitive way.

Computer vision is another area where significant advancements are being made. AI systems are now capable of recognizing and interpreting images and videos with a high degree of accuracy. This is being used in a wide range of applications, from autonomous vehicles and surveillance systems to medical imaging and augmented reality.

In addition to these advancements, there are also significant developments in the field of AI hardware. The development of specialized AI chips and processors is enabling AI systems to perform complex computations more efficiently. This is leading to the development of more powerful and efficient AI systems that can be used in a wide range of applications.

Furthermore, advancements in quantum computing may also have a significant impact on the evolution of AI. For certain classes of problems, quantum computers could in principle perform computations far faster than classical computers. If this potential is realized at scale, it could lead to significant breakthroughs in AI, enabling the development of more advanced AI systems and applications.

In conclusion, the evolution of AI in 2024 is being driven by a combination of technological advancements, increased investment in AI research and development, and a growing recognition of the potential benefits of AI. As AI continues to evolve, it is likely to have an increasingly significant impact on various aspects of our lives, from the way we work and communicate to the way we live and interact with the world around us.

2.2. Ethical Considerations

Ethical considerations in technology, particularly in fields like artificial intelligence (AI) and machine learning, are paramount to ensure that advancements benefit society without causing harm. As AI systems become more integrated into daily life, the ethical implications of their use must be carefully examined. One of the primary ethical concerns is bias. AI systems are trained on large datasets, and if these datasets contain biased information, the AI can perpetuate and even amplify these biases. This can lead to unfair treatment of individuals based on race, gender, or other characteristics. For instance, facial recognition technology has been shown to have higher error rates for people of color, which can lead to wrongful accusations or other serious consequences.
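
One simple way to surface this kind of disparity is to compare a model's error rate across groups on a labeled evaluation set. The Python sketch below is a minimal illustration under stated assumptions: the helper name `error_rates_by_group` and the toy labels are hypothetical, and a real audit would also examine false positive and false negative rates separately and account for small group sizes.

```python
from collections import defaultdict


def error_rates_by_group(y_true, y_pred, groups):
    """Return {group: fraction of misclassified examples in that group}.

    Hypothetical helper for illustration; the three sequences must be aligned.
    """
    errors = defaultdict(int)
    totals = defaultdict(int)
    for truth, prediction, group in zip(y_true, y_pred, groups):
        totals[group] += 1
        errors[group] += int(truth != prediction)
    return {group: errors[group] / totals[group] for group in totals}


if __name__ == "__main__":
    # Invented evaluation data: the model errs more often on group "B".
    y_true = [1, 0, 1, 1, 0, 1]
    y_pred = [1, 0, 1, 0, 1, 1]
    groups = ["A", "A", "A", "B", "B", "B"]
    print(error_rates_by_group(y_true, y_pred, groups))
```

A large gap between groups is a signal to investigate the training data and the model; it does not by itself identify the cause.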

Another significant ethical consideration is privacy. AI systems often require vast amounts of data to function effectively. This data can include personal information, which raises concerns about how this information is collected, stored, and used. There is a risk that personal data could be misused or fall into the wrong hands, leading to breaches of privacy. Ensuring that data is handled responsibly and that individuals' privacy is protected is crucial.

Transparency and accountability are also key ethical issues. AI systems can be complex and opaque, making it difficult for users to understand how decisions are made. This lack of transparency can lead to a lack of trust in AI systems. It is essential that AI systems are designed to be as transparent as possible, with clear explanations of how decisions are made. Additionally, there must be mechanisms in place to hold developers and users of AI systems accountable for their actions.

The potential for job displacement is another ethical concern. As AI systems become more capable, there is a risk that they could replace human workers in various industries. This could lead to significant economic and social disruption. It is important to consider how to mitigate these impacts, such as through retraining programs or other support for displaced workers.

Finally, the ethical use of AI in decision-making processes is a critical consideration. AI systems are increasingly being used to make decisions in areas such as healthcare, criminal justice, and finance. These decisions can have significant impacts on individuals' lives, and it is essential that they are made fairly and ethically. This includes ensuring that AI systems are used to support human decision-making rather than replace it entirely, and that there are safeguards in place to prevent misuse.

In conclusion, ethical considerations in AI and technology are multifaceted and complex. Addressing these issues requires a collaborative effort from developers, policymakers, and society as a whole. By prioritizing ethics in the development and deployment of AI systems, we can ensure that these technologies are used in ways that are fair, transparent, and beneficial to all.

3. What is Advanced Natural Language Processing (NLP)?

Advanced Natural Language Processing (NLP) is a subfield of artificial intelligence that focuses on the interaction between computers and human language. It involves the development of algorithms and models that enable computers to understand, interpret, and generate human language in a way that is both meaningful and useful. NLP combines computational linguistics, which models the structure and meaning of language, with machine learning, which allows systems to learn from data and improve over time.

One of the primary goals of advanced NLP is to bridge the gap between human communication and computer understanding. This involves a range of tasks, from basic ones like tokenization and part-of-speech tagging to more complex tasks like sentiment analysis, machine translation, and question answering. Advanced NLP goes beyond simple keyword matching and involves understanding the context, semantics, and nuances of language.

The scope of advanced natural language processing is vast and encompasses various applications. In the realm of text analysis, advanced NLP can be used to extract insights from large volumes of unstructured text data, such as social media posts, customer reviews, and news articles. This can help businesses understand customer sentiment, identify trends, and make data-driven decisions. In healthcare, advanced NLP can be used to analyze clinical notes and medical records, aiding in disease diagnosis and treatment planning.

Another significant application of advanced NLP is in the development of conversational agents and chatbots. These systems can interact with users in natural language, providing information, answering questions, and performing tasks. Advanced NLP techniques enable these systems to understand user intent, manage dialogue, and generate coherent and contextually appropriate responses.

Machine translation is another area where advanced NLP has made significant strides. Systems like Google Translate use sophisticated NLP models to translate text from one language to another, taking into account the nuances and context of the source language to produce accurate translations.

Advanced NLP also plays a crucial role in information retrieval and search engines. By understanding the context and intent behind user queries, advanced NLP can improve the relevance and accuracy of search results. This involves not only matching keywords but also understanding the semantics of the query and the content of the documents being searched.

In summary, advanced NLP is a dynamic and rapidly evolving field that seeks to enable computers to understand and generate human language. Its applications are diverse and have the potential to transform various industries by making human-computer interaction more natural and intuitive.

3.1. Definition and Scope

Natural Language Processing (NLP) is defined as the branch of artificial intelligence that focuses on the interaction between computers and humans through natural language. The primary objective of NLP is to enable computers to understand, interpret, and generate human language in a way that is both meaningful and useful. This involves a combination of computational linguistics, which models the structure and meaning of language, and machine learning, which allows systems to learn from data and improve over time.

The scope of advanced natural language processing is broad and encompasses a wide range of tasks and applications. At its core, advanced NLP involves several fundamental tasks, including tokenization, which is the process of breaking down text into individual words or tokens; part-of-speech tagging, which involves identifying the grammatical categories of words; and named entity recognition, which involves identifying and classifying entities such as names, dates, and locations within text.
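
As a minimal sketch of the first of these tasks, the Python function below splits text into word and punctuation tokens with a regular expression. The pattern is an assumption chosen for illustration; production tokenizers, including the subword tokenizers used by modern language models, handle contractions, URLs, and Unicode far more carefully.

```python
import re

# Illustrative pattern (an assumption): a token is either a run of word
# characters (letters, digits, underscore) or a single character that is
# neither a word character nor whitespace, such as punctuation.
TOKEN_PATTERN = re.compile(r"\w+|[^\w\s]")


def tokenize(text: str) -> list[str]:
    """Split text into word and punctuation tokens."""
    return TOKEN_PATTERN.findall(text)


if __name__ == "__main__":
    print(tokenize("Hello, world!"))
    print(tokenize("NLP isn't magic."))
```

Note that the contraction "isn't" is split into three tokens ("isn", "'", "t"), which is exactly the kind of case that motivates more careful rules or learned subword vocabularies.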

Beyond these basic tasks, advanced NLP also involves more complex tasks such as sentiment analysis, which aims to determine the sentiment or emotion expressed in a piece of text; machine translation, which involves translating text from one language to another; and text summarization, which involves generating a concise summary of a longer piece of text.

One of the key challenges in advanced NLP is dealing with the ambiguity and variability of human language. Words can have multiple meanings depending on the context, and the same idea can be expressed in many different ways. Advanced NLP techniques use context and semantics to disambiguate and understand the true meaning of text.

The applications of advanced NLP are diverse and span various industries. In the business world, advanced NLP can be used for customer sentiment analysis, enabling companies to understand customer opinions and feedback from social media, reviews, and surveys. In healthcare, advanced NLP can be used to analyze clinical notes and medical records, aiding in disease diagnosis and treatment planning. In the legal field, advanced NLP can be used to analyze legal documents and contracts, helping lawyers and legal professionals to identify relevant information and make informed decisions.

In summary, the definition and scope of advanced NLP encompass a wide range of tasks and applications aimed at enabling computers to understand and generate human language. By combining computational linguistics and machine learning, advanced NLP seeks to bridge the gap between human communication and computer understanding, making human-computer interaction more natural and intuitive.

3.2. Key Technologies

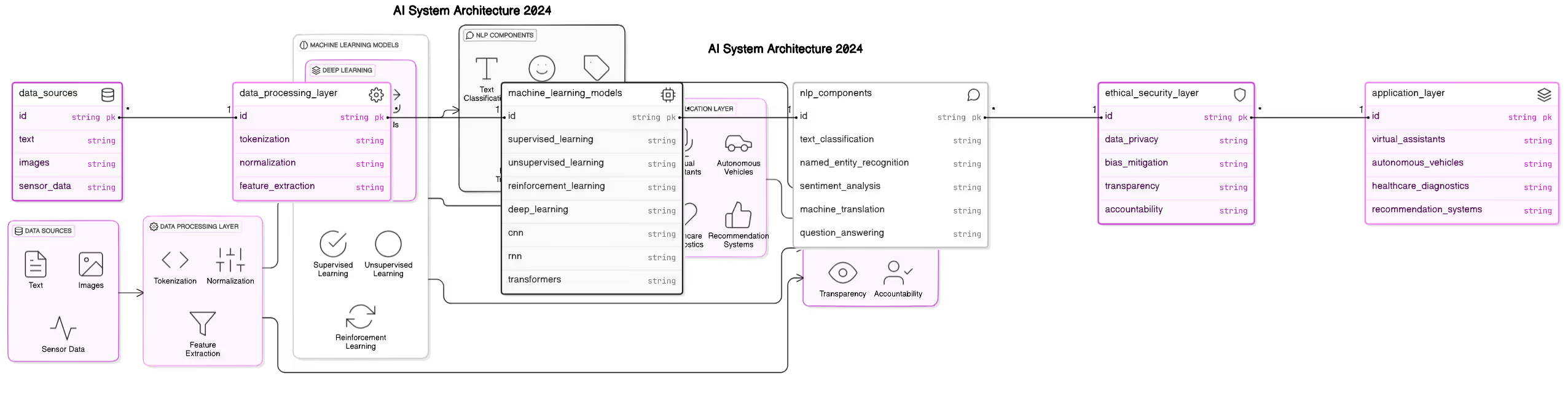

Key technologies in the realm of artificial intelligence (AI) and natural language processing (NLP) are the foundational elements that drive advancements and applications in these fields. These technologies encompass a wide range of tools, frameworks, and methodologies that enable machines to understand, interpret, and generate human language, as well as to learn from data and make intelligent decisions.

One of the most critical technologies in AI and NLP is machine learning (ML). Machine learning involves the development of algorithms that allow computers to learn from and make predictions or decisions based on data. Within ML, there are several subfields, including supervised learning, unsupervised learning, and reinforcement learning. Supervised learning involves training a model on a labeled dataset, where the correct output is provided for each input. This approach is commonly used for tasks such as classification and regression. Unsupervised learning, on the other hand, deals with unlabeled data and aims to find hidden patterns or intrinsic structures within the data. Clustering and dimensionality reduction are typical examples of unsupervised learning tasks. Reinforcement learning involves training an agent to make a sequence of decisions by rewarding it for desirable actions and penalizing it for undesirable ones. This approach is often used in robotics and game playing.

Deep learning, a subset of machine learning, has gained significant attention in recent years due to its success in various AI applications. Deep learning models are artificial neural networks, loosely inspired by the structure of the brain, that stack multiple layers of interconnected nodes (neurons) to process and transform data. Convolutional neural networks (CNNs) are widely used for image recognition and processing, while recurrent neural networks (RNNs) and their variants, such as long short-term memory (LSTM) networks, are commonly used for sequential data processing, including language modeling and translation.

Natural language processing itself relies on several key technologies to function effectively. Tokenization is the process of breaking down text into smaller units, such as words or phrases, which can then be analyzed. Part-of-speech tagging involves identifying the grammatical categories of words in a sentence, such as nouns, verbs, and adjectives. Named entity recognition (NER) is used to identify and classify entities within text, such as names of people, organizations, and locations. Parsing involves analyzing the grammatical structure of a sentence to understand its meaning.

Another crucial technology in NLP is word embeddings, which are dense vector representations of words. Word embeddings capture the semantic meaning of words by placing them in a continuous vector space, where words with similar meanings are located close to each other. Popular word embedding techniques include Word2Vec, GloVe, and FastText. These embeddings are used as input features for various NLP tasks, such as text classification, sentiment analysis, and machine translation.
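
The idea that similar words sit close together is usually measured with cosine similarity. The sketch below uses invented three-dimensional vectors purely for illustration (real Word2Vec, GloVe, or FastText vectors are learned from large corpora and often have a few hundred dimensions); the `EMBEDDINGS` table and its values are hypothetical.

```python
import math

# Hypothetical 3-dimensional embeddings, invented for illustration only.
EMBEDDINGS = {
    "king": [0.80, 0.65, 0.10],
    "queen": [0.78, 0.70, 0.15],
    "apple": [0.05, 0.10, 0.90],
}


def cosine_similarity(u: list[float], v: list[float]) -> float:
    """Cosine of the angle between u and v: 1.0 means same direction."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


if __name__ == "__main__":
    for word in ("queen", "apple"):
        sim = cosine_similarity(EMBEDDINGS["king"], EMBEDDINGS[word])
        print(f"king vs {word}: {sim:.3f}")
```

Cosine similarity ignores vector length and compares only direction, which is why it is a common choice for comparing embeddings.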

Transfer learning has also become a key technology in AI and NLP. Transfer learning involves pre-training a model on a large dataset and then fine-tuning it on a smaller, task-specific dataset. This approach has been particularly successful with models like BERT (Bidirectional Encoder Representations from Transformers) and GPT (Generative Pre-trained Transformer), which have achieved state-of-the-art performance on a wide range of NLP tasks.

In addition to these technologies, advancements in hardware, such as graphics processing units (GPUs) and tensor processing units (TPUs), have played a significant role in accelerating AI and NLP research. These specialized hardware components are designed to handle the computational demands of training and deploying complex machine learning models.

Overall, the key technologies in AI and NLP are continually evolving, driven by ongoing research and development. These technologies form the backbone of modern AI systems, enabling machines to process and understand human language, learn from data, and perform a wide range of intelligent tasks.

4. Types of AI and NLP Technologies

Artificial intelligence (AI) and natural language processing (NLP) encompass a broad spectrum of technologies, each designed to address specific challenges and applications. Understanding the different types of AI and NLP technologies is crucial for grasping the capabilities and limitations of these fields.

AI technologies can be broadly categorized into three types: narrow AI, general AI, and superintelligent AI. Narrow AI, also known as weak AI, is designed to perform specific tasks within a limited domain. Examples of narrow AI include virtual assistants like Siri and Alexa, recommendation systems used by Netflix and Amazon, and image recognition systems used in medical diagnostics. These systems are highly specialized and excel at their designated tasks but lack the ability to perform tasks outside their specific domain.

General AI, also known as strong AI or artificial general intelligence (AGI), refers to systems that possess the ability to understand, learn, and apply knowledge across a wide range of tasks, similar to human intelligence. AGI remains a theoretical concept and has not yet been achieved. Researchers are working towards developing AGI, but it presents significant technical and ethical challenges.

Superintelligent AI refers to AI systems that surpass human intelligence in all aspects, including creativity, problem-solving, and emotional intelligence. This type of AI is purely speculative and remains a topic of debate among researchers and ethicists. The potential implications of superintelligent AI raise important questions about control, safety, and the future of humanity.

NLP technologies can be categorized based on their applications and underlying methodologies. Some of the key types of NLP technologies include:

1. Text Classification: Text classification involves categorizing text into predefined classes or categories. This technology is used in spam detection, sentiment analysis, and topic categorization. Machine learning models, such as support vector machines (SVM) and neural networks, are commonly used for text classification tasks.

2. Named Entity Recognition (NER): NER is the process of identifying and classifying named entities, such as names of people, organizations, locations, and dates, within text. NER is used in information extraction, question answering, and text summarization. Techniques like conditional random fields (CRF) and deep learning models are often employed for NER tasks.

3. Machine Translation: Machine translation involves automatically translating text from one language to another. This technology is used in applications like Google Translate and multilingual communication tools. Neural machine translation (NMT) models, such as those built on the Transformer architecture, have significantly improved the quality of machine translation in recent years.

4. Sentiment Analysis: Sentiment analysis aims to determine the sentiment or emotion expressed in a piece of text, such as positive, negative, or neutral. This technology is used in social media monitoring, customer feedback analysis, and market research. Techniques like lexicon-based approaches and deep learning models are commonly used for sentiment analysis.

5. Question Answering: Question answering systems are designed to provide accurate answers to user queries based on a given context or knowledge base. These systems are used in virtual assistants, search engines, and customer support. Deep learning models, such as BERT and GPT, have achieved state-of-the-art performance in question answering tasks.

6. Text Summarization: Text summarization involves generating a concise summary of a longer text while preserving its key information. This technology is used in news aggregation, document summarization, and content curation. Techniques like extractive summarization and abstractive summarization are employed for this task.

7. Speech Recognition: Speech recognition technology converts spoken language into written text. This technology is used in voice assistants, transcription services, and accessibility tools. Deep learning models, such as recurrent neural networks (RNNs), convolutional neural networks (CNNs), and increasingly Transformer-based models, are commonly used for speech recognition.
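
The lexicon-based approach to sentiment analysis mentioned in item 4 can be sketched in a few lines of Python. The tiny lexicon, the negation rule (flip the score of the word immediately after "not", "never", or "no"), and the function name are all assumptions made for illustration; practical lexicons are much larger, and deep learning models generally handle context better.

```python
import re

# Hypothetical sentiment lexicon: word -> score. Real lexicons hold thousands
# of scored entries.
LEXICON = {
    "good": 1, "great": 2, "excellent": 3, "love": 2,
    "bad": -1, "poor": -2, "terrible": -3, "hate": -2,
}
NEGATORS = {"not", "never", "no"}


def sentiment(text: str) -> str:
    """Label text positive, negative, or neutral by summing lexicon scores.

    A negator flips the score of the word that immediately follows it.
    """
    tokens = re.findall(r"[a-z']+", text.lower())
    score = 0
    for i, token in enumerate(tokens):
        value = LEXICON.get(token, 0)
        if i > 0 and tokens[i - 1] in NEGATORS:
            value = -value
        score += value
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


if __name__ == "__main__":
    for review in ("I love this, it is great", "The service was not good"):
        print(review, "->", sentiment(review))
```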

These are just a few examples of the diverse range of AI and NLP technologies. Each type of technology has its own set of challenges and applications, and ongoing research continues to push the boundaries of what is possible in these fields.

4.1. Machine Learning Models

Machine learning models are the backbone of many AI and NLP technologies, enabling systems to learn from data and make intelligent decisions. These models can be broadly categorized into several types based on their learning paradigms and architectures.

Supervised learning models are trained on labeled data, where each input is paired with the correct output. The goal is to learn a mapping from inputs to outputs that can be used to make predictions on new, unseen data. Common supervised learning models include linear regression, logistic regression, support vector machines (SVM), decision trees, and neural networks. Linear regression is used for predicting continuous values, while logistic regression is used for classification, most commonly binary classification. SVMs are powerful classifiers that find the hyperplane separating the classes with the largest possible margin. Decision trees are used for both classification and regression tasks, and they work by recursively splitting the data based on feature values. Neural networks, particularly deep learning models, have gained popularity due to their ability to learn complex patterns and representations from data.
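
To make "learning a mapping from inputs to outputs" concrete, here is a minimal sketch of linear regression with a single input feature, fitted by ordinary least squares using the closed-form solution (slope = covariance of x and y divided by variance of x). The function names are illustrative; libraries such as scikit-learn generalize this to many features.

```python
def fit_simple_linear_regression(xs, ys):
    """Ordinary least squares for y ~ slope * x + intercept.

    Returns (slope, intercept) minimizing the sum of squared errors.
    """
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need at least two paired observations")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    if var_x == 0:
        raise ValueError("all x values are identical; slope is undefined")
    slope = cov_xy / var_x
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(slope, intercept, x):
    """Apply the learned mapping to a new, unseen input."""
    return slope * x + intercept


if __name__ == "__main__":
    # Labeled training data generated from y = 2x + 1 (no noise, for clarity).
    slope, intercept = fit_simple_linear_regression([1, 2, 3, 4], [3, 5, 7, 9])
    print(slope, intercept, predict(slope, intercept, 10))
```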

Unsupervised learning models, on the other hand, deal with unlabeled data and aim to discover hidden patterns or structures within the data. Common unsupervised learning models include clustering algorithms, such as k-means and hierarchical clustering, and dimensionality reduction techniques, such as principal component analysis (PCA) and t-distributed stochastic neighbor embedding (t-SNE). Clustering algorithms group similar data points together based on their features, while dimensionality reduction techniques reduce the number of features in the data while preserving its essential structure.
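
As an illustration of clustering, the following is a minimal pure-Python sketch of k-means (Lloyd's algorithm), which alternates between assigning each point to its nearest centroid and moving each centroid to the mean of its assigned points. Initializing from randomly chosen data points and capping the number of iterations are simplifying assumptions; practical implementations typically use smarter seeding such as k-means++ and several restarts.

```python
import math
import random


def assign_clusters(points, centroids):
    """Assignment step: index of the nearest centroid (Euclidean) for each point."""
    return [
        min(range(len(centroids)), key=lambda j: math.dist(p, centroids[j]))
        for p in points
    ]


def update_centroids(points, labels, centroids):
    """Update step: move each centroid to the mean of its assigned points."""
    new_centroids = []
    for j, old in enumerate(centroids):
        members = [p for p, label in zip(points, labels) if label == j]
        if members:
            new_centroids.append(tuple(sum(c) / len(members) for c in zip(*members)))
        else:
            new_centroids.append(old)  # keep an empty cluster's centroid in place
    return new_centroids


def kmeans(points, k, max_iter=100, seed=0):
    """Return (centroids, labels) after convergence or max_iter iterations."""
    rng = random.Random(seed)
    centroids = [tuple(p) for p in rng.sample(points, k)]
    labels = assign_clusters(points, centroids)
    for _ in range(max_iter):
        new_centroids = update_centroids(points, labels, centroids)
        if new_centroids == centroids:  # no centroid moved: converged
            break
        centroids = new_centroids
        labels = assign_clusters(points, centroids)
    return centroids, labels


if __name__ == "__main__":
    data = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
    print(kmeans(data, k=2))
```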

Reinforcement learning models involve training an agent to make a sequence of decisions by interacting with an environment. The agent receives rewards or penalties based on its actions and learns to maximize its cumulative reward over time. Common reinforcement learning algorithms include Q-learning, deep Q-networks (DQN), and policy gradient methods. Reinforcement learning has been successfully applied to various tasks, such as game playing, robotics, and autonomous driving.

Deep learning models, a subset of machine learning models, have revolutionized the field of AI and NLP. These models consist of multiple layers of interconnected nodes (neurons) that process and transform data. Convolutional neural networks (CNNs) are widely used for image recognition and processing, while recurrent neural networks (RNNs) and their variants, such as long short-term memory (LSTM) networks and gated recurrent units (GRUs), are commonly used for sequential data processing, including language modeling and translation. The Transformer architecture, introduced in the 2017 paper "Attention Is All You Need," has become the foundation for many state-of-the-art NLP models, such as BERT and GPT. Transformers use self-attention mechanisms to capture dependencies between words in a sentence, allowing them to handle long-range dependencies more effectively than RNNs.
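
The core of self-attention is scaled dot-product attention, softmax(QK^T / sqrt(d_k))V. The pure-Python sketch below computes it for a single head with no masking. As a simplifying assumption, the query, key, and value vectors are passed in directly as plain lists; in an actual Transformer they are learned linear projections of the token embeddings, and several heads run in parallel.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def scaled_dot_product_attention(queries, keys, values):
    """Single-head attention softmax(Q K^T / sqrt(d_k)) V, with no masking.

    Row i of the output is a weighted average of the value vectors, where the
    weights reflect how strongly query i matches each key.
    """
    d_k = len(keys[0])
    weights = [softmax([dot(q, k) / math.sqrt(d_k) for k in keys]) for q in queries]
    outputs = [
        [sum(w * v[j] for w, v in zip(row, values)) for j in range(len(values[0]))]
        for row in weights
    ]
    return outputs, weights


if __name__ == "__main__":
    x = [[1.0, 0.0], [0.0, 1.0]]  # two toy "token" vectors used as Q, K, and V
    out, attn = scaled_dot_product_attention(x, x, x)
    print(attn)
    print(out)
```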

Ensemble learning models combine the predictions of multiple base models to improve overall performance. Common ensemble learning techniques include bagging, boosting, and stacking. Bagging, or bootstrap aggregating, involves training multiple models on bootstrap samples of the data (random samples drawn with replacement) and combining their predictions by averaging for regression or majority voting for classification. Random forests are a popular example of a bagging technique. Boosting involves training models sequentially, where each model focuses on correcting the errors of the previous models. Gradient boosting machines (GBM) and AdaBoost are well-known boosting algorithms. Stacking involves training a meta-model to combine the predictions of multiple base models, often leading to improved performance.
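
Bagging can be sketched compactly in Python. As an assumption for illustration, the base learner here is a hypothetical one-threshold "decision stump" on a single numeric feature with labels 0 and 1; random forests instead use full decision trees and also sample random subsets of features at each split.

```python
import random
from collections import Counter


def fit_stump(xs, ys):
    """Hypothetical base learner: a one-threshold rule on one numeric feature.

    Tries every observed value as a threshold and both label orientations,
    keeping the rule with the fewest training errors (labels are 0 or 1).
    """
    best = None
    for threshold in sorted(set(xs)):
        for left, right in ((0, 1), (1, 0)):
            errors = sum((left if x <= threshold else right) != y for x, y in zip(xs, ys))
            if best is None or errors < best[0]:
                best = (errors, threshold, left, right)
    _, threshold, left, right = best
    return lambda x: left if x <= threshold else right


def bagging(xs, ys, n_models=25, seed=0):
    """Train n_models stumps on bootstrap samples; predict by majority vote."""
    rng = random.Random(seed)
    n = len(xs)
    models = []
    for _ in range(n_models):
        # Bootstrap sample: n indices drawn uniformly with replacement.
        idx = [rng.randrange(n) for _ in range(n)]
        models.append(fit_stump([xs[i] for i in idx], [ys[i] for i in idx]))

    def predict(x):
        votes = Counter(model(x) for model in models)
        return votes.most_common(1)[0][0]

    return predict


if __name__ == "__main__":
    predict = bagging([1, 2, 3, 4, 5, 6, 7, 8], [0, 0, 0, 0, 1, 1, 1, 1])
    print(predict(0.5), predict(8.5))
```

Because each stump sees a slightly different resample, their individual thresholds vary; the vote smooths out that variance, which is the main benefit bagging offers for unstable learners.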

Transfer learning has also become an essential aspect of machine learning models, particularly in NLP. Transfer learning involves pre-training a model on a large dataset and then fine-tuning it on a smaller, task-specific dataset. This approach has been particularly successful with models like BERT (Bidirectional Encoder Representations from Transformers) and GPT (Generative Pre-trained Transformer), which have achieved state-of-the-art performance on a wide range of NLP tasks.

In summary, machine learning models are diverse and versatile, each with its own strengths and applications. From supervised and unsupervised learning to reinforcement learning and deep learning, these models form the foundation of modern AI and NLP systems, enabling machines to learn from data and perform a wide range of intelligent tasks.

4.2. Deep Learning Architectures

Deep learning architectures are a family of machine learning models loosely inspired by the structure and function of the human brain. These architectures are composed of multiple layers of artificial neurons that automatically and adaptively learn hierarchical representations of data. Among the most widely used deep learning architectures are Convolutional Neural Networks (CNNs), Recurrent Neural Networks (RNNs), and Generative Adversarial Networks (GANs), alongside the Transformer architecture that now underpins most state-of-the-art NLP models.

Convolutional Neural Networks (CNNs) are primarily used for image and video recognition tasks. They consist of convolutional layers that apply learned filters to the input, pooling layers that downsample the resulting feature maps to reduce their spatial dimensions, and fully connected layers that perform classification. CNNs have been highly successful in various applications, such as object detection, facial recognition, and medical image analysis. The hierarchical structure of CNNs allows them to capture spatial hierarchies in images, making them particularly effective for visual data. Popular CNN architectures include VGG16, VGG19, Inception V3, and ResNet.
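
The two operations at the heart of a CNN layer, convolution and pooling, can be sketched without any framework. In a real CNN the kernel weights are learned during training; here a hand-picked vertical-edge kernel is assumed for illustration, and the convolution uses stride 1 with no padding.

```python
def conv2d(image, kernel):
    """'Valid' 2D cross-correlation with stride 1 and no padding.

    Deep learning libraries call this operation "convolution"; the kernel is
    not flipped.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b] for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(feature_map):
    """Element-wise ReLU nonlinearity."""
    return [[max(0, value) for value in row] for row in feature_map]


def max_pool2d(feature_map, size=2):
    """Non-overlapping max pooling; rows/columns that do not fill a window are dropped."""
    return [
        [
            max(feature_map[i + a][j + b] for a in range(size) for b in range(size))
            for j in range(0, len(feature_map[0]) - size + 1, size)
        ]
        for i in range(0, len(feature_map) - size + 1, size)
    ]


if __name__ == "__main__":
    # 5x5 image: dark (0) on the left, bright (1) on the right.
    image = [[0, 0, 1, 1, 1] for _ in range(5)]
    # Hand-picked vertical-edge kernel (in a real CNN this would be learned).
    kernel = [[-1, 1], [-1, 1]]
    feature_map = relu(conv2d(image, kernel))
    print(feature_map)  # the edge shows up as a column of 2s
    print(max_pool2d(feature_map))
```

Pooling halves each spatial dimension here (4x4 to 2x2) while keeping the strongest edge response, which is how CNNs build compact, increasingly abstract representations.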

Recurrent Neural Networks (RNNs) are designed for sequential data, such as time series, natural language, and speech. Unlike traditional feedforward neural networks, RNNs have connections that form directed cycles, allowing them to maintain a memory of previous inputs. This makes RNNs well-suited for tasks that require context, such as language modeling, machine translation, and speech recognition. However, RNNs can suffer from issues like vanishing and exploding gradients, which can hinder their ability to learn long-term dependencies. Long Short-Term Memory (LSTM) networks and Gated Recurrent Units (GRUs) are variants of RNNs that address these issues by incorporating gating mechanisms to control the flow of information.
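
The vanishing-gradient problem can be seen in a minimal scalar RNN. The sketch below assumes a single hidden unit with update h_t = tanh(w_x * x_t + w_h * h_{t-1} + b); by the chain rule, the gradient of the last hidden state with respect to the initial one is the product of w_h * (1 - h_t^2) over all time steps, which shrinks toward zero when each factor is below 1 in magnitude. The weights are arbitrary illustrative values, and LSTM/GRU gating is not shown.

```python
import math


def rnn_forward(inputs, w_x, w_h, b=0.0, h0=0.0):
    """Scalar Elman RNN: h_t = tanh(w_x * x_t + w_h * h_{t-1} + b).

    Returns the hidden state after every time step.
    """
    h = h0
    states = []
    for x in inputs:
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states


def grad_last_wrt_initial(states, w_h):
    """d h_T / d h_0 by the chain rule: product of w_h * (1 - h_t**2) over t."""
    grad = 1.0
    for h in states:
        grad *= w_h * (1.0 - h * h)
    return grad


if __name__ == "__main__":
    states = rnn_forward([1.0] * 50, w_x=0.5, w_h=0.5)
    for steps in (1, 5, 20, 50):
        print(steps, grad_last_wrt_initial(states[:steps], w_h=0.5))
```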

Generative Adversarial Networks (GANs) are a class of deep learning models that consist of two neural networks: a generator and a discriminator. The generator creates synthetic data, while the discriminator evaluates the authenticity of the data. The two networks are trained together, typically by alternating updates, in a process known as adversarial training, where the generator aims to produce data that is indistinguishable from real data, and the discriminator aims to correctly identify real and synthetic data. GANs have been used for various applications, including image generation, style transfer, and data augmentation. They have also shown promise in generating realistic synthetic data for training other machine learning models.

Deep learning architectures have revolutionized many fields by enabling the automatic extraction of features from raw data, reducing the need for manual feature engineering. They have achieved state-of-the-art performance in numerous tasks, such as image classification, speech recognition, and natural language processing. However, deep learning models are often computationally intensive and require large amounts of labeled data for training. Additionally, they can be prone to overfitting and may lack interpretability, making it challenging to understand their decision-making processes.

4.3. Reinforcement Learning

Reinforcement learning (RL) is a type of machine learning where an agent learns to make decisions by interacting with an environment. The agent receives feedback in the form of rewards or penalties based on its actions, and its goal is to maximize the cumulative reward over time. Unlike supervised learning, where the model is trained on a fixed dataset, reinforcement learning involves learning from the consequences of actions in a dynamic environment.

The core components of a reinforcement learning system include the agent, the environment, the state, the action, and the reward. The agent observes the current state of the environment and selects an action based on a policy, which is a mapping from states to actions. The environment then transitions to a new state and provides a reward to the agent. The agent updates its policy based on the received reward and the new state, aiming to improve its future actions.

There are several key algorithms in reinforcement learning, including Q-learning, Deep Q-Networks (DQNs), and policy gradient methods. Q-learning is a model-free algorithm that learns the value of state-action pairs, known as Q-values. The agent uses these Q-values to select actions that maximize the expected cumulative reward. DQNs extend Q-learning by using deep neural networks to approximate the Q-values, enabling the agent to handle high-dimensional state spaces, such as images. Policy gradient methods, on the other hand, optimize the policy directly by adjusting its parameters (often the weights of a neural network) in the direction that increases the expected reward. These methods are particularly useful for continuous action spaces and complex tasks.
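
The agent-environment loop and the tabular Q-learning update, Q(s, a) ← Q(s, a) + α[r + γ·max_a' Q(s', a') − Q(s, a)], can be sketched in a few lines. The environment below is a hypothetical five-state "chain" invented for illustration (the agent starts at state 0 and earns a reward of 1 only on reaching state 4), and the hyperparameters (`alpha`, `gamma`, `epsilon`, episode count) are assumptions chosen for a quick, deterministic demo rather than recommended values.

```python
import random

N_STATES = 5        # states 0..4; reaching state 4 ends the episode
ACTIONS = (0, 1)    # 0 = move left, 1 = move right

def env_step(state, action):
    """Toy deterministic chain: reward 1.0 only when the goal state is reached."""
    nxt = max(0, state - 1) if action == 0 else state + 1
    done = nxt == N_STATES - 1
    return nxt, (1.0 if done else 0.0), done

def choose_action(q, state, epsilon, rng):
    """Epsilon-greedy: explore at random, otherwise exploit the best Q-value."""
    if rng.random() < epsilon:
        return rng.choice(ACTIONS)
    best = max(q[state])
    return rng.choice([a for a in ACTIONS if q[state][a] == best])  # random tie-break

def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1,
               max_steps=100, seed=0):
    rng = random.Random(seed)
    q = [[0.0 for _ in ACTIONS] for _ in range(N_STATES)]
    for _ in range(episodes):
        state = 0
        for _ in range(max_steps):
            action = choose_action(q, state, epsilon, rng)
            nxt, reward, done = env_step(state, action)
            # Bootstrapped target; a terminal state has no future value.
            target = reward if done else reward + gamma * max(q[nxt])
            q[state][action] += alpha * (target - q[state][action])
            state = nxt
            if done:
                break
    return q

q = q_learning()
policy = [max(ACTIONS, key=lambda a: q[s][a]) for s in range(N_STATES - 1)]
print(policy)  # greedy action per non-terminal state; 1 means "move right"
```

After training, the greedy policy moves right in every state, and the learned value of "right" shrinks by a factor of `gamma` for each extra step from the goal (about 1.0, 0.9, 0.81, 0.729). A DQN replaces the table `q` with a neural network so the same idea scales to large state spaces.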

Reinforcement learning has been successfully applied to various domains, including robotics, game playing, and autonomous driving. In robotics, RL enables robots to learn complex behaviors, such as grasping objects and navigating environments, through trial and error. In game playing, RL has achieved superhuman performance in games like Go, chess, and video games, demonstrating its potential to solve complex decision-making problems. In autonomous driving, RL is being explored as a way to train driving policies that navigate traffic, avoid obstacles, and make safe decisions, often in simulation.

Despite its successes, reinforcement learning faces several challenges. One major challenge is the exploration-exploitation trade-off: the agent must balance exploring new actions to discover their rewards against exploiting known actions to maximize the cumulative reward. Another challenge is sample efficiency, as RL algorithms often require a large number of interactions with the environment to learn effective policies. Additionally, RL can be sensitive to the choice of hyperparameters and may suffer from unstable training or poor convergence.
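
The exploration-exploitation trade-off is easiest to see on a multi-armed bandit. In this sketch the two "arms" return fixed rewards of 0.2 and 1.0 (a hypothetical setup chosen so the outcome is easy to reason about). A purely greedy agent (`epsilon=0.0`) locks onto the first arm it tries and never discovers the better one, while an epsilon-greedy agent spends a small fraction of its pulls exploring.

```python
import random

def run_bandit(arm_rewards, epsilon, steps=1000, seed=0):
    """Epsilon-greedy on a toy bandit with deterministic arm rewards."""
    rng = random.Random(seed)
    n = len(arm_rewards)
    counts = [0] * n
    estimates = [0.0] * n
    for _ in range(steps):
        if rng.random() < epsilon:
            arm = rng.randrange(n)                            # explore
        else:
            arm = max(range(n), key=lambda a: estimates[a])  # exploit (first max wins ties)
        reward = arm_rewards[arm]
        counts[arm] += 1
        estimates[arm] += (reward - estimates[arm]) / counts[arm]  # incremental mean
    return counts, estimates

greedy_counts, _ = run_bandit([0.2, 1.0], epsilon=0.0)
explore_counts, _ = run_bandit([0.2, 1.0], epsilon=0.1)
print(greedy_counts)   # [1000, 0]: the greedy agent never tries arm 1
print(explore_counts)  # most pulls go to arm 1 once exploration finds it
```

With noisy rewards the picture is the same but slower: the agent needs many samples per arm before its estimates are reliable, which is the sample-efficiency problem in miniature.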

5. Benefits of Advanced NLP and Ethical AI

Advanced Natural Language Processing (NLP) and Ethical Artificial Intelligence (AI) offer numerous benefits across various domains, enhancing human-computer interaction, improving decision-making, and ensuring responsible AI deployment. NLP, a subfield of AI, focuses on enabling machines to understand, interpret, and generate human language. Ethical AI, on the other hand, emphasizes the development and deployment of AI systems that are fair, transparent, and aligned with human values.

One of the primary benefits of advanced NLP is its ability to improve communication between humans and machines. NLP-powered applications, such as chatbots, virtual assistants, and language translation services, enable seamless and natural interactions, making technology more accessible and user-friendly. For instance, virtual assistants like Siri, Alexa, and Google Assistant leverage NLP to understand and respond to user queries, providing information, performing tasks, and offering personalized recommendations. Language translation services, such as Google Translate, use advanced NLP techniques to accurately translate text and speech between different languages, breaking down language barriers and facilitating global communication.

Advanced NLP also plays a crucial role in information extraction and knowledge discovery. By analyzing large volumes of unstructured text data, NLP algorithms can identify relevant information, extract key insights, and generate summaries. This capability is particularly valuable in fields like healthcare, finance, and law, where professionals need to process vast amounts of textual information. For example, in healthcare, NLP can analyze medical records, research papers, and clinical notes to extract critical information, aiding in diagnosis, treatment planning, and medical research. In finance, NLP can analyze news articles, financial reports, and social media to identify market trends, assess risks, and inform investment decisions.
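
As a simple illustration of summary generation, the sketch below implements a classic frequency-based extractive summarizer: it scores each sentence by how common its words are across the whole text and keeps the top `k` sentences in their original order. The tiny stopword list and the sample clinical-style text are assumptions for illustration; modern systems typically use neural models, often producing abstractive rather than extractive summaries.

```python
import re
from collections import Counter

# Small illustrative stopword list (an assumption; real systems use larger ones).
STOPWORDS = {"the", "a", "an", "and", "or", "of", "to", "in", "at", "was", "is", "on"}

def tokenize(sentence):
    return [w for w in re.findall(r"[a-z']+", sentence.lower()) if w not in STOPWORDS]

def summarize(text, k=2):
    """Frequency-based extractive summary: keep the k highest-scoring sentences."""
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    freq = Counter(w for s in sentences for w in tokenize(s))

    def score(s):
        words = tokenize(s)
        return sum(freq[w] for w in words) / len(words) if words else 0.0

    top = sorted(range(len(sentences)), key=lambda i: score(sentences[i]),
                 reverse=True)[:k]
    return [sentences[i] for i in sorted(top)]  # restore original order

note = ("The patient reported chest pain. Chest pain worsened at night. "
        "The weather was mild.")
print(summarize(note, k=2))
# ['The patient reported chest pain.', 'Chest pain worsened at night.']
```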

Ethical AI aims to ensure that AI systems are developed and deployed in a manner that respects human rights, promotes fairness, and mitigates biases. One of its key benefits is the reduction of bias and discrimination in decision-making processes. AI systems trained on biased data can perpetuate and amplify existing biases, leading to unfair outcomes. Ethical AI practices, such as bias detection and mitigation, help identify and address these biases so that AI systems make fairer, more equitable decisions. For example, in hiring processes, ethical AI practices can help reduce biases related to gender, race, and socioeconomic status, promoting diversity and inclusion in the workplace.
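
One common starting point for bias detection in a hiring screen is to compare selection rates across groups. The sketch below computes the demographic parity difference (highest minus lowest selection rate) and the disparate impact ratio (lowest divided by highest). The decisions and group labels are hypothetical; a ratio below 0.8 is a widely used screening threshold, known as the "four-fifths rule" in US employment guidance. A low ratio flags a disparity worth investigating rather than proving discrimination on its own.

```python
from collections import defaultdict

def selection_rates(decisions, groups):
    """Share of positive decisions (e.g. 'advance to interview') per group."""
    totals, positives = defaultdict(int), defaultdict(int)
    for d, g in zip(decisions, groups):
        totals[g] += 1
        positives[g] += d
    return {g: positives[g] / totals[g] for g in totals}

def parity_report(decisions, groups):
    rates = selection_rates(decisions, groups)
    hi, lo = max(rates.values()), min(rates.values())
    return {
        "rates": rates,
        "difference": hi - lo,            # demographic parity difference
        "ratio": lo / hi if hi else 1.0,  # disparate impact ratio
    }

decisions = [1, 0, 1, 1, 0, 0, 1, 0]          # hypothetical screening outcomes
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
report = parity_report(decisions, groups)
print(report["rates"])       # {'A': 0.75, 'B': 0.25}
print(report["difference"])  # 0.5
print(report["ratio"] < 0.8) # True: flags a potential adverse impact
```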

Transparency and accountability are also critical aspects of ethical AI. Transparent AI systems provide clear explanations of their decision-making processes, enabling users to understand how and why decisions are made. This transparency fosters trust and allows for the identification and correction of errors or biases. Accountability mechanisms ensure that AI developers and deployers are responsible for the outcomes of their systems, promoting ethical behavior and adherence to regulatory standards. For instance, in the context of autonomous vehicles, ethical AI practices ensure that the decision-making algorithms are transparent, and any accidents or malfunctions are thoroughly investigated and addressed.

5.1. Improved Human-AI Collaboration

The integration of artificial intelligence (AI) into various sectors has significantly enhanced human-AI collaboration, leading to more efficient and innovative outcomes. This collaboration is not about replacing humans with machines but rather augmenting human capabilities with AI's computational power and data processing abilities. One of the primary benefits of improved human-AI collaboration is the ability to handle large volumes of data quickly and accurately. AI systems can analyze vast datasets to identify patterns and insights that would be impossible for humans to discern in a reasonable timeframe. This capability is particularly valuable in fields such as healthcare, finance, and marketing, where data-driven decisions are crucial.

In healthcare, for instance, AI can assist doctors by providing diagnostic suggestions based on a patient's medical history and current symptoms. This collaboration can support more accurate diagnoses and personalized treatment plans, ultimately improving patient outcomes. Similarly, in finance, AI algorithms can analyze market trends and forecast price movements, helping traders make more informed investment decisions. Marketing professionals can also use AI to analyze consumer behavior and preferences, allowing for more targeted and effective campaigns.

Another significant aspect of human-AI collaboration is the enhancement of creativity and innovation. AI can generate new ideas and solutions that humans might not have considered. For example, in the field of design, AI can create unique and innovative designs by analyzing existing patterns and trends. This collaboration between human creativity and AI's analytical capabilities can lead to groundbreaking products and services.

Moreover, improved human-AI collaboration can lead to increased productivity and efficiency in the workplace. AI can automate repetitive and mundane tasks, freeing up human workers to focus on more complex and strategic activities. This not only increases productivity but also enhances job satisfaction, as employees can engage in more meaningful and fulfilling work.

However, for human-AI collaboration to be truly effective, it is essential to address certain challenges. One of the main challenges is ensuring that AI systems are transparent and explainable. Users need to understand how AI makes decisions to trust and effectively collaborate with it. Additionally, there is a need for continuous training and education to equip the workforce with the necessary skills to work alongside AI.

In conclusion, improved human-AI collaboration has the potential to revolutionize various industries by enhancing data analysis, creativity, and productivity. By addressing the challenges associated with AI integration, we can harness the full potential of this collaboration to achieve more efficient and innovative outcomes.

5.2. Enhanced Decision-Making

The advent of artificial intelligence (AI) has significantly transformed the decision-making process across various sectors. Enhanced decision-making through AI involves leveraging advanced algorithms and machine learning techniques to analyze data, predict outcomes, and provide actionable insights. This transformation is particularly evident in industries such as healthcare, finance, and logistics, where data-driven decisions are critical.

In healthcare, AI-powered decision-making tools can analyze patient data to predict disease outbreaks, recommend treatment plans, and even assist in surgical procedures. For instance, AI algorithms can process medical images to detect anomalies, and on some narrowly defined tasks they have matched or exceeded the accuracy of human radiologists in published studies. This capability can speed up the diagnostic process and reduce the likelihood of human error, leading to better patient outcomes. Additionally, AI can analyze electronic health records to identify patterns and trends, enabling healthcare providers to make more informed decisions about patient care and resource allocation.

In the financial sector, AI enhances decision-making by analyzing market data, identifying investment opportunities, and assessing risks. AI algorithms can process vast amounts of financial data in real-time, providing traders and investors with insights that would be impossible to obtain manually. This capability allows for more accurate predictions of market trends and better-informed investment decisions. Furthermore, AI can help financial institutions detect fraudulent activities by analyzing transaction patterns and flagging suspicious behavior. For more insights on how AI is transforming finance, check out AI and Blockchain: Revolutionizing Decentralized Finance.
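
Flagging suspicious transactions often starts with anomaly detection on simple features such as amount. This sketch uses the modified z-score, which is built from the median and the median absolute deviation (MAD) instead of the mean and standard deviation, so a single huge transaction cannot mask itself by inflating the spread. The 0.6745 constant and the 3.5 threshold are a common rule of thumb (Iglewicz and Hoaglin); the transaction history is hypothetical, and production fraud systems combine many more signals.

```python
import statistics

def flag_suspicious(amounts, threshold=3.5):
    """Return indices whose modified z-score (median/MAD based) exceeds threshold."""
    med = statistics.median(amounts)
    mad = statistics.median(abs(a - med) for a in amounts)
    if mad == 0:
        # All typical values identical: anything different is unusual.
        return [i for i, a in enumerate(amounts) if a != med]
    return [i for i, a in enumerate(amounts)
            if abs(0.6745 * (a - med) / mad) > threshold]

history = [20.0, 25.0, 22.0, 19.0, 24.0, 21.0, 23.0, 500.0]  # hypothetical amounts
print(flag_suspicious(history))  # [7]
```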

Logistics and supply chain management also benefit from AI-enhanced decision-making. AI can optimize routes, predict demand, and manage inventory levels, leading to more efficient operations and cost savings. For example, AI algorithms can analyze historical sales data to forecast future demand, allowing companies to adjust their inventory levels accordingly. This capability helps prevent stockouts and overstock situations, ensuring that products are available when and where they are needed.
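
A minimal version of the "forecast demand, then adjust inventory" loop can be sketched with simple exponential smoothing, which blends each new observation into a running level. The sales figures, `alpha=0.5`, and the `reorder_quantity` rule (order enough to cover forecast demand for a few periods, minus current stock) are hypothetical assumptions for illustration. Simple exponential smoothing produces a flat forecast with no trend or seasonality, which real demand planning usually has to model.

```python
def exp_smoothing_forecast(history, alpha=0.5):
    """Simple exponential smoothing: one-step-ahead forecast from past demand."""
    level = history[0]
    for y in history[1:]:
        level = alpha * y + (1 - alpha) * level  # blend newest observation into level
    return level

def reorder_quantity(history, on_hand, periods_to_cover, alpha=0.5):
    """Hypothetical rule: cover forecast demand for N periods, minus stock on hand."""
    needed = exp_smoothing_forecast(history, alpha) * periods_to_cover
    return max(0, round(needed - on_hand))

weekly_sales = [10, 12, 11]                  # hypothetical weekly unit sales
print(exp_smoothing_forecast(weekly_sales))  # 11.0
print(reorder_quantity(weekly_sales, on_hand=15, periods_to_cover=2))  # 7
```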

Moreover, AI-enhanced decision-making is not limited to specific industries. It can be applied to various aspects of business operations, such as human resources, marketing, and customer service. In human resources, AI can analyze employee data to identify trends and make recommendations for improving employee engagement and retention. In marketing, AI can analyze consumer behavior to develop targeted campaigns and optimize advertising spend. In customer service, AI-powered chatbots can provide instant support and resolve issues, improving customer satisfaction.

However, the implementation of AI-enhanced decision-making also presents certain challenges. One of the main challenges is ensuring the quality and accuracy of the data used by AI algorithms. Poor-quality data can lead to incorrect predictions and suboptimal decisions. Additionally, there is a need for transparency and explainability in AI decision-making processes. Users need to understand how AI arrives at its conclusions to trust and effectively use its recommendations.

In conclusion, AI-enhanced decision-making has the potential to revolutionize various industries by providing more accurate, timely, and actionable insights. By addressing the challenges associated with data quality and transparency, organizations can harness the full potential of AI to make better-informed decisions and achieve more efficient and effective outcomes.

5.3. Ethical and Fair AI Systems

As artificial intelligence (AI) continues to permeate various aspects of society, the importance of developing ethical and fair AI systems has become increasingly evident. Ethical AI refers to the design and implementation of AI technologies that adhere to moral principles and values, ensuring that they do not cause harm and are used for the benefit of society. Fair AI, on the other hand, focuses on ensuring that AI systems do not exhibit biases and treat all individuals and groups equitably.

One of the primary concerns in the development of ethical AI systems is the potential for AI to cause harm, either intentionally or unintentionally. For example, AI algorithms used in autonomous vehicles must be designed to prioritize human safety and make ethical decisions in critical situations. Similarly, AI systems used in healthcare must ensure that patient data is handled with the utmost confidentiality and that treatment recommendations do not cause harm. To address these concerns, developers must adhere to ethical guidelines and principles, such as those outlined by organizations like the IEEE and the European Commission.

Fairness in AI is another critical aspect that requires attention. AI systems are often trained on large datasets, and if these datasets contain biases, the resulting AI models can perpetuate and even amplify these biases. For instance, facial recognition systems have been found to exhibit racial and gender biases, leading to higher error rates for certain demographic groups. To ensure fairness, it is essential to use diverse and representative datasets and to implement techniques for detecting and mitigating biases in AI models. Additionally, ongoing monitoring and evaluation of AI systems are necessary to identify and address any biases that may arise over time.
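
The facial-recognition example points to a practical habit: report error rates per demographic group (disaggregated evaluation) instead of a single overall accuracy, which can hide large gaps. The sketch below uses hypothetical face-matching labels and group tags; the same pattern extends to false-positive and false-negative rates, which often matter more than overall error in identification systems.

```python
from collections import defaultdict

def error_rates_by_group(y_true, y_pred, groups):
    """Disaggregated evaluation: misclassification rate for each group."""
    errors, totals = defaultdict(int), defaultdict(int)
    for t, p, g in zip(y_true, y_pred, groups):
        totals[g] += 1
        errors[g] += int(t != p)
    return {g: errors[g] / totals[g] for g in totals}

# Hypothetical face-matching results: 1 = "same person", 0 = "different person".
y_true = [1, 1, 0, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 1, 0, 0, 1, 0, 1, 0, 0, 0]
groups = ["X", "X", "X", "X", "X", "Y", "Y", "Y", "Y", "Y"]

rates = error_rates_by_group(y_true, y_pred, groups)
print(rates)                                      # {'X': 0.0, 'Y': 0.6}
print(max(rates.values()) - min(rates.values()))  # error-rate gap: 0.6
```

Here overall accuracy is 70%, yet every error falls on group Y, which is exactly the kind of disparity that aggregate metrics conceal.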

Transparency and explainability are also crucial components of ethical and fair AI systems. Users need to understand how AI systems make decisions to trust and effectively use them. This is particularly important in high-stakes applications, such as criminal justice and finance, where AI decisions can have significant consequences. Techniques such as explainable AI (XAI) aim to make AI models more interpretable, allowing users to understand the reasoning behind AI decisions and ensuring accountability.
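
For a linear model, an explanation can be exact: each feature's contribution is its weight times how far the input is from a reference ("baseline") input, and the contributions add up to the difference between the two scores. The credit-scoring weights, features, and baseline below are hypothetical. For nonlinear models, techniques such as SHAP and LIME approximate this kind of per-feature attribution.

```python
# Hypothetical linear credit-scoring model; weights and features are illustrative.
WEIGHTS = {"income": 0.4, "debt_ratio": -1.5, "late_payments": -0.8}
BIAS = 0.2

def score(x):
    return BIAS + sum(WEIGHTS[f] * x[f] for f in WEIGHTS)

def explain(x, baseline):
    """Per-feature contribution to the score, relative to a baseline applicant."""
    return {f: WEIGHTS[f] * (x[f] - baseline[f]) for f in WEIGHTS}

baseline = {"income": 1.0, "debt_ratio": 0.3, "late_payments": 0.0}
applicant = {"income": 1.0, "debt_ratio": 0.5, "late_payments": 2.0}

contrib = explain(applicant, baseline)
print(contrib)  # late_payments contributes about -1.6, debt_ratio about -0.3
# The contributions sum (up to float rounding) to the score gap vs. the baseline.
print(score(applicant) - score(baseline), sum(contrib.values()))
```

An explanation like "two late payments lowered the score by 1.6 points relative to a typical applicant" is the kind of output a user or auditor can check and contest.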

Moreover, the development of ethical and fair AI systems requires collaboration between various stakeholders, including AI developers, policymakers, and the public. Policymakers play a crucial role in establishing regulations and standards that promote ethical AI practices. For example, the European Union's General Data Protection Regulation (GDPR) restricts decisions based solely on automated processing that significantly affect individuals (Article 22) and requires that people be given meaningful information about the logic involved, provisions often discussed as a "right to explanation." Public engagement is also essential to ensure that AI technologies align with societal values and address the concerns of diverse communities. For more insights on the evolution of ethical AI, check out The Evolution of Ethical AI in 2024.

In conclusion, the development of ethical and fair AI systems is essential to ensure that AI technologies are used responsibly and for the benefit of society. By adhering to ethical guidelines, addressing biases, and promoting transparency and explainability, we can build AI systems that are trustworthy, equitable, and aligned with societal values. Collaboration between developers, policymakers, and the public is crucial to achieving these goals and ensuring that AI technologies contribute positively to our world.

6. Challenges in Implementing Advanced NLP and Ethical AI

The implementation of advanced Natural Language Processing (NLP) and Ethical Artificial Intelligence (AI) is fraught with numerous challenges. These challenges can be broadly categorized into technical challenges and ethical dilemmas. Both aspects are critical to address in order to create systems that are not only efficient and effective but also fair and responsible.

6.1. Technical Challenges

One of the primary technical challenges in implementing advanced NLP is the complexity of human language itself. Human language is inherently ambiguous, context-dependent, and constantly evolving. This makes it difficult for NLP models to accurately understand and generate human-like text. For instance, words can have multiple meanings depending on the context, and idiomatic expressions can be particularly challenging for NLP models to interpret correctly.

Another significant technical challenge is the need for large amounts of high-quality data. Training advanced NLP models, such as those based on deep learning, requires vast datasets to achieve high levels of accuracy. However, obtaining and curating such datasets can be time-consuming and expensive. Additionally, the data must be representative of the diverse ways in which language is used to avoid biases in the model's outputs.

Computational resources also pose a challenge. Advanced NLP models, especially those based on deep learning architectures like transformers, require substantial computational power for training and inference. This can be a barrier for smaller organizations or researchers with limited access to high-performance computing resources.
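
A back-of-the-envelope estimate shows where the compute and memory go. The sketch uses a common approximation for a decoder-only transformer: about 12·d² parameters per layer (4·d² for the query, key, value, and output projections, plus 8·d² for a feed-forward block with hidden size 4·d), plus token and position embeddings, ignoring biases and layer norms. The 16 bytes per parameter for training assumes fp32 weights, gradients, and two Adam moment buffers, and excludes activations. The example configuration (12 layers, width 768, 50,257-token vocabulary, 1,024-token context) matches GPT-2 small, and the estimate lands close to the roughly 124 million parameters usually quoted for it.

```python
def transformer_params(n_layers, d_model, vocab_size, context_len):
    """Rough decoder-only transformer parameter count (biases/layer norms ignored)."""
    per_layer = 12 * d_model ** 2  # attention projections (4d^2) + feed-forward (8d^2)
    embeddings = vocab_size * d_model + context_len * d_model  # token + position
    return n_layers * per_layer + embeddings

def training_memory_gb(params, bytes_per_param=16):
    """~16 bytes/param: fp32 weights, gradients, and two Adam moment buffers."""
    return params * bytes_per_param / 1e9

p = transformer_params(n_layers=12, d_model=768, vocab_size=50257, context_len=1024)
print(p)                      # 124318464 (~124M parameters)
print(training_memory_gb(p))  # ~1.99 GB before counting activations
```

Because the per-layer term grows with the square of the model width, doubling `d_model` roughly quadruples the parameters in each layer, which is why larger models quickly outgrow a single consumer GPU.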

Moreover, the interpretability of NLP models is a significant technical hurdle. Many advanced NLP models operate as "black boxes," making it difficult to understand how they arrive at their conclusions. This lack of transparency can be problematic, especially in applications where understanding the decision-making process is crucial, such as in healthcare or legal contexts.

Finally, the integration of NLP systems with other technologies and platforms can be challenging. Ensuring that NLP models can seamlessly interact with existing systems, handle real-time data, and scale effectively requires careful planning and robust engineering.

6.2. Ethical Dilemmas

The ethical dilemmas associated with implementing advanced NLP and Ethical AI are equally, if not more, challenging. One of the foremost ethical concerns is bias. NLP models can inadvertently learn and perpetuate biases present in the training data. This can lead to discriminatory outcomes, particularly against marginalized groups. For example, a language model trained on biased data might generate outputs that reinforce harmful stereotypes or exclude certain dialects and languages.

Privacy is another critical ethical issue. NLP systems often require access to large amounts of personal data to function effectively. Ensuring that this data is collected, stored, and used in a manner that respects individuals' privacy rights is paramount. This includes implementing robust data anonymization techniques and obtaining informed consent from users.
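
One classic anonymization check is k-anonymity: every combination of quasi-identifiers (attributes such as age and ZIP code that could be linked to outside data) should be shared by at least k records. This sketch measures k and shows how generalization (age to a 10-year band, ZIP code to a 3-digit prefix) raises it. The records and generalization rules are hypothetical. k-anonymity alone does not prevent every disclosure; for example, if all records in a group share the same diagnosis, that diagnosis is still revealed.

```python
from collections import Counter

def generalize(record):
    """Coarsen quasi-identifiers: age -> 10-year band, ZIP -> 3-digit prefix."""
    decade = (record["age"] // 10) * 10
    return {
        "age": f"{decade}-{decade + 9}",
        "zip": record["zip"][:3] + "**",
        "diagnosis": record["diagnosis"],  # sensitive attribute kept for analysis
    }

def k_anonymity(records, quasi_identifiers):
    """Size of the smallest group sharing the same quasi-identifier values."""
    groups = Counter(tuple(r[q] for q in quasi_identifiers) for r in records)
    return min(groups.values())

raw = [  # hypothetical patient records (direct identifiers already removed)
    {"age": 34, "zip": "02139", "diagnosis": "flu"},
    {"age": 37, "zip": "02141", "diagnosis": "asthma"},
    {"age": 52, "zip": "02139", "diagnosis": "flu"},
    {"age": 58, "zip": "02142", "diagnosis": "diabetes"},
]
qi = ["age", "zip"]
print(k_anonymity(raw, qi))                           # 1: every record is unique
print(k_anonymity([generalize(r) for r in raw], qi))  # 2
```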

The potential for misuse of NLP and AI technologies also raises ethical concerns. These technologies can be used to create deepfakes, generate misleading information, or automate harmful activities such as cyberbullying or harassment. Addressing these risks requires not only technical safeguards but also regulatory frameworks and ethical guidelines.

Transparency and accountability are essential ethical considerations. Users and stakeholders need to understand how NLP and AI systems make decisions and who is responsible for those decisions. This is particularly important in high-stakes applications like criminal justice or financial services, where the consequences of automated decisions can be significant.

Finally, there is the broader ethical question of the impact of NLP and AI on employment and society. As these technologies become more advanced, they have the potential to automate a wide range of tasks, leading to job displacement in certain sectors. Ensuring that the benefits of these technologies are distributed equitably and that there are measures in place to support those affected by automation is a critical ethical challenge.

In conclusion, while the implementation of advanced NLP and Ethical AI holds great promise, it is accompanied by a host of technical and ethical challenges. Addressing these challenges requires a multidisciplinary approach, involving not only technologists but also ethicists, policymakers, and other stakeholders. By doing so, we can work towards creating AI systems that are not only powerful and efficient but also fair, transparent, and beneficial to all.

6.3. Regulatory Hurdles

Regulatory hurdles represent one of the most significant challenges in the development and deployment of artificial intelligence (AI) technologies. As AI continues to evolve and integrate into various sectors, the need for comprehensive regulatory frameworks becomes increasingly critical. These frameworks are essential to ensure that AI systems are safe, ethical, and beneficial to society. However, creating and implementing these regulations is a complex task that involves multiple stakeholders, including governments, private companies, and international organizations.

One of the primary regulatory hurdles is the lack of standardized guidelines. Different countries and regions take varying approaches to AI regulation, which can lead to inconsistencies and confusion. For instance, the European Union has been proactive with the AI Act, adopted in 2024, which establishes a unified, risk-based regulatory framework for AI across its member states. In contrast, the United States has taken a more sector-specific approach, with different agencies developing their own guidelines for AI applications in areas such as healthcare, finance, and transportation. This disparity can create challenges for companies operating in multiple jurisdictions, as they must navigate a patchwork of regulations.

Another significant hurdle is the rapid pace of AI development. Technological advancements often outstrip the ability of regulatory bodies to keep up. This lag can result in outdated or inadequate regulations that fail to address the latest developments in AI. For example, the rise of deep learning and neural networks has introduced new complexities that existing regulations may not adequately cover. This gap can lead to potential risks, such as biased algorithms, lack of transparency, and privacy concerns.

Ethical considerations also play a crucial role in regulatory hurdles. AI systems can have profound impacts on society, raising questions about fairness, accountability, and human rights. Regulators must grapple with these ethical dilemmas to create policies that protect individuals and communities while fostering innovation. For instance, the use of AI in surveillance and law enforcement has sparked debates about privacy and civil liberties. Balancing these ethical concerns with the benefits of AI is a delicate task that requires careful consideration and stakeholder engagement.

Moreover, the global nature of AI development poses additional challenges. International cooperation is essential to address cross-border issues and ensure that AI regulations are harmonized. Organizations such as the United Nations and the Organisation for Economic Co-operation and Development (OECD) are working towards creating global standards for AI. However, achieving consensus among diverse countries with different priorities and values is a formidable challenge.

In conclusion, regulatory hurdles are a significant barrier to the widespread adoption of AI technologies. Addressing these challenges requires a coordinated effort from governments, industry, and international organizations. By developing comprehensive, flexible, and ethical regulatory frameworks, we can harness the potential of AI while mitigating its risks.

7. Future of Human-AI Collaboration

The future of human-AI collaboration holds immense promise, with the potential to revolutionize various aspects of our lives, from work and education to healthcare and entertainment. As AI technologies continue to advance, they are increasingly being integrated into everyday tasks, augmenting human capabilities and enabling new forms of interaction. This collaboration between humans and AI is expected to bring about significant changes in how we live and work, leading to greater efficiency, creativity, and innovation.

One of the key areas where human-AI collaboration is expected to have a profound impact is the workplace. AI-powered tools and systems are already being used to automate routine tasks, allowing employees to focus on more complex and creative activities. For example, AI can handle data analysis, customer service, and administrative tasks, freeing up human workers to engage in strategic planning, problem-solving, and innovation. This shift is expected to lead to increased productivity and job satisfaction, as employees can leverage AI to enhance their skills and capabilities.

In the field of education, human-AI collaboration is poised to transform the learning experience. AI-driven personalized learning platforms can tailor educational content to individual students' needs, providing customized support and feedback. This can help students learn at their own pace and overcome specific challenges, leading to improved learning outcomes. Additionally, AI can assist teachers by automating administrative tasks, allowing them to focus on instruction and student engagement. The integration of AI in education also opens up new possibilities for lifelong learning and skill development, as individuals can access tailored learning resources throughout their lives.

Healthcare is another domain where human-AI collaboration is expected to bring about significant advancements. AI-powered diagnostic tools can assist doctors in identifying diseases and conditions more accurately and quickly, leading to earlier interventions and better patient outcomes. AI can also help in the development of personalized treatment plans, taking into account individual patient data and medical history. Furthermore, AI-driven research can accelerate the discovery of new drugs and therapies, addressing some of the most pressing health challenges of our time.

In the realm of entertainment, human-AI collaboration is already making waves. AI algorithms are being used to create music, art, and literature, pushing the boundaries of creativity and enabling new forms of expression. AI can also enhance the gaming experience by creating more realistic and immersive environments, as well as personalized content that adapts to individual players' preferences. This collaboration between humans and AI is expected to lead to new and exciting forms of entertainment that were previously unimaginable.

However, the future of human-AI collaboration also raises important ethical and societal questions. As AI systems become more integrated into our lives, issues related to privacy, security, and bias must be addressed. Ensuring that AI technologies are developed and used responsibly is crucial to maximizing their benefits while minimizing potential risks. This requires ongoing dialogue and collaboration among policymakers, industry leaders, and the public to create frameworks that promote ethical AI development and use.

In conclusion, the future of human-AI collaboration holds great potential to transform various aspects of our lives, leading to increased efficiency, creativity, and innovation. By addressing the ethical and societal challenges associated with AI, we can harness its power to create a better future for all.

7.1. Emerging Trends

As we look towards the future of human-AI collaboration, several emerging trends are shaping the landscape and driving innovation. These trends reflect the evolving capabilities of AI technologies and their increasing integration into various aspects of our lives. Understanding these trends can provide valuable insights into the potential directions of AI development and its impact on society.

One of the most significant emerging trends is the rise of explainable AI (XAI). As AI systems become more complex and sophisticated, there is a growing need for transparency and interpretability. Explainable AI aims to make AI decision-making processes more understandable to humans, allowing users to gain insights into how and why certain decisions are made. This is particularly important in high-stakes domains such as healthcare, finance, and law, where understanding the rationale behind AI decisions is crucial for trust and accountability. By making AI more transparent, XAI can help build confidence in AI systems and facilitate their adoption in critical applications.

Another important trend is the increasing focus on ethical AI. As AI technologies become more pervasive, concerns about bias, fairness, and accountability have come to the forefront. Ethical AI initiatives aim to address these issues by developing guidelines and frameworks for responsible AI development and use. This includes efforts to mitigate bias in AI algorithms, ensure data privacy and security, and promote transparency and accountability. Organizations and governments around the world are working to establish ethical standards for AI, recognizing that responsible AI is essential for building trust and ensuring that AI benefits all members of society.

The integration of AI with other emerging technologies is also a key trend shaping the future of human-AI collaboration. For example, the convergence of AI and the Internet of Things (IoT) is creating new opportunities for smart environments and connected devices. AI-powered IoT systems can collect and analyze vast amounts of data in real-time, enabling more efficient and intelligent decision-making. This has applications in various domains, including smart cities, healthcare, and industrial automation. Similarly, the combination of AI and blockchain technology is being explored for applications such as secure data sharing, supply chain management, and decentralized AI networks.

Human-centered AI is another emerging trend that emphasizes the importance of designing AI systems with the user in mind. This approach focuses on creating AI technologies that enhance human capabilities and improve user experiences. Human-centered AI involves understanding user needs, preferences, and behaviors, and designing AI systems that are intuitive, user-friendly, and aligned with human values. This trend is particularly relevant in areas such as human-computer interaction, where the goal is to create seamless and natural interactions between humans and AI.

Finally, the democratization of AI is a trend that aims to make AI technologies more accessible to a broader audience. This involves developing tools and platforms that enable individuals and organizations, regardless of their technical expertise, to leverage AI for various applications. The democratization of AI can empower small businesses, non-profits, and individuals to harness the power of AI for innovation and problem-solving. This trend is supported by the growing availability of open-source AI frameworks, cloud-based AI services, and educational resources that make AI more accessible to a wider audience.

In conclusion, the future of human-AI collaboration is being shaped by several emerging trends, including explainable AI, ethical AI, the integration of AI with other technologies, human-centered AI, and the democratization of AI. These trends reflect the evolving capabilities of AI and its increasing impact on various aspects of our lives. By understanding and embracing these trends, we can harness the potential of AI to create a better and more inclusive future.

7.2. Long-term Implications

The long-term implications of technological advancements, particularly in fields like artificial intelligence and blockchain, are profound and multifaceted. These technologies are not just transient trends; they are poised to fundamentally reshape various aspects of society, economy, and daily life.

One of the most significant long-term implications of AI is its potential to revolutionize the labor market. AI systems can perform tasks that were previously thought to be the exclusive domain of humans, such as data analysis, pattern recognition, and even creative endeavors like writing and art. This could lead to increased productivity and efficiency across industries. However, it also raises concerns about job displacement and the need for workforce reskilling. According to a report by the World Economic Forum, by 2025, 85 million jobs may be displaced by a shift in the division of labor between humans and machines, while 97 million new roles may emerge that are more adapted to the new division of labor between humans, machines, and algorithms (source: https://www.weforum.org/reports/the-future-of-jobs-report-2020).

In the realm of blockchain, the long-term implications are equally transformative. Blockchain technology offers a decentralized and transparent way to record transactions, which can help reduce fraud and increase trust in sectors such as finance, supply chain management, and healthcare. In finance, blockchain can enable faster and more secure transactions, reducing the need for intermediaries and lowering costs. In supply chain management, it can provide a tamper-evident record of the journey of goods from origin to consumer, enhancing transparency and accountability. In healthcare, blockchain can help protect the integrity of patient records and keep an auditable log of who accessed them (the sensitive data itself is usually stored off-chain to preserve confidentiality), facilitating better data sharing and potentially improving patient outcomes.
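The tamper-evidence behind these supply chain claims comes from hash chaining: each record stores the hash of the record before it, so altering any earlier entry breaks the links that follow. The sketch below shows only that property, in a single process with no network, consensus mechanism, or digital signatures, all of which a real blockchain adds. `SupplyChainLedger` and the event fields are hypothetical names chosen for illustration.

```python
import hashlib
import json
from dataclasses import dataclass


@dataclass
class Block:
    index: int
    data: dict
    prev_hash: str
    block_hash: str = ""

    def compute_hash(self) -> str:
        # Hash a canonical JSON encoding of everything except the block's own hash
        payload = json.dumps(
            {"index": self.index, "data": self.data, "prev_hash": self.prev_hash},
            sort_keys=True,
        )
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()


class SupplyChainLedger:
    """Append-only, hash-chained log of supply chain events (illustrative only)."""

    def __init__(self) -> None:
        genesis = Block(index=0, data={"event": "genesis"}, prev_hash="0" * 64)
        genesis.block_hash = genesis.compute_hash()
        self.chain = [genesis]

    def record(self, event: dict) -> Block:
        # Each new block commits to the hash of the previous block
        prev = self.chain[-1]
        block = Block(index=prev.index + 1, data=event, prev_hash=prev.block_hash)
        block.block_hash = block.compute_hash()
        self.chain.append(block)
        return block

    def is_valid(self) -> bool:
        # Recompute every hash and check every link; any edit to past data fails here
        for i, block in enumerate(self.chain):
            if block.block_hash != block.compute_hash():
                return False
            if i > 0 and block.prev_hash != self.chain[i - 1].block_hash:
                return False
        return True


ledger = SupplyChainLedger()
ledger.record({"item": "lot-001", "step": "harvested", "location": "farm"})
ledger.record({"item": "lot-001", "step": "shipped", "location": "port"})
print(ledger.is_valid())  # True

ledger.chain[1].data["location"] = "warehouse"  # tamper with a past record
print(ledger.is_valid())  # False
```

Detecting tampering is not the same as preventing it: whoever controls the whole chain could recompute every hash. Replicating the ledger across independent parties and requiring consensus on new entries is what makes rewriting history impractical.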

Moreover, the integration of AI and blockchain can lead to even more groundbreaking innovations. AI can enhance the capabilities of blockchain by providing advanced data analytics and decision-making tools, while blockchain can offer secure and transparent data management for AI systems. This synergy can drive advancements in areas such as smart contracts, where AI can automate and optimize contract execution based on predefined conditions, and blockchain can ensure the integrity and transparency of the process.
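As a rough illustration of contract execution "based on predefined conditions", the sketch below models an escrow agreement as a small state machine in Python rather than in an on-chain language such as Solidity. The delivery `confidence` value stands in for the output of a hypothetical AI model (for example, one that checks proof-of-delivery photos). The class name, the 0.9 threshold, and the integer deadline are all illustrative assumptions.

```python
from enum import Enum, auto


class EscrowState(Enum):
    AWAITING_PAYMENT = auto()
    AWAITING_DELIVERY = auto()
    COMPLETE = auto()
    REFUNDED = auto()


class EscrowContract:
    """Hypothetical escrow: funds move only when the predefined conditions hold."""

    def __init__(self, buyer: str, seller: str, amount: int, deadline: int,
                 threshold: float = 0.9) -> None:
        self.buyer, self.seller, self.amount = buyer, seller, amount
        self.deadline = deadline  # e.g. a block height or Unix timestamp
        self.threshold = threshold  # minimum model confidence to release funds (assumed)
        self.state = EscrowState.AWAITING_PAYMENT
        self.payouts = {buyer: 0, seller: 0}

    def deposit(self, sender: str, value: int) -> None:
        if self.state is not EscrowState.AWAITING_PAYMENT:
            raise RuntimeError("payment already received")
        if sender != self.buyer or value != self.amount:
            raise ValueError("only the buyer may deposit, and only the agreed amount")
        self.state = EscrowState.AWAITING_DELIVERY

    def report_delivery(self, confidence: float, now: int) -> bool:
        """Release funds to the seller if delivery is confirmed before the deadline."""
        if self.state is not EscrowState.AWAITING_DELIVERY:
            raise RuntimeError("contract is not awaiting delivery")
        if now <= self.deadline and confidence >= self.threshold:
            self.payouts[self.seller] += self.amount
            self.state = EscrowState.COMPLETE
            return True
        return False  # condition not met; funds stay locked

    def refund(self, now: int) -> None:
        """Return funds to the buyer once the deadline has passed without delivery."""
        if self.state is not EscrowState.AWAITING_DELIVERY:
            raise RuntimeError("contract is not awaiting delivery")
        if now <= self.deadline:
            raise RuntimeError("deadline has not passed yet")
        self.payouts[self.buyer] += self.amount
        self.state = EscrowState.REFUNDED


contract = EscrowContract("alice", "bob", amount=100, deadline=10)
contract.deposit("alice", 100)
print(contract.report_delivery(confidence=0.62, now=5))  # False: below threshold
print(contract.report_delivery(confidence=0.97, now=6))  # True: funds released
print(contract.state, contract.payouts)
```

On a real blockchain, every node executing the contract would enforce these same rules, which is what lets both parties trust the outcome without an intermediary. The AI component only supplies an input, and how far to trust that input is itself a design decision.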

However, these long-term implications also come with challenges. Ethical considerations, such as data privacy, algorithmic bias, and the potential for misuse of technology, need to be addressed. Regulatory frameworks must evolve to keep pace with technological advancements, ensuring that the benefits of AI and blockchain are realized while mitigating potential risks. Additionally, there is a need for ongoing research and development to address technical limitations and enhance the scalability, security, and interoperability of these technologies.

In conclusion, the long-term implications of AI and blockchain are vast and far-reaching. These technologies have the potential to drive significant economic growth, improve efficiency and transparency, and address complex societal challenges. However, realizing these benefits requires careful consideration of ethical, regulatory, and technical issues, as well as a commitment to continuous innovation and adaptation.

8. Why Choose Rapid Innovation for Implementation and Development?

Choosing rapid innovation for implementation and development is crucial in today's fast-paced and highly competitive technological landscape. Rapid innovation refers to the accelerated process of developing and deploying new technologies, products, or services. This approach is essential for several reasons, including staying ahead of the competition, meeting evolving customer demands, and driving continuous improvement.

One of the primary reasons to choose rapid innovation is the need to stay ahead of the competition. In industries where technological advancements occur at a breakneck pace, companies that fail to innovate quickly risk falling behind. Rapid innovation allows organizations to bring new products and services to market faster, gaining a competitive edge and capturing market share. For example, in the tech industry, companies like Apple and Google have consistently demonstrated the importance of rapid innovation by regularly releasing new and improved products, thereby maintaining their leadership positions.

Another critical reason for rapid innovation is the ability to meet evolving customer demands. In today's digital age, customers have high expectations for personalized, efficient, and seamless experiences. Rapid innovation enables companies to quickly respond to changing customer needs and preferences, ensuring that they remain relevant and competitive. For instance, the rise of e-commerce and digital services has led to increased demand for fast and convenient online shopping experiences. Companies like Amazon have leveraged rapid innovation to continuously enhance their platforms, offering features such as one-click purchasing, same-day delivery, and personalized recommendations.

Rapid innovation also drives continuous improvement within organizations. By fostering a culture of innovation and agility, companies can continuously refine their processes, products, and services. This iterative approach allows for the identification and resolution of issues more quickly, leading to higher quality and better performance. Additionally, rapid innovation encourages experimentation and risk-taking, which can lead to breakthrough discoveries and advancements. For example, in the pharmaceutical industry, rapid innovation in drug development processes has led to the accelerated discovery and approval of life-saving treatments, such as the COVID-19 vaccines.

However, rapid innovation is not without its challenges. It requires a significant investment in research and development, as well as a willingness to embrace change and uncertainty. Organizations must also ensure that they have the right infrastructure, talent, and resources to support rapid innovation efforts. This includes fostering a culture of collaboration and open communication, as well as implementing agile methodologies and tools that enable quick iteration and feedback.

In conclusion, choosing rapid innovation for implementation and development is essential for staying competitive, meeting customer demands, and driving continuous improvement. While it presents challenges, for organizations that invest in the right infrastructure, talent, and culture, the benefits generally outweigh the risks, making it a critical strategy for success in today's dynamic technological landscape.

8.1. Expertise in AI and Blockchain

Expertise in AI and blockchain is a critical factor for organizations looking to leverage these technologies for innovation and growth. Both AI and blockchain are complex and rapidly evolving fields that require specialized knowledge and skills to implement effectively. Having expertise in these areas can provide a significant competitive advantage, enabling organizations to develop cutting-edge solutions, optimize processes, and create new business opportunities.

In the field of AI, expertise encompasses a deep understanding of various AI techniques and methodologies, such as machine learning, deep learning, natural language processing, and computer vision. It also involves proficiency in programming languages and tools commonly used in AI development, such as Python, TensorFlow, and PyTorch. Additionally, expertise in AI requires knowledge of data science principles, including data collection, preprocessing, and analysis, as well as the ability to design and train AI models.

Having expertise in AI allows organizations to harness the power of data to drive decision-making and innovation. For example, AI can be used to analyze large volumes of data to identify patterns and trends, enabling businesses to make more informed decisions and optimize their operations. In the healthcare industry, AI-powered diagnostic tools can analyze medical images and patient data to assist doctors in making accurate diagnoses and treatment recommendations. In the financial sector, AI algorithms can detect fraudulent transactions and assess credit risk, enhancing security and efficiency.

Similarly, expertise in blockchain involves a thorough understanding of blockchain architecture, cryptographic principles, consensus mechanisms, and smart contracts. It also requires familiarity with blockchain platforms and frameworks, such as Ethereum, Hyperledger Fabric, and Corda. Additionally, expertise in blockchain includes knowledge of regulatory and compliance considerations, as well as the ability to design and implement secure and scalable blockchain solutions.

Blockchain expertise enables organizations to leverage the distinctive features of blockchain technology, such as decentralization, transparency, and immutability, to create innovative solutions and improve existing processes. For instance, in supply chain management, blockchain can provide a transparent and tamper-evident record of the journey of goods, enhancing traceability and accountability. In the financial industry, blockchain can facilitate faster and more secure cross-border transactions, reducing costs and increasing efficiency. In the realm of digital identity, blockchain can provide a secure and decentralized way to manage and verify identities, helping protect against identity theft and fraud.

Moreover, combining AI and blockchain expertise can lead to even more powerful solutions. AI models can analyze on-chain and off-chain data to support decisions, while the blockchain provides a verifiable record of the data and transactions those models depend on. Decentralized finance (DeFi) is one example: AI can help optimize investment strategies and risk management, while the blockchain secures and records the underlying transactions.

In conclusion, expertise in AI and blockchain is essential for organizations looking to leverage these technologies for innovation and growth. By developing and maintaining specialized knowledge and skills in these areas, organizations can create cutting-edge solutions, optimize processes, and unlock new business opportunities. As AI and blockchain continue to evolve, ongoing education and training will be crucial to staying at the forefront of these transformative technologies.

8.2. Customized Solutions

In today's fast-paced and highly competitive business environment, one-size-fits-all solutions are often inadequate. Customized solutions are tailored to meet the specific needs and challenges of individual businesses, ensuring that they can achieve their unique goals and objectives. These solutions are designed to address the particular requirements of a company, taking into account its industry, size, market position, and strategic objectives.

Customized solutions offer several advantages over generic alternatives. Firstly, they provide a higher level of flexibility. Businesses can adapt these solutions to their changing needs and circumstances, ensuring that they remain relevant and effective over time. This flexibility is particularly important in industries that are subject to rapid technological advancements or shifting market dynamics.

Secondly, customized solutions can lead to improved efficiency and productivity. By addressing the specific pain points and bottlenecks within a business, these solutions can streamline processes, reduce waste, and enhance overall operational efficiency. For example, a customized software solution can automate repetitive tasks, freeing up employees to focus on more strategic activities.

Moreover, customized solutions can provide a competitive edge. In a crowded marketplace, businesses that can differentiate themselves through unique and tailored offerings are more likely to stand out and attract customers. Customized solutions can help businesses to innovate and develop new products or services that meet the specific needs of their target audience.

The development of customized solutions typically involves a collaborative process between the solution provider and the client. This process begins with a thorough analysis of the client's needs and objectives. The solution provider then designs and develops a solution that is specifically tailored to meet these requirements. Throughout this process, there is ongoing communication and feedback to ensure that the final solution aligns with the client's expectations.

One of the key challenges in developing customized solutions is ensuring that they are scalable and sustainable. As businesses grow and evolve, their needs and requirements may change. Therefore, it is important that customized solutions are designed with scalability in mind, allowing them to be easily adapted and expanded as needed.

In conclusion, customized solutions offer a range of benefits for businesses, including increased flexibility, improved efficiency, and a competitive edge. By working closely with solution providers to develop tailored solutions, businesses can address their unique challenges and achieve their specific goals. As the business landscape continues to evolve, the demand for customized solutions is likely to grow, making them an essential component of modern business strategy.

For more information on how customized solutions can benefit various industries, you can explore AI & Blockchain Services for Art & Entertainment Industry, AI & Blockchain Solutions for Fintech & Banking Industry, and AI & Blockchain Development Services for Healthcare Industry.

8.3. Proven Methodologies

Proven methodologies refer to established and well-documented approaches that have been tested and validated over time. These methodologies provide a structured framework for achieving specific goals and objectives, ensuring that processes are carried out in a consistent and effective manner. In the context of business and project management, proven methodologies are essential for ensuring successful outcomes and minimizing risks.

One of the most widely recognized proven methodologies is the Project Management Institute's (PMI) Project Management Body of Knowledge (PMBOK). The PMBOK provides a comprehensive set of guidelines and best practices for managing projects, covering areas such as scope, schedule, cost, quality, and risk management. Following the PMBOK framework can improve the likelihood that projects are completed on time, within budget, and to the required quality standards.