Table Of Contents

Category

Computer Vision

Artificial Intelligence

1. Introduction to Computer Vision in Autonomous Vehicles

Computer vision is a critical technology that enables autonomous vehicles to interpret and understand their surroundings. By mimicking human visual perception, computer vision systems allow vehicles to make informed decisions based on visual data.

- Autonomous vehicles rely on a combination of sensors, including cameras, LiDAR, and radar, to gather information about their environment.

- Computer vision processes this data to identify objects, track movements, and assess distances.

- The technology enhances safety by enabling real-time analysis of road conditions, traffic signals, pedestrians, and other vehicles.

- Major companies in the automotive industry, such as Tesla, Waymo, and Ford, are investing heavily in computer vision for autonomous vehicles to improve their self-driving capabilities.

- The integration of computer vision with machine learning algorithms allows vehicles to learn from experience, improving their performance over time.

At Rapid Innovation, we understand the transformative potential of computer vision in autonomous driving. Our expertise in computer vision development positions us to help clients leverage this technology effectively, ensuring they achieve their goals efficiently and with greater ROI.

2. Fundamentals of Computer Vision

Computer vision encompasses a range of techniques and methodologies that enable machines to interpret visual information. Understanding the fundamentals is essential for developing effective systems for autonomous vehicles.

- Image acquisition: The first step involves capturing images or video from the vehicle's cameras.

- Image processing: This step involves enhancing and preparing the images for analysis.

- Feature extraction: Key features are identified and extracted from the images to facilitate recognition and classification.

- Object detection: The system identifies and locates objects within the visual data.

- Image classification: Objects are categorized based on their features and characteristics.

- Scene understanding: The system interprets the overall context of the scene, including spatial relationships and dynamics.

2.1. Image Processing

Image processing is a crucial component of computer vision that involves manipulating images to improve their quality and extract useful information.

- Preprocessing: This step includes techniques such as noise reduction, contrast enhancement, and normalization to prepare images for analysis.

- Filtering: Various filters (e.g., Gaussian, median) are applied to remove unwanted artifacts and enhance important features.

- Edge detection: Algorithms like the Canny edge detector help identify boundaries within images, which is essential for object recognition.

- Image segmentation: This process divides an image into meaningful regions or segments, making it easier to analyze specific areas.

- Morphological operations: Techniques such as dilation and erosion are used to manipulate the structure of objects within an image.

- Color space conversion: Images may be converted from one color space (e.g., RGB) to another (e.g., HSV) to facilitate better analysis and feature extraction.

By employing these image processing techniques, computer vision in autonomous vehicles can effectively interpret their surroundings, leading to safer and more efficient navigation. Partnering with Rapid Innovation allows clients to harness these advancements, ensuring they stay ahead in the competitive landscape of autonomous technology. Our tailored solutions not only enhance operational efficiency but also drive significant returns on investment.

2.2. Feature Detection and Extraction

Feature detection and extraction are crucial steps in computer vision that help in identifying and describing key elements within an image. These features can be points, edges, or regions that are significant for further analysis.

- Key Concepts:

- Feature Detection: The process of identifying points of interest in an image, such as corners, edges, or blobs.

- Feature Extraction: Involves transforming the detected features into a format that can be used for analysis, often resulting in feature descriptors.

- Common Techniques:

- Harris Corner Detector: Identifies corners in images, which are useful for tracking and matching.

- SIFT (Scale-Invariant Feature Transform): Detects and describes local features in images, robust to changes in scale and rotation.

- SURF (Speeded-Up Robust Features): Similar to SIFT but faster, making it suitable for real-time applications.

- Applications:

- Image stitching, where multiple images are combined into a single panorama.

- Object tracking in video sequences.

- Image retrieval systems that search for similar images based on features.

- Computer vision image segmentation techniques that help in partitioning an image into meaningful segments.

- Challenges:

- Variability in lighting and viewpoint can affect feature detection.

- Computational complexity can be high for large images or real-time applications.

2.3. Object Recognition

Object recognition is the process of identifying and classifying objects within an image or video. It involves understanding the content of the image and determining what objects are present.

- Key Concepts:

- Classification: Assigning a label to an object based on its features.

- Localization: Determining the position of an object within an image.

- Common Techniques:

- Convolutional Neural Networks (CNNs): Deep learning models that automatically learn features from images, achieving high accuracy in object recognition tasks.

- Region-based CNN (R-CNN): Combines region proposals with CNNs to improve detection accuracy.

- YOLO (You Only Look Once): A real-time object detection system that predicts bounding boxes and class probabilities directly from full images.

- Advanced methods and deep learning in computer vision have significantly improved object detection capabilities.

- Applications:

- Autonomous vehicles that recognize pedestrians, traffic signs, and other vehicles, utilizing applied deep learning and computer vision for self-driving cars.

- Security systems that identify intruders or suspicious objects.

- Augmented reality applications that overlay digital information on real-world objects.

- Challenges:

- Variability in object appearance due to occlusion, lighting, and background clutter.

- The need for large labeled datasets for training deep learning models, particularly in computer vision segmentation algorithms.

2.4. Machine Learning in Computer Vision

Machine learning plays a pivotal role in advancing computer vision technologies. It enables systems to learn from data and improve their performance over time without being explicitly programmed.

- Key Concepts:

- Supervised Learning: Involves training models on labeled datasets, where the input data is paired with the correct output.

- Unsupervised Learning: Models learn patterns and structures from unlabeled data, useful for clustering and anomaly detection.

- Common Techniques:

- Deep Learning: Utilizes neural networks with multiple layers to learn complex representations of data. CNNs are a popular choice for image-related tasks.

- Transfer Learning: Involves taking a pre-trained model and fine-tuning it on a new dataset, reducing the need for large amounts of labeled data.

- Reinforcement Learning: A type of learning where an agent learns to make decisions by receiving rewards or penalties based on its actions.

- Deep reinforcement learning in computer vision is an emerging area that combines these techniques for enhanced performance.

- Applications:

- Facial recognition systems that identify individuals in images or videos.

- Medical imaging analysis for detecting diseases from X-rays or MRIs.

- Image classification tasks in social media platforms for tagging and organizing content.

- Violence detection in video using computer vision techniques to enhance security measures.

- Challenges:

- Overfitting, where a model performs well on training data but poorly on unseen data.

- The requirement for large datasets and significant computational resources for training complex models, particularly in traditional computer vision techniques.

At Rapid Innovation, we leverage these advanced techniques in feature detection, object recognition, and machine learning to help our clients achieve their goals efficiently and effectively. By partnering with us, clients can expect enhanced ROI through improved accuracy in image analysis, faster processing times, and the ability to harness the power of AI and blockchain technologies for innovative solutions. Our expertise ensures that we can navigate the challenges of computer vision, providing tailored solutions that meet the unique needs of each client, including machine vision techniques in AI and classical computer vision techniques.

3. Sensors and Data Acquisition

At Rapid Innovation, we understand that sensors and data acquisition systems, such as thermocouple daq, strain gauge data acquisition, and load cell daq, are pivotal for gathering critical information about the environment across various applications, including robotics, autonomous vehicles, and smart cities. These technologies empower machines to perceive their surroundings and make informed decisions based on the data collected, ultimately driving efficiency and effectiveness in operations.

3.1. Cameras (Monocular, Stereo, and 360-degree)

Cameras are among the most widely utilized sensors for data acquisition, capturing visual information that can be processed to extract valuable insights.

- Monocular Cameras:

- Utilize a single lens to capture images.

- Provide 2D images, which can be analyzed for object detection and recognition.

- Commonly employed in applications such as surveillance, robotics, and augmented reality.

- Limitations include depth perception challenges, as they cannot gauge distances without additional information.

- Stereo Cameras:

- Comprise two lenses positioned at a fixed distance apart.

- Capture two images simultaneously, allowing for depth perception through triangulation.

- Particularly useful in applications requiring accurate distance measurements, such as autonomous navigation and 3D mapping.

- Capable of creating detailed 3D models of environments, enhancing the understanding of spatial relationships.

- 360-degree Cameras:

- Equipped with multiple lenses to capture a full panoramic view of the surroundings.

- Ideal for immersive experiences, such as virtual reality and surveillance.

- Provide comprehensive situational awareness, making them suitable for autonomous vehicles and robotics.

- Data processing can be complex due to the large volume of information generated.

3.2. LiDAR (Light Detection and Ranging)

LiDAR is a cutting-edge remote sensing technology that employs laser light to measure distances and create high-resolution maps of the environment.

- Working Principle:

- Emits laser pulses towards a target and measures the time it takes for the light to return.

- Calculates distances based on the speed of light, enabling precise mapping of surfaces and objects.

- Applications:

- Widely utilized in autonomous vehicles for obstacle detection and navigation.

- Essential in topographic mapping, forestry, and environmental monitoring.

- Provides detailed 3D models of landscapes, aiding in urban planning and construction.

- Advantages:

- Offers high accuracy and resolution compared to traditional sensors.

- Operates effectively in various lighting conditions, including darkness.

- Can penetrate vegetation, making it invaluable for applications in forestry and ecology.

- Limitations:

- The high cost of LiDAR systems can be a barrier for some applications.

- Data processing requires significant computational resources.

- Performance can be affected by weather conditions, such as heavy rain or fog.

By partnering with Rapid Innovation, clients can leverage our expertise in sensor technology and data acquisition, including strain gauge data acquisition systems and accelerometer daq, to enhance their operational capabilities. Our tailored solutions not only improve data accuracy but also drive greater ROI through informed decision-making and optimized processes. We are committed to helping you achieve your goals efficiently and effectively, ensuring that your investment in technology translates into tangible results.

3.3. Radar

Radar (Radio Detection and Ranging) is a critical technology used in autonomous vehicle perception for detecting and tracking objects in the environment. It operates by emitting radio waves and analyzing the reflected signals to determine the distance, speed, and direction of objects.

- Working Principle:

- Radar systems send out radio waves that bounce off objects.

- The time it takes for the waves to return helps calculate the distance to the object.

- Doppler effect is used to determine the speed of moving objects.

- Advantages:

- All-Weather Capability: Radar can function effectively in various weather conditions, including rain, fog, and snow, where optical sensors may struggle.

- Long Range Detection: Radar can detect objects at significant distances, making it suitable for highway driving.

- Speed Measurement: It can accurately measure the speed of moving vehicles, which is essential for adaptive cruise control and collision avoidance systems.

- Limitations:

- Resolution: Radar typically has lower resolution compared to cameras, making it challenging to identify small or closely spaced objects.

- Interference: Radar signals can be affected by other radar systems, leading to potential false readings.

- Applications in Autonomous Vehicles:

- Collision avoidance systems

- Adaptive cruise control

- Blind spot detection

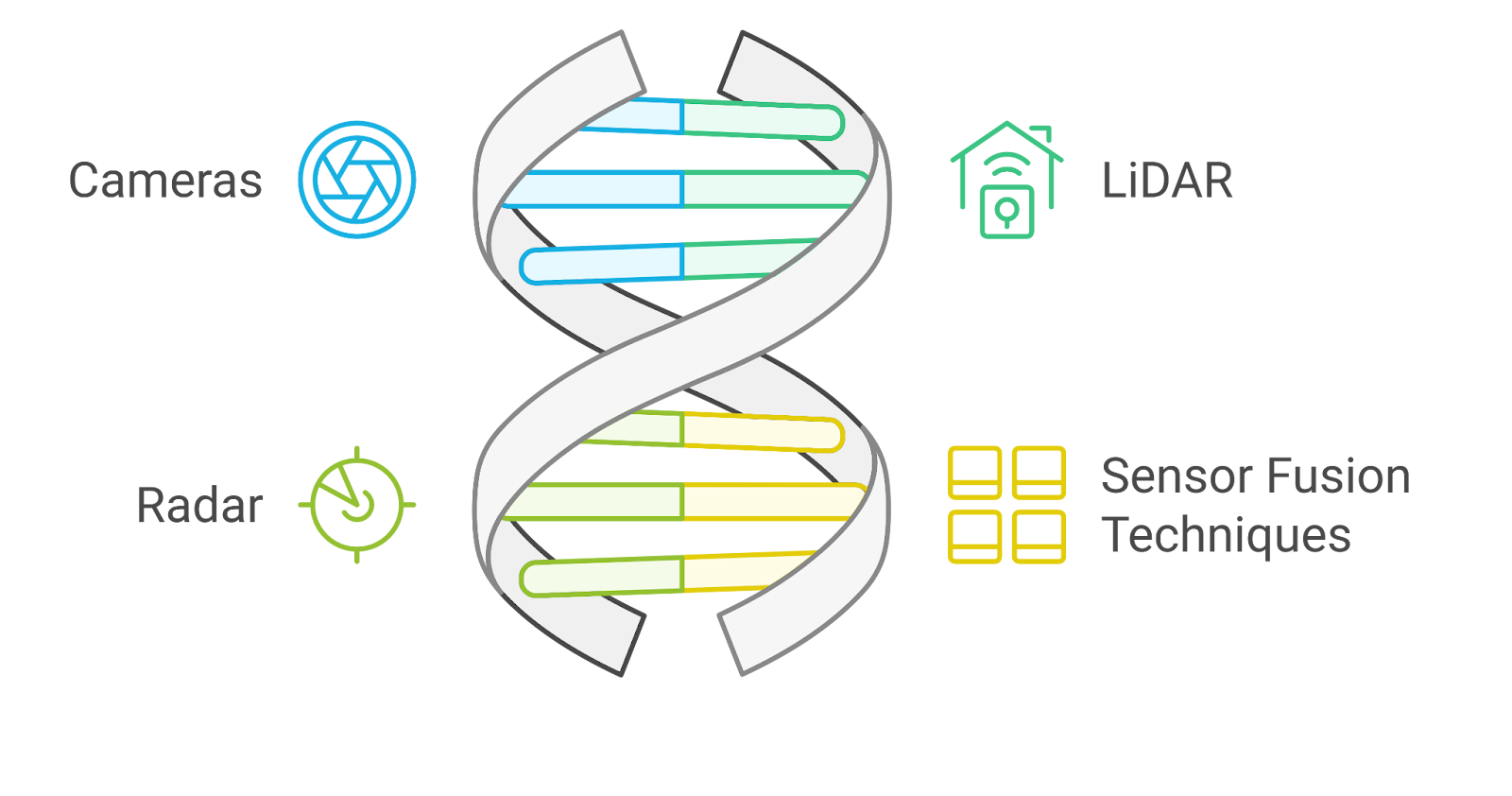

3.4. Sensor Fusion Techniques

Sensor fusion is the process of integrating data from multiple sensors to improve the accuracy and reliability of perception in autonomous driving perception. This technique combines information from various sources, such as cameras, LiDAR, and radar, to create a comprehensive understanding of the vehicle's surroundings.

- Types of Sensor Fusion:

- Low-Level Fusion: Combines raw data from sensors to create a unified data set.

- Mid-Level Fusion: Integrates processed data from sensors to enhance object detection and classification.

- High-Level Fusion: Merges information from different sources to make decisions and predictions about the environment.

- Benefits:

- Improved Accuracy: By leveraging the strengths of different sensors, sensor fusion can provide more accurate and reliable data.

- Redundancy: If one sensor fails or provides inaccurate data, others can compensate, enhancing system reliability.

- Robustness: Sensor fusion allows for better performance in diverse conditions, such as varying light and weather.

- Challenges:

- Complexity: Integrating data from multiple sensors requires sophisticated algorithms and processing power.

- Calibration: Sensors must be accurately calibrated to ensure that their data aligns correctly.

- Data Overload: Managing and processing large volumes of data from multiple sensors can be challenging.

- Common Techniques:

- Kalman filters

- Bayesian networks

- Neural networks

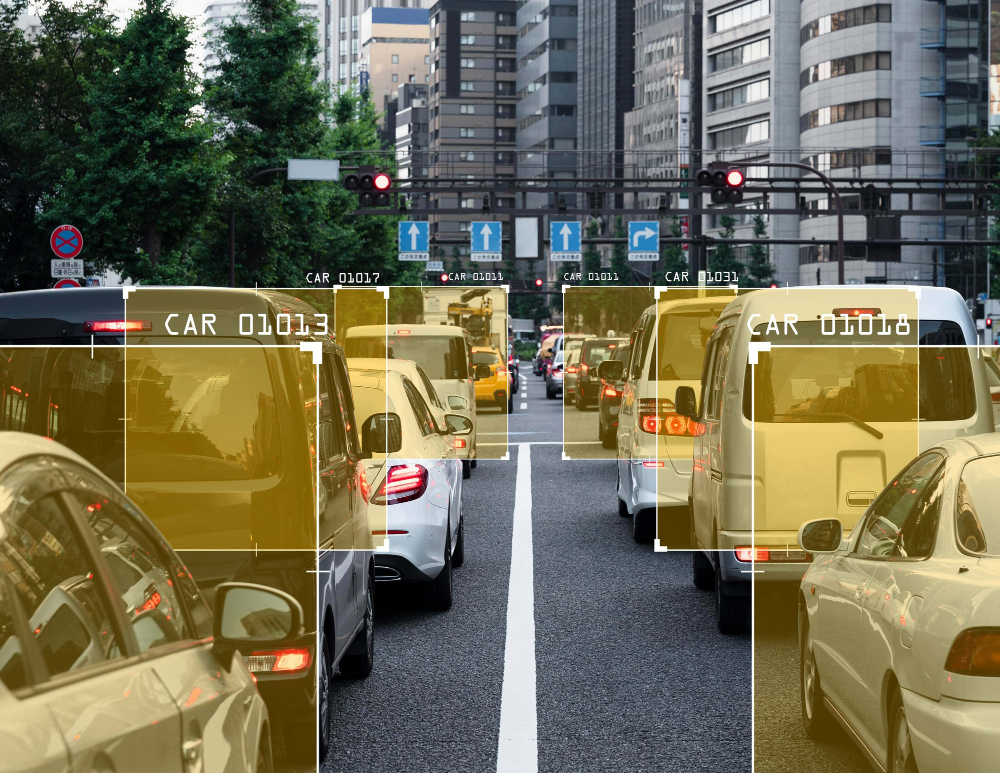

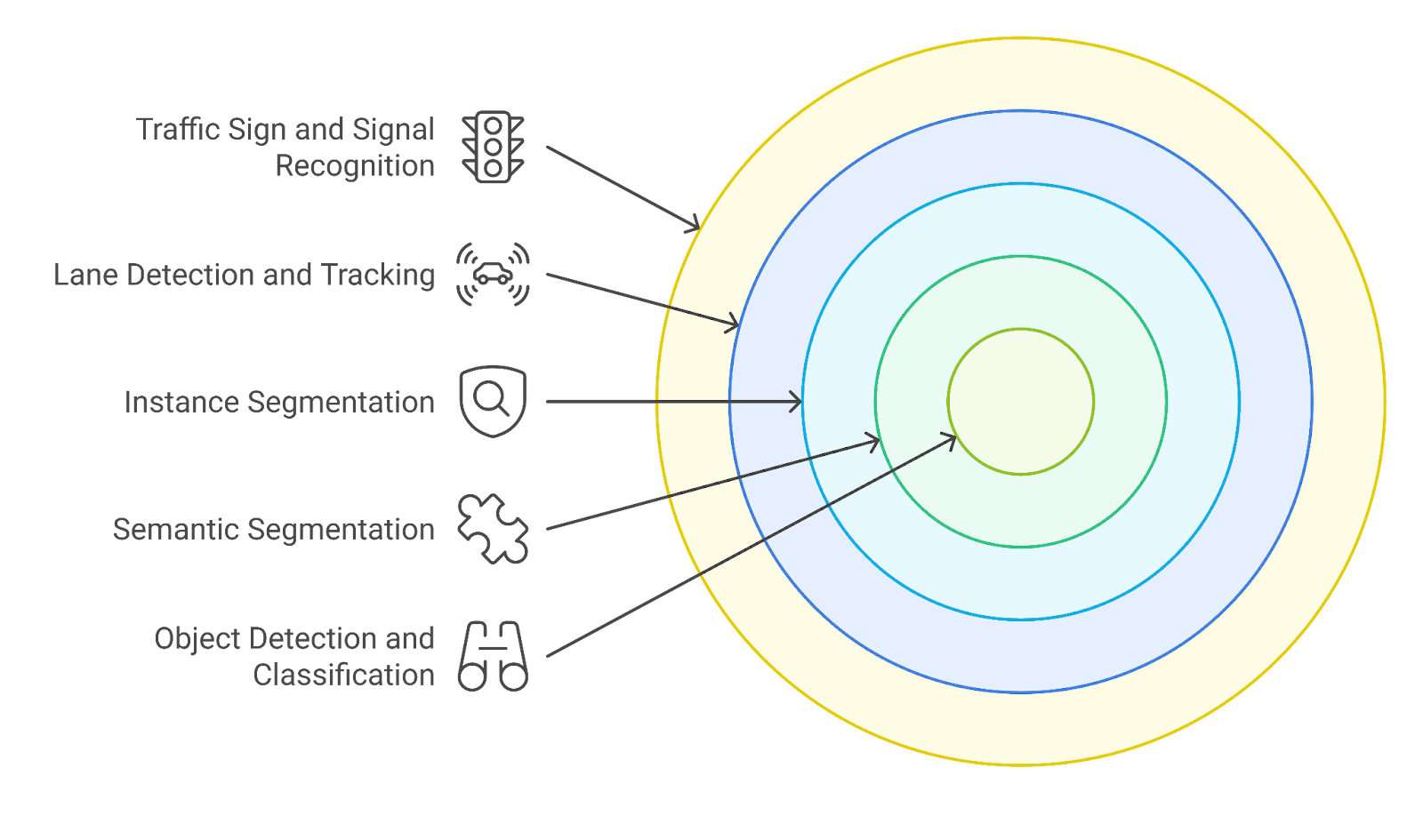

4. Perception Tasks in Autonomous Vehicles

Perception tasks are essential for enabling autonomous vehicles to understand and navigate their environment. These tasks involve interpreting data from various sensors to identify and track objects, assess the vehicle's surroundings, and make informed driving decisions.

- Key Perception Tasks:

- Object Detection: Identifying and locating objects such as vehicles, pedestrians, and obstacles in the environment.

- Object Classification: Determining the type of detected objects (e.g., car, truck, bicycle) to understand their behavior and potential risks.

- Tracking: Continuously monitoring the movement of detected objects to predict their future positions and actions.

- Semantic Segmentation: Classifying each pixel in an image to understand the scene better, distinguishing between road, sidewalk, and other elements.

- Technologies Used:

- Cameras: Provide high-resolution images for object detection and classification.

- LiDAR: Offers precise 3D mapping of the environment, useful for detecting and tracking objects.

- Radar: Complements other sensors by providing speed and distance information, especially in adverse weather conditions.

- Importance of Perception:

- Safety: Accurate perception is crucial for avoiding collisions and ensuring passenger safety.

- Navigation: Understanding the environment helps in route planning and navigation.

- Interaction: Perception enables vehicles to interact with other road users, such as yielding to pedestrians or responding to traffic signals.

- Future Trends:

- Enhanced AI Algorithms: Development of more sophisticated machine learning models for better perception accuracy.

- Integration of New Sensors: Exploring the use of additional sensors, such as thermal cameras and ultrasonic sensors, for improved environmental understanding.

- Real-Time Processing: Advancements in computing power will enable faster data processing, allowing for real-time decision-making.

At Rapid Innovation, we leverage our expertise in AI and blockchain technology to help clients integrate these advanced systems into their autonomous vehicle perception systems. By partnering with us, clients can expect enhanced safety, improved navigation, and a significant return on investment through the efficient deployment of cutting-edge technologies. Our tailored solutions ensure that your autonomous vehicle systems are not only effective but also future-proof, allowing you to stay ahead in a rapidly evolving market.

4.1. Object Detection and Classification

Object detection and classification are fundamental tasks in computer vision that involve identifying and categorizing objects within images or video frames. At Rapid Innovation, we leverage these technologies to help our clients achieve their goals efficiently and effectively.

- Object Detection:

- The process of locating instances of objects within an image.

- Typically involves drawing bounding boxes around detected objects.

- Algorithms like YOLO (You Only Look Once) and SSD (Single Shot MultiBox Detector) are popular for real-time detection.

- Applications include surveillance, autonomous vehicles, and image retrieval.

- Techniques such as object detection and classification segmentation are also employed to enhance accuracy.

- Object Classification:

- Involves assigning a label to an entire image or to the detected objects within it.

- Techniques often use convolutional neural networks (CNNs) for feature extraction.

- Common datasets for training include COCO (Common Objects in Context) and PASCAL VOC.

- Used in applications like facial recognition, medical imaging, and quality control in manufacturing.

- The difference between image classification and object detection is crucial for understanding their unique roles in computer vision.

- Combined Approach:

- Many modern systems integrate both detection and classification to provide comprehensive analysis.

- This allows for more complex tasks, such as identifying multiple objects in a scene and understanding their relationships.

- The difference between object detection and classification is often highlighted in discussions about their integration.

By implementing these advanced techniques, we have helped clients in various sectors enhance their operational efficiency, leading to greater ROI. For instance, a client in the retail industry utilized our object detection solutions to optimize inventory management, resulting in a 30% reduction in stock discrepancies.

4.2. Semantic Segmentation

Semantic segmentation is a pixel-level classification task that assigns a class label to each pixel in an image. Our expertise in this area allows us to provide tailored solutions that meet the specific needs of our clients.

- Definition:

- Unlike object detection, which identifies objects with bounding boxes, semantic segmentation provides a more detailed understanding of the scene.

- Each pixel is classified into categories such as "car," "tree," "road," etc.

- Techniques:

- Common methods include Fully Convolutional Networks (FCNs) and U-Net architectures.

- These models are trained on labeled datasets where each pixel is annotated with its corresponding class.

- Loss functions like cross-entropy are often used to optimize the model during training.

- Applications:

- Widely used in autonomous driving for road and obstacle detection.

- Important in medical imaging for segmenting organs or tumors.

- Useful in agricultural monitoring to assess crop health and land use.

- Challenges:

- Requires large amounts of labeled data for training.

- Struggles with occlusions and varying object scales.

- Real-time processing can be computationally intensive.

Our clients have seen significant improvements in their projects by utilizing our semantic segmentation capabilities. For example, a healthcare provider improved diagnostic accuracy by 25% through enhanced medical imaging analysis.

4.3. Instance Segmentation

Instance segmentation is an advanced form of segmentation that not only classifies each pixel but also differentiates between separate instances of the same object class. Rapid Innovation's expertise in this domain enables us to deliver cutting-edge solutions that drive results.

- Definition:

- Combines object detection and semantic segmentation.

- Each object instance is assigned a unique label, allowing for differentiation even among similar objects.

- Techniques:

- Popular algorithms include Mask R-CNN, which extends Faster R-CNN by adding a branch for predicting segmentation masks.

- Other methods involve using deep learning frameworks that can handle both bounding box detection and pixel-wise segmentation.

- Applications:

- Essential in robotics for object manipulation and interaction.

- Used in video surveillance to track individuals or vehicles.

- Important in augmented reality for overlaying digital content on real-world objects.

- Challenges:

- More complex than semantic segmentation due to the need for precise boundary delineation.

- Requires high-quality annotations for training, which can be labor-intensive.

- Performance can degrade in crowded scenes where objects overlap significantly.

By partnering with Rapid Innovation, clients can expect to harness the full potential of instance segmentation, leading to improved operational capabilities and enhanced user experiences. For instance, a client in the logistics sector improved their package sorting accuracy by 40% through our tailored instance segmentation solutions.

In conclusion, by collaborating with Rapid Innovation, clients can expect not only to achieve their goals but also to realize significant returns on their investments through our advanced AI and blockchain solutions.

4.4. Lane Detection and Tracking

Lane detection and tracking are critical components of advanced driver-assistance systems (ADAS) and autonomous vehicles. This lane detection technology enables vehicles to understand their position on the road and maintain safe driving practices.

- Functionality:

- Uses computer vision algorithms to identify lane markings on the road.

- Employs techniques like Hough Transform and Convolutional Neural Networks (CNNs) for accurate detection.

- Challenges:

- Variability in lane markings due to weather conditions, road wear, or construction.

- Complex scenarios such as merging lanes or multi-lane roads can complicate detection.

- Technologies Used:

- Cameras and LiDAR sensors are commonly used to gather data.

- Machine learning models are trained on large datasets to improve accuracy.

- Applications:

- Lane keeping assist systems that help drivers stay within their lane.

- Autonomous vehicles that require precise lane tracking for navigation.

4.5. Traffic Sign and Signal Recognition

Traffic sign and signal recognition is essential for ensuring that vehicles can respond appropriately to road conditions and regulations. This technology enhances safety and compliance with traffic laws.

- Functionality:

- Utilizes image processing techniques to identify and classify traffic signs and signals.

- Algorithms can recognize various signs, including stop signs, yield signs, and speed limits.

- Challenges:

- Variability in sign designs and conditions (e.g., obscured by foliage or damaged).

- Different countries have different traffic sign standards, complicating recognition for global applications.

- Technologies Used:

- Deep learning models, particularly CNNs, are effective for image classification tasks.

- Integration with GPS and mapping data to provide context-aware information.

- Applications:

- Advanced navigation systems that provide real-time updates to drivers.

- Autonomous vehicles that need to interpret traffic signals for safe operation.

- Advanced navigation systems that provide real-time updates to drivers.

5. 3D Vision and Depth Perception

3D vision and depth perception are vital for understanding the environment around a vehicle. This technology allows vehicles to perceive their surroundings in three dimensions, enhancing navigation and obstacle avoidance.

- Functionality:

- Combines data from multiple sensors (e.g., cameras, LiDAR, and radar) to create a 3D representation of the environment.

- Depth perception helps in determining the distance to objects, which is crucial for safe driving.

- Challenges:

- Sensor fusion can be complex, requiring sophisticated algorithms to integrate data from different sources.

- Environmental factors like fog, rain, or low light can affect sensor performance.

- Technologies Used:

- Stereo vision systems use two or more cameras to estimate depth.

- LiDAR provides precise distance measurements by emitting laser pulses and measuring their return time.

- Applications:

- Collision avoidance systems that detect obstacles in real-time.

- Enhanced navigation systems that provide a more accurate representation of the vehicle's surroundings.

At Rapid Innovation, we understand the complexities and challenges associated with implementing advanced technologies like lane detection, lane detection autonomous driving, traffic sign recognition, and 3D vision. Our expertise in AI and blockchain development allows us to provide tailored solutions that enhance the efficiency and effectiveness of your projects. By partnering with us, clients can expect greater ROI through improved safety features, enhanced user experiences, and streamlined operations. Our commitment to innovation ensures that you stay ahead in a rapidly evolving technological landscape. Let us help you achieve your goals with precision and confidence. For more information on related technologies, visit Object Recognition | Advanced AI-Powered Solutions.

5.1. Stereo Vision

Stereo vision is a technique used to perceive depth and three-dimensional structures from two-dimensional images. It mimics human binocular vision, where the brain combines images from both eyes to gauge distance and depth.

- Principle of Operation:

- Two cameras are positioned at a fixed distance apart.

- Each camera captures an image of the same scene from slightly different angles.

- The disparity between the two images is analyzed to calculate depth information.

- Applications:

- Robotics: Enables robots to navigate and interact with their environment.

- Autonomous Vehicles: Helps in obstacle detection and distance measurement.

- 3D Reconstruction: Used in creating 3D models from 2D images, including low polygon modeling and polygon modeling.

- Challenges:

- Occlusions: Objects blocking the view of others can complicate depth perception.

- Lighting Conditions: Variations in light can affect image quality and depth calculations.

- Calibration: Accurate calibration of cameras is essential for precise measurements.

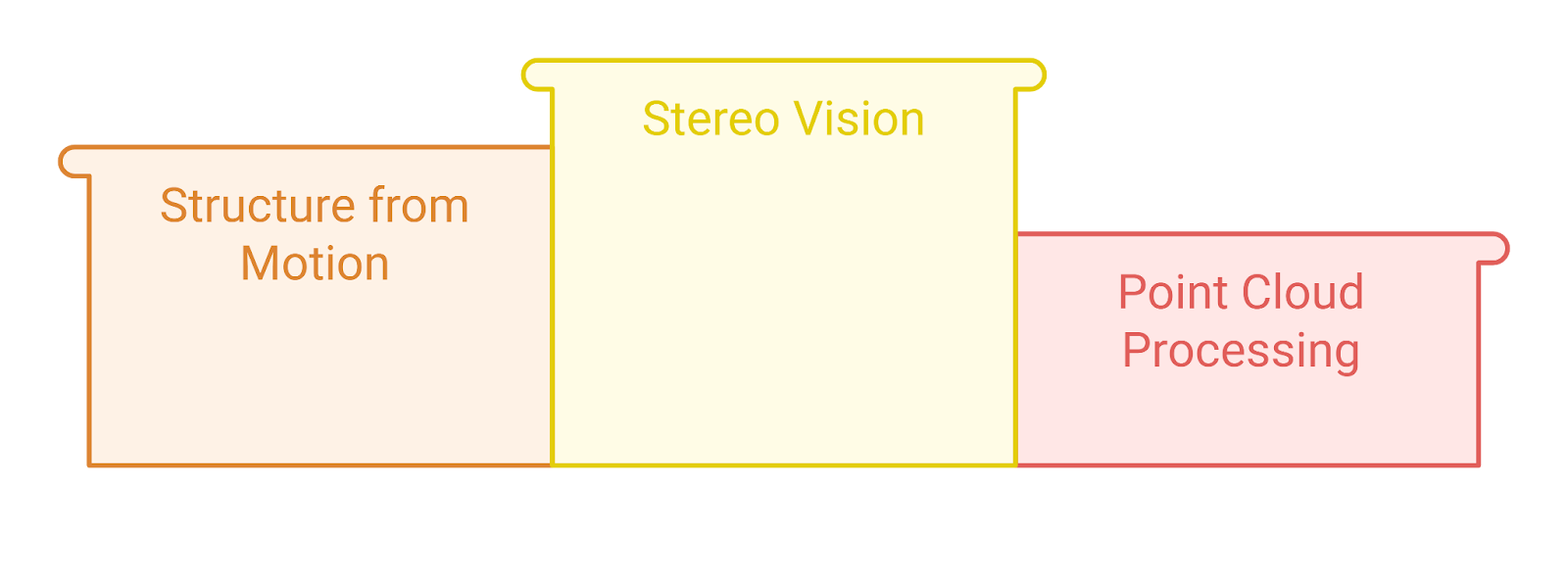

5.2. Structure from Motion

Structure from Motion (SfM) is a photogrammetric technique that reconstructs three-dimensional structures from a series of two-dimensional images taken from different viewpoints.

- How It Works:

- Multiple images of a scene are captured from various angles.

- Key points are identified and matched across the images.

- The relative positions of the cameras are estimated, and a 3D point cloud is generated.

- Applications:

- Archaeology: Used to document and reconstruct historical sites.

- Virtual Reality: Creates immersive environments from real-world locations.

- Geographic Information Systems (GIS): Helps in mapping and analyzing terrain, including applications in 3D modeling techniques and methods.

- Advantages:

- Non-invasive: Can capture data without physical contact with the subject.

- Cost-effective: Utilizes standard cameras and software for processing.

- Versatile: Applicable in various fields, including film, gaming, and urban planning.

- Limitations:

- Requires a significant number of images for accurate reconstruction.

- Sensitive to motion blur and changes in lighting.

- Computationally intensive, requiring robust algorithms for processing.

5.3. Point Cloud Processing

Point cloud processing involves the manipulation and analysis of point clouds, which are sets of data points in a three-dimensional coordinate system. These points represent the external surface of an object or scene.

- Key Processes:

- Filtering: Removing noise and outliers to improve data quality.

- Segmentation: Dividing the point cloud into meaningful clusters or regions.

- Registration: Aligning multiple point clouds to create a unified model.

- Applications:

- 3D Modeling: Used in architecture and engineering for creating detailed models, including zbrush hard surface modeling and sculpt modeling.

- Robotics: Assists in navigation and environment mapping.

- Medical Imaging: Helps in visualizing complex anatomical structures.

- Techniques:

- Surface Reconstruction: Creating a continuous surface from discrete points, applicable in 3D polygon model creation.

- Feature Extraction: Identifying key characteristics within the point cloud for further analysis.

- Machine Learning: Applying algorithms to classify and interpret point cloud data.

- Challenges:

- Data Density: High-density point clouds can be difficult to process and visualize.

- Computational Load: Requires significant processing power and memory.

- Accuracy: Ensuring precision in measurements and reconstructions can be complex.

At Rapid Innovation, we leverage advanced techniques like stereo vision, structure from motion, and point cloud processing to help our clients achieve their goals efficiently and effectively. By integrating these technologies into your projects, we can enhance your operational capabilities, leading to greater ROI.

Our expertise in AI and blockchain development allows us to provide tailored solutions that not only meet your immediate needs but also position you for future growth. When you partner with us, you can expect benefits such as improved data accuracy, cost savings through efficient processes, and innovative solutions that keep you ahead of the competition. Let us help you transform your vision into reality with our knowledge in 3D modeling methods and blender modeling techniques.

6. Motion Estimation and Tracking

At Rapid Innovation, we recognize that motion estimation and tracking are pivotal in the realm of computer vision. These techniques empower systems to comprehend and interpret the movement of objects within a scene, making them indispensable across various applications, including robotics, autonomous vehicles, and video analysis. By leveraging our expertise in these areas, we can help clients achieve their goals efficiently and effectively, ultimately leading to greater ROI.

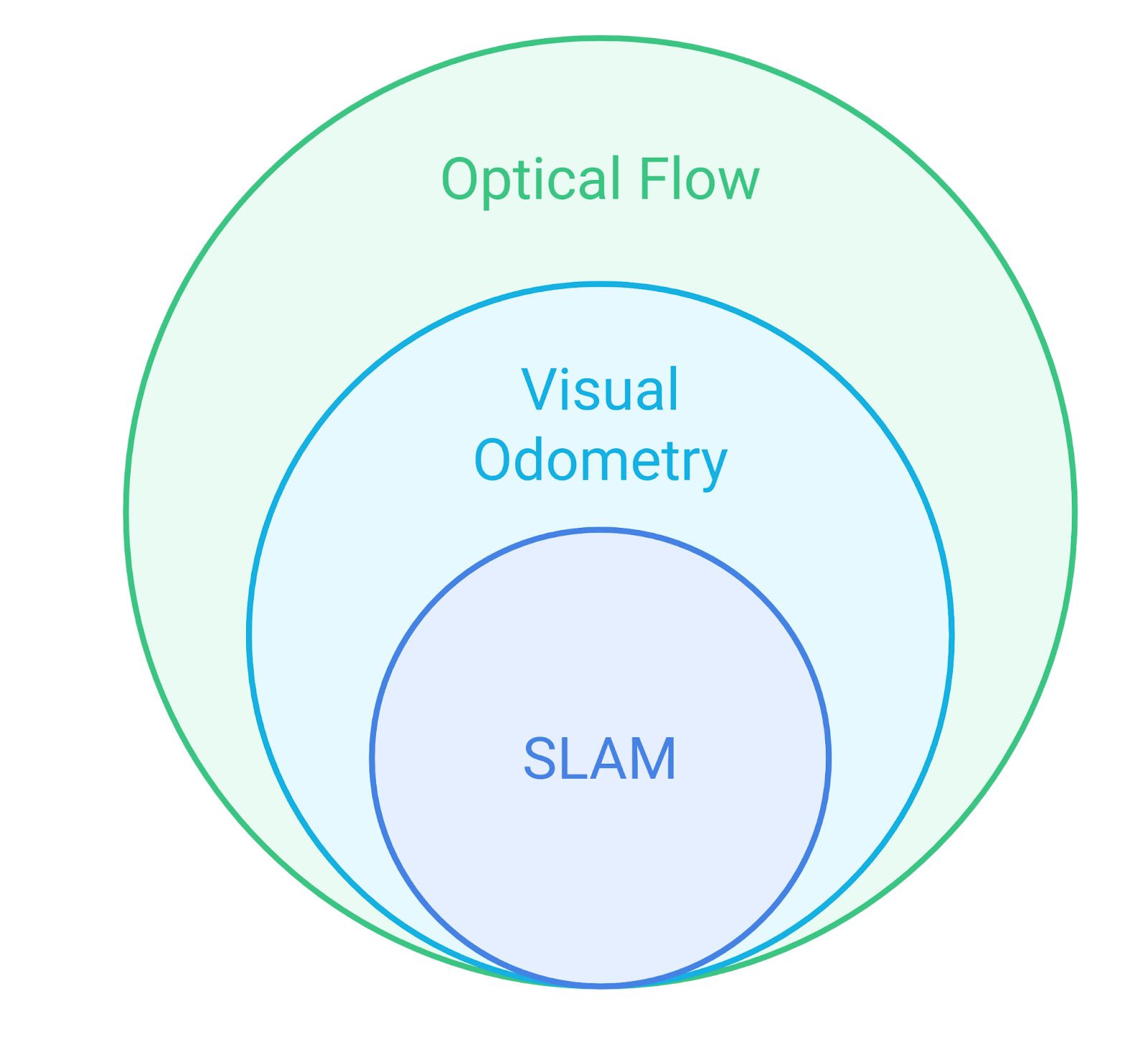

6.1. Optical Flow

Optical flow refers to the pattern of apparent motion of objects in a visual scene based on the movement of brightness patterns between two consecutive frames. It is a fundamental technique for motion estimation and tracking.

- Key Concepts:

- Optical flow is derived from the assumption that the intensity of pixels remains constant over time.

- It calculates the motion vector for each pixel, indicating how much and in which direction the pixel has moved.

- Applications:

- Object tracking: Helps in following moving objects in video sequences, enhancing surveillance and monitoring systems.

- Motion analysis: Used in sports analytics to study player movements, providing teams with insights to improve performance.

- Video compression: Assists in reducing data by predicting motion between frames, optimizing storage and bandwidth usage.

- Methods:

- Lucas-Kanade method: A widely used differential method that assumes a small motion between frames, ideal for real-time applications.

- Horn-Schunck method: A global method that imposes smoothness constraints on the flow field, ensuring more accurate results in complex scenes.

- Challenges:

- Occlusions: When an object moves behind another, it can disrupt flow estimation, necessitating advanced algorithms to mitigate this issue.

- Illumination changes: Variations in lighting can affect pixel intensity, complicating flow calculations, which we address through robust preprocessing techniques.

6.2. Visual Odometry

Visual odometry is the process of estimating the position and orientation of a camera by analyzing the sequence of images it captures. It is essential for navigation in robotics and autonomous systems.

- Key Concepts:

- Visual odometry uses features extracted from images to determine the camera's movement over time.

- It typically involves two main steps: feature extraction and motion estimation.

- Applications:

- Autonomous vehicles: Helps in real-time navigation and obstacle avoidance, ensuring safety and efficiency on the road.

- Augmented reality: Enhances user experience by accurately tracking the user's position, leading to more immersive applications.

- Robotics: Enables robots to navigate and map their environment, improving operational efficiency in various industries.

- Methods:

- Monocular visual odometry: Uses a single camera to estimate motion, often relying on depth estimation techniques, making it cost-effective.

- Stereo visual odometry: Utilizes two cameras to provide depth information directly, improving accuracy and reliability in dynamic environments.

- Challenges:

- Scale ambiguity: Monocular systems struggle with determining the actual scale of movement, which we address through advanced calibration techniques.

- Dynamic environments: Moving objects can introduce noise and inaccuracies in motion estimation, requiring sophisticated filtering methods.

By partnering with Rapid Innovation, clients can expect to harness the full potential of motion estimation and tracking technologies. Our tailored solutions not only enhance operational efficiency but also drive significant cost savings and improved performance metrics. Let us help you navigate the complexities of computer vision and achieve your strategic objectives with confidence.

6.3. Simultaneous Localization and Mapping (SLAM)

Simultaneous Localization and Mapping (SLAM) is a critical technology in robotics and computer vision that enables a device to create a map of an unknown environment while simultaneously keeping track of its own location within that environment. This technology is often referred to as simultaneous localization and mapping slam.

Key components of SLAM:

- Localization: Determining the position of the robot or device in the environment, which is essential for slam localization.

- Mapping: Creating a representation of the environment, which can be a 2D or 3D map, a process known as slam mapping.

Applications of SLAM:

- Robotics: Used in autonomous robots for navigation and obstacle avoidance, a field often referred to as slam robotics.

- Augmented Reality (AR): Helps devices understand their surroundings to overlay digital information accurately, as seen in augmented reality slam applications.

- Self-driving Cars: Essential for real-time navigation and understanding of the vehicle's environment, leveraging simultaneous localization and mapping applications.

Types of SLAM:

- Visual SLAM: Utilizes cameras to gather visual information for mapping and localization, also known as visual simultaneous localization and mapping.

- Lidar SLAM: Employs laser scanning technology to create detailed maps of the environment.

- Inertial SLAM: Combines data from inertial measurement units (IMUs) with other sensors for improved accuracy.

Challenges in SLAM:

- Data Association: Correctly matching observed features with previously mapped features.

- Loop Closure: Recognizing when the device returns to a previously visited location to correct drift in the map.

- Dynamic Environments: Adapting to changes in the environment, such as moving objects.

7. Environmental Understanding

Environmental understanding refers to the ability of machines and systems to perceive, interpret, and interact with their surroundings. This capability is essential for various applications, including robotics, autonomous vehicles, and smart cities.

Importance of Environmental Understanding:

- Enhances the ability of machines to operate autonomously.

- Improves safety and efficiency in navigation and task execution.

- Facilitates human-robot interaction by enabling machines to understand human actions and intentions.

Components of Environmental Understanding:

- Perception: Gathering data from sensors (cameras, Lidar, etc.) to understand the environment.

- Interpretation: Analyzing the gathered data to identify objects, obstacles, and relevant features.

- Decision Making: Using the interpreted data to make informed decisions about navigation and interaction.

7.1. Scene Understanding

Scene understanding is a subset of environmental understanding that focuses specifically on interpreting the visual aspects of a scene. It involves recognizing objects, their relationships, and the overall context of the environment.

Key aspects of Scene Understanding:

- Object Detection: Identifying and classifying objects within a scene.

- Semantic Segmentation: Assigning labels to each pixel in an image to delineate different objects and regions.

- Depth Estimation: Determining the distance of objects from the camera to create a 3D representation of the scene.

Techniques used in Scene Understanding:

- Deep Learning: Utilizing neural networks to improve accuracy in object detection and classification.

- Computer Vision Algorithms: Implementing algorithms for feature extraction and image processing.

- 3D Reconstruction: Creating a three-dimensional model of the scene from 2D images.

Applications of Scene Understanding:

- Autonomous Vehicles: Enabling cars to recognize road signs, pedestrians, and other vehicles.

- Robotics: Allowing robots to navigate complex environments and perform tasks like picking and placing objects.

- Smart Surveillance: Enhancing security systems by identifying suspicious activities or objects in real-time.

Challenges in Scene Understanding:

- Variability in Scenes: Dealing with different lighting conditions, occlusions, and cluttered environments.

- Real-time Processing: Ensuring that scene understanding occurs quickly enough for applications like autonomous driving.

- Generalization: Developing models that can accurately interpret scenes they have not encountered before.

7.2. Behavior Prediction of Other Road Users

At Rapid Innovation, we understand that behavior prediction is crucial for the safe operation of autonomous vehicles. By accurately understanding the intentions and actions of other road users—such as pedestrians, cyclists, and other vehicles—autonomous systems can make informed decisions that enhance safety and efficiency. This is particularly important in the context of behavior prediction autonomous driving.

- Our predictive models analyze historical data to forecast future actions, ensuring that your autonomous systems are equipped with the best insights.

- We employ advanced machine learning algorithms, particularly recurrent neural networks (RNNs), to enhance the accuracy of behavior predictions.

- Key factors influencing behavior prediction include:

- Speed and direction of other road users

- Environmental context (e.g., traffic signals, road conditions)

- Social cues (e.g., eye contact, body language)

- By utilizing real-time data from sensors such as LiDAR and cameras, we significantly enhance prediction accuracy.

- Our collaborative filtering techniques improve predictions by considering the behavior of similar road users, allowing for more nuanced decision-making.

- Integrating behavior prediction into decision-making processes is essential for collision avoidance and safe navigation, and we are here to help you achieve that. Our focus on autonomous vehicle behavior prediction ensures that we stay ahead in this critical area.

7.3. Obstacle Avoidance

Obstacle avoidance is a fundamental capability for autonomous vehicles, and at Rapid Innovation, we ensure that your systems can navigate safely in dynamic environments.

- Our approach involves detecting, classifying, and responding to obstacles in real-time, which is critical for operational safety.

- Key components of our obstacle avoidance systems include:

- Sensor fusion: We combine data from multiple sensors (LiDAR, radar, cameras) to provide a comprehensive view of the environment.

- Path planning algorithms: Our algorithms determine the best route while avoiding obstacles, optimizing for safety and efficiency.

- Control systems: These systems execute the planned path, adjusting speed and direction as necessary to ensure smooth navigation.

- Techniques we utilize in obstacle avoidance include:

- Static and dynamic obstacle detection using advanced computer vision.

- Predictive algorithms to anticipate the movement of moving obstacles.

- Reinforcement learning to improve decision-making in complex scenarios.

- We address challenges such as:

- Differentiating between temporary and permanent obstacles.

- Adapting to unpredictable behavior from other road users.

- Operating in various weather conditions that may affect sensor performance.

8. Deep Learning Approaches in Autonomous Vehicle Vision

Deep learning has revolutionized the field of computer vision, and at Rapid Innovation, we leverage this technology to significantly enhance the capabilities of autonomous vehicles.

- We utilize Convolutional Neural Networks (CNNs) for image recognition tasks, enabling vehicles to identify objects, road signs, and lane markings with high accuracy.

- Key advantages of our deep learning solutions in autonomous vehicle vision include:

- High accuracy in object detection and classification.

- The ability to learn from vast amounts of data, improving performance over time.

- Robustness to variations in lighting, weather, and environmental conditions.

- Our applications of deep learning in autonomous vehicle vision include:

- Semantic segmentation: Classifying each pixel in an image to better understand the scene.

- Object tracking: Following the movement of identified objects over time.

- Scene understanding: Integrating information from multiple sources to create a comprehensive view of the environment.

- We also address challenges faced in deep learning for autonomous vehicles, such as:

- The need for large, annotated datasets for training models.

- Ensuring real-time processing capabilities for immediate decision-making.

- Addressing ethical concerns related to data privacy and bias in training datasets.

- Our ongoing research focuses on improving model efficiency and interpretability, making our deep learning approaches more reliable for autonomous driving applications.

By partnering with Rapid Innovation, you can expect to achieve greater ROI through enhanced safety, efficiency, and reliability in your autonomous vehicle systems. Our expertise in AI and blockchain development ensures that you are at the forefront of technological advancements, allowing you to meet your goals effectively and efficiently.

8.1. Convolutional Neural Networks (CNNs)

Convolutional Neural Networks are a class of deep learning models primarily used for processing structured grid data, such as images. They are designed to automatically and adaptively learn spatial hierarchies of features from input images.

Key Characteristics:

- Convolutional Layers: These layers apply convolution operations to the input, allowing the model to learn local patterns. This is a fundamental aspect of convolutional neural network architecture.

- Pooling Layers: These layers reduce the spatial dimensions of the data, helping to decrease computation and control overfitting.

- Activation Functions: Commonly used functions like ReLU (Rectified Linear Unit) introduce non-linearity into the model.

Applications:

- Image Classification: CNNs excel in tasks like identifying objects in images, as demonstrated in the ImageNet competition, where architectures like AlexNet and SqueezeNet have shown remarkable performance.

- Object Detection: They are utilized in systems that detect and localize objects within images, such as YOLO and Faster R-CNN.

- Medical Image Analysis: CNNs assist in diagnosing diseases by analyzing medical images like X-rays and MRIs.

Advantages:

- Parameter Sharing: This characteristic reduces the number of parameters, making the model more efficient.

- Translation Invariance: CNNs can recognize objects regardless of their position in the image.

8.2. Recurrent Neural Networks (RNNs)

Recurrent Neural Networks are a type of neural network designed for sequential data. They are particularly effective for tasks where context and order are important, such as time series analysis and natural language processing.

Key Characteristics:

- Recurrent Connections: RNNs have connections that loop back on themselves, allowing them to maintain a memory of previous inputs.

- Hidden States: They utilize hidden states to capture information from previous time steps, enabling the model to learn temporal dependencies. LSTM networks and LSTM architecture are popular variations that address some of the limitations of standard RNNs.

Applications:

- Natural Language Processing: RNNs are widely used for tasks like language modeling, text generation, and machine translation.

- Speech Recognition: They assist in converting spoken language into text by processing audio signals sequentially.

- Time Series Prediction: RNNs can forecast future values based on historical data, which is useful in finance and weather forecasting.

Challenges:

- Vanishing Gradient Problem: RNNs can struggle to learn long-range dependencies due to gradients diminishing over time.

- Training Difficulty: They can be more challenging to train compared to feedforward networks.

8.3. Generative Adversarial Networks (GANs)

Generative Adversarial Networks are a class of machine learning frameworks designed for generating new data samples that resemble a given dataset. They consist of two neural networks, the generator and the discriminator, that compete against each other.

Key Characteristics:

- Generator: This network creates new data samples from random noise, aiming to produce outputs indistinguishable from real data.

- Discriminator: This network evaluates the authenticity of the generated samples, distinguishing between real and fake data.

Applications:

- Image Generation: GANs can create realistic images, often used in art and design.

- Data Augmentation: They help in generating additional training data, improving model performance in scenarios with limited data.

- Video Generation: GANs are being explored for generating realistic video sequences.

Advantages:

- High-Quality Outputs: GANs can produce outputs that are often indistinguishable from real data.

- Versatility: They can be adapted for various types of data, including images, audio, and text.

Challenges:

- Training Instability: The adversarial nature of GANs can lead to difficulties in training, often resulting in mode collapse.

- Resource Intensive: Training GANs can require significant computational resources and time.

At Rapid Innovation, we leverage these advanced neural network architectures, including convolutional neural network structure and neural architecture search, to help our clients achieve their goals efficiently and effectively. By integrating CNNs, RNNs, and GANs into tailored solutions, we enable businesses to enhance their operational capabilities, improve decision-making processes, and ultimately achieve greater ROI. Partnering with us means you can expect innovative solutions, expert guidance, and a commitment to driving your success in the rapidly evolving landscape of AI and blockchain technology.

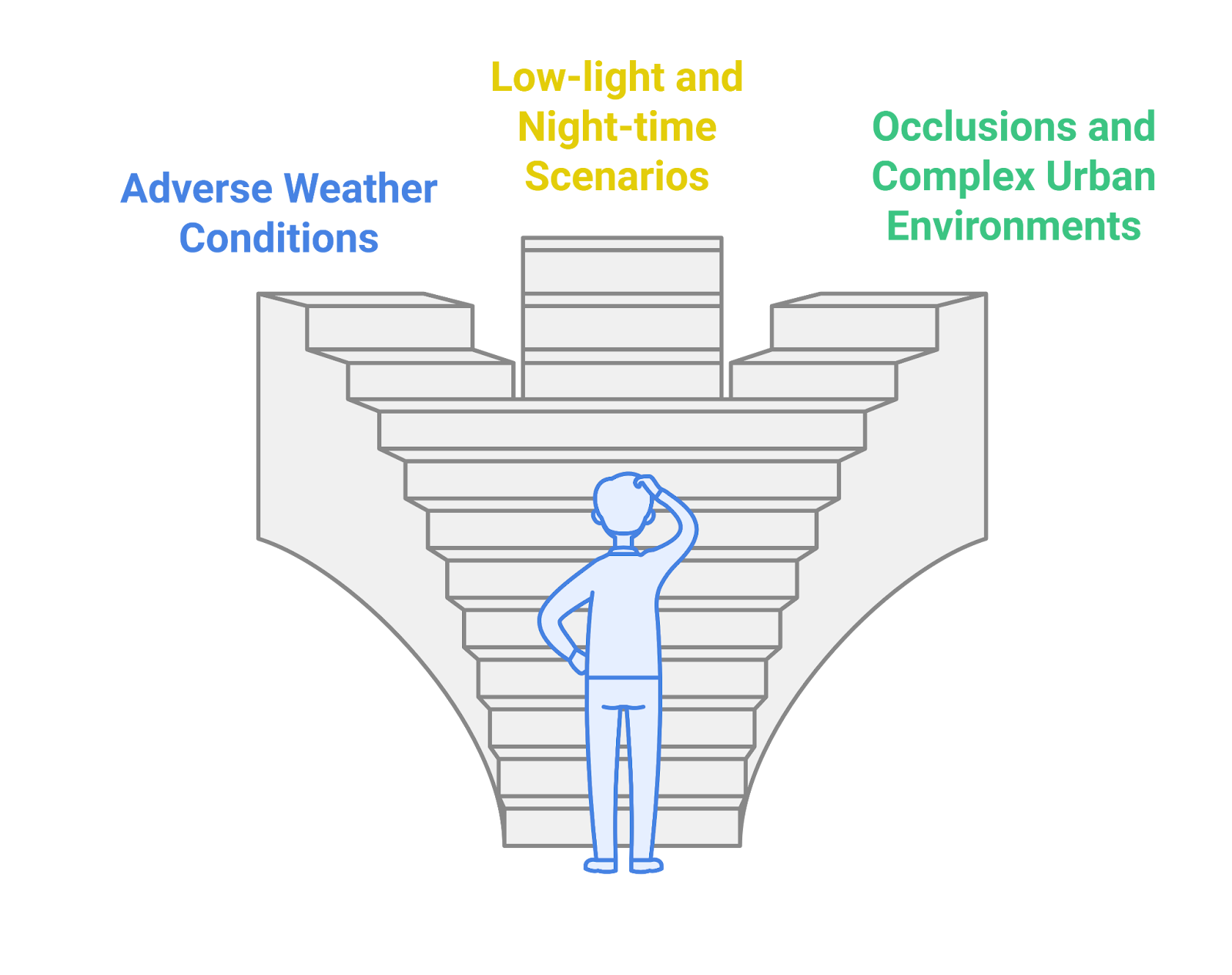

9. Challenges and Limitations

The implementation of various technologies, particularly in fields like transportation, agriculture, and outdoor activities, faces several challenges and limitations, including technology challenges and problems in technology today. Understanding these obstacles is crucial for improving systems and ensuring safety and efficiency.

9.1. Adverse Weather Conditions

Adverse weather conditions can significantly impact the performance and reliability of various technologies.

- Rain, snow, and ice can reduce visibility and traction, affecting vehicles and machinery.

- Sensors and cameras may struggle to function optimally in heavy rain or snow, leading to inaccurate data collection.

- Wind can disrupt aerial technologies, such as drones, making them difficult to control and operate safely.

- Temperature extremes can affect battery performance, particularly in electric vehicles and portable devices.

- Weather-related disruptions can lead to delays in transportation and logistics, impacting supply chains.

These factors necessitate the development of more robust systems that can withstand and adapt to changing weather conditions. At Rapid Innovation, we specialize in creating resilient solutions that enhance operational efficiency, even in adverse weather scenarios, ensuring that our clients can maintain productivity and minimize disruptions. However, we also recognize the technological problems that can arise, such as security and privacy issues in IoT, which must be addressed.

9.2. Low-light and Night-time Scenarios

Low-light and night-time scenarios present unique challenges for technology deployment and operation.

- Reduced visibility can hinder the effectiveness of cameras and sensors, making it difficult to capture accurate data.

- Many autonomous systems rely on visual recognition, which can be compromised in low-light conditions.

- Night-time operations may require additional lighting, which can increase energy consumption and operational costs.

- Safety concerns arise in low-light environments, as the risk of accidents and collisions increases.

- Certain technologies may not be designed for night-time use, limiting their functionality and effectiveness.

Addressing these challenges requires innovative solutions, such as advanced imaging technologies and improved sensor capabilities, to enhance performance in low-light conditions. Rapid Innovation is committed to developing cutting-edge technologies that empower our clients to operate effectively, regardless of the time of day or environmental conditions. By partnering with us, clients can expect enhanced safety, improved data accuracy, and ultimately, a greater return on investment. Additionally, we are aware of the education technology issues and healthcare technology issues that can impact the adoption and effectiveness of new technologies in various sectors.

9.3. Occlusions and Complex Urban Environments

Occlusions in urban environments pose significant challenges for various technologies, particularly in fields like robotics, autonomous vehicles, and augmented reality. These challenges arise from the dense arrangement of buildings, vehicles, and other structures that can obstruct sensors and cameras.

- Types of Occlusions:

- Static occlusions: Buildings, trees, and other permanent structures that block the line of sight.

- Dynamic occlusions: Moving objects such as pedestrians, cars, and cyclists that can temporarily obstruct sensors.

- Impact on Technology:

- Reduced accuracy: Occlusions can lead to incomplete data, affecting navigation and object detection.

- Increased complexity: Algorithms must account for unpredictable movements and varying environmental conditions.

- Solutions and Strategies:

- Sensor fusion: Combining data from multiple sensors (e.g., LiDAR, cameras) to create a more comprehensive view of the environment.

- Machine learning: Utilizing AI to predict and fill in gaps in data caused by occlusions.

- 3D mapping: Creating detailed 3D models of urban areas to better understand and navigate complex environments.

- Real-world Applications:

- Autonomous vehicles: Need to navigate safely through urban landscapes while avoiding obstacles.

- Robotics: Robots operating in urban settings must adapt to dynamic environments and occlusions.

- Augmented reality: Applications must accurately overlay digital information onto the physical world, which can be hindered by occlusions.

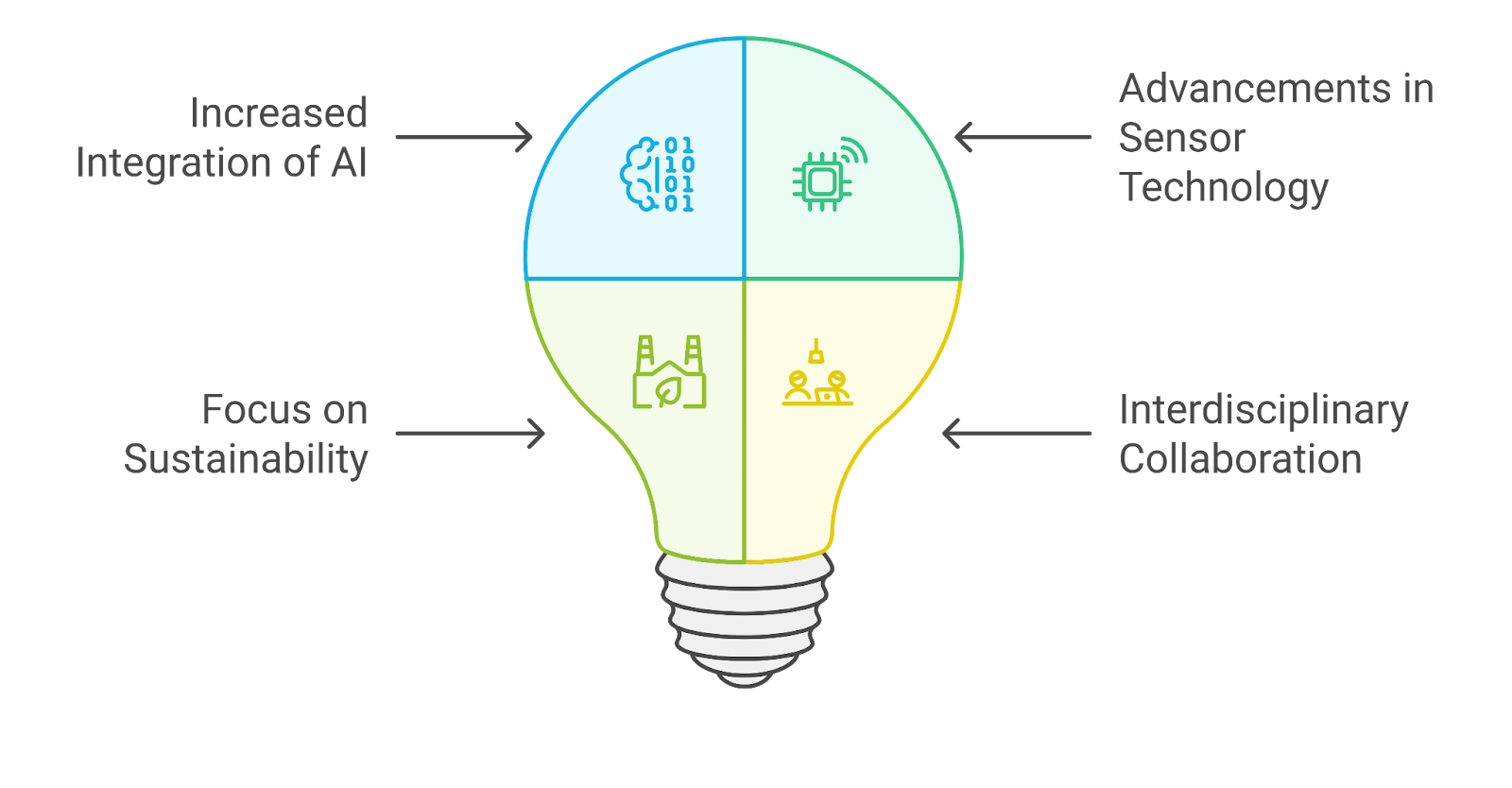

10. Future Trends and Research Directions

As technology continues to evolve, several trends and research directions are emerging that will shape the future of various fields, particularly in urban environments and smart technologies.

- Increased Integration of AI:

- AI will play a crucial role in enhancing decision-making processes across various applications.

- Machine learning algorithms will improve the ability to predict and respond to dynamic urban environments.

- Advancements in Sensor Technology:

- Development of more sophisticated sensors that can operate effectively in complex environments.

- Miniaturization and cost reduction of sensors will make them more accessible for widespread use.

- Focus on Sustainability:

- Research will increasingly prioritize sustainable practices in urban planning and technology deployment.

- Smart city initiatives will aim to reduce energy consumption and improve resource management.

- Interdisciplinary Collaboration:

- Collaboration between fields such as urban planning, engineering, and computer science will drive innovation.

- Sharing knowledge and resources will lead to more effective solutions for urban challenges.

10.1. Edge Computing for Real-time Processing

Edge computing is emerging as a critical technology for enabling real-time processing in various applications, particularly in urban environments where immediate data analysis is essential.

- Definition and Importance:

- Edge computing refers to processing data closer to the source rather than relying on centralized data centers.

- This approach reduces latency, allowing for faster decision-making and response times.

- Benefits of Edge Computing:

- Reduced bandwidth usage: Less data needs to be transmitted to the cloud, saving on bandwidth costs.

- Enhanced privacy and security: Sensitive data can be processed locally, minimizing exposure to potential breaches.

- Improved reliability: Local processing can continue even if connectivity to the cloud is disrupted.

- Applications in Urban Environments:

- Smart traffic management: Real-time data from sensors can optimize traffic flow and reduce congestion.

- Public safety: Edge computing can enable faster response times for emergency services by processing data from surveillance cameras and sensors.

- Environmental monitoring: Local processing of data from air quality sensors can provide immediate insights and alerts.

- Future Research Directions:

- Development of more efficient algorithms for edge computing to handle complex data processing tasks.

- Exploration of hybrid models that combine edge and cloud computing for optimal performance.

- Investigation into the integration of edge computing with IoT devices for enhanced functionality in smart cities.

At Rapid Innovation, we understand the complexities of urban environments and the challenges posed by urban occlusions technology. Our expertise in AI and blockchain development allows us to provide tailored solutions that enhance the efficiency and effectiveness of your projects. By leveraging advanced technologies such as sensor fusion and machine learning, we help our clients achieve greater ROI through improved accuracy and decision-making capabilities. Partnering with us means you can expect innovative solutions that not only address current challenges but also position you for future success in an ever-evolving technological landscape.

10.2. Explainable AI in Computer Vision

At Rapid Innovation, we understand that Explainable AI (XAI) in computer vision is crucial for making AI models' decision-making processes transparent and comprehensible. This transparency is especially vital in sectors such as healthcare, autonomous driving, and security, where the implications of AI decisions can significantly affect outcomes.

- Importance of Explainability

- Enhances trust in AI systems, fostering user confidence.

- Facilitates debugging and improvement of models, leading to better performance.

- Ensures compliance with regulations and ethical standards, safeguarding your organization’s reputation.

- Techniques for Explainability

- Saliency Maps: These visual representations highlight areas in an image that most influence the model's predictions, allowing stakeholders to see what the AI is focusing on.

- LIME (Local Interpretable Model-agnostic Explanations): This method approximates the model locally to provide insights into individual predictions, making it easier to understand specific outcomes.

- Grad-CAM (Gradient-weighted Class Activation Mapping): This technique uses gradients to produce visual explanations for decisions made by convolutional neural networks, enhancing interpretability.

- Applications

- Healthcare: In medical imaging, explainable AI can assist radiologists in understanding why a model flagged a particular image, thereby aiding in accurate diagnosis and treatment.

- Autonomous Vehicles: Understanding the rationale behind a vehicle's AI system decisions is crucial for safety and accountability, ensuring that stakeholders can trust the technology.

- Security: In surveillance, explainable AI clarifies why certain behaviors were flagged as suspicious, enabling more informed responses.

- Challenges

- Balancing model performance with interpretability can be complex.

- Developing standardized metrics for evaluating explainability remains a challenge.

- Ensuring that explanations are understandable to non-experts is essential for broader acceptance.

At Rapid Innovation, we focus on explainable and interpretable models in computer vision and machine learning to enhance the understanding of AI systems. Our commitment to explainable AI in computer vision ensures that stakeholders can make informed decisions based on the insights provided by these models.

10.3. Integration with V2X Communication

At Rapid Innovation, we recognize that Vehicle-to-Everything (V2X) communication is a transformative technology that allows vehicles to communicate with various entities, including other vehicles (V2V), infrastructure (V2I), and even pedestrians (V2P). The integration of AI with V2X communication significantly enhances the capabilities of smart transportation systems.

- Benefits of Integration

- Improved Safety: AI can analyze data from V2X communications to predict and prevent accidents, ultimately saving lives.

- Traffic Management: Real-time data sharing optimizes traffic flow and reduces congestion, leading to more efficient transportation systems.

- Enhanced Navigation: AI can provide drivers with real-time updates on road conditions, hazards, and alternative routes, improving the overall driving experience.

- Key Technologies

- 5G Networks: High-speed, low-latency communication is essential for effective V2X interactions, enabling rapid data exchange.

- Edge Computing: Processing data closer to the source reduces latency and improves response times, making systems more responsive.

- Machine Learning Algorithms: These algorithms analyze vast amounts of data from V2X communications to make informed decisions, enhancing system intelligence.

- Use Cases

- Autonomous Vehicles: AI systems can leverage V2X data to make real-time driving decisions, improving safety and efficiency on the roads.

- Smart Traffic Lights: AI can adjust traffic signals based on real-time vehicle flow data, reducing wait times and improving traffic flow.

- Emergency Response: V2X communication can alert vehicles to the presence of emergency vehicles, allowing for quicker response times and potentially saving lives.

- Challenges

- Ensuring data security and privacy in V2X communications is paramount to maintain user trust.

- Standardizing communication protocols across different manufacturers and systems is essential for seamless integration.

- Addressing the potential for increased complexity in vehicle systems is crucial to ensure reliability and performance.

By partnering with Rapid Innovation, clients can expect to achieve greater ROI through enhanced decision-making capabilities, improved operational efficiencies, and a competitive edge in their respective markets. Our expertise in AI development ensures that we deliver tailored solutions that meet your unique business needs, driving innovation and success.

.jpg)