1. Introduction to Computer Vision in ADAS

At Rapid Innovation, we recognize that computer vision is a critical technology in Advanced Driver Assistance Systems (ADAS), enhancing vehicle safety and improving driving experiences. As vehicles become more automated, the integration of computer vision systems is essential for interpreting the surrounding environment and making informed decisions. Our expertise in AI and Blockchain development positions us to help clients leverage these technologies effectively.

1.1. Definition and Importance of ADAS

ADAS refers to a range of safety features and technologies designed to assist drivers in the driving process. These systems aim to increase vehicle safety and improve overall driving efficiency.

- Key components of ADAS include:

- Adaptive cruise control

- Lane departure warning

- Automatic emergency braking

- Parking assistance

- Collision avoidance systems

- Importance of ADAS:

- Reduces the likelihood of accidents by providing real-time alerts and interventions.

- Enhances driver comfort by automating routine tasks.

- Supports the transition towards fully autonomous vehicles.

- Contributes to the reduction of traffic congestion and emissions by optimizing driving patterns.

According to the National Highway Traffic Safety Administration (NHTSA), ADAS technologies can potentially prevent up to 40% of crashes, highlighting their significance in modern vehicles.

1.2. Role of Computer Vision in ADAS

Computer vision plays a pivotal role in ADAS by enabling vehicles to perceive and interpret their surroundings. It underpins tasks such as object detection, lane detection, and scene understanding.

- Functions of computer vision in ADAS:

- Object detection: Identifying and classifying objects such as pedestrians, vehicles, and road signs.

- Lane detection: Recognizing lane markings to assist with lane-keeping and lane departure warnings.

- Depth perception: Estimating distances to objects to facilitate safe navigation and collision avoidance.

- Scene understanding: Analyzing complex environments to make informed decisions, such as recognizing traffic signals and road conditions.

- Benefits of computer vision in ADAS:

- Enhances situational awareness for drivers by providing critical information about the environment.

- Enables real-time processing of visual data, allowing for immediate responses to potential hazards.

- Supports the development of more sophisticated autonomous driving systems by providing a foundation for machine learning and artificial intelligence applications.

By partnering with Rapid Innovation, clients can expect to achieve greater ROI through the implementation of cutting-edge computer vision technologies in their ADAS solutions. Our tailored development and consulting services ensure that your systems are not only efficient but also scalable, paving the way for future advancements in vehicle automation and smart transportation systems. Together, we can enhance safety, improve user experiences, and drive innovation in the automotive industry.

1.3. Historical Development of Computer Vision in Automotive Applications

- The roots of computer vision in automotive applications can be traced back to the 1960s and 1970s when early research focused on basic image processing techniques.

- In the 1980s, the introduction of more sophisticated algorithms allowed for the detection of simple objects, laying the groundwork for future advancements.

- The 1990s saw the emergence of machine learning techniques, which enabled vehicles to recognize and classify objects in their environment more effectively.

- The early 2000s marked a significant shift with the advent of real-time processing capabilities, allowing for the integration of computer vision systems in vehicles.

- By the mid-2000s, automotive manufacturers began incorporating computer vision technologies into advanced driver-assistance systems (ADAS), enhancing safety features such as lane departure warnings and adaptive cruise control.

- The 2010s brought about a surge in research and development, driven by the rise of autonomous vehicles. Companies like Google and Tesla began investing heavily in automotive computer vision for navigation and obstacle detection.

- Recent advancements in deep learning and neural networks have revolutionized the field, enabling vehicles to interpret complex scenes and make real-time decisions based on visual data.

- Today, automotive computer vision is a critical component of autonomous driving technology, with applications ranging from pedestrian detection to traffic sign recognition.

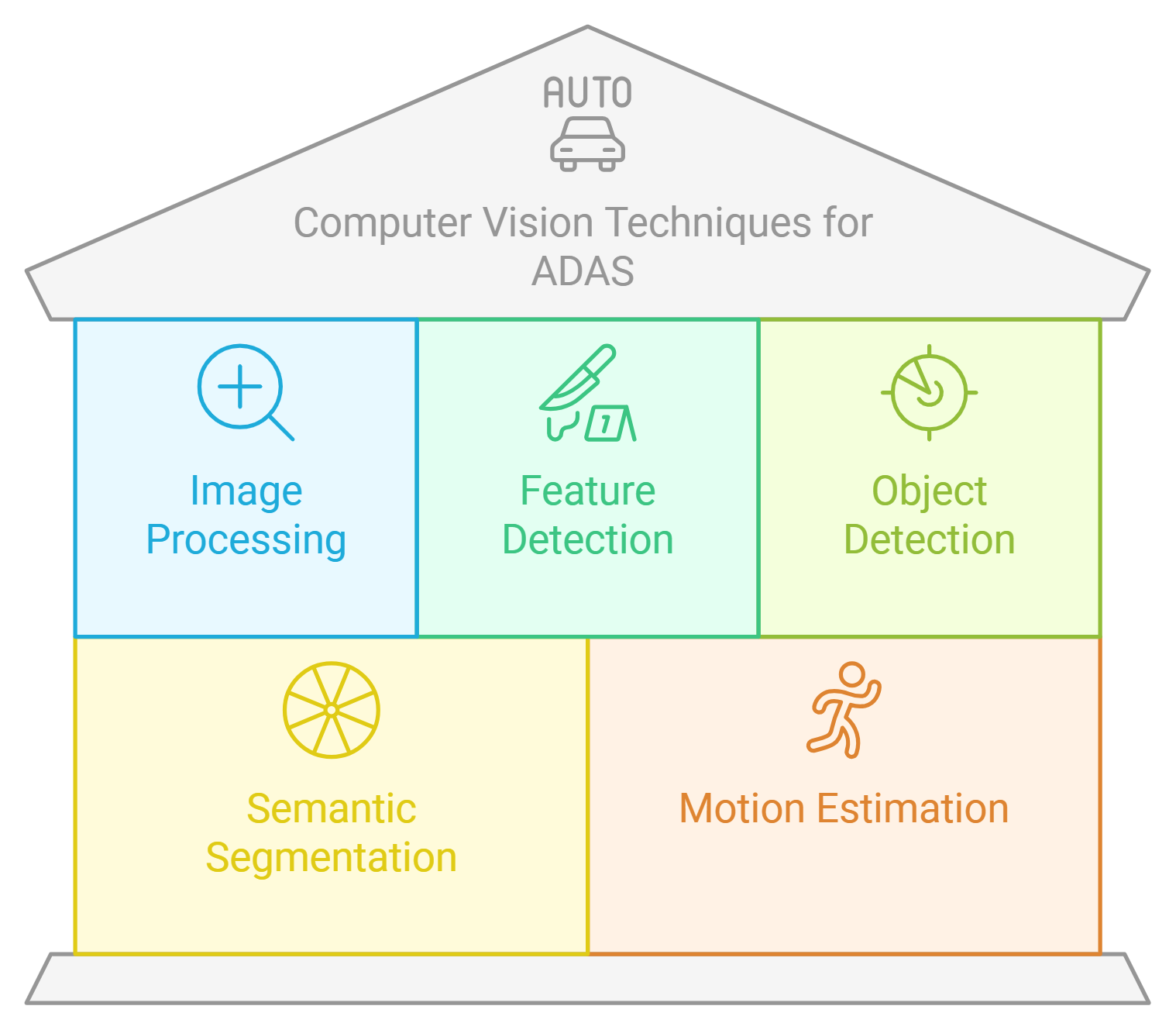

2. Fundamental Computer Vision Techniques for ADAS

- Computer vision techniques are essential for the development of advanced driver-assistance systems (ADAS), which enhance vehicle safety and automation.

- Key techniques include:

- Object detection: Identifying and locating objects within an image, such as vehicles, pedestrians, and obstacles.

- Image segmentation: Dividing an image into meaningful segments to simplify analysis and improve object recognition.

- Feature extraction: Identifying key characteristics of objects to aid in classification and recognition.

- Optical flow: Analyzing the motion of objects in a sequence of images to understand their movement and predict future positions.

- Stereo vision: Using two or more cameras to create a 3D representation of the environment, allowing for depth perception.

- These techniques work together to provide a comprehensive understanding of the vehicle's surroundings, enabling safer driving experiences.

2.1. Image Processing and Enhancement

- Image processing and enhancement are crucial for improving the quality of visual data captured by vehicle cameras.

- Techniques include:

- Noise reduction: Removing unwanted noise from images to enhance clarity and detail.

- Contrast enhancement: Adjusting the brightness and contrast of images to make features more distinguishable.

- Edge detection: Identifying boundaries within images to highlight important features and objects.

- Histogram equalization: Improving the contrast of images by redistributing pixel intensity values.

- Color correction: Adjusting colors to ensure accurate representation, which is vital for object recognition.

- These enhancements help in:

- Improving the accuracy of object detection and recognition algorithms.

- Ensuring reliable performance in various lighting and weather conditions.

- Facilitating better decision-making for ADAS applications, ultimately contributing to safer driving experiences.
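
As an illustration of the histogram equalization step listed above, here is a minimal sketch in plain Python. It assumes an 8-bit grayscale image stored as a non-empty list of rows; the function name `equalize_histogram` is ours, not part of any library. A production pipeline would more likely use an optimized image library.

```python
def equalize_histogram(image, levels=256):
    """Histogram-equalize a non-empty grayscale image (list of rows of ints)."""
    pixels = [p for row in image for p in row]

    # Count how many pixels fall on each intensity level.
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1

    # Cumulative distribution: number of pixels at or below each level.
    cdf, running = [], 0
    for count in hist:
        running += count
        cdf.append(running)

    cdf_min = next(c for c in cdf if c > 0)
    total = len(pixels)
    if total == cdf_min:
        # Every pixel has the same value, so there is nothing to stretch.
        return [row[:] for row in image]

    # Map each level so the occupied range spreads over 0..levels-1.
    scale = (levels - 1) / (total - cdf_min)
    lut = [max(0, round((c - cdf_min) * scale)) for c in cdf]
    return [[lut[p] for p in row] for row in image]


# A low-contrast patch: four nearly identical gray values.
low_contrast = [[100, 101], [102, 103]]
print(equalize_histogram(low_contrast))  # [[0, 85], [170, 255]]
```

The four nearly identical input values end up spread across the full intensity range, which is the effect that makes faint features easier for later detection stages to pick up.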

At Rapid Innovation, we leverage our expertise in AI and blockchain technology to help automotive companies enhance their computer vision capabilities. By partnering with us, clients can expect greater ROI through improved safety features, streamlined development processes, and cutting-edge solutions tailored to their specific needs. Our commitment to innovation ensures that your projects are not only efficient but also positioned for future advancements in the automotive industry.

2.2. Feature Detection and Extraction

Feature detection and extraction are crucial steps in computer vision that help in identifying and describing key points in images. These features can be utilized for various applications, including image matching, object recognition, and scene understanding.

- Key Points:

- Features are distinctive points in an image that can be reliably detected under various conditions.

- Common algorithms for feature detection include Harris Corner Detector, SIFT (Scale-Invariant Feature Transform), and SURF (Speeded-Up Robust Features).

- Feature Descriptors:

- Once key points are detected, descriptors are computed to characterize the features.

- Descriptors provide a way to compare features across different images.

- Popular descriptors include SIFT, ORB (Oriented FAST and Rotated BRIEF), BRIEF (Binary Robust Independent Elementary Features), and BRISK (Binary Robust Invariant Scalable Keypoints).

- Applications:

- Image stitching: Combining multiple images into a single panorama.

- Object recognition: Identifying objects within images by matching features.

- 3D reconstruction: Creating three-dimensional models from two-dimensional images.

- Challenges:

- Variability in lighting, scale, and rotation can affect feature detection.

- Occlusions and clutter in images can make it difficult to extract reliable features.
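
To make the idea of comparing descriptors across images concrete, the sketch below matches binary descriptors (the kind ORB and BRIEF produce) by Hamming distance. It is a simplified illustration: descriptors are modeled as Python integers, and the function names, the `max_distance` cutoff, and the `ratio` value are assumptions chosen for the example rather than settings from any particular library.

```python
def hamming_distance(a, b):
    """Number of bit positions in which two binary descriptors differ."""
    return bin(a ^ b).count("1")


def match_descriptors(query, train, max_distance=64, ratio=0.8):
    """Brute-force matching with a ratio test.

    Returns a list of (query_index, train_index, distance) tuples.
    A match is kept only if it is close enough and clearly better
    than the second-best candidate.
    """
    matches = []
    for qi, q in enumerate(query):
        ranked = sorted((hamming_distance(q, t), ti) for ti, t in enumerate(train))
        if not ranked:
            continue
        best_dist, best_idx = ranked[0]
        if best_dist > max_distance:
            continue
        # Ratio test: reject ambiguous matches.
        if len(ranked) > 1 and best_dist >= ratio * ranked[1][0]:
            continue
        matches.append((qi, best_idx, best_dist))
    return matches


# Toy 8-bit descriptors from two images of the same scene.
image_a = [0b11110000, 0b00001111]
image_b = [0b00001110, 0b11110001, 0b10101010]
print(match_descriptors(image_a, image_b))  # [(0, 1, 1), (1, 0, 1)]
```

Real descriptors are typically 256 bits or longer, but the matching logic is the same: a small Hamming distance means the two key points probably show the same physical feature.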

2.3. Object Detection and Recognition

Object detection and recognition involve identifying and classifying objects within an image or video stream. This process is essential for applications such as autonomous vehicles, surveillance systems, and image retrieval.

- Object Detection:

- The goal is to locate instances of objects within an image and draw bounding boxes around them.

- Techniques include traditional methods like Haar cascades and modern deep learning approaches such as YOLO (You Only Look Once) and SSD (Single Shot MultiBox Detector).

- Object Recognition:

- After detection, the next step is to classify the detected objects into predefined categories.

- Convolutional Neural Networks (CNNs) are widely used for this purpose, providing high accuracy in recognizing objects.

- Applications:

- Autonomous driving: Detecting pedestrians, vehicles, and traffic signs, which is essential for self-driving cars.

- Retail: Inventory management through automated product recognition.

- Security: Identifying suspicious activities or individuals in surveillance footage.

- Challenges:

- Variability in object appearance due to changes in lighting, angle, and occlusion.

- The need for large annotated datasets for training deep learning models.
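
Detectors that draw bounding boxes usually emit several overlapping boxes for the same object, so a post-processing step is needed to keep only the best one. The sketch below shows intersection over union (IoU) and greedy non-maximum suppression, a common way to do this. Boxes are assumed to be `(x1, y1, x2, y2)` tuples, and the 0.5 threshold is an illustrative default, not a recommendation.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    inter_w = max(0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(boxes, scores, iou_threshold=0.5):
    """Return indices of boxes to keep, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        # Keep a box only if it does not overlap strongly with a kept one.
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep


# Two detections of the same car plus one separate pedestrian.
boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
scores = [0.9, 0.8, 0.7]
print(non_max_suppression(boxes, scores))  # [0, 2]
```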

2.4. Semantic Segmentation

Semantic segmentation is a process in computer vision that involves classifying each pixel in an image into a category. This technique provides a more detailed understanding of the scene compared to object detection, as it identifies the exact boundaries of objects.

- Pixel Classification:

- Each pixel is assigned a label corresponding to the object or region it belongs to.

- Common categories include background, road, vehicles, pedestrians, and more.

- Techniques:

- Fully Convolutional Networks (FCNs) are a popular architecture for semantic segmentation.

- Other methods include U-Net and DeepLab, which extend traditional CNNs to handle pixel-wise classification. Mask R-CNN goes a step further with instance segmentation, separating individual objects of the same class.

- Applications:

- Autonomous vehicles: Understanding road scenes for navigation and obstacle avoidance.

- Medical imaging: Identifying and segmenting anatomical structures in scans.

- Augmented reality: Overlaying digital information on real-world objects.

- Challenges:

- High computational cost due to the need for processing every pixel.

- Difficulty in achieving high accuracy in complex scenes with overlapping objects.
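
The pixel classification step can be sketched without any deep learning framework: a segmentation network outputs one score map per class, each pixel takes the label of the highest-scoring class, and the result is commonly evaluated with per-class IoU. The example below assumes the score maps are already available as nested lists; the function names and the two-class setup (0 = road, 1 = vehicle) are illustrative assumptions.

```python
def argmax_labels(scores):
    """Turn per-class score maps scores[c][y][x] into a label map labels[y][x]."""
    num_classes = len(scores)
    height, width = len(scores[0]), len(scores[0][0])
    return [
        [max(range(num_classes), key=lambda c: scores[c][y][x]) for x in range(width)]
        for y in range(height)
    ]


def class_iou(pred, truth, num_classes):
    """Per-class intersection over union between two label maps."""
    ious = {}
    for c in range(num_classes):
        inter = union = 0
        for pred_row, truth_row in zip(pred, truth):
            for p, t in zip(pred_row, truth_row):
                inter += int(p == c and t == c)
                union += int(p == c or t == c)
        if union:
            ious[c] = inter / union
    return ious


# Scores for a 2x2 image and two classes: 0 = road, 1 = vehicle.
scores = [
    [[0.9, 0.2], [0.6, 0.1]],  # road
    [[0.1, 0.8], [0.4, 0.9]],  # vehicle
]
pred = argmax_labels(scores)
print(pred)  # [[0, 1], [0, 1]]

truth = [[0, 1], [1, 1]]
print(class_iou(pred, truth, num_classes=2))
```

Because every pixel is visited for every class, the cost grows with image resolution, which is one reason semantic segmentation is computationally demanding.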

At Rapid Innovation, we leverage these advanced techniques in feature detection, object recognition, and semantic segmentation to help our clients achieve their goals efficiently and effectively. By partnering with us, clients can expect enhanced ROI through improved accuracy in their applications, reduced time-to-market, and the ability to harness cutting-edge technology for their specific needs. Our expertise ensures that we can navigate the challenges of computer vision, providing tailored solutions that combine classical computer vision techniques with modern machine learning.

2.5. Motion Estimation and Tracking

Motion estimation and tracking are critical components in various fields, including computer vision, robotics, and video processing. These processes involve determining the movement of objects within a scene and predicting their future positions.

- Motion estimation refers to the process of calculating the motion of objects between frames in a video sequence.

- It typically involves algorithms that analyze pixel changes to identify how objects move over time.

- Common techniques include optical flow, block matching, and feature-based methods.

- Optical flow calculates the apparent motion of objects based on the brightness patterns in the image.

- Block matching divides the image into blocks and finds corresponding blocks in subsequent frames to estimate motion.

- Feature-based methods track distinct features (like corners or edges) across frames to determine motion.
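
Block matching is the easiest of these techniques to show in a few lines. The sketch below searches a small window in the next frame for the position that minimizes the sum of absolute differences (SAD) with a block from the previous frame. Frames are plain nested lists of gray values; `block_match`, `make_frame`, the block size, and the search radius are all assumptions made for illustration.

```python
def sad(prev, curr, py, px, cy, cx, size):
    """Sum of absolute differences between two size x size blocks."""
    return sum(
        abs(prev[py + dy][px + dx] - curr[cy + dy][cx + dx])
        for dy in range(size)
        for dx in range(size)
    )


def block_match(prev, curr, top, left, size=2, search=2):
    """Estimate the (dy, dx) motion of the block at (top, left) in prev."""
    height, width = len(curr), len(curr[0])
    best = None
    for dy in range(-search, search + 1):
        for dx in range(-search, search + 1):
            y, x = top + dy, left + dx
            # Skip candidate positions that fall outside the frame.
            if 0 <= y <= height - size and 0 <= x <= width - size:
                cost = sad(prev, curr, top, left, y, x, size)
                if best is None or cost < best[0]:
                    best = (cost, dy, dx)
    return best[1], best[2]


def make_frame(top, left, size=6):
    """Synthetic test frame: dark background with one bright 2x2 object."""
    frame = [[0] * size for _ in range(size)]
    for y in range(top, top + 2):
        for x in range(left, left + 2):
            frame[y][x] = 200
    return frame


previous_frame = make_frame(1, 1)
current_frame = make_frame(2, 3)  # object moved 1 row down, 2 columns right
print(block_match(previous_frame, current_frame, top=1, left=1))  # (1, 2)
```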

Tracking, on the other hand, involves maintaining the identity of an object as it moves through a sequence of frames.

- Tracking algorithms can be categorized into:

- Point tracking: Follows specific points of interest.

- Kernel tracking: Uses a region around the object to track its movement.

- Model-based tracking: Utilizes a predefined model of the object to assist in tracking.
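
As a rough illustration of point tracking, the sketch below keeps object identities stable across frames by greedily pairing each existing track with the nearest new detection. It is deliberately minimal: the class name `CentroidTracker` and the `max_distance` gate are hypothetical, unmatched tracks are simply dropped, and real systems usually add motion prediction (for example a Kalman filter) before association.

```python
import math
from itertools import count


class CentroidTracker:
    """Greedy nearest-neighbor tracker over (x, y) detection centers."""

    def __init__(self, max_distance=50.0):
        self.max_distance = max_distance
        self.tracks = {}      # track id -> last known (x, y)
        self._ids = count()   # source of fresh track ids

    def update(self, detections):
        """Associate detections with tracks and return {track_id: (x, y)}."""
        # All track/detection pairs, closest first.
        pairs = sorted(
            (math.dist(pos, det), tid, i)
            for tid, pos in self.tracks.items()
            for i, det in enumerate(detections)
        )
        assigned, used_tracks, used_dets = {}, set(), set()
        for dist, tid, i in pairs:
            if dist > self.max_distance:
                break  # remaining pairs are even farther apart
            if tid in used_tracks or i in used_dets:
                continue
            assigned[tid] = detections[i]
            used_tracks.add(tid)
            used_dets.add(i)
        # Any detection left over starts a new track.
        for i, det in enumerate(detections):
            if i not in used_dets:
                assigned[next(self._ids)] = det
        self.tracks = assigned
        return assigned


tracker = CentroidTracker(max_distance=20.0)
print(tracker.update([(10, 10), (100, 100)]))   # two new tracks: ids 0 and 1
print(tracker.update([(104, 101), (12, 11)]))   # same ids despite new order
```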

Applications of motion estimation and tracking include:

- Video surveillance: Monitoring movements in real-time for security purposes.

- Autonomous vehicles: Understanding the environment and predicting the movement of other vehicles and pedestrians.

- Augmented reality: Integrating virtual objects into real-world scenes by accurately tracking the user's movements.

3. Sensors and Data Acquisition

Sensors and data acquisition systems are essential for collecting information from the environment, enabling various applications in automation, robotics, and data analysis.

- Sensors convert physical phenomena into measurable signals.

- Common types of sensors include:

- Temperature sensors: Measure heat levels.

- Proximity sensors: Detect the presence of nearby objects.

- Light sensors: Measure illumination levels.

- Motion sensors: Detect movement within a specified area.

Data acquisition systems gather and process data from these sensors.

- They typically consist of:

- Signal conditioning: Enhances the quality of the signal for accurate measurement.

- Analog-to-digital conversion: Converts analog signals into digital form for processing.

- Data storage: Saves the collected data for analysis and retrieval.

Applications of sensors and data acquisition include:

- Environmental monitoring: Tracking air quality, temperature, and humidity levels.

- Industrial automation: Monitoring machinery performance and production processes.

- Healthcare: Collecting patient data for diagnostics and monitoring.

3.1. Camera Types and Configurations

Cameras are vital tools in data acquisition, particularly in fields like photography, surveillance, and scientific research. Different types of cameras and configurations serve various purposes.

- Types of cameras include:

- Digital cameras: Capture images electronically, offering high resolution and flexibility.

- Analog cameras: Use film to capture images, providing a classic photography experience.

- Action cameras: Compact and rugged, designed for capturing high-quality video in dynamic environments.

- 360-degree cameras: Capture panoramic images and videos, providing immersive experiences.

Camera configurations can significantly impact the quality and type of data collected.

- Key configurations include:

- Lens type: Determines the field of view and depth of field. Common lens types are wide-angle, telephoto, and macro.

- Sensor size: Affects image quality and low-light performance. Larger sensors typically yield better results.

- Mounting options: Cameras can be fixed, handheld, or mounted on drones and vehicles, influencing their application.

- Resolution: Higher resolution cameras capture more detail, essential for applications like surveillance and scientific imaging.

Applications of different camera types and configurations include:

- Surveillance: Using high-resolution cameras for security monitoring in public spaces.

- Scientific research: Employing specialized cameras for capturing data in experiments and studies.

- Media production: Utilizing various camera types to create engaging content for films and television.

At Rapid Innovation, we leverage our expertise in imaging and data acquisition to help clients achieve their goals efficiently and effectively. By integrating advanced technologies, we enable businesses to enhance their operational capabilities, leading to greater ROI. Partnering with us means you can expect improved accuracy in data collection, enhanced security measures, and innovative solutions tailored to your specific needs.

We also understand that the choice of camera technology, such as thermal or IP cameras, can significantly impact your project's success and return on investment (ROI). Our expertise in AI and Blockchain development allows us to guide you in selecting the right camera systems for your specific needs.

3.1.1. Monocular cameras

Monocular cameras are single-lens devices that capture images and videos from a single viewpoint. They are widely used in various applications due to their simplicity and cost-effectiveness.

- Basic functionality:

- Capture 2D images.

- Provide a single perspective of the scene.

- Applications:

- Common in smartphones, webcams, and security cameras.

- Used in robotics for object detection and recognition.

- Advantages:

- Lower cost compared to multi-lens systems.

- Easier to integrate into existing technologies.

- Limitations:

- Lack depth perception, making it challenging to gauge distances.

- Limited 3D reconstruction capabilities.

By leveraging monocular cameras, we can help clients reduce costs while still achieving satisfactory results in applications where depth perception is not critical. Our team can assist in integrating these systems into your existing technology stack, ensuring a seamless transition and minimal disruption.

3.1.2. Stereo cameras

Stereo cameras consist of two or more lenses positioned at different angles, mimicking human binocular vision. This setup allows them to capture depth information, making them suitable for various advanced applications.

- Basic functionality:

- Capture 3D images by comparing two or more perspectives.

- Calculate depth by analyzing the disparity between images.

- Applications:

- Widely used in autonomous vehicles for navigation and obstacle detection.

- Employed in 3D modeling and virtual reality environments.

- Advantages:

- Enhanced depth perception and spatial awareness.

- Improved accuracy in object localization and tracking.

- Limitations:

- More complex and expensive than monocular cameras.

- Requires calibration to ensure accurate depth measurements.
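
The depth calculation from disparity follows the standard relation for a rectified stereo pair, Z = f · B / d, where f is the focal length in pixels, B is the baseline between the two cameras, and d is the disparity in pixels. The sketch below applies it directly; the focal length and baseline values are made-up example numbers that would normally come from calibration.

```python
import math


def depth_from_disparity(disparity_px, focal_length_px, baseline_m):
    """Depth in meters for a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        # Zero disparity means the point is effectively at infinity.
        return math.inf
    return focal_length_px * baseline_m / disparity_px


# Hypothetical calibration: 800 px focal length, 12 cm baseline.
FOCAL_PX = 800.0
BASELINE_M = 0.12

for disparity in (32.0, 8.0, 2.0):
    depth = depth_from_disparity(disparity, FOCAL_PX, BASELINE_M)
    print(f"disparity {disparity:5.1f} px -> depth {depth:6.2f} m")
```

Because depth is inversely proportional to disparity, distant objects produce very small disparities, so a one-pixel matching error matters far more at long range than at short range. This is one reason accurate calibration is required.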

When clients require advanced depth perception, our expertise in stereo camera systems can provide significant advantages. We can help you implement these systems in applications such as autonomous navigation, ensuring that you achieve greater accuracy and safety, ultimately leading to a higher ROI.

3.1.3. Infrared cameras

Infrared cameras detect infrared radiation, which is emitted by objects based on their temperature. These cameras are particularly useful in low-light conditions and for thermal imaging.

- Basic functionality:

- Capture images based on heat signatures rather than visible light.

- Can visualize temperature differences in a scene.

- Applications:

- Used in surveillance, search and rescue operations, and building inspections.

- Common in medical imaging to detect abnormalities in body temperature.

- Advantages:

- Operate effectively in complete darkness.

- Can reveal hidden issues, such as heat loss in buildings or electrical faults.

- Limitations:

- Typically more expensive than standard cameras.

- Limited resolution compared to visible light cameras, which can affect detail.

By incorporating infrared cameras into your projects, we can help you uncover critical insights that may not be visible through standard imaging techniques. Our team can guide you in selecting the right infrared solutions, ensuring that you maximize the benefits while minimizing costs.

In conclusion, partnering with Rapid Innovation means gaining access to a wealth of knowledge and experience in camera technology. We are committed to helping you achieve your goals by providing tailored solutions that enhance your operational efficiency and drive greater ROI. Let us help you navigate the complexities of camera systems and unlock the full potential of your projects.

3.2. Sensor Fusion with Other ADAS Technologies

At Rapid Innovation, we understand that sensor fusion is a critical component in Advanced Driver Assistance Systems (ADAS). By combining data from multiple sensors, we enhance the vehicle's perception of its environment, improving the accuracy and reliability of the information used for decision-making. This ultimately leads to safer driving experiences for end-users. The technologies most often fused with camera data in ADAS are LiDAR, radar, and ultrasonic sensors.

3.2.1. LiDAR

LiDAR (Light Detection and Ranging) is a remote sensing technology that utilizes laser light to measure distances. It plays a significant role in ADAS by providing high-resolution 3D maps of the vehicle's surroundings.

- High Precision: LiDAR can detect objects with great accuracy, even in complex environments, ensuring that your systems are equipped to handle real-world challenges.

- 360-Degree Coverage: It offers a comprehensive view around the vehicle, which is essential for navigation and obstacle detection, enhancing the overall safety of the driving experience.

- Performance in Low Light: As an active sensor, LiDAR functions effectively in low-light conditions where traditional cameras struggle, although heavy rain, fog, or snow can degrade its performance.

- Data Integration: When fused with other sensors, LiDAR enhances the overall perception system, allowing for better object classification and tracking, which is crucial for advanced driving functionalities.

- Cost Considerations: While LiDAR provides excellent data quality, it can be expensive, which may limit its widespread adoption in lower-end vehicles. Our consulting services can help you navigate these cost challenges effectively.

3.2.2. Radar

Radar (Radio Detection and Ranging) is another key technology used in ADAS. It employs radio waves to detect objects and measure their distance, speed, and direction.

- Robustness: Radar systems are less affected by environmental conditions such as fog, rain, or snow, making them reliable for various driving scenarios, thus enhancing user trust in your systems.

- Long-Range Detection: Radar can detect objects at significant distances, which is crucial for functions like adaptive cruise control and collision avoidance, ensuring a proactive approach to safety.

- Speed Measurement: Radar is particularly effective in measuring the speed of moving objects, providing critical data for maintaining safe distances, which is essential for user safety.

- Complementary to LiDAR: When combined with LiDAR, radar can fill in gaps where LiDAR may struggle, such as in adverse weather conditions, creating a more robust system.

- Cost-Effectiveness: Radar systems are generally more affordable than LiDAR, making them a popular choice for many manufacturers looking to implement ADAS features. Our expertise can help you select the right technology mix for your budget and needs.

In conclusion, sensor fusion with LiDAR and radar enhances the capabilities of ADAS by leveraging the strengths of each technology. This integration leads to improved safety, reliability, and overall performance of advanced driving systems. By partnering with Rapid Innovation, you can expect greater ROI through our tailored development and consulting solutions, ensuring that your ADAS technologies are not only cutting-edge but also cost-effective and reliable. Let us help you achieve your goals efficiently and effectively.
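
One simple way to see why fusion improves reliability is inverse-variance weighting: when two sensors measure the same quantity, each reading is weighted by how much it is trusted, and the fused estimate has lower variance than either input. The sketch below fuses a camera range estimate with a radar range estimate. It is a minimal illustration, not a production fusion stack (which would typically use a Kalman filter over time), and the measurement values and variances are invented for the example.

```python
def fuse_estimates(measurements):
    """Fuse independent (value, variance) measurements of one quantity.

    Returns (fused_value, fused_variance) using inverse-variance weights.
    """
    weights = [1.0 / variance for _, variance in measurements]
    total_weight = sum(weights)
    fused_value = sum(w * value for w, (value, _) in zip(weights, measurements)) / total_weight
    return fused_value, 1.0 / total_weight


# Hypothetical range to the lead vehicle, in meters.
camera_range = (42.0, 4.0)  # camera: noisier range estimate
radar_range = (40.0, 1.0)   # radar: more precise range estimate

value, variance = fuse_estimates([camera_range, radar_range])
print(f"fused range: {value:.2f} m, variance: {variance:.2f}")
```

The fused range lands closer to the radar reading because radar is the more precise range sensor in this example, and the fused variance is smaller than that of either sensor alone.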

3.2.3. Ultrasonic Sensors

Ultrasonic sensors are advanced devices that utilize sound waves to detect objects and measure distances. Their versatility makes them invaluable across various applications, including automotive systems, robotics, and industrial automation.

- Functionality:

- Emit ultrasonic sound waves.

- Measure the time it takes for the sound waves to bounce back after hitting an object.

- Calculate the distance based on the speed of sound.

- Characteristics:

- Typically operate at frequencies between 20 kHz and 400 kHz.

- Effective for short-range detection, usually up to 4-5 meters.

- Provide accurate short-range distance measurements regardless of lighting conditions.

- Applications in Vehicles:

- Parking Assistance Systems: Help drivers park by detecting obstacles around the vehicle.

- Blind Spot Detection: Alert drivers to vehicles in their blind spots.

- Collision Avoidance: Assist in preventing low-speed accidents by detecting nearby objects.

- Advantages:

- Cost-effective compared to other sensor types.

- Simple to implement and integrate into existing systems.

- Reliable performance in various weather conditions.

- Limitations:

- Limited range compared to radar or lidar sensors.

- Performance can be affected by environmental factors like temperature and humidity.
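
The time-of-flight calculation described under Functionality, and the temperature sensitivity noted under Limitations, can both be shown in a few lines. The sketch below uses the common linear approximation for the speed of sound in dry air, roughly 331.3 + 0.606 · T m/s with T in degrees Celsius. The function names and the 10 ms echo time are illustrative assumptions.

```python
def speed_of_sound(temp_c):
    """Approximate speed of sound in dry air (m/s) at temp_c degrees Celsius."""
    return 331.3 + 0.606 * temp_c


def echo_distance(round_trip_s, temp_c=20.0):
    """Distance to an obstacle from the round-trip echo time.

    The pulse travels to the object and back, hence the division by 2.
    """
    return speed_of_sound(temp_c) * round_trip_s / 2.0


# The same 10 ms echo interpreted at two ambient temperatures.
for temperature in (0.0, 20.0):
    distance = echo_distance(0.010, temperature)
    print(f"{temperature:4.1f} C -> {distance:.3f} m")
```

The same echo time yields a distance about 6 cm longer at 20 °C than at 0 °C, which illustrates why temperature compensation matters for accurate measurements.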

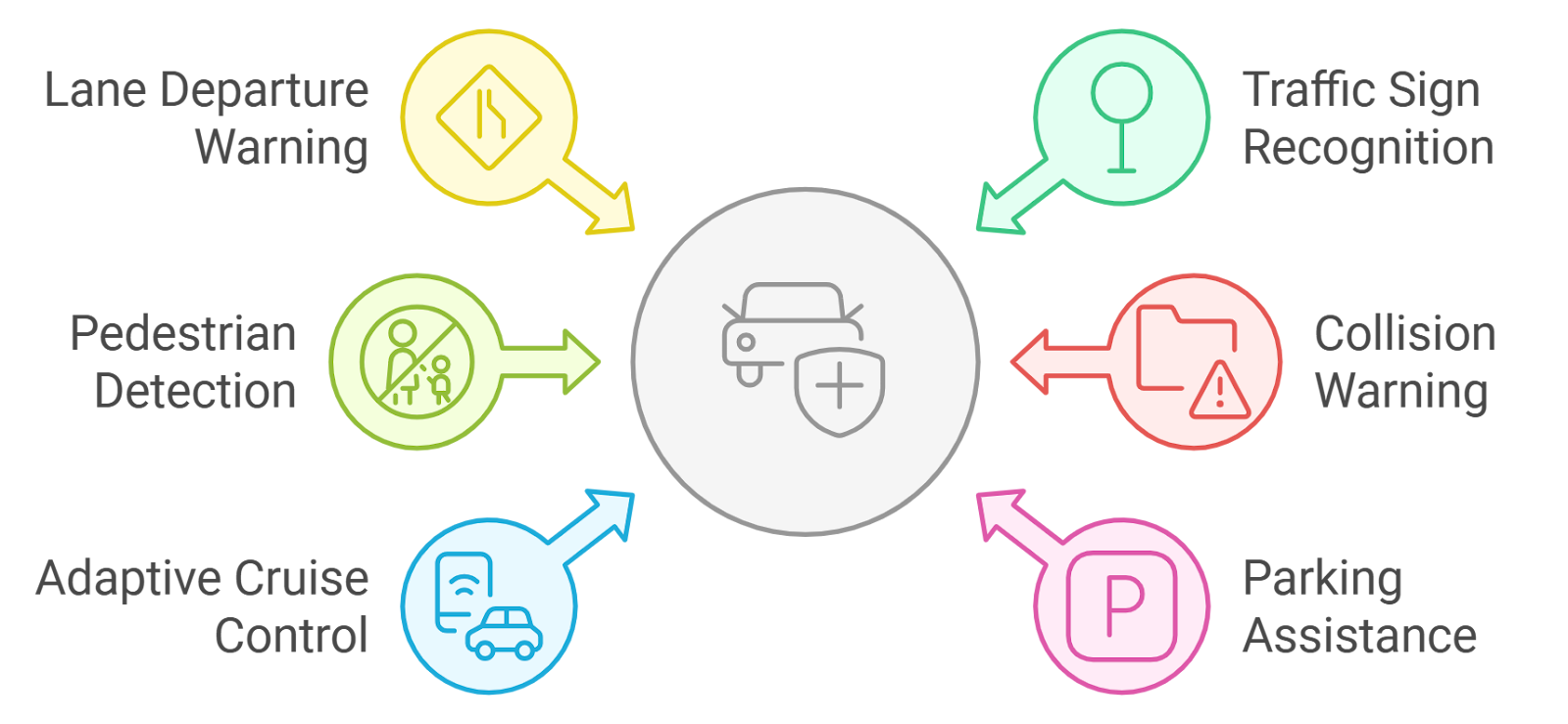

4. Key ADAS Applications Using Computer Vision

Advanced Driver Assistance Systems (ADAS) leverage cutting-edge computer vision technology to enhance vehicle safety and improve driving experiences. These systems analyze visual data from cameras and sensors to make real-time decisions.

- Key Applications Include:

- Lane Departure Warning (LDW)

- Lane Keeping Assist (LKA)

- Adaptive Cruise Control

- Traffic Sign Recognition

- Automatic Emergency Braking

- Benefits of Computer Vision in ADAS:

- Enhanced situational awareness for drivers.

- Improved safety through real-time monitoring of the driving environment.

- Reduction in human error by providing timely alerts and assistance.

4.1. Lane Departure Warning and Lane Keeping Assist

Lane Departure Warning (LDW) and Lane Keeping Assist (LKA) are two critical features of ADAS that utilize computer vision to enhance road safety.

- Lane Departure Warning (LDW):

- Monitors vehicle position within lane markings using cameras.

- Alerts drivers when the vehicle unintentionally drifts out of its lane.

- Provides visual, audible, or haptic feedback to prompt corrective action.

- Lane Keeping Assist (LKA):

- Builds on LDW by actively helping to keep the vehicle centered in its lane.

- Uses steering interventions to guide the vehicle back into the lane if it begins to drift.

- Can operate in conjunction with adaptive cruise control for a more automated driving experience.

- Key Features:

- Real-time lane detection using image processing algorithms.

- Ability to function in various driving conditions, including day and night.

- Integration with other ADAS features for comprehensive safety solutions.

- Benefits:

- Reduces the risk of accidents caused by lane drifting.

- Enhances driver confidence and comfort during long drives.

- Contributes to overall road safety by promoting better lane discipline.

- Challenges:

- Performance can be affected by poor road markings or adverse weather conditions.

- Requires continuous updates and improvements in algorithms for accuracy.

- May lead to over-reliance on technology, reducing driver attentiveness.
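
A common way to decide when an LDW alert should fire is time to line crossing (TLC): the distance to the lane marking divided by the lateral speed toward it. The sketch below assumes the lane detection stage already provides the distance from the vehicle to each marking; the function names, the sign convention, the 1.0 s threshold, and the turn-signal suppression rule are illustrative assumptions rather than a description of any specific production system.

```python
import math


def time_to_line_crossing(dist_left_m, dist_right_m, lateral_speed_mps):
    """Seconds until the vehicle reaches a lane marking.

    lateral_speed_mps > 0 means drifting right, < 0 means drifting left.
    """
    if lateral_speed_mps > 0:
        return dist_right_m / lateral_speed_mps
    if lateral_speed_mps < 0:
        return dist_left_m / -lateral_speed_mps
    return math.inf  # no lateral motion, no crossing expected


def lane_departure_warning(dist_left_m, dist_right_m, lateral_speed_mps,
                           turn_signal_on=False, threshold_s=1.0):
    """True if an unintentional lane departure looks imminent."""
    if turn_signal_on:
        # An active turn signal suggests the lane change is intentional.
        return False
    tlc = time_to_line_crossing(dist_left_m, dist_right_m, lateral_speed_mps)
    return tlc < threshold_s


# Drifting right at 0.5 m/s with 0.3 m left to the right marking: TLC = 0.6 s.
print(lane_departure_warning(0.9, 0.3, 0.5))                       # True
print(lane_departure_warning(0.9, 0.3, 0.5, turn_signal_on=True))  # False
```

A Lane Keeping Assist function could use the same signal to trigger a steering correction instead of, or in addition to, an alert.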

In conclusion, ultrasonic sensors and computer vision applications like lane departure warning and lane keeping assist play vital roles in enhancing vehicle safety and driving experiences. These technologies are essential components of modern ADAS, contributing to the ongoing evolution of automotive safety systems. At Rapid Innovation, we specialize in integrating these advanced technologies into your projects, ensuring that you achieve greater ROI and enhanced operational efficiency. Partnering with us means you can expect innovative solutions tailored to your specific needs, ultimately driving your success in the competitive landscape.

4.2. Traffic Sign Recognition

Traffic sign recognition is a critical component of advanced driver-assistance systems (ADAS) and autonomous vehicles. This technology enables vehicles to identify and interpret various traffic signs, enhancing road safety and improving navigation.

- Uses cameras and sensors to detect traffic signs.

- Processes images using machine learning algorithms to recognize signs.

- Provides real-time information to drivers about speed limits, stop signs, and other important regulations.

- Can alert drivers to changes in traffic conditions or potential hazards.

- Enhances the vehicle's ability to comply with traffic laws, reducing the likelihood of violations.

The effectiveness of traffic sign recognition systems can vary based on factors such as lighting conditions, weather, and the angle of the sign. Continuous advancements in artificial intelligence and computer vision are improving the accuracy and reliability of these systems. At Rapid Innovation, we leverage our expertise in AI and machine learning to develop robust traffic sign recognition software that can be tailored to meet the specific needs of our clients, ultimately leading to greater ROI through enhanced safety and compliance. For more insights on how AI is changing safety in transportation, check out AI's Leap in Driving Safety & Vigilance.

4.3. Pedestrian and Cyclist Detection

Pedestrian and cyclist detection is essential for ensuring the safety of vulnerable road users. This technology helps vehicles recognize and respond to pedestrians and cyclists in their vicinity, significantly reducing the risk of accidents.

- Utilizes a combination of cameras, radar, and lidar to detect pedestrians and cyclists.

- Employs algorithms to analyze movement patterns and predict potential collisions.

- Can differentiate between pedestrians, cyclists, and other objects, enhancing decision-making.

- Provides alerts to drivers and can initiate automatic braking if a collision is imminent.

- Plays a crucial role in urban environments where pedestrian and cyclist traffic is high.

The integration of pedestrian and cyclist detection systems is vital for the development of smart cities and the promotion of sustainable transportation. As cities become more congested, these technologies will be increasingly important for ensuring safety and efficiency on the roads. By partnering with Rapid Innovation, clients can expect cutting-edge solutions that not only enhance safety but also contribute to a more sustainable urban environment, ultimately driving higher returns on investment. For further reading on advancements in safety technology, see Visionary Roadways: AI's Leap in Driving Safety.

4.4. Forward Collision Warning and Automatic Emergency Braking

Forward collision warning (FCW) and automatic emergency braking (AEB) are two interconnected safety features designed to prevent or mitigate collisions. These systems work together to enhance driver awareness and vehicle response in critical situations.

- FCW uses sensors to monitor the distance and speed of vehicles ahead, providing visual and audible alerts to the driver if a potential collision is detected.

- AEB takes this a step further by automatically applying the brakes if the driver does not respond to the warning in time.

- Both systems are designed to reduce the severity of accidents or prevent them altogether, particularly in rear-end collisions.

- They are increasingly becoming standard features in new vehicles, contributing to overall road safety.

- Studies have shown that vehicles equipped with AEB can reduce rear-end crashes by up to 50%.
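
The warn-then-brake escalation described in the list above is often reasoned about in terms of time to collision (TTC): the gap to the lead vehicle divided by the closing speed. The following is a minimal sketch of that idea, not a production algorithm. The function names, the `driver_braking` flag, and both thresholds (2.5 s and 1.2 s) are illustrative assumptions rather than values from any standard or vendor system.

```python
from typing import Optional


def time_to_collision(gap_m: float, ego_speed_mps: float,
                      lead_speed_mps: float) -> Optional[float]:
    """Seconds until contact if both vehicles hold their speed.

    Returns None when the ego vehicle is not closing on the lead vehicle.
    """
    closing_speed = ego_speed_mps - lead_speed_mps
    if closing_speed <= 0:
        return None
    return gap_m / closing_speed


def fcw_aeb_action(gap_m: float, ego_speed_mps: float, lead_speed_mps: float,
                   driver_braking: bool = False,
                   warn_ttc_s: float = 2.5,    # assumed warning threshold
                   brake_ttc_s: float = 1.2    # assumed intervention threshold
                   ) -> str:
    """Return "none", "warn", or "brake" for the current situation."""
    ttc = time_to_collision(gap_m, ego_speed_mps, lead_speed_mps)
    if ttc is None:
        return "none"
    # AEB intervenes only if the driver has not reacted to the warning.
    if ttc <= brake_ttc_s and not driver_braking:
        return "brake"
    if ttc <= warn_ttc_s:
        return "warn"
    return "none"


if __name__ == "__main__":
    print(fcw_aeb_action(gap_m=20, ego_speed_mps=20, lead_speed_mps=10))
    print(fcw_aeb_action(gap_m=10, ego_speed_mps=20, lead_speed_mps=10))
```

A real system would also account for acceleration, sensor uncertainty, and road conditions. The constant-speed assumption here only keeps the escalation logic visible.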

The combination of FCW and AEB represents a significant advancement in automotive safety technology, helping to protect drivers, passengers, and other road users. As these systems continue to evolve, they will play a crucial role in the future of transportation. At Rapid Innovation, we are committed to developing and implementing these advanced safety features, ensuring our clients can offer state-of-the-art solutions that enhance safety and improve customer satisfaction, leading to a substantial increase in ROI. For more on the impact of technology in safety, explore Impact of Real-Time Object Recognition on Industry Advancements.

4.5. Adaptive Cruise Control

Adaptive cruise control (ACC) is an advanced driver assistance system that extends traditional cruise control by automatically adjusting the vehicle's speed to maintain a safe distance from the vehicle ahead. Manufacturers market the same capability under names such as autonomous cruise control, active cruise control, and smart cruise control.

- Uses sensors and cameras to monitor traffic conditions.

- Automatically accelerates or decelerates based on the speed of the vehicle in front.

- Reduces driver fatigue during long trips by handling routine speed and following-distance adjustments.

- Can be integrated with other systems like lane-keeping assistance for a more comprehensive driving experience.

- Some systems can even bring the vehicle to a complete stop and resume driving when traffic conditions allow.

ACC is particularly beneficial in highway driving, where maintaining a safe distance can be challenging due to varying speeds of surrounding vehicles. According to a study, vehicles equipped with ACC can reduce the likelihood of rear-end collisions by up to 40%.
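
To make the speed-adjustment behaviour concrete, here is a hedged sketch of a constant time-gap policy, one common way to describe ACC in textbooks. The gains, the 1.8 s time gap, the standstill distance, and the acceleration limits are all assumed values chosen for illustration, and `acc_acceleration` is a hypothetical helper rather than any vendor's controller.

```python
from typing import Optional


def acc_acceleration(ego_speed_mps: float, set_speed_mps: float,
                     gap_m: Optional[float] = None,
                     lead_speed_mps: Optional[float] = None,
                     time_gap_s: float = 1.8,      # assumed desired time gap
                     standstill_m: float = 5.0,    # assumed gap at zero speed
                     k_gap: float = 0.2, k_speed: float = 0.6,
                     k_cruise: float = 0.4,
                     a_min: float = -3.0, a_max: float = 1.5) -> float:
    """Return a commanded acceleration in m/s^2 (negative means braking)."""
    # Plain cruise control: steer the speed toward the driver's set speed.
    cruise_cmd = k_cruise * (set_speed_mps - ego_speed_mps)

    if gap_m is None or lead_speed_mps is None:
        command = cruise_cmd  # no lead vehicle detected
    else:
        # Desired gap grows with speed (constant time-gap policy).
        desired_gap_m = standstill_m + time_gap_s * ego_speed_mps
        follow_cmd = (k_gap * (gap_m - desired_gap_m)
                      + k_speed * (lead_speed_mps - ego_speed_mps))
        # Never accelerate harder than plain cruise control would.
        command = min(cruise_cmd, follow_cmd)

    # Respect comfort and actuator limits.
    return max(a_min, min(a_max, command))


if __name__ == "__main__":
    print(acc_acceleration(25.0, 30.0))                       # free road
    print(acc_acceleration(30.0, 30.0, gap_m=20.0, lead_speed_mps=25.0))
```

Taking the minimum of the cruise and follow commands means the set speed acts as a ceiling, while a slower lead vehicle pulls the command down. Stop-and-go variants add extra logic for standstill and resume.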

4.6. Parking Assistance Systems

Parking assistance systems are designed to help drivers park their vehicles safely and efficiently. These systems utilize a combination of sensors, cameras, and sometimes even artificial intelligence to assist in parking maneuvers.

- Types of parking assistance include:

- Rear parking sensors that alert drivers to obstacles behind the vehicle.

- Front sensors that help in tight spaces.

- 360-degree cameras providing a bird's-eye view of the vehicle's surroundings.

- Automated parking systems that can park the vehicle with minimal driver input.

- Benefits of parking assistance systems:

- Reduces the risk of accidents while parking.

- Saves time and reduces stress associated with parking in crowded areas.

- Enhances the overall driving experience, especially for novice drivers.

Research indicates that parking assistance systems can reduce parking-related accidents by as much as 30%. These systems are becoming increasingly common in modern vehicles, making parking easier and safer for everyone.

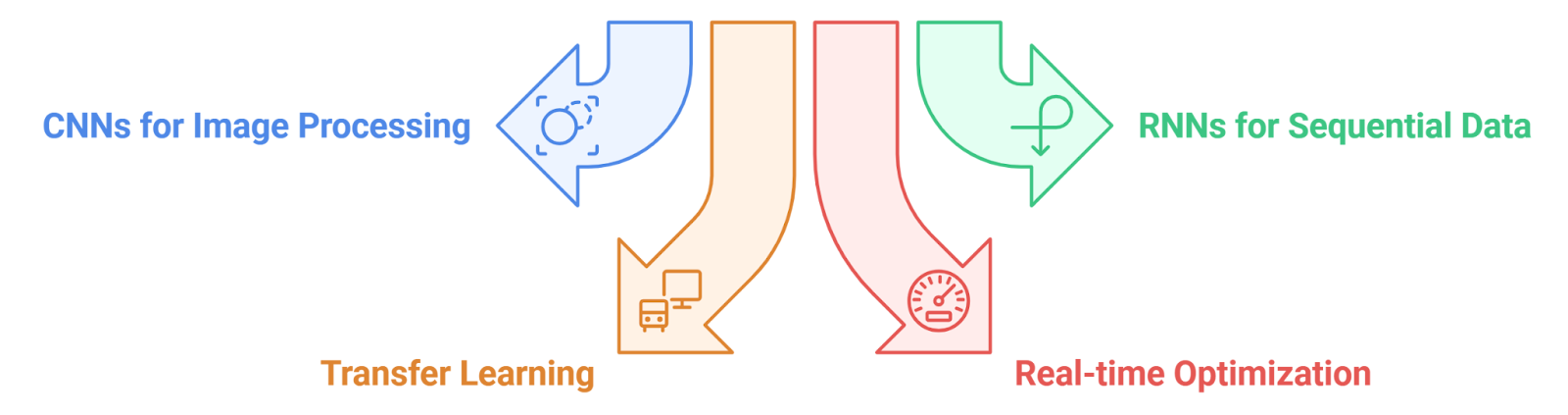

5. Deep Learning in ADAS Computer Vision

Deep learning is a subset of artificial intelligence that has significantly advanced the capabilities of computer vision in Advanced Driver Assistance Systems (ADAS).

- Deep learning algorithms analyze vast amounts of data to recognize patterns and make decisions.

- In ADAS, these algorithms are used for:

- Object detection: Identifying pedestrians, vehicles, and obstacles in real-time.

- Lane detection: Recognizing lane markings to assist with lane-keeping features.

- Traffic sign recognition: Understanding and responding to road signs.

- Benefits of deep learning in ADAS:

- Improved accuracy in detecting and classifying objects, leading to safer driving.

- Enhanced ability to operate in various weather conditions and lighting scenarios.

- Continuous learning capabilities, allowing systems to improve over time as they gather more data.

Deep learning models are trained on large datasets, which helps them generalize better to new situations. This technology is crucial for the development of fully autonomous vehicles, as it enables them to interpret complex environments. According to a report, deep learning has improved object detection accuracy in ADAS by over 20% compared to traditional methods.

At Rapid Innovation, we leverage these advanced technologies to help our clients enhance their product offerings, improve safety features, and ultimately achieve greater ROI. By integrating cutting-edge solutions like autonomous cruise control systems, active cruise control, and parking assistance systems, we enable our clients to stay ahead in a competitive market while ensuring a superior user experience. Partnering with us means you can expect increased efficiency, reduced development costs, and a significant boost in customer satisfaction. Let us help you navigate the complexities of AI and blockchain technology to achieve your business goals effectively and efficiently.

5.1. Convolutional Neural Networks (CNNs)

Convolutional Neural Networks (CNNs) are a class of deep learning algorithms primarily used for processing structured grid data, such as images. They are particularly effective in tasks like image recognition, object detection, and segmentation.

- Key components of CNNs:

- Convolutional Layers: These layers apply filters to the input data to create feature maps, capturing spatial hierarchies.

- Pooling Layers: These layers reduce the dimensionality of feature maps, retaining essential information while decreasing computational load.

- Fully Connected Layers: These layers connect every neuron in one layer to every neuron in the next, enabling the network to make predictions based on the features extracted.

- Applications in automotive:

- Autonomous Driving: CNNs are utilized for recognizing road signs, pedestrians, and other vehicles, enhancing safety and navigation.

- Driver Assistance Systems: They play a crucial role in lane detection and monitoring driver behavior, contributing to a more secure driving experience.

- Image Processing: CNNs improve image quality and support real-time video analysis. The same architectures are also applied to non-camera inputs, for example deep learning based object classification on automotive radar spectra.
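
The convolution and pooling steps listed under the key components above can be illustrated without any framework. The sketch below uses plain Python lists and a hand-picked vertical-edge kernel on a tiny synthetic image (dark on the left, bright on the right, loosely like the edge of a lane marking). In a trained CNN the kernel values are learned rather than chosen by hand, and the helper names are illustrative only.

```python
def conv2d(image, kernel):
    """Valid (no padding) 2D sliding-window filter, as used in CNN layers."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def relu(feature_map):
    """Keep positive activations, zero out the rest."""
    return [[max(0, v) for v in row] for row in feature_map]


def max_pool(feature_map, size=2):
    """Non-overlapping max pooling: keeps the strongest response per window."""
    return [
        [
            max(feature_map[r + i][c + j]
                for i in range(size) for j in range(size))
            for c in range(0, len(feature_map[0]) - size + 1, size)
        ]
        for r in range(0, len(feature_map) - size + 1, size)
    ]


# 6x6 synthetic image: left half dark (0), right half bright (1).
IMAGE = [[0, 0, 0, 1, 1, 1] for _ in range(6)]
# Hand-picked kernel that responds to dark-to-bright vertical edges.
VERTICAL_EDGE = [[-1, 0, 1], [-1, 0, 1], [-1, 0, 1]]

if __name__ == "__main__":
    features = relu(conv2d(IMAGE, VERTICAL_EDGE))
    print(features)            # 4x4 feature map, strong response at the edge
    print(max_pool(features))  # 2x2 map after pooling
```

The feature map lights up only where the brightness changes, and pooling shrinks it from 4x4 to 2x2 while keeping that response. This is the dimensionality reduction the pooling bullet refers to.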

5.2. Recurrent Neural Networks (RNNs)

Recurrent Neural Networks (RNNs) are designed to handle sequential data, making them suitable for tasks where context and order matter, such as time series analysis and natural language processing.

- Characteristics of RNNs:

- Memory: RNNs maintain a hidden state that captures information from previous inputs, allowing them to learn from sequences.

- Feedback Loops: Unlike feedforward neural networks, RNNs have connections that loop back, so the same weights are applied at every time step and sequences of varying lengths can be processed.

- Applications in automotive:

- Predictive Maintenance: RNNs analyze time-series data from vehicle sensors to predict potential failures, reducing downtime and maintenance costs.

- Speech Recognition: They are employed in voice-activated systems for hands-free control in vehicles, enhancing user convenience.

- Driver Behavior Analysis: RNNs can model and predict driver actions based on historical data, allowing for improved safety measures and personalized experiences.
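
The hidden-state and feedback-loop ideas above reduce to one recurrence, h_t = tanh(W_x x_t + W_h h_(t-1) + b), applied once per time step. The sketch below implements that recurrence for a basic (Elman-style) RNN in plain Python. The weights are arbitrary illustrative numbers, not trained values, and practical systems more often use gated variants such as LSTM or GRU.

```python
import math


def rnn_step(x, h_prev, w_xh, w_hh, b):
    """One recurrence step: combine the new input with the previous state."""
    hidden = []
    for j in range(len(h_prev)):
        total = b[j]
        total += sum(w_xh[j][i] * x[i] for i in range(len(x)))
        total += sum(w_hh[j][k] * h_prev[k] for k in range(len(h_prev)))
        hidden.append(math.tanh(total))
    return hidden


def run_rnn(sequence, w_xh, w_hh, b):
    """Feed a sequence of any length through the same weights."""
    h = [0.0] * len(b)  # initial hidden state
    for x in sequence:
        h = rnn_step(x, h, w_xh, w_hh, b)
    return h


if __name__ == "__main__":
    # One input feature, one hidden unit, arbitrary weights.
    w_xh, w_hh, b = [[1.0]], [[0.5]], [0.0]
    print(run_rnn([[1.0], [0.0]], w_xh, w_hh, b))
    print(run_rnn([[0.0], [1.0]], w_xh, w_hh, b))
```

The two example sequences contain the same values in a different order and end in different hidden states, which is what lets an RNN model order-dependent signals such as a series of sensor readings.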

5.3. Transfer Learning and Fine-Tuning for Automotive Applications

Transfer learning is a technique where a pre-trained model is adapted to a new but related task, significantly reducing the time and data required for training.

- Benefits of transfer learning:

- Reduced Training Time: Leveraging existing models accelerates the training process, allowing for quicker deployment of solutions.

- Improved Performance: Pre-trained models often achieve better accuracy due to their exposure to large datasets, ensuring high-quality outcomes.

- Less Data Requirement: Transfer learning enables models to perform well even with limited data, making it a cost-effective solution.

- Fine-tuning process:

- Layer Freezing: Initially, some layers of the pre-trained model are frozen to retain learned features, ensuring stability during training.

- Gradual Unfreezing: Layers are gradually unfrozen and trained on the new dataset to adapt the model to specific tasks, optimizing performance.

- Applications in automotive:

- Object Detection: Pre-trained models can be fine-tuned for specific tasks like detecting vehicles or pedestrians in various environments, enhancing safety features.

- Semantic Segmentation: Transfer learning aids in segmenting images for tasks like identifying road boundaries and lane markings, improving navigation systems.

- Anomaly Detection: Fine-tuning models on specific vehicle data can enhance the detection of unusual patterns or behaviors, contributing to proactive safety measures.
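
The layer-freezing and gradual-unfreezing steps of the fine-tuning process above can be expressed as a simple schedule. The sketch below is framework-agnostic: the layer names, the `epochs_per_stage` parameter, and the `trainable_layers` helper are hypothetical. In a framework such as PyTorch, the returned names would typically decide which parameters have `requires_grad` enabled.

```python
def trainable_layers(layer_names, epoch, epochs_per_stage=2,
                     initially_trainable=1):
    """Return the layers to train at a given epoch.

    Layers are ordered from input to output. Training starts with only the
    last `initially_trainable` layers unfrozen, then unfreezes one more layer
    (moving toward the input) every `epochs_per_stage` epochs.
    """
    n_unfrozen = min(len(layer_names),
                     initially_trainable + epoch // epochs_per_stage)
    return layer_names[len(layer_names) - n_unfrozen:]


if __name__ == "__main__":
    # Hypothetical backbone pre-trained on a generic dataset, plus a new head
    # for an automotive task such as pedestrian detection.
    layers = ["conv1", "conv2", "conv3", "head"]
    for epoch in range(0, 8, 2):
        print(epoch, trainable_layers(layers, epoch))
```

Starting from the task-specific head and working backwards keeps the generic low-level features (edges, textures) stable for longest, which is the usual motivation for freezing early layers first.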

By partnering with Rapid Innovation, clients can leverage these advanced technologies to achieve greater ROI through enhanced efficiency, reduced costs, and improved performance in their automotive deep learning applications. Our expertise in AI and blockchain development ensures that we provide tailored solutions that align with your business goals, driving innovation and success.

5.4. Real-time inference optimization

Real-time inference optimization is crucial for deploying machine learning models in applications that require immediate responses, such as autonomous vehicles, robotics, and real-time video analysis. The goal is to enhance the speed and efficiency of model predictions without sacrificing accuracy.

- Techniques for optimization:

- Model pruning: Reducing the size of the model by removing less important weights, which can lead to faster inference times.

- Quantization: Converting model weights from floating-point to lower precision formats (e.g., int8), which reduces memory usage and speeds up computation.

- Knowledge distillation: Training a smaller model (student) to replicate the behavior of a larger, more complex model (teacher), allowing for faster inference with minimal loss in performance.

- Hardware acceleration:

- Utilizing GPUs, TPUs, or FPGAs can significantly enhance the speed of inference tasks.

- Edge computing allows processing data closer to the source, reducing latency and bandwidth usage.

- Frameworks and tools:

- TensorRT, ONNX Runtime, and OpenVINO are popular frameworks that provide optimization capabilities for real-time inference.

- These tools often include features for automatic optimization, making it easier for developers to implement.

- Performance metrics:

- Latency: The time taken for a model to produce an output after receiving input.

- Throughput: The number of inferences a model can perform in a given time frame.

- Accuracy: Maintaining a balance between speed and the correctness of predictions is essential.
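
As an illustration of the quantization technique listed above, the sketch below applies symmetric per-tensor int8 quantization to a handful of weights and measures the round-trip error. It is a simplified stand-in for what toolkits such as TensorRT or OpenVINO automate. The function names are illustrative, and real pipelines also calibrate activations, which is not shown here.

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using a single scale factor."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the int8 representation."""
    return [q * scale for q in quantized]


if __name__ == "__main__":
    weights = [0.5, -1.27, 0.0, 1.27]
    q, scale = quantize_int8(weights)
    restored = dequantize(q, scale)
    worst = max(abs(a - b) for a, b in zip(weights, restored))
    print(q, scale, worst)
```

Each weight now needs one byte instead of four, and the rounding error per weight is bounded by half the scale factor. Whether that error is acceptable is exactly the speed-versus-accuracy trade-off named in the performance metrics.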

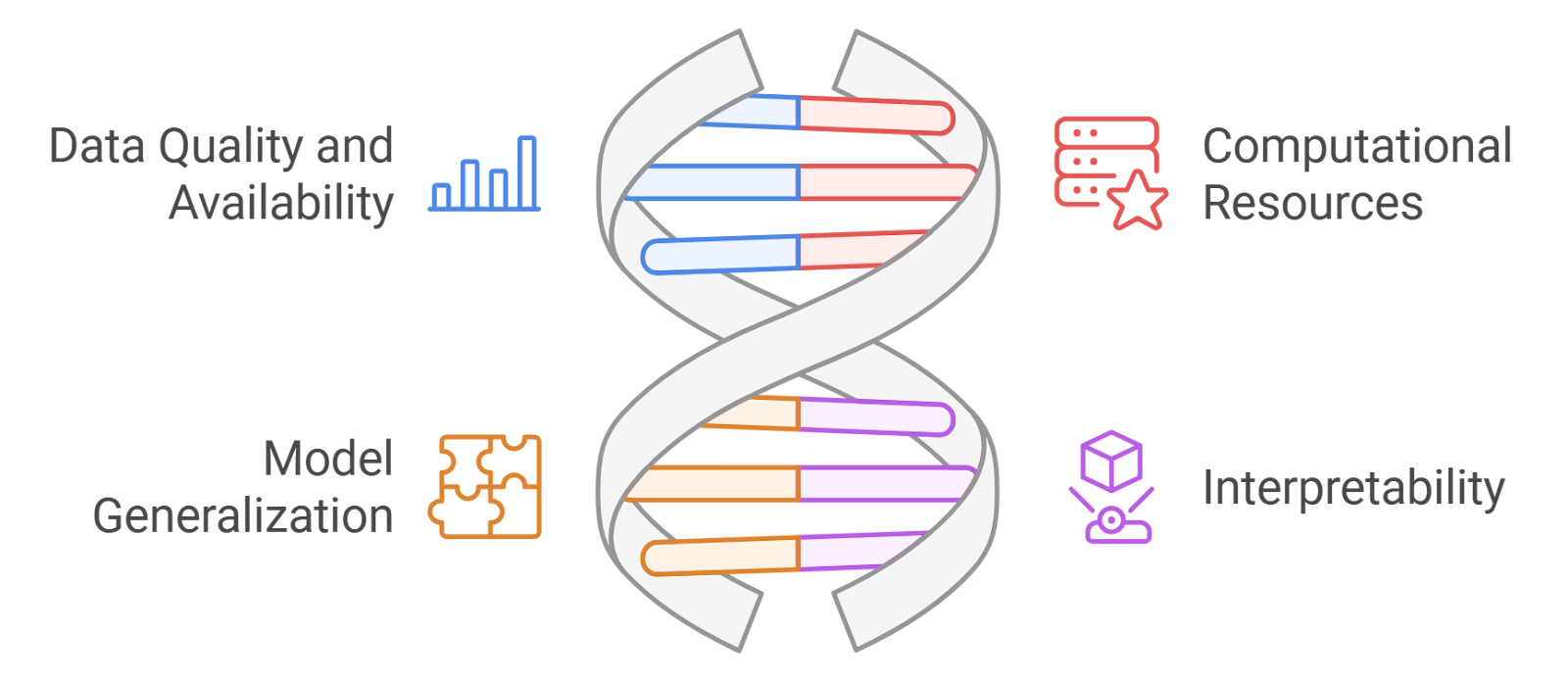

6. Challenges and Limitations

Despite advancements in machine learning and computer vision, several challenges and limitations persist that can hinder the effectiveness of these technologies.

- Data quality and availability:

- Insufficient or biased datasets can lead to poor model performance.

- Data privacy concerns may limit access to necessary training data.

- Computational resources:

- High-performance models often require significant computational power, which may not be feasible for all applications.

- Limited hardware capabilities can restrict the deployment of complex models in real-time scenarios.

- Model generalization:

- Models trained on specific datasets may struggle to generalize to new, unseen data.

- Overfitting can occur when a model learns noise in the training data rather than the underlying patterns.

- Interpretability:

- Many machine learning models, especially deep learning, operate as "black boxes," making it difficult to understand their decision-making processes.

- Lack of transparency can hinder trust and adoption in critical applications.

6.1. Environmental factors affecting vision systems

Environmental factors play a significant role in the performance of vision systems, impacting their accuracy and reliability.

- Lighting conditions:

- Variations in natural and artificial lighting can affect image quality and object detection.

- Shadows, glare, and low-light conditions can lead to misinterpretations by vision systems.

- Weather conditions:

- Rain, fog, snow, and other adverse weather can obscure visibility and affect sensor performance.

- Changes in environmental conditions can lead to increased false positives or negatives in object detection.

- Background clutter:

- Complex backgrounds can confuse vision systems, making it difficult to distinguish between relevant objects and noise.

- Cluttered environments may require advanced algorithms to filter out distractions.

- Motion blur:

- Rapid movement of objects or the camera can result in motion blur, complicating the task of accurately identifying and tracking objects.

- Stabilization techniques may be necessary to mitigate this issue.

- Temperature and humidity:

- Extreme temperatures can affect the performance of sensors and cameras, leading to potential failures.

- High humidity can cause condensation on lenses, impacting image clarity.

- Calibration and alignment:

- Proper calibration of cameras and sensors is essential for accurate measurements and predictions.

- Misalignment can lead to distorted images and incorrect interpretations.

By understanding these environmental factors, developers can design more robust vision systems that are resilient to various challenges.
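
One simple way to act on the lighting and visibility factors above is a per-frame quality gate that flags frames the downstream detector should treat with caution. The sketch below uses only mean brightness and contrast of a grayscale frame. The thresholds and labels are assumptions for illustration, and a real system would rely on richer cues (exposure metadata, blur measures, sensor diagnostics).

```python
def assess_frame(gray, dark_mean=40, bright_mean=215, min_contrast=10):
    """Classify an 8-bit grayscale frame (list of rows) by basic statistics."""
    pixels = [p for row in gray for p in row]
    mean = sum(pixels) / len(pixels)
    variance = sum((p - mean) ** 2 for p in pixels) / len(pixels)
    contrast = variance ** 0.5  # standard deviation as a contrast proxy

    if mean < dark_mean:
        return "low_light"
    if mean > bright_mean:
        return "overexposed"    # for example glare or direct sunlight
    if contrast < min_contrast:
        return "low_contrast"   # for example fog or a fogged lens
    return "ok"


if __name__ == "__main__":
    print(assess_frame([[10, 12], [8, 11]]))
    print(assess_frame([[30, 200], [220, 60]]))
```

A flagged frame does not have to be discarded. It can instead lower the confidence assigned to camera detections so that radar or lidar inputs carry more weight.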

At Rapid Innovation, we leverage our expertise in real-time inference optimization to help clients enhance their machine learning applications. By employing advanced techniques and utilizing cutting-edge hardware, we ensure that our clients achieve greater ROI through improved performance and efficiency. Partnering with us means you can expect tailored solutions that address your unique challenges, ultimately leading to faster deployment and better outcomes for your business.

6.2. Computational Constraints in Embedded Systems

Embedded systems are specialized computing systems that perform dedicated functions within larger mechanical or electrical systems. They often face several computational constraints that can impact their performance and functionality.

- Limited Processing Power:

- Embedded systems typically use microcontrollers or microprocessors with lower clock speeds and fewer cores compared to general-purpose computers.

- This limitation restricts the complexity of algorithms and applications that can be run.

- Memory Limitations:

- These systems often have constrained RAM and storage, which can limit the amount of data they can process or store.

- Efficient memory management is crucial to ensure that applications run smoothly without exceeding available resources.

- Energy Consumption:

- Many embedded systems are battery-operated, necessitating energy-efficient designs to prolong battery life.

- This often requires trade-offs between performance and power consumption, leading to the need for optimized algorithms.

- Real-Time Processing Requirements:

- Many embedded systems must operate in real-time, meaning they need to process inputs and produce outputs within strict time constraints.

- This requirement can complicate software design and necessitate the use of real-time operating systems (RTOS).

- Hardware Limitations:

- The specific hardware used in embedded systems can impose constraints on the types of tasks that can be performed.

- Developers must often work within the limitations of the hardware, which can affect the overall system design.
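
A quick back-of-the-envelope check often shows whether a model can fit a given embedded target at all. The sketch below estimates weight storage from the parameter count and numeric precision, and compares measured latency against the per-frame time budget implied by the camera frame rate. All numbers in the example are hypothetical, and the estimate ignores activation memory and runtime overhead.

```python
BYTES_PER_PARAM = {"float32": 4, "float16": 2, "int8": 1}


def model_size_mb(num_params, dtype):
    """Approximate weight storage in megabytes (10^6 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e6


def fits_budget(num_params, dtype, memory_budget_mb,
                latency_ms, frame_rate_hz):
    """True if weights fit in memory and inference keeps up with the camera."""
    frame_budget_ms = 1000.0 / frame_rate_hz
    return (model_size_mb(num_params, dtype) <= memory_budget_mb
            and latency_ms <= frame_budget_ms)


if __name__ == "__main__":
    # Hypothetical 25M-parameter detector on a target with 64 MB for weights.
    print(model_size_mb(25_000_000, "float32"))                 # MB at fp32
    print(fits_budget(25_000_000, "float32", 64, 20.0, 30.0))
    print(fits_budget(25_000_000, "int8", 64, 20.0, 30.0))
```

At 30 frames per second the whole pipeline has roughly 33 ms per frame, so both the memory check and the latency check have to pass before a model is a realistic candidate.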

6.3. Safety and Reliability Concerns

Safety and reliability are critical aspects of embedded systems, especially in applications where failure can lead to catastrophic consequences.

- System Failures:

- Embedded systems can fail due to hardware malfunctions, software bugs, or unexpected environmental conditions.

- Ensuring reliability often involves rigorous testing and validation processes.

- Safety-Critical Applications:

- Many embedded systems are used in safety-critical applications, such as automotive, medical devices, and aerospace.

- These systems must adhere to strict safety standards and regulations to minimize risks.

- Redundancy and Fault Tolerance:

- Implementing redundancy (e.g., duplicate components) can enhance system reliability by providing backup options in case of failure.

- Fault-tolerant designs can help systems continue to operate correctly even when some components fail.

- Software Reliability:

- Software bugs can lead to system failures, making it essential to use robust coding practices and thorough testing methodologies.

- Techniques such as formal verification can help ensure that software behaves as intended under all conditions.

- Environmental Factors:

- Embedded systems may operate in harsh environments, which can affect their reliability.

- Designers must consider factors like temperature, humidity, and electromagnetic interference when developing these systems.
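
The redundancy idea above is commonly realized as a voter that sits behind duplicated components. The sketch below shows two hedged variants: a majority vote for discrete decisions and a median vote for analog readings, so a single faulty channel cannot dictate the output. The function names and example values are illustrative.

```python
from collections import Counter
from statistics import median


def majority_vote(decisions):
    """Return the decision shared by more than half the channels, else None."""
    value, count = Counter(decisions).most_common(1)[0]
    return value if count > len(decisions) / 2 else None


def median_vote(readings):
    """Return the median reading, which ignores a single outlier channel."""
    return median(readings)


if __name__ == "__main__":
    # Three redundant channels; the third one disagrees or has failed.
    print(majority_vote(["brake", "brake", "none"]))
    print(median_vote([10.1, 10.0, 55.0]))
```

Returning `None` when no majority exists forces the caller to handle disagreement explicitly, for example by falling back to a safe state.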

6.4. Ethical Considerations and Decision-Making

The integration of embedded systems into various applications raises several ethical considerations that must be addressed during the design and implementation phases.

- Data Privacy:

- Many embedded systems collect and process personal data, raising concerns about user privacy and data protection.

- Developers must ensure compliance with data protection regulations and implement measures to safeguard user information.

- Autonomy and Control:

- As embedded systems become more autonomous, questions arise about the level of control users have over these systems.

- Ethical considerations include the potential for misuse or unintended consequences of autonomous decision-making.

- Accountability:

- Determining who is responsible for the actions of an embedded system can be complex, especially in safety-critical applications.

- Clear accountability frameworks must be established to address liability in case of failures or accidents.

- Bias in Algorithms:

- Embedded systems often rely on algorithms that can inadvertently introduce bias, leading to unfair or discriminatory outcomes.

- Developers must be vigilant in ensuring that algorithms are designed to be fair and unbiased.

- Environmental Impact:

- The production and disposal of embedded systems can have significant environmental consequences.

- Ethical considerations include the sustainability of materials used and the lifecycle management of these systems.

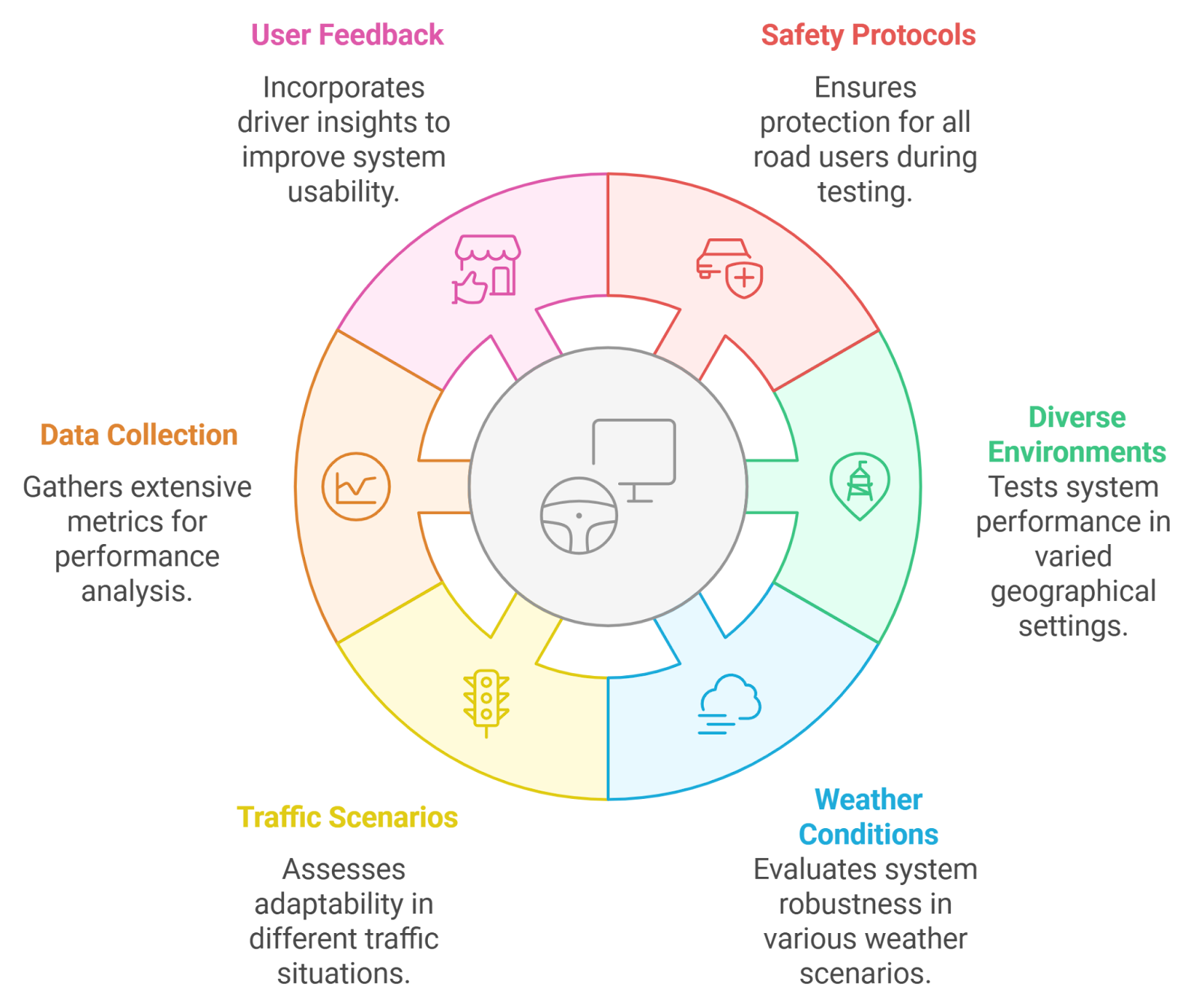

7. Testing and Validation of Vision-Based ADAS

At Rapid Innovation, we understand that testing and validation are critical components in the development of vision-based Advanced Driver Assistance Systems (ADAS). These systems rely heavily on computer vision to interpret data from the vehicle's surroundings, making it essential to ensure their reliability and safety. Our expertise in AI and blockchain technology allows us to provide comprehensive solutions that enhance the testing and validation process, ultimately helping our clients achieve greater ROI.

7.1. Simulation and Virtual Testing Environments

Simulation and virtual testing environments play a vital role in the development and validation of vision-based ADAS. They provide a controlled setting where various scenarios can be tested without the risks associated with real-world driving. By partnering with Rapid Innovation, clients can leverage our advanced simulation capabilities to streamline their development processes.

- Cost-effective: Simulations reduce the need for extensive physical testing, which can be expensive and time-consuming. This efficiency translates into significant cost savings for our clients.

- Controlled scenarios: Developers can create specific driving conditions, such as adverse weather, complex traffic situations, or unusual obstacles, to evaluate system performance. Our tailored simulation solutions ensure that clients can test their systems under a wide range of conditions.

- Repeatability: Tests can be repeated under the same conditions to ensure consistent results, which is crucial for validating system reliability. This repeatability enhances the credibility of the testing process.

- Data generation: Simulations can generate vast amounts of data, allowing for the training and refinement of machine learning algorithms used in vision-based ADAS. Our data analytics capabilities help clients make informed decisions based on this data.

- Integration testing: Virtual environments allow for the testing of how different components of the ADAS interact with each other and with the vehicle's overall systems. This holistic approach ensures that all elements work seamlessly together.

Several tools and platforms are available for simulation, including:

- CARLA: An open-source simulator for autonomous driving research.

- SUMO: A traffic simulation package that can model complex traffic scenarios.

- PreScan: A simulation tool specifically designed for testing vision-based ADAS and automated driving systems.
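
Controlled scenarios and repeatability, two of the benefits listed above, usually come down to enumerating scenario parameters and fixing random seeds. The sketch below is simulator-independent and does not use the API of CARLA, SUMO, or PreScan. The parameter names and the `sample_pedestrian_offsets` helper are hypothetical placeholders for whatever a given simulator expects.

```python
import itertools
import random


def build_scenarios(weather, traffic, times_of_day, base_seed=0):
    """Enumerate every parameter combination and give each a fixed seed."""
    scenarios = []
    combos = itertools.product(weather, traffic, times_of_day)
    for index, (w, t, tod) in enumerate(combos):
        scenarios.append({
            "weather": w,
            "traffic": t,
            "time_of_day": tod,
            "seed": base_seed + index,  # fixed seed makes the run repeatable
        })
    return scenarios


def sample_pedestrian_offsets(scenario, count=3):
    """Draw lateral pedestrian offsets (metres) deterministically per scenario."""
    rng = random.Random(scenario["seed"])
    return [round(rng.uniform(-5.0, 5.0), 2) for _ in range(count)]


if __name__ == "__main__":
    scenarios = build_scenarios(["clear", "rain", "fog"],
                                ["light", "heavy"],
                                ["day", "night"])
    print(len(scenarios))
    print(scenarios[0], sample_pedestrian_offsets(scenarios[0]))
```

Because each scenario carries its own seed, a failing case can be replayed with identical random draws after a fix, which is what makes regression testing in simulation meaningful.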

7.2. Real-World Testing Protocols

While simulations are invaluable, real-world testing is essential to validate the performance of vision-based ADAS in actual driving conditions. This phase ensures that the systems can handle the unpredictability of real-world environments. Rapid Innovation's expertise in developing robust testing protocols ensures that our clients' systems are thoroughly validated.

- Safety protocols: Real-world testing must adhere to strict safety guidelines to protect test drivers, pedestrians, and other road users. Our commitment to safety ensures that clients can conduct tests with confidence.

- Diverse environments: Testing should occur in various settings, including urban, suburban, and rural areas, to assess system performance across different conditions. Our extensive network allows clients to access diverse testing locations.

- Weather conditions: Systems should be tested in various weather scenarios, such as rain, snow, fog, and bright sunlight, to evaluate their robustness. We help clients prepare for all possible conditions.

- Traffic scenarios: Real-world tests should include a range of traffic situations, from heavy congestion to open highways, to ensure the system can adapt to changing conditions. Our expertise in traffic modeling enhances the realism of these tests.

- Data collection: During real-world testing, extensive data should be collected to analyze system performance, including metrics like response time, accuracy, and failure rates. Our data management solutions facilitate effective analysis.

- User feedback: Engaging with test drivers can provide valuable insights into the system's usability and effectiveness, helping to identify areas for improvement. We prioritize user experience to enhance system performance.

Real-world testing is often conducted in phases, starting with closed-course testing before moving to public roads. This gradual approach helps to mitigate risks while ensuring comprehensive validation of the vision-based ADAS. By partnering with Rapid Innovation, clients can expect a streamlined testing process that maximizes efficiency and effectiveness, ultimately leading to greater ROI.
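
For the accuracy metrics mentioned under data collection, detection quality is commonly summarized as precision and recall, with a predicted bounding box counted as correct when its intersection over union (IoU) with a labelled box exceeds a threshold. The sketch below uses greedy matching and a 0.5 threshold as simplifying assumptions. Benchmark suites typically also sort predictions by confidence and average over several thresholds.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix = max(0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def precision_recall(predictions, ground_truth, iou_threshold=0.5):
    """Greedily match each prediction to its best unmatched labelled box."""
    unmatched = list(ground_truth)
    true_positives = 0
    for pred in predictions:
        best = max(unmatched, key=lambda gt: iou(pred, gt), default=None)
        if best is not None and iou(pred, best) >= iou_threshold:
            true_positives += 1
            unmatched.remove(best)  # each label can be matched only once
    precision = true_positives / len(predictions) if predictions else 0.0
    recall = true_positives / len(ground_truth) if ground_truth else 0.0
    return precision, recall


if __name__ == "__main__":
    labels = [(0, 0, 10, 10), (20, 20, 30, 30)]
    detections = [(1, 1, 10, 10), (50, 50, 60, 60)]
    print(precision_recall(detections, labels))
```

In the example, one detection overlaps a labelled box well and one is a false alarm, while the second labelled object is missed entirely, so both precision and recall come out at one half.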

7.3. Performance Metrics and Benchmarks

Performance metrics and benchmarks are essential tools for evaluating the effectiveness and efficiency of processes, products, or services. They provide a framework for measuring success and identifying areas for improvement, ultimately enabling organizations to achieve greater returns on investment (ROI).

- Definition of Performance Metrics:

- Quantitative measures used to assess the performance of an organization or system.

- Examples include sales growth, customer satisfaction scores, and operational efficiency.

- Importance of Benchmarks:

- Benchmarks serve as reference points for comparison.

- They help organizations understand their position relative to industry standards or competitors, allowing for strategic adjustments that can enhance profitability. Typical reference points include KPI benchmarks published for a given industry.

- Types of Performance Metrics:

- Financial Metrics: Revenue growth, profit margins, return on investment (ROI).

- Operational Metrics: Cycle time, throughput, and inventory turnover (often compared against industry-specific benchmarks).

- Customer Metrics: Net promoter score (NPS), customer retention rate, average response time.

- Setting Effective Benchmarks:

- Use historical data to establish realistic targets.

- Consider industry standards and established benchmarking practices.

- Regularly review and adjust benchmarks to reflect changing conditions, ensuring that your organization remains competitive and efficient.

- Data Collection and Analysis:

- Utilize tools like dashboards and analytics software for real-time monitoring.

- Ensure data accuracy and consistency for reliable insights that can inform decision-making, for example when tracking accounts payable or overall equipment effectiveness (OEE) benchmarks.

- Continuous Improvement:

- Use performance metrics to identify gaps and areas for enhancement.

- Implement strategies based on data-driven insights to drive performance, ultimately leading to improved ROI and operational success. Examples include healthcare productivity benchmarks and maintenance KPI benchmarks.

7.4. Regulatory Compliance and Standards

Regulatory compliance and standards are critical for organizations to operate legally and ethically. They ensure that businesses adhere to laws and regulations that govern their industry, safeguarding their reputation and financial health.

- Definition of Regulatory Compliance:

- The process of ensuring that an organization follows relevant laws, regulations, and guidelines.

- Non-compliance can lead to legal penalties, fines, and reputational damage.

- Importance of Compliance:

- Protects the organization from legal risks and financial losses.

- Enhances credibility and trust with customers and stakeholders, which can lead to increased business opportunities.

- Key Regulatory Areas:

- Data Protection: Compliance with laws like GDPR or HIPAA to protect personal information.

- Environmental Regulations: Adhering to standards that minimize environmental impact.

- Health and Safety: Following regulations to ensure workplace safety and employee well-being.

- Standards Organizations:

- Bodies like ISO (International Organization for Standardization) set global standards for quality and safety.

- Compliance with these standards can improve operational efficiency and marketability, giving organizations a competitive edge.

- Compliance Programs:

- Develop comprehensive compliance programs that include training, monitoring, and reporting.

- Regular audits and assessments help ensure ongoing compliance, reducing the risk of penalties.

- Staying Updated:

- Monitor changes in regulations and standards to remain compliant.

- Engage with industry associations and legal experts for guidance, ensuring that your organization is always aligned with current requirements.

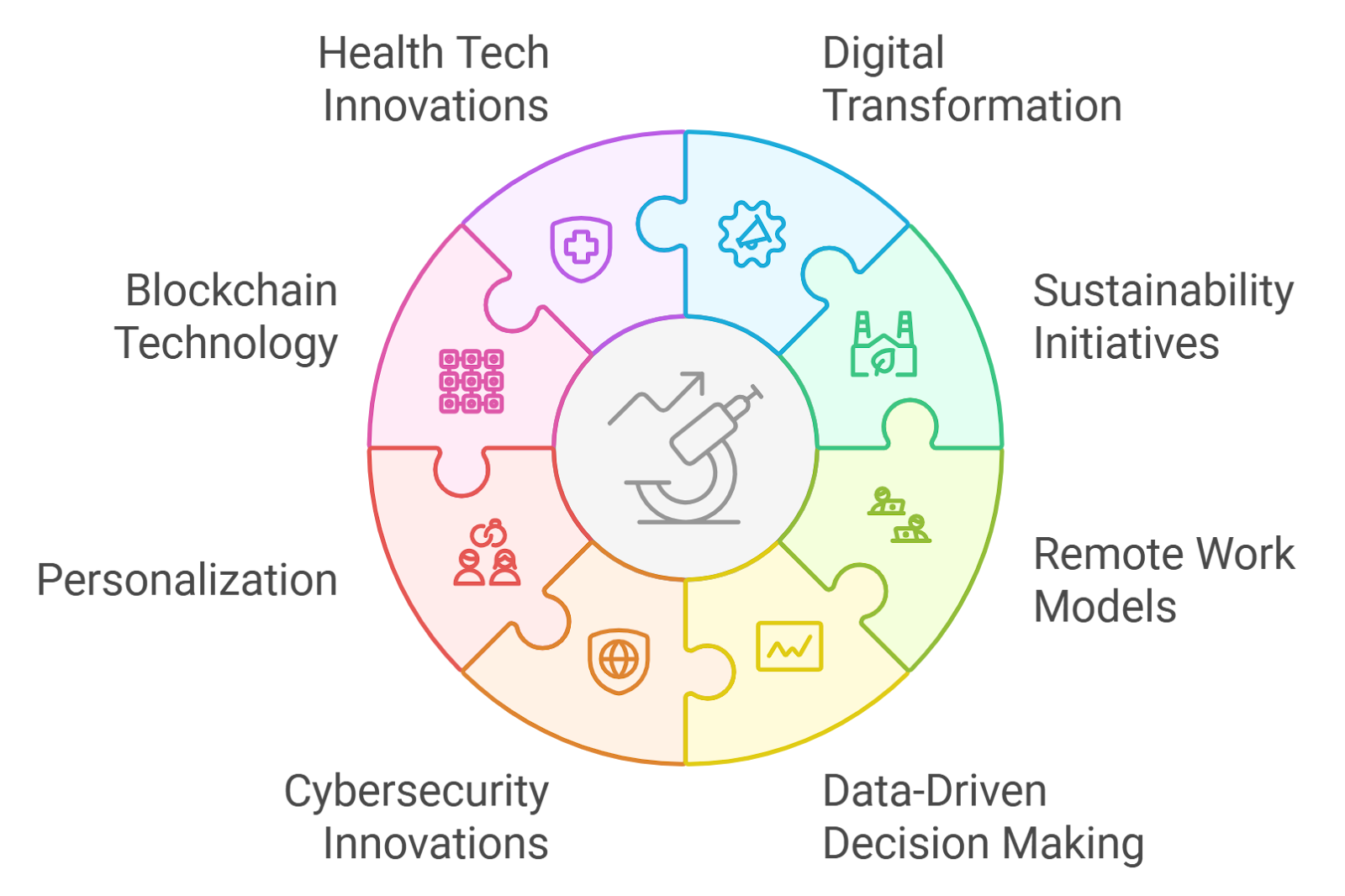

8. Future Trends and Innovations

The landscape of business and technology is constantly evolving, driven by innovation and emerging trends. Understanding these trends is crucial for organizations to stay competitive and maximize their ROI.

- Digital Transformation:

- Businesses are increasingly adopting digital technologies to enhance operations.

- This includes automation, artificial intelligence (AI), and cloud computing, which can streamline processes and reduce costs.

- Sustainability Initiatives:

- Growing emphasis on environmentally friendly practices.

- Companies are investing in sustainable products and processes to meet consumer demand, which can enhance brand loyalty and market share.

- Remote Work and Hybrid Models:

- The shift to remote work is becoming a permanent fixture in many industries.

- Organizations are adopting hybrid work models to improve flexibility and employee satisfaction, leading to higher productivity.

- Data-Driven Decision Making:

- Increased reliance on data analytics for strategic planning and operational efficiency.

- Organizations are leveraging big data to gain insights into customer behavior and market trends, enabling more informed decision-making.

- Cybersecurity Innovations:

- As cyber threats evolve, businesses are investing in advanced cybersecurity measures.

- Innovations include AI-driven security solutions and enhanced encryption technologies, protecting sensitive data and maintaining customer trust.

- Personalization and Customer Experience:

- Companies are focusing on personalized experiences to enhance customer engagement.

- Utilizing AI and machine learning to tailor products and services to individual preferences can lead to increased customer loyalty and sales.

- Blockchain Technology:

- Adoption of blockchain for secure transactions and transparency in supply chains.

- Potential to revolutionize industries by providing decentralized solutions that enhance trust and efficiency.

- Health Tech Innovations:

- Growth in telehealth and wearable technology for improved healthcare delivery.

- Innovations in medical devices and health monitoring systems are transforming patient care, leading to better health outcomes.

- Regulatory Changes:

- Anticipate evolving regulations in response to technological advancements.

- Organizations must stay agile to adapt to new compliance requirements, ensuring they remain competitive and compliant.

By keeping an eye on these trends and innovations, organizations can position themselves for future success and resilience in a rapidly changing environment, ultimately achieving their goals more efficiently and effectively. Partnering with Rapid Innovation can provide the expertise and support needed to navigate these complexities and drive greater ROI.

8.1. Integration with V2X Communication

Vehicle-to-Everything (V2X) communication is a critical component in the evolution of smart transportation systems. It enables vehicles to communicate with each other and with infrastructure, enhancing safety and efficiency.

- V2X includes Vehicle-to-Vehicle (V2V), Vehicle-to-Infrastructure (V2I), Vehicle-to-Pedestrian (V2P), and Vehicle-to-Network (V2N) communications.

- This integration allows for real-time data exchange, which can help in:

- Reducing traffic congestion

- Enhancing road safety by alerting drivers to potential hazards

- Improving navigation through real-time traffic updates

- V2X communication can facilitate cooperative driving, where vehicles work together to optimize traffic flow and reduce accidents.

- The technology relies on standards such as Dedicated Short-Range Communications (DSRC) and Cellular Vehicle-to-Everything (C-V2X), including LTE V2X and 5G V2X technologies.

- According to a report, V2X technology could reduce traffic accidents by up to 80% in certain scenarios.

- Several implementations, including DSRC-based and cellular-based V2X, are being explored for automotive use.

- C-V2X communication, as standardized by 3GPP, is also crucial for the development of robust V2X systems.
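To make the hazard-alerting idea above concrete, here is a minimal sketch of how a vehicle might use position and velocity data received from a nearby vehicle to decide whether to warn the driver. The `StatusMessage` fields, the constant-velocity assumption, and the `horizon_s` and `min_gap_m` thresholds are all illustrative assumptions; this is not the message format or logic of DSRC, C-V2X, or any production system.

```python
import math
from dataclasses import dataclass


@dataclass
class StatusMessage:
    """Hypothetical, simplified vehicle status broadcast (not a real V2X format)."""
    vehicle_id: str
    x_m: float      # position in a shared local frame, meters
    y_m: float
    vx_mps: float   # velocity components, meters per second
    vy_mps: float


def closest_approach(ego: StatusMessage, other: StatusMessage) -> tuple[float, float]:
    """Return (time_s, distance_m) of closest approach, assuming constant velocities."""
    rx, ry = other.x_m - ego.x_m, other.y_m - ego.y_m
    vx, vy = other.vx_mps - ego.vx_mps, other.vy_mps - ego.vy_mps
    rel_speed_sq = vx * vx + vy * vy
    if rel_speed_sq == 0.0:
        # No relative motion: the gap never changes.
        return 0.0, math.hypot(rx, ry)
    # Time that minimizes the separation, clamped so we never look into the past.
    t = max(0.0, -(rx * vx + ry * vy) / rel_speed_sq)
    return t, math.hypot(rx + vx * t, ry + vy * t)


def should_alert(ego: StatusMessage, other: StatusMessage,
                 horizon_s: float = 4.0, min_gap_m: float = 2.0) -> bool:
    """Alert if the vehicles come within min_gap_m inside the time horizon."""
    t, gap = closest_approach(ego, other)
    return t <= horizon_s and gap < min_gap_m
```

For example, an ego vehicle traveling at 20 m/s toward a stopped vehicle 60 m ahead in the same lane reaches it in 3 seconds, so `should_alert` returns `True` with the default thresholds. The same stopped vehicle in an adjacent lane 3.5 m to the side does not trigger an alert.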

8.2. Advancements in 3D Scene Understanding

3D scene understanding is essential for autonomous vehicles to navigate complex environments. It involves interpreting the surroundings in three dimensions to make informed driving decisions.

- Key advancements include:

- Enhanced sensor technologies, such as LiDAR and advanced cameras, which provide detailed spatial information.

- Machine learning algorithms that improve object detection and classification in real-time.

- Integration of data from multiple sensors to create a comprehensive 3D map of the environment.

- These advancements enable vehicles to:

- Identify and track dynamic objects, such as pedestrians and cyclists.

- Understand road conditions and obstacles, improving navigation and safety.

- Predict the behavior of other road users, allowing for proactive decision-making.

- Research indicates that improved 3D scene understanding can significantly enhance the reliability of autonomous systems in urban environments.
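One common way to combine camera and LiDAR data is to project LiDAR points into the camera image and attach depth to a 2D detection. The sketch below illustrates that idea with a basic pinhole camera model. It assumes the LiDAR points have already been transformed into the camera frame (x right, y down, z forward) and that the intrinsics `fx`, `fy`, `cx`, `cy` and the detection box come from elsewhere; the function names are hypothetical.

```python
from statistics import median


def project_to_pixel(point_cam, fx, fy, cx, cy):
    """Project a 3D point in the camera frame to pixel coordinates.

    Returns None for points at or behind the camera plane.
    """
    x, y, z = point_cam
    if z <= 0:
        return None
    return (fx * x / z + cx, fy * y / z + cy)


def object_depth_m(points_cam, box, fx, fy, cx, cy):
    """Median forward distance of the points that project inside a 2D box.

    box is (u_min, v_min, u_max, v_max) in pixels. Returns None if no
    point falls inside the box.
    """
    u_min, v_min, u_max, v_max = box
    depths = []
    for point in points_cam:
        pixel = project_to_pixel(point, fx, fy, cx, cy)
        if pixel is None:
            continue
        u, v = pixel
        if u_min <= u <= u_max and v_min <= v <= v_max:
            depths.append(point[2])
    return median(depths) if depths else None
```

The median is used here instead of the mean so that a few stray points from the background inside the box have less influence on the estimate.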

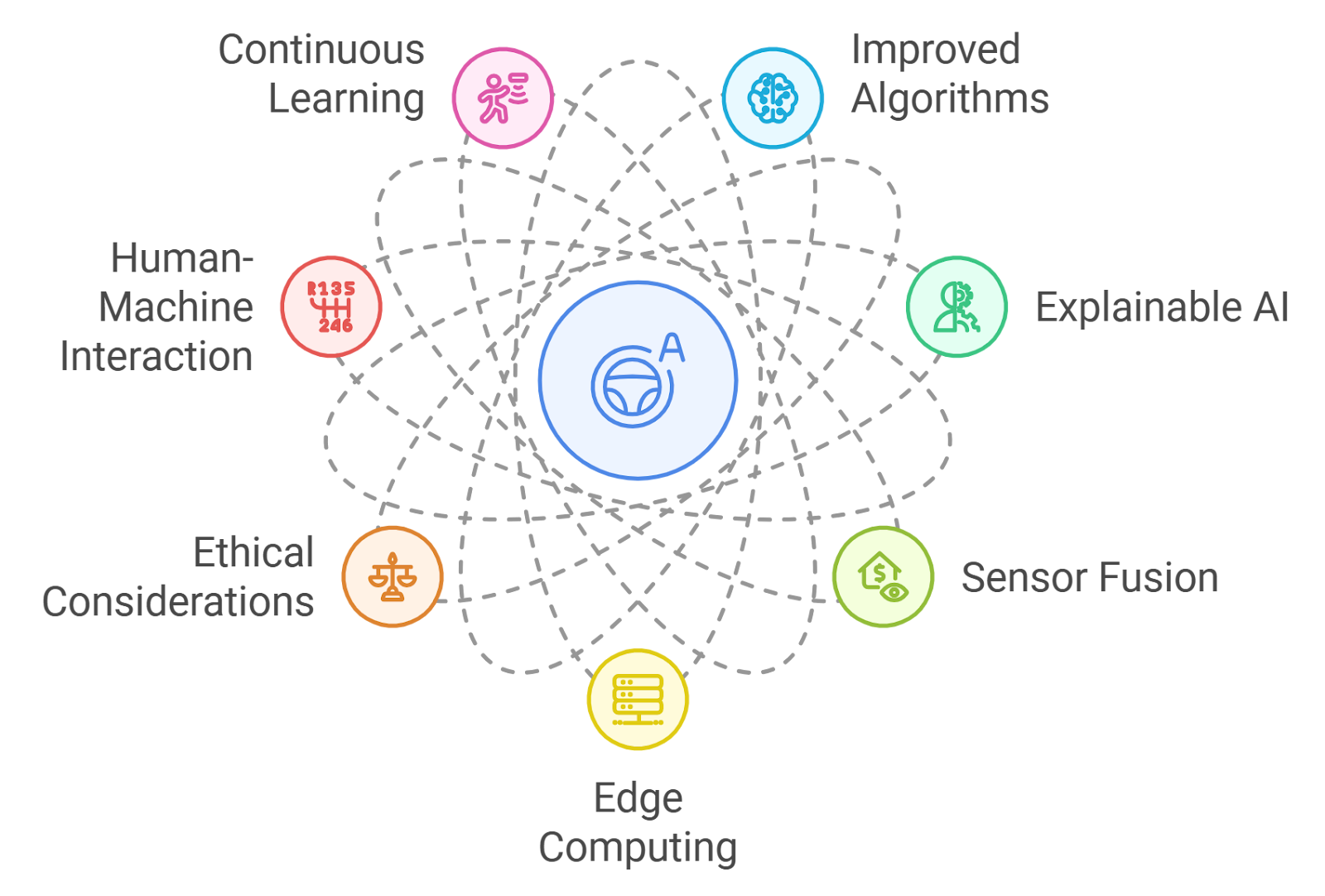

8.3. Towards Fully Autonomous Driving

The journey towards fully autonomous driving involves overcoming numerous technical, regulatory, and societal challenges. Fully autonomous vehicles, classified as Level 5 in the SAE levels of driving automation, can operate without human intervention in all conditions.

- Key developments in this area include:

- Advanced AI algorithms that enable vehicles to learn from vast amounts of driving data.

- Improved sensor fusion techniques that combine data from various sources for better situational awareness.

- Ongoing testing and validation in diverse environments to ensure safety and reliability.

- Regulatory frameworks are evolving to accommodate the deployment of autonomous vehicles, including:

- Establishing safety standards and testing protocols.

- Addressing liability and insurance issues related to autonomous driving.

- Public acceptance is crucial for the widespread adoption of fully autonomous vehicles, which involves:

- Educating the public about the benefits and safety of autonomous technology.

- Addressing concerns about job displacement in driving-related industries.

- According to industry forecasts, the global autonomous vehicle market is expected to reach $557 billion by 2026.
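The sensor fusion point in the list above can be illustrated with one of the simplest fusion rules: inverse-variance weighting. The sketch assumes each sensor reports an independent, unbiased estimate of the same quantity along with a known variance. Real systems typically use richer methods such as Kalman filters, so treat this as an illustration of the principle rather than a production approach.

```python
def fuse_estimates(estimates):
    """Fuse independent measurements of the same quantity.

    estimates is a list of (value, variance) pairs with variance > 0.
    Returns (fused_value, fused_variance), weighting each measurement by
    the inverse of its variance so that more certain sensors count more.
    """
    if not estimates:
        raise ValueError("at least one estimate is required")
    weights = [1.0 / variance for _, variance in estimates]
    total_weight = sum(weights)
    fused_value = sum(w * value for w, (value, _) in zip(weights, estimates)) / total_weight
    return fused_value, 1.0 / total_weight
```

With a radar range of 50.0 m (variance 1.0) and a camera range of 54.0 m (variance 4.0), the fused estimate is 50.8 m with variance 0.8. The result sits closer to the more precise sensor and is more certain than either input alone.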

At Rapid Innovation, we understand the complexities and opportunities presented by these advancements in transportation technology. Our expertise in AI and blockchain development positions us uniquely to help clients navigate this evolving landscape. By partnering with us, clients can expect:

- Increased ROI: Our tailored solutions are designed to optimize operational efficiency, leading to significant cost savings and improved profitability.

- Enhanced Safety and Compliance: We ensure that your systems are compliant with the latest regulations and safety standards, reducing liability and enhancing public trust.

- Cutting-Edge Technology Integration: We leverage the latest advancements in 3D scene understanding and in V2X communication, including C-V2X and IEEE 802.11p-based V2X, to provide innovative solutions that keep you ahead of the competition.

- Strategic Insights: Our consulting services offer valuable insights into market trends and consumer behavior, enabling you to make informed decisions that drive growth.

By choosing Rapid Innovation, you are not just investing in technology; you are investing in a partnership that prioritizes your success and helps you achieve your goals efficiently and effectively.

8.4. Edge Computing and Distributed Processing

Edge computing is the practice of processing data near where it is generated rather than relying solely on a centralized data center. This approach is becoming increasingly important with the rise of IoT devices and the need for real-time data processing.

- Reduces latency: Processing data close to where it is generated minimizes the time it takes for data to travel to a central server and back.

- Bandwidth efficiency: Less data needs to be sent over the network, which can alleviate congestion and lower costs.

- Enhanced security: Sensitive data can be processed locally, reducing the risk of exposure during transmission.

- Scalability: Processing tasks can be distributed across multiple devices, making it easier to scale operations as needed, including in hybrid cloud and edge deployments.

- Real-time analytics: Businesses can gain insights and act on data in real time, which is crucial for applications like autonomous vehicles and smart cities, often supported by IoT edge platforms.
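A quick back-of-the-envelope calculation shows why the latency and bandwidth points above matter for vehicles. The helper functions below are hypothetical, and the speeds, latencies, and camera settings used with them are illustrative assumptions, not measurements from any specific system.

```python
def distance_during_latency_m(speed_kmh, latency_ms):
    """Distance a vehicle travels while waiting for a result, in meters."""
    return (speed_kmh / 3.6) * (latency_ms / 1000.0)


def raw_video_mbit_per_s(width, height, bytes_per_pixel, fps):
    """Uncompressed video data rate for one camera, in megabits per second."""
    return width * height * bytes_per_pixel * fps * 8 / 1e6
```

At 108 km/h (30 m/s), an assumed 100 ms round trip to a remote server means the vehicle travels about 3 m before a result arrives, while an assumed 10 ms of on-board processing corresponds to about 0.3 m. Similarly, a single uncompressed 1920x1080 camera at 3 bytes per pixel and 30 frames per second produces roughly 1.5 Gbit/s, which is why sending compact detection results instead of raw frames saves so much bandwidth.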

Distributed processing complements edge computing by allowing multiple computers to work on a problem simultaneously. This can lead to:

- Increased processing power: Tasks can be divided among several machines, speeding up computation, as in multi-access edge computing (MEC) deployments.

- Fault tolerance: If one node fails, others can take over, keeping the system reliable, which is critical in 5G edge cloud environments.

- Resource optimization: Distributed systems can use underutilized resources across a network, improving overall efficiency, as in fog computing architectures.

Together, edge computing and distributed processing create a robust framework for handling the growing demands of data-intensive applications, including those served by commercial offerings such as Nutanix edge computing and Azure Stack Edge.
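The task division and fault tolerance described above can be sketched on a single machine using threads as stand-ins for separate nodes. In this minimal illustration, each "node" is just a Python callable, `process_chunk` is a placeholder workload, and a node failure is modeled as a `RuntimeError`. All of these are assumptions made for the example; a real deployment would involve networked machines and a proper scheduler.

```python
from concurrent.futures import ThreadPoolExecutor


def process_chunk(frames):
    """Placeholder workload: mean pixel intensity of each frame."""
    return [sum(frame) / len(frame) for frame in frames]


def split_into_chunks(items, n_parts):
    """Split items into at most n_parts contiguous chunks of similar size."""
    size = max(1, -(-len(items) // n_parts))  # ceiling division
    return [items[i:i + size] for i in range(0, len(items), size)]


def run_with_failover(chunks, nodes):
    """Process chunks in parallel; if a node fails, retry on the next node."""
    def run_chunk(indexed_chunk):
        index, chunk = indexed_chunk
        last_error = None
        for offset in range(len(nodes)):
            node = nodes[(index + offset) % len(nodes)]
            try:
                return node(chunk)
            except RuntimeError as error:
                last_error = error  # this node failed, try the next one
        raise RuntimeError("all nodes failed") from last_error

    with ThreadPoolExecutor(max_workers=len(nodes)) as pool:
        # pool.map preserves the order of the input chunks.
        return list(pool.map(run_chunk, enumerate(chunks)))
```

Each chunk is first assigned to a node in round-robin order and only moves to another node if the first one raises an error, so a single failed node slows down its chunks but does not lose them.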

9. Case Studies and Real-World Implementations

Case studies provide valuable insights into how technologies are applied in real-world scenarios. They illustrate the practical benefits and challenges of implementing new systems and can guide future innovations.

- Industry applications: Various sectors, including healthcare, finance, and manufacturing, have successfully integrated advanced technologies to improve efficiency and service delivery, often by leveraging edge computing.

- Lessons learned: Analyzing case studies helps organizations understand potential pitfalls and best practices, enabling them to make informed decisions about edge-to-cloud platforms and platform-as-a-service offerings.

- Innovation drivers: Successful implementations often lead to further innovations, inspiring other companies to adopt similar technologies, including cloud-connected IoT edge solutions.

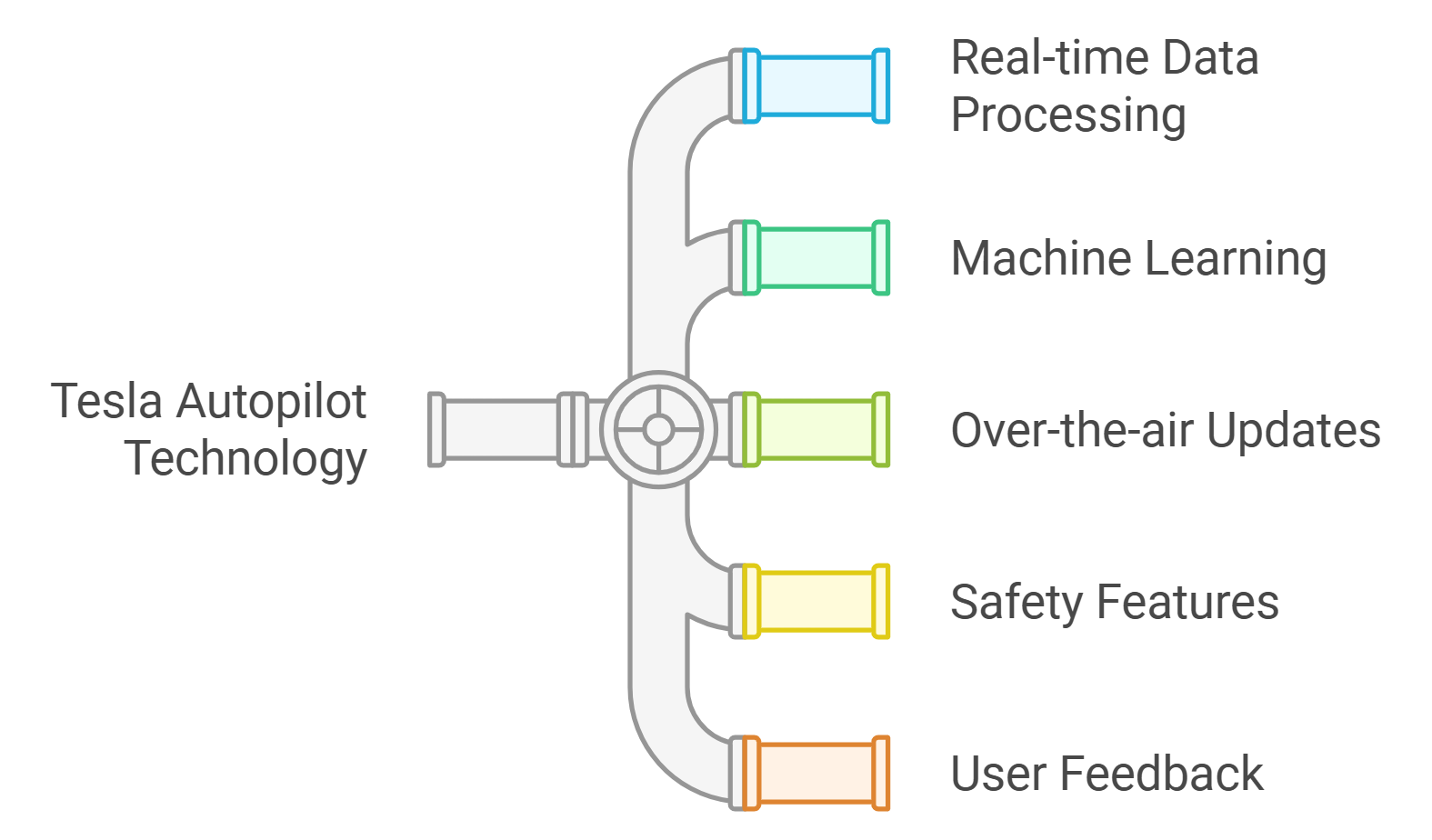

9.1. Tesla Autopilot

Tesla's Autopilot is a prime example of advanced technology in action, showcasing the integration of edge computing and distributed processing in the automotive industry.

- Real-time data processing: Tesla vehicles collect vast amounts of data from their sensors, which is processed locally to enable features like lane-keeping and adaptive cruise control, utilizing edge computing principles.

- Machine learning: The system uses machine learning algorithms to improve its performance over time, learning from the driving behavior of Tesla owners.

- Over-the-air updates: Tesla regularly updates its software, allowing the vehicles to improve their capabilities without requiring a visit to a service center.

- Safety features: Autopilot includes safety measures such as automatic emergency braking and collision avoidance, which rely on real-time data analysis.

- User feedback: Tesla gathers data from its fleet to refine its algorithms, ensuring that the system evolves based on real-world driving conditions.

Tesla's approach to Autopilot exemplifies how edge computing and distributed processing can enhance vehicle performance and safety, setting a benchmark for the future of autonomous driving.

At Rapid Innovation, we leverage these cutting-edge technologies to help our clients achieve their goals efficiently and effectively. By partnering with us, you can expect enhanced operational efficiency, reduced costs, and improved decision-making capabilities, ultimately leading to greater ROI. Our expertise in AI and blockchain development ensures that your organization stays ahead of the curve in an increasingly competitive landscape.

For more insights on how edge computing is transforming industries, check out AI-Driven Edge Computing: Revolutionizing Industries.

9.2. Mobileye Vision Technology

Mobileye is a leader in advanced driver-assistance systems (ADAS) and autonomous driving technologies. Their vision technology is primarily based on computer vision and machine learning algorithms that process data from cameras mounted on vehicles.

- Utilizes a combination of cameras and sensors to perceive the environment effectively.

- Employs advanced algorithms to detect and classify objects, such as pedestrians, vehicles, and road signs, enhancing safety on the road.

- Capable of real-time processing, allowing immediate responses to dynamic driving conditions, which is crucial for accident prevention.

- Integrates with other vehicle systems to support safety features such as lane-keeping assistance and automatic emergency braking.

- Mobileye's EyeQ chips are designed specifically for processing visual data, enabling efficient computation at low power consumption, which translates to cost savings for manufacturers.

- The technology is scalable, making it suitable for vehicle types ranging from passenger cars to commercial trucks and broadening its market reach.

- Mobileye has established partnerships with major automotive manufacturers, accelerating adoption of its technology and driving innovation, much as Waymo has done through its own partnerships in the autonomous driving sector.

9.3. Waymo's Computer Vision Approach

Waymo, a subsidiary of Alphabet Inc., is at the forefront of developing fully autonomous vehicles. Their computer vision approach is integral to their self-driving technology, relying heavily on a combination of sensors and advanced algorithms.

- Utilizes a suite of sensors, including LiDAR, radar, and cameras, to build a comprehensive view of the vehicle's surroundings, giving it a high level of situational awareness.

- Employs deep learning techniques to improve object detection and classification, allowing the vehicle to understand complex environments and make informed decisions.

- Focuses on high-definition mapping to provide precise localization, which is crucial for safe navigation in diverse driving conditions.

- The system is designed to handle a wide range of driving scenarios, from urban environments to highways.

- Waymo's vehicles are equipped with redundant sensor systems to ensure reliability and safety, which is paramount in autonomous driving.

- Continuous data collection from real-world driving helps improve the algorithms and the overall performance of the system, leading to a better rider experience.

- Waymo has conducted extensive testing, logging millions of miles on public roads to refine its technology, demonstrating a commitment to safety and reliability.

10. Conclusion and Outlook

The advancements in vision technology by companies like Mobileye and Waymo are shaping the future of autonomous driving. As these technologies evolve, several trends and considerations emerge.

- Increased collaboration between tech companies and automotive manufacturers is likely to accelerate the development of autonomous vehicles, creating new opportunities for innovation.

- Regulatory frameworks will need to adapt to accommodate the rapid advancements in self-driving technology, ensuring safety and compliance, particularly for driverless vehicles.