1. Introduction to AI Agents in Data Analysis

Artificial Intelligence (AI) agents in data analysis are revolutionizing the way we analyze multi-dimensional data. These intelligent systems can process vast amounts of information, uncover patterns, and provide insights that would be difficult or impossible for humans to achieve alone. The integration of AI agents into data analysis offers numerous benefits, but it also presents several challenges that organizations must navigate.

AI agents can handle complex datasets, including structured and unstructured data. They utilize machine learning algorithms to improve their performance over time and can automate repetitive tasks, freeing up human analysts for more strategic work. At Rapid Innovation, we leverage these capabilities to help our clients streamline their data analysis processes, ultimately leading to greater efficiency and ROI.

The rise of big data has made traditional data analysis methods less effective. AI agents in data analysis can analyze data from various sources, such as social media, IoT devices, and transactional databases, providing a more comprehensive view of the information landscape. This capability is essential for businesses looking to make data-driven decisions in real-time. By implementing AI solutions, Rapid Innovation enables clients to harness the full potential of their data, driving informed decision-making and strategic growth.

Benefits of AI agents in data analysis include:

- Enhanced decision-making through predictive analytics, allowing businesses to anticipate market trends and customer needs.

- Improved accuracy in identifying trends and anomalies, which can lead to more effective marketing strategies and operational efficiencies.

- Ability to process data at unprecedented speeds, enabling organizations to respond swiftly to changing market conditions.

However, the implementation of AI agents in data analysis is not without its challenges. Organizations must consider factors such as data quality, algorithm bias, and the need for skilled personnel to manage these systems. Rapid Innovation provides consulting services to help clients navigate these challenges, ensuring that they can effectively integrate AI into their operations.

Challenges include:

- Data quality issues can lead to inaccurate insights, which we address through robust data governance frameworks.

- Algorithm bias can result in skewed analysis and decision-making; our team emphasizes ethical AI practices to mitigate this risk.

- The demand for skilled data scientists and AI specialists is increasing, and we offer training and support to empower our clients' teams.

In summary, AI agents in data analysis are transforming multi-dimensional data analysis by providing powerful tools for insight generation. While the benefits are significant, organizations must also address the challenges to fully leverage the potential of AI in their data analysis efforts. At Rapid Innovation, we are committed to guiding our clients through this transformative journey, ensuring they achieve their business goals efficiently and effectively. For more information on AI agents for marketing applications, you can read about their use cases, capabilities, best practices, and benefits.

1.1. Understanding Multi-dimensional Data

Multi-dimensional data refers to data that can be viewed and analyzed from multiple perspectives or dimensions. This type of data is crucial in various fields, including business intelligence, scientific research, and social sciences.

- Multi-dimensional data is often represented in the form of cubes, where each axis represents a different dimension.

- Common dimensions include time, geography, and product categories, allowing for complex analysis and insights.

- This data structure enables users to perform operations like slicing, dicing, and drilling down into the data for deeper insights.

- Multi-dimensional databases, such as OLAP (Online Analytical Processing), are specifically designed to handle this type of data efficiently.

- Understanding multi-dimensional data is essential for effective decision-making, as it provides a comprehensive view of the factors influencing outcomes.
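To make "drilling down" concrete, here is a minimal sketch in plain Python. It assumes a toy set of sales records with hypothetical dimensions (month, region, product); rolling up aggregates months into quarters, and drilling down goes back to the month level for one quarter.

```python
from collections import defaultdict

# Hypothetical sales facts: (month, region, product, revenue)
SALES = [
    ("2024-01", "EU", "laptop", 120.0),
    ("2024-02", "EU", "phone", 80.0),
    ("2024-04", "US", "laptop", 200.0),
    ("2024-05", "US", "phone", 50.0),
    ("2024-05", "EU", "laptop", 70.0),
]

def quarter_of(month: str) -> str:
    """Map 'YYYY-MM' to 'YYYY-Qn', one level up the time hierarchy."""
    year, mm = month.split("-")
    return f"{year}-Q{(int(mm) - 1) // 3 + 1}"

def roll_up_by_quarter(facts):
    """Aggregate revenue at the coarser quarter level."""
    totals = defaultdict(float)
    for month, _region, _product, revenue in facts:
        totals[quarter_of(month)] += revenue
    return dict(totals)

def drill_down(facts, quarter: str):
    """Return month-level revenue for a single quarter."""
    totals = defaultdict(float)
    for month, _region, _product, revenue in facts:
        if quarter_of(month) == quarter:
            totals[month] += revenue
    return dict(totals)

print(roll_up_by_quarter(SALES))     # {'2024-Q1': 200.0, '2024-Q2': 320.0}
print(drill_down(SALES, "2024-Q2"))  # {'2024-04': 200.0, '2024-05': 120.0}
```

Production systems push these aggregations into an OLAP engine or SQL `GROUP BY` queries; the dictionary version only illustrates the idea.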

At Rapid Innovation, we apply multidimensional data analysis techniques, including analysis in cube space, to help our clients gain deeper insights into their operations and make informed decisions that drive greater ROI.

1.2. Evolution of Data Analysis Techniques

The evolution of data analysis techniques has transformed how organizations interpret and utilize data.

- Early data analysis methods were primarily manual and involved basic statistical techniques.

- The introduction of computers in the 1960s and 1970s revolutionized data processing, allowing for more complex analyses.

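- Relational databases, first proposed by E. F. Codd in 1970, spread widely in the 1980s. SQL (Structured Query Language), developed at IBM in the 1970s and standardized by ANSI in 1986, became the standard way to query large datasets efficiently.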

- In the 1990s, data mining emerged, allowing analysts to discover patterns and relationships within large datasets. Techniques such as multidimensional analysis in data mining became prominent during this time.

- The 2000s saw the advent of big data technologies, such as Hadoop and Spark, which enabled the processing of vast amounts of unstructured data.

- Today, advanced analytics techniques, including predictive analytics and machine learning, are widely used to derive actionable insights from data.

At Rapid Innovation, we stay at the forefront of these evolving techniques, ensuring our clients can harness the latest advancements to optimize their data strategies and achieve significant returns on their investments. We also explore the role of Analysis Services in enhancing data analysis capabilities: SQL Server Analysis Services supports both multidimensional and tabular models, while Azure Analysis Services supports tabular models.

1.3. Role of AI Agents in Modern Analytics

AI agents play a pivotal role in modern analytics by automating processes and enhancing decision-making capabilities.

- AI agents can analyze large datasets quickly, identifying trends and anomalies that may not be apparent to human analysts.

- They utilize machine learning algorithms to improve their performance over time, adapting to new data and changing conditions.

- AI-driven analytics tools can provide real-time insights, enabling organizations to respond swiftly to market changes.

- Natural language processing (NLP) allows AI agents to interpret and analyze unstructured data, such as customer feedback and social media posts.

- By automating routine tasks, AI agents free up human analysts to focus on more strategic initiatives, enhancing overall productivity.

- The integration of AI in analytics is expected to continue growing, with predictions indicating that AI will significantly impact decision-making processes across industries.

Rapid Innovation specializes in deploying AI agents that empower organizations to unlock the full potential of their data. By integrating AI-driven analytics into their operations, our clients can achieve faster, more accurate insights, leading to improved decision-making and increased ROI. We also emphasize the importance of tabular and multidimensional models in our AI-driven analytics solutions.

1.4. Current State of the Industry

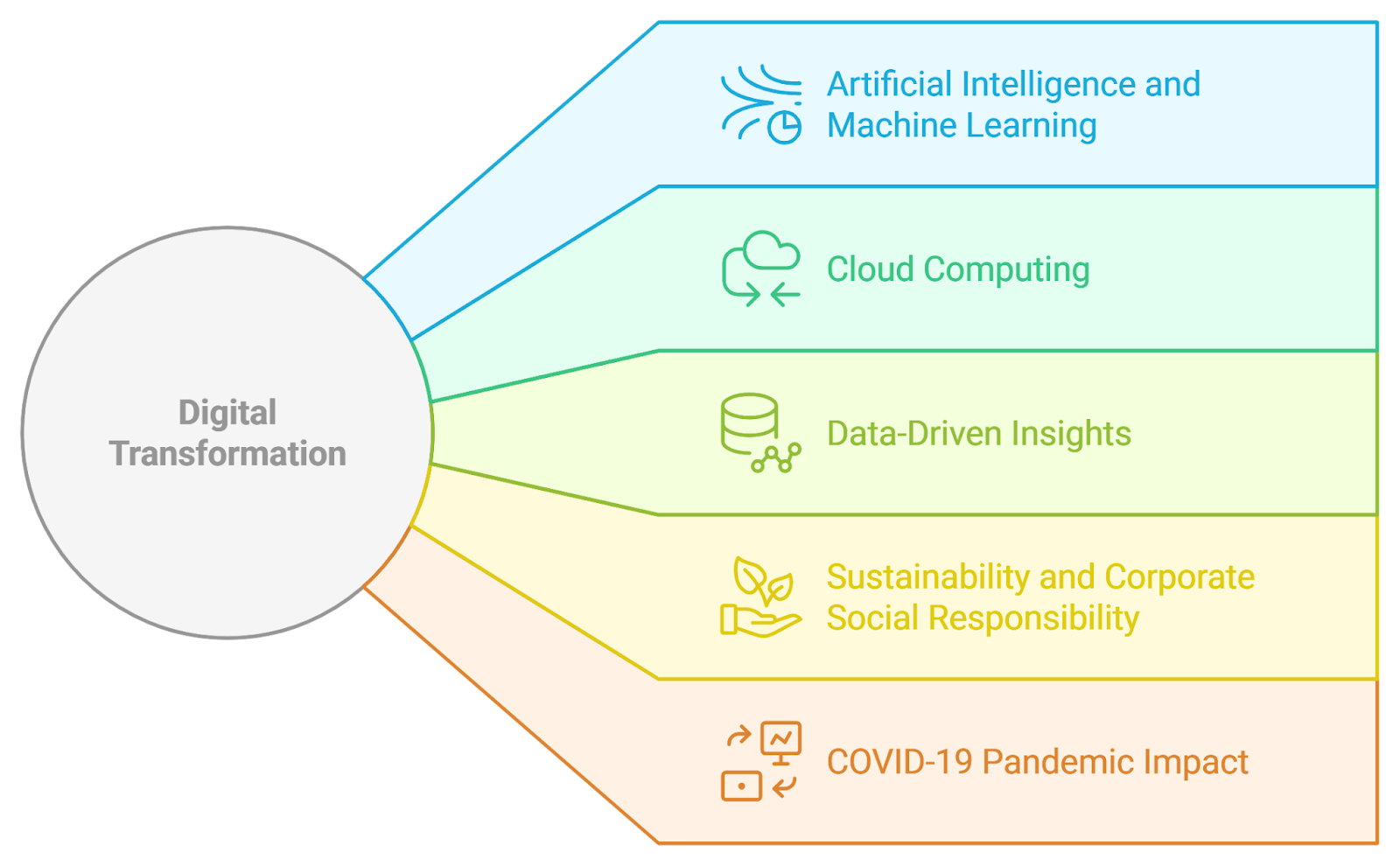

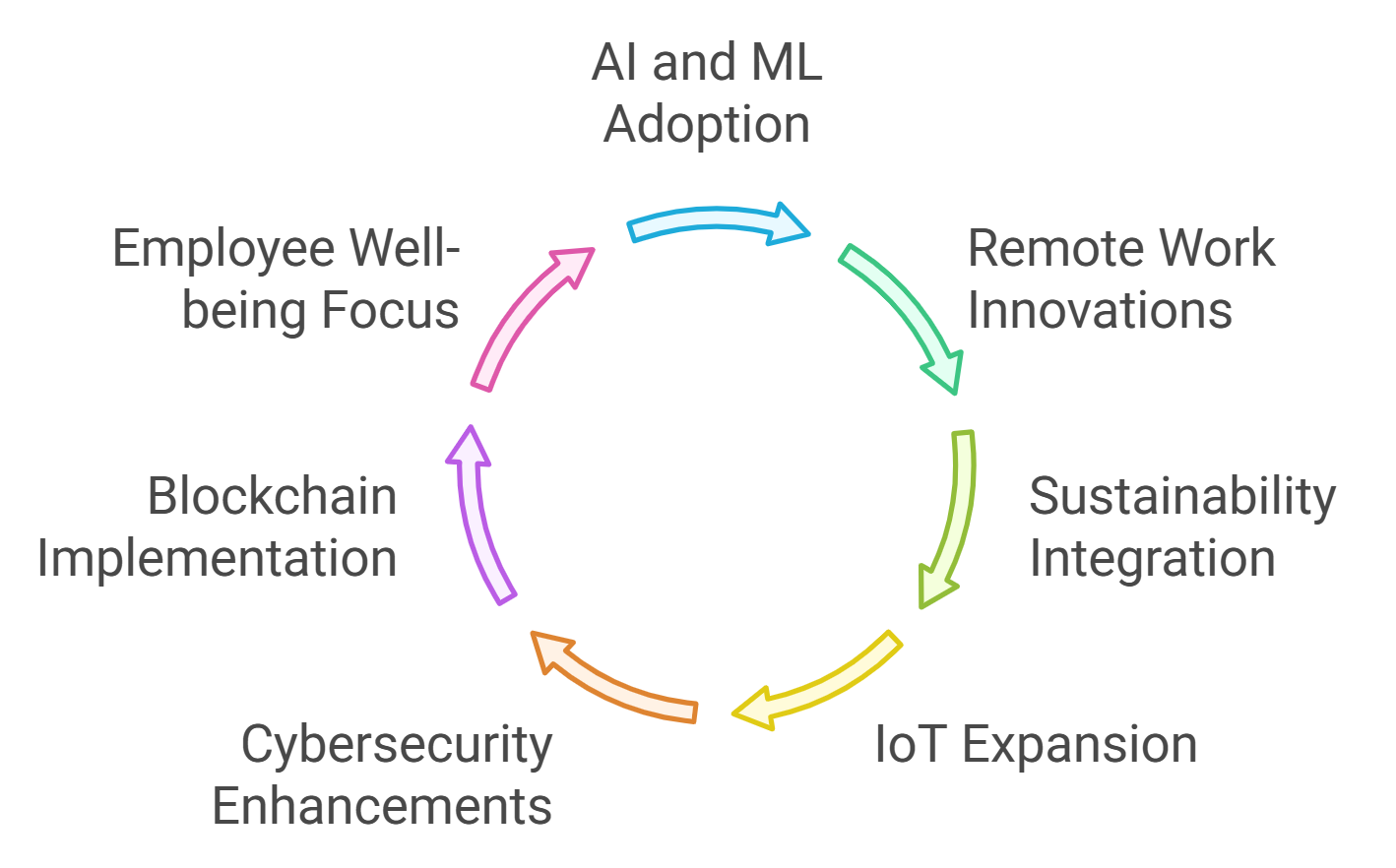

The current state of the industry is characterized by rapid advancements in technology, shifting consumer preferences, and increasing competition. Various sectors are experiencing transformative changes due to digitalization and the integration of innovative solutions, leading to a growing demand for digital transformation consulting.

- The rise of artificial intelligence (AI) and machine learning (ML) is reshaping how businesses operate, enabling them to analyze vast amounts of data for better decision-making. At Rapid Innovation, we use AI to develop tailored solutions that improve operational efficiency and drive significant ROI for our clients, a key focus of digital transformation consulting.

- Companies are increasingly adopting cloud computing for its flexibility, scalability, and cost-effectiveness in managing resources. Our expertise in cloud-based solutions helps clients optimize their infrastructure while reducing overhead costs.

- Demand for data-driven insights is growing, driving a surge in analytics tools and platforms that help organizations use their data effectively. Rapid Innovation builds advanced analytics solutions that help businesses make informed decisions and improve profitability.

- Sustainability and corporate social responsibility are becoming essential parts of business strategy, as consumers increasingly favor environmentally responsible companies. We help clients integrate sustainable practices into their operations through innovative technology, strengthening brand reputation and customer loyalty.

- The COVID-19 pandemic accelerated digital transformation across industries, pushing businesses to adapt quickly to remote work and online services. Our consulting services guide organizations through this transition so they remain competitive.

According to a report by McKinsey, companies that have embraced digital transformation are 2.5 times more likely to experience revenue growth than their peers. This highlights the importance of staying ahead in a rapidly evolving landscape, underscoring the value of engaging with the best digital transformation consulting firms.

2. Fundamental Concepts

Understanding fundamental concepts is crucial for grasping the complexities of any industry. These concepts serve as the building blocks for more advanced theories and practices.

- Fundamental concepts often include key terminologies, principles, and frameworks that guide decision-making and strategy formulation.

- They provide a common language for professionals within the industry, facilitating communication and collaboration.

- A solid grasp of these concepts enables individuals and organizations to adapt to changes and challenges effectively.

2.1. Multi-dimensional Data Structures

Multi-dimensional data structures are essential for organizing and analyzing complex datasets. They allow for the representation of data in multiple dimensions, making it easier to extract meaningful insights.

- These structures can represent data in various forms, such as arrays, matrices, or cubes, enabling users to analyze relationships and patterns across different dimensions.

- Multi-dimensional data structures are particularly useful in fields like data warehousing, business intelligence, and analytics, where large volumes of data need to be processed efficiently.

- They support advanced analytical techniques, such as OLAP (Online Analytical Processing), which allows users to perform complex queries and generate reports quickly.

- By utilizing multi-dimensional data structures, organizations can enhance their decision-making processes, leading to improved operational efficiency and strategic planning.

In summary, the current state of the industry reflects a dynamic environment driven by technological advancements and changing consumer expectations. Understanding fundamental concepts, particularly multi-dimensional data structures, is vital for navigating this landscape effectively. Rapid Innovation is committed to helping clients harness these advancements to achieve their business goals efficiently and effectively through comprehensive digital transformation consulting.

2.1.1. Data Cubes

A data cube is a multidimensional array of values, used primarily in data warehousing and online analytical processing (OLAP). It organizes data in a way that enables efficient querying and analysis.

- Data cubes can represent data across multiple dimensions, such as time, geography, and product categories.

- They facilitate complex calculations and aggregations, making it easier to derive insights from large datasets.

- Users can perform operations like slicing, dicing, and drilling down into the data to explore different perspectives.

- Data cubes are particularly useful in business intelligence applications, where decision-makers need to analyze trends and patterns quickly.

- They can be implemented using various database technologies, including SQL databases and specialized OLAP systems such as SQL Server Analysis Services (SSAS).
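Slicing and dicing can be sketched with a cube stored as a Python dictionary keyed by coordinates. This is a minimal illustration assuming a hypothetical three-dimensional cube (year, region, product) of unit sales; an OLAP engine performs the same operations over precomputed aggregates at much larger scale.

```python
from typing import Dict, Set, Tuple

Cell = Tuple[str, str, str]  # (year, region, product)

# Hypothetical cube: each cell holds total units sold
CUBE: Dict[Cell, int] = {
    ("2023", "EU", "laptop"): 10,
    ("2023", "US", "laptop"): 15,
    ("2023", "EU", "phone"): 30,
    ("2024", "EU", "laptop"): 12,
    ("2024", "US", "phone"): 25,
    ("2024", "US", "laptop"): 18,
}

def slice_cube(cube: Dict[Cell, int], year: str) -> Dict[Tuple[str, str], int]:
    """Slice: fix the 'year' dimension, leaving a 2-D (region, product) view."""
    return {(r, p): v for (y, r, p), v in cube.items() if y == year}

def dice_cube(cube: Dict[Cell, int], years: Set[str], regions: Set[str],
              products: Set[str]) -> Dict[Cell, int]:
    """Dice: keep only cells whose coordinates fall inside the given subsets."""
    return {
        (y, r, p): v
        for (y, r, p), v in cube.items()
        if y in years and r in regions and p in products
    }

def total(cube) -> int:
    """Roll up every remaining dimension into a single sum."""
    return sum(cube.values())

print(slice_cube(CUBE, "2024"))
print(total(dice_cube(CUBE, {"2023", "2024"}, {"US"}, {"laptop"})))  # 33
```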

At Rapid Innovation, we use data cubes, including BI cubes built with Analysis Services, to help clients optimize their data analysis processes and make informed decisions that drive greater ROI. By implementing tailored data cube solutions, we help organizations uncover actionable insights from their data and strengthen their strategic initiatives. Our experience with data cubes in data warehouse environments helps us design the right solution for each client. For more information, see our overview of key concepts and technologies in AI.

2.1.2. Tensors

Tensors are mathematical objects that generalize scalars, vectors, and matrices to higher dimensions. They are fundamental in various fields, including physics, engineering, and machine learning. A tensor can be thought of as a multi-dimensional array, where a scalar is a 0-dimensional tensor, a vector is a 1-dimensional tensor, and a matrix is a 2-dimensional tensor.

- Tensors are crucial in deep learning, where they are used to represent data inputs, weights, and outputs in neural networks.

- Operations on tensors, such as addition, multiplication, and contraction, are essential for training machine learning models.

- Libraries like TensorFlow and PyTorch provide extensive support for tensor operations, making it easier for developers to build and train complex models.
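The tensor ranks and the contraction operation described above can be shown with NumPy arrays, which behave like tensors for these purposes. This sketch uses NumPy only to keep dependencies light; TensorFlow and PyTorch provide analogous operations on their own tensor types. The array contents are arbitrary examples.

```python
import numpy as np

scalar = np.array(3.0)                 # rank-0 tensor
vector = np.array([1.0, 2.0, 3.0])     # rank-1 tensor
matrix = np.arange(6.0).reshape(2, 3)  # rank-2 tensor, shape (2, 3)
batch = np.ones((4, 2, 3))             # rank-3 tensor, e.g. a batch of 4 matrices

for name, t in [("scalar", scalar), ("vector", vector), ("matrix", matrix), ("batch", batch)]:
    print(name, t.ndim, t.shape)

# Contraction: sum over the shared axis of length 3 (equivalent to matrix @ vector)
contracted = np.tensordot(matrix, vector, axes=([1], [0]))
print(contracted.tolist())  # [8.0, 26.0]

# Batched contraction with einsum: contract the last axis of every matrix in the batch
batched = np.einsum("bij,j->bi", batch, vector)
print(batched.shape)  # (4, 2)
```

The same "contract along a shared axis" pattern is what a dense neural-network layer does when it multiplies a batch of inputs by a weight matrix.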

At Rapid Innovation, our expertise in tensor manipulation allows us to develop advanced machine learning models that can significantly enhance predictive analytics for our clients. By utilizing state-of-the-art tensor operations, we help businesses achieve higher accuracy in their forecasts, leading to improved operational efficiency and ROI.

2.1.3. High-dimensional Arrays

High-dimensional arrays are data structures that extend the concept of arrays to multiple dimensions. They are commonly used in scientific computing, data analysis, and machine learning.

- High-dimensional arrays can store data in three or more dimensions, allowing for the representation of complex datasets.

- They enable efficient storage and manipulation of large datasets, which is essential in fields like image processing and genomics.

- Operations on high-dimensional arrays include reshaping, slicing, and broadcasting, which facilitate data manipulation and analysis.

- Libraries such as NumPy and SciPy in Python provide robust support for high-dimensional arrays, making it easier for researchers and developers to work with complex data.

- High-dimensional arrays are particularly useful in applications that require the analysis of multi-faceted data, such as time series analysis and multi-channel image processing.
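The reshaping, slicing, and broadcasting operations mentioned above can be sketched with NumPy. The example assumes a hypothetical batch of two 4x4 RGB images stored as a four-dimensional array (batch, height, width, channels), the kind of layout used in multi-channel image processing.

```python
import numpy as np

# Hypothetical batch of 2 RGB images, 4x4 pixels: shape (batch, height, width, channels)
images = np.arange(2 * 4 * 4 * 3, dtype=np.float64).reshape(2, 4, 4, 3)

# Slicing: the red channel (index 0) of the first image -> shape (4, 4)
red_first = images[0, :, :, 0]

# Reshaping: flatten each image into one feature vector -> shape (2, 48)
flat = images.reshape(images.shape[0], -1)

# Broadcasting: subtract the per-channel mean (shape (3,)) from every pixel
channel_mean = images.mean(axis=(0, 1, 2))
centered = images - channel_mean

print(red_first.shape, flat.shape, channel_mean.shape)  # (4, 4) (2, 48) (3,)
print(np.allclose(centered.mean(axis=(0, 1, 2)), 0.0))  # True
```

Broadcasting lets the `(3,)` mean vector line up with the last axis of the `(2, 4, 4, 3)` array without copying it, which is why per-channel normalization needs no explicit loop.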

Rapid Innovation employs high-dimensional arrays to enhance data processing capabilities for our clients, particularly in sectors like healthcare and finance. By implementing solutions that utilize these advanced data structures, we enable organizations to analyze complex datasets more effectively, ultimately driving better business outcomes and maximizing ROI.

2.2. AI Agent Architecture

AI agent architecture refers to the structured framework that defines how an AI agent operates, interacts with its environment, and processes information. This architecture is crucial for developing intelligent systems capable of performing tasks autonomously. The design of an AI agent typically includes several layers that facilitate perception, decision-making, and action. Architectures can be categorized into various types, such as reactive agents, deliberative agents, and hybrid agents. Each type has its own strengths and weaknesses, depending on the complexity of the tasks and the environment in which the agent operates. A well-defined architecture allows for scalability and adaptability, enabling agents to learn from experience and improve their performance over time. Common examples include the BDI (belief-desire-intention) architecture and logic-based agent architectures.

2.2.1. Core Components

The core components of an AI agent architecture are essential for its functionality. These components work together to enable the agent to perceive its environment, make decisions, and take actions.

- Sensors: These are the input devices that allow the agent to gather information from its surroundings. Sensors can include cameras, microphones, and other data-gathering tools.

- Actuators: Actuators are the output devices that enable the agent to perform actions in the environment. This can include motors, speakers, or any mechanism that allows the agent to interact with the world.

- Knowledge Base: This component stores information and rules that the agent uses to make decisions. It can be static or dynamic, depending on whether the agent learns from new experiences.

- Reasoning Engine: The reasoning engine processes the information from the sensors and the knowledge base to make decisions. It can employ various algorithms, such as rule-based systems or machine learning models.

- Communication Interface: This allows the agent to interact with other agents or systems. Effective communication is vital for collaboration and information sharing.
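The way these components fit together can be sketched as a toy thermostat agent. Everything here is hypothetical and simplified: the "sensor" is a function returning readings, the knowledge base is a dictionary with a target temperature, the reasoning engine is a single rule, the actuator only records actions, and the communication interface is a list of outgoing messages.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

@dataclass
class ThermostatAgent:
    """A toy agent wiring together the core components (all names are hypothetical)."""
    sensor: Callable[[], float]                              # Sensor: reads the environment
    knowledge: Dict[str, float]                              # Knowledge base: target and tolerance
    actions_taken: List[str] = field(default_factory=list)   # Actuator log
    outbox: List[str] = field(default_factory=list)          # Communication interface

    def reason(self, temperature: float) -> str:
        """Reasoning engine: a simple rule-based decision."""
        target = self.knowledge["target"]
        tolerance = self.knowledge["tolerance"]
        if temperature < target - tolerance:
            return "heat"
        if temperature > target + tolerance:
            return "cool"
        return "idle"

    def act(self, action: str) -> None:
        """Actuator: records the action instead of driving real hardware."""
        self.actions_taken.append(action)

    def step(self) -> str:
        """One perceive -> decide -> act cycle, then report to other agents."""
        reading = self.sensor()
        action = self.reason(reading)
        self.act(action)
        self.outbox.append(f"temp={reading:.1f} action={action}")
        return action

readings = iter([17.0, 21.2, 24.5])
agent = ThermostatAgent(sensor=lambda: next(readings),
                        knowledge={"target": 21.0, "tolerance": 1.0})
print([agent.step() for _ in range(3)])  # ['heat', 'idle', 'cool']
```

This is a purely reactive design; a deliberative agent would replace `reason` with planning over a model of the environment, and a learning agent would update `knowledge` from experience.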

2.2.2. Learning Mechanisms

Learning mechanisms are critical for AI agents to adapt and improve their performance over time. These mechanisms enable agents to learn from their experiences and modify their behavior based on new information.

- Supervised Learning: In this approach, the agent learns from labeled data. It uses input-output pairs to understand the relationship between them, allowing it to make predictions on new, unseen data.

- Unsupervised Learning: Here, the agent analyzes data without labeled outputs. It identifies patterns and structures within the data, which can be useful for clustering and anomaly detection.

- Reinforcement Learning: This mechanism involves agents learning through trial and error. They receive feedback in the form of rewards or penalties based on their actions, allowing them to optimize their behavior over time.

- Transfer Learning: This technique allows an agent to apply knowledge gained from one task to a different but related task. It enhances learning efficiency and reduces the amount of data needed for training.

- Online Learning: In this method, the agent continuously learns from new data as it becomes available. This is particularly useful in dynamic environments where conditions change frequently.
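Online learning can be illustrated with a model that updates after every single observation. This minimal sketch fits a one-variable linear model by stochastic gradient descent on a hypothetical, noise-free data stream where the true relationship is y = 2x + 1; the class name and learning rate are illustrative assumptions.

```python
import random

class OnlineLinearRegressor:
    """Fits y ~ w*x + b by stochastic gradient descent, one observation at a time."""

    def __init__(self, learning_rate: float = 0.05) -> None:
        self.w = 0.0
        self.b = 0.0
        self.lr = learning_rate

    def predict(self, x: float) -> float:
        return self.w * x + self.b

    def update(self, x: float, y: float) -> None:
        # Gradient of the squared error 0.5 * (prediction - y)^2 with respect to w and b
        error = self.predict(x) - y
        self.w -= self.lr * error * x
        self.b -= self.lr * error

random.seed(0)
model = OnlineLinearRegressor()
# Hypothetical stream: each (x, y) pair arrives once and is never stored
for _ in range(2000):
    x = random.uniform(-1.0, 1.0)
    model.update(x, 2.0 * x + 1.0)

print(round(model.w, 2), round(model.b, 2))
```

After the stream, `w` and `b` end up very close to 2 and 1. Because the model never keeps old data, it can keep adapting if the relationship drifts, which is the property that makes online learning useful in changing environments.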

By integrating these learning mechanisms into the AI agent architecture, developers can create systems that are not only intelligent but also capable of evolving and improving in response to their environments. At Rapid Innovation, we leverage this architecture to build tailored AI solutions that help our clients achieve greater ROI by automating processes, enhancing decision-making, and providing actionable insights that drive business growth. This includes evaluating different types of agent architecture to find the best fit for each use case. For more details, check out the key components of modern AI agent architecture.

2.2.3. Decision-making Systems

Decision-making systems are integral components of artificial intelligence (AI) that support informed choices based on data analysis. These systems use algorithms and models to evaluate options and predict outcomes, guiding users and organizations through their decision-making processes, including decisions supported by management information systems (MIS).

- Types of Decision-making Systems:

- Rule-based systems: These systems apply predefined rules to make decisions. They are straightforward and effective for well-defined problems.

- Expert systems: These mimic human expertise in specific domains, using knowledge bases and inference engines to provide recommendations.

- Machine learning systems: These systems learn from data patterns and improve their decision-making capabilities over time, adapting to new information.

- Key Features:

- Data-driven: Decision-making systems rely heavily on data inputs to generate insights and recommendations.

- Predictive analytics: Many systems incorporate predictive models to forecast future trends and behaviors, enhancing decision quality.

- Real-time processing: Advanced systems can analyze data in real-time, allowing for immediate decision-making in dynamic environments.

- Applications:

- Business intelligence: Organizations use decision-making systems to analyze market trends, customer behavior, and operational efficiency. Rapid Innovation uses these systems, including MIS-based decision support, to help clients optimize their strategies and improve ROI through data-driven insights.

- Healthcare: AI systems assist in diagnosing diseases and recommending treatment plans based on patient data, enabling healthcare providers to make timely and effective decisions.

- Finance: These systems evaluate investment opportunities and risk management strategies, optimizing financial decisions. Rapid Innovation supports financial institutions in implementing robust decision-making systems, including MIS-supported decision-making, that enhance profitability and reduce risk.
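A rule-based decision system, the first type listed above, can be sketched in a few lines. The credit rules and thresholds below are hypothetical and purely illustrative; the point is the structure: rules are checked in priority order, the first match wins, and the name of the rule that fired is returned so every decision can be explained.

```python
from typing import Callable, Dict, List, Tuple

Applicant = Dict[str, float]
Rule = Tuple[str, Callable[[Applicant], bool], str]  # (name, condition, decision)

# Hypothetical credit rules, evaluated in priority order
RULES: List[Rule] = [
    ("high_debt", lambda a: a["debt_ratio"] > 0.5, "reject"),
    ("low_score", lambda a: a["credit_score"] < 580, "reject"),
    ("strong_profile", lambda a: a["credit_score"] >= 720 and a["debt_ratio"] < 0.3, "approve"),
]

def decide(applicant: Applicant, default: str = "manual_review") -> Tuple[str, str]:
    """Return (decision, rule_name) for the first matching rule, or a default."""
    for name, condition, decision in RULES:
        if condition(applicant):
            return decision, name
    return default, "no_rule_matched"

print(decide({"credit_score": 750, "debt_ratio": 0.2}))  # ('approve', 'strong_profile')
print(decide({"credit_score": 650, "debt_ratio": 0.4}))  # ('manual_review', 'no_rule_matched')
```

Routing unmatched cases to manual review keeps a human in the loop for situations the rules were not designed for; expert systems and machine learning systems extend this pattern with richer knowledge bases or learned models.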

2.3. Data Processing Paradigms

Data processing paradigms refer to the methodologies and frameworks used to handle, analyze, and interpret data. With the exponential growth of data, various paradigms have emerged to address the challenges of data processing effectively.

- Traditional Paradigms:

- Batch processing: This method involves collecting data over a period and processing it in bulk. It is efficient for large datasets but may not provide real-time insights.

- Stream processing: This paradigm processes data in real-time as it is generated. It is essential for applications requiring immediate analysis, such as fraud detection.

- Modern Paradigms:

- Distributed processing: This approach utilizes multiple machines to process data concurrently, enhancing speed and efficiency. Technologies like Apache Hadoop and Apache Spark exemplify this paradigm.

- Cloud computing: Leveraging cloud infrastructure allows for scalable data processing, enabling organizations to handle vast amounts of data without significant upfront investment.

- Key Considerations:

- Scalability: The chosen paradigm should accommodate growing data volumes without compromising performance.

- Flexibility: A good data processing paradigm should adapt to various data types and sources, including structured and unstructured data.

- Cost-effectiveness: Organizations must evaluate the cost implications of different paradigms, balancing performance with budget constraints.
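The difference between batch and stream processing shows up clearly in how a simple statistic is computed. In this sketch, the batch version waits for the full dataset, while the streaming version updates the mean and variance as each hypothetical event arrives, using Welford's algorithm so memory stays constant regardless of stream length.

```python
import statistics
from typing import List, Tuple

def batch_stats(values: List[float]) -> Tuple[float, float]:
    """Batch: all data is available up front and processed in one go."""
    return statistics.mean(values), statistics.pvariance(values)

class StreamingStats:
    """Stream: update mean and variance per event (Welford's algorithm), O(1) memory."""

    def __init__(self) -> None:
        self.n = 0
        self.mean = 0.0
        self._m2 = 0.0  # running sum of squared deviations from the current mean

    def update(self, x: float) -> None:
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self._m2 += delta * (x - self.mean)

    @property
    def variance(self) -> float:
        """Population variance of everything seen so far."""
        return self._m2 / self.n if self.n else 0.0

events = [4.0, 7.0, 13.0, 16.0]
stream = StreamingStats()
for e in events:  # each event is processed as it arrives
    stream.update(e)

print(batch_stats(events))           # (10.0, 22.5)
print(stream.mean, stream.variance)  # 10.0 22.5
```

Both approaches give the same answer here; the streaming version simply has a current answer after every event, which is what applications such as fraud detection need.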

3. Benefits of AI Agents in Data Analysis

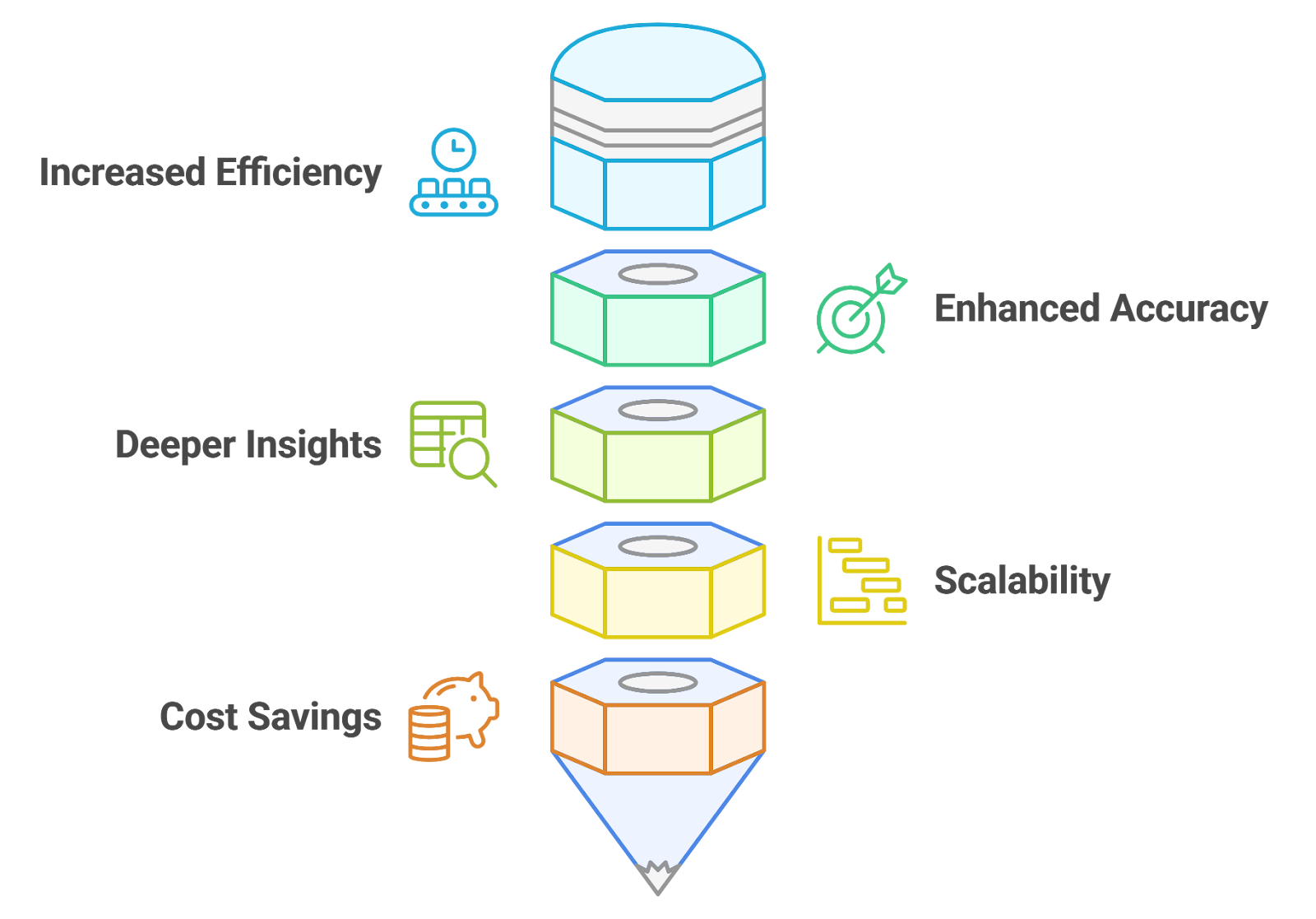

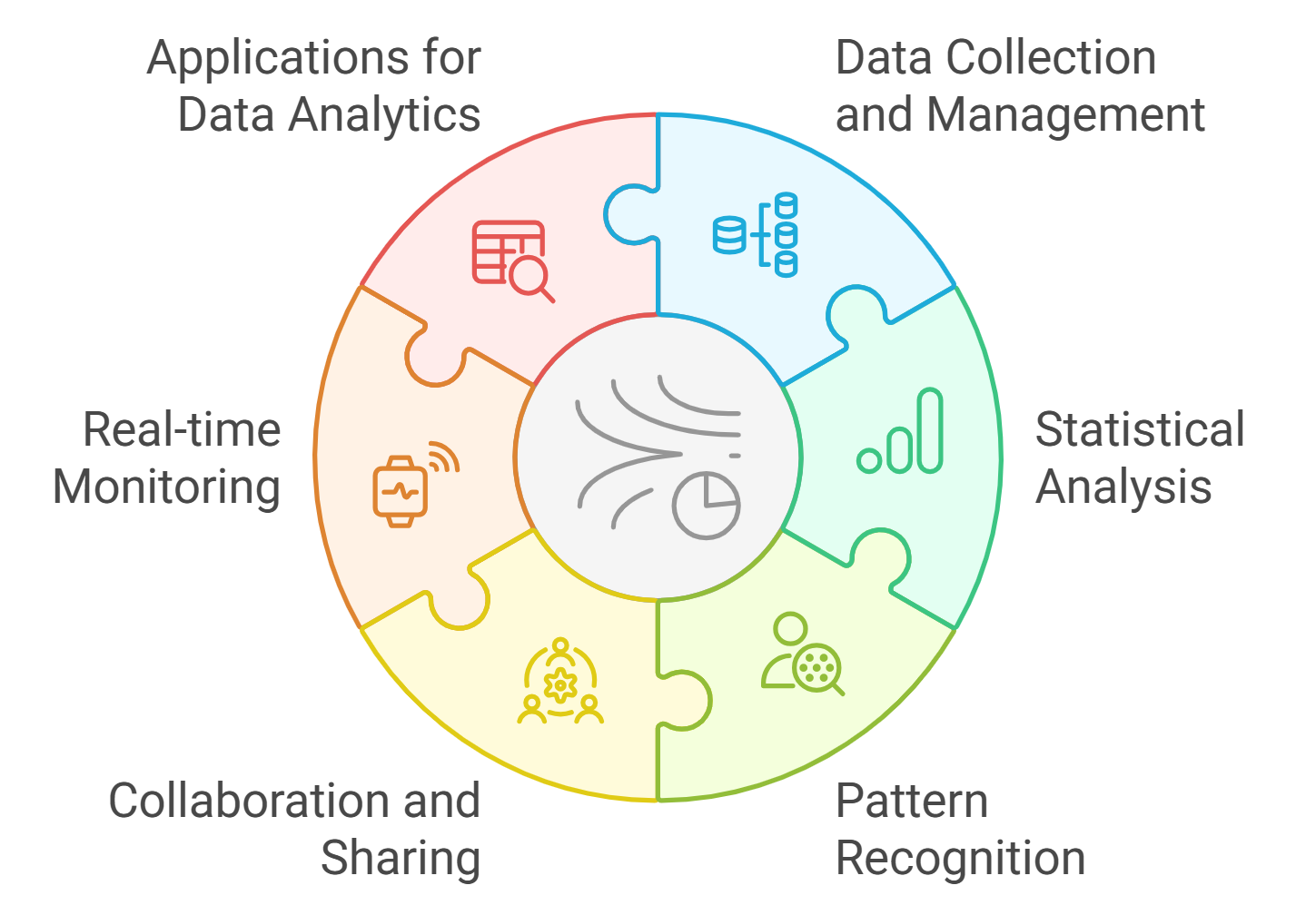

AI agents play a crucial role in enhancing data analysis by automating processes, providing insights, and improving decision-making. Their integration into data analysis workflows offers numerous advantages.

- Increased Efficiency:

- Automation of repetitive tasks: AI agents can handle data cleaning, preprocessing, and analysis, freeing up human analysts for more strategic tasks.

- Faster data processing: AI algorithms can analyze large datasets in a fraction of the time it would take traditional methods.

- Enhanced Accuracy:

- Reduced human error: AI agents minimize the risk of mistakes associated with manual data handling and analysis.

- Improved predictive capabilities: Machine learning models can identify patterns and trends that may be overlooked by human analysts.

- Deeper Insights:

- Advanced analytics: AI agents can perform complex analyses, such as sentiment analysis and anomaly detection, providing richer insights.

- Real-time monitoring: AI systems can continuously analyze data streams, offering immediate feedback and insights for timely decision-making.

- Scalability:

- Handling large datasets: AI agents can efficiently process and analyze vast amounts of data, making them suitable for big data applications.

- Adaptability: These agents can be trained on new data, allowing them to evolve and improve their analytical capabilities over time.

- Cost Savings:

- Reduced labor costs: Automating data analysis tasks can lead to significant savings in labor expenses.

- Optimized resource allocation: AI agents help organizations allocate resources more effectively by providing data-driven insights.

Incorporating AI agents into data analysis not only streamlines processes but also improves the overall quality of insights derived from data, making them invaluable in today’s data-driven landscape. Rapid Innovation is committed to helping clients harness the power of AI and decision-making systems to achieve their business goals efficiently and effectively, ultimately driving greater ROI.

3.1. Enhanced Pattern Recognition

Enhanced pattern recognition is a critical advancement in data analysis and machine learning. This capability allows systems to identify and interpret complex patterns within large datasets, leading to more accurate predictions and insights.

- Utilizes advanced algorithms, such as deep learning and neural networks, to detect intricate patterns that traditional methods may overlook.

- Improves decision-making processes across various industries, including finance, healthcare, and marketing.

- Enables businesses to identify trends and anomalies in customer behavior, leading to more targeted marketing strategies. For instance, pattern recognition tools written in Python can be used to detect recurring patterns in stock price data.

- Facilitates predictive maintenance in manufacturing by recognizing patterns that indicate equipment failure before it occurs.

- Supports fraud detection by analyzing transaction patterns to flag unusual activities.
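Flagging unusual transactions can be sketched with a simple statistical outlier test. This example uses the modified z-score based on the median absolute deviation (MAD), with the commonly used 0.6745 scaling constant and 3.5 cutoff; the transaction amounts are hypothetical. Real fraud systems combine many more signals and typically use learned models rather than a single threshold.

```python
import statistics
from typing import List

def flag_outliers(amounts: List[float], threshold: float = 3.5) -> List[int]:
    """Return indices of amounts whose modified z-score exceeds the threshold.

    The median and MAD are less distorted by the very outliers we are
    trying to find than the mean and standard deviation would be.
    """
    median = statistics.median(amounts)
    mad = statistics.median([abs(a - median) for a in amounts])
    if mad == 0:
        return []  # no spread in the data; a different rule would be needed here
    return [
        i for i, a in enumerate(amounts)
        if 0.6745 * abs(a - median) / mad > threshold
    ]

# Hypothetical card transactions for one customer
history = [42.0, 38.5, 40.0, 45.2, 39.9, 41.3, 980.0, 43.1]
print(flag_outliers(history))  # [6]
```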

At Rapid Innovation, we leverage enhanced pattern recognition to help our clients optimize their operations and drive greater ROI. For instance, in the finance sector, our solutions can identify fraudulent transactions in real-time, significantly reducing losses and enhancing security.

The ability to recognize patterns enhances the overall effectiveness of data-driven strategies, making it a vital component of modern analytics. Additionally, pattern recognition in data analysis plays a crucial role in extracting meaningful insights from complex datasets.

3.2. Automated Feature Engineering

Automated feature engineering is a transformative process in machine learning that streamlines the preparation of data for analysis. This technique automates the extraction and selection of relevant features from raw data, significantly reducing the time and effort required for data preprocessing.

- Increases efficiency by automating repetitive tasks, allowing data scientists to focus on model development and interpretation.

- Enhances model performance by identifying the most relevant features that contribute to predictive accuracy.

- Reduces the risk of human error in feature selection, leading to more reliable models.

- Supports a wide range of data types, including structured, unstructured, and time-series data.

- Facilitates the use of advanced techniques, such as ensemble learning, by providing a richer set of features for model training.
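The core idea of automated feature engineering, generating many candidate features mechanically and then keeping the most useful ones, can be sketched in plain Python. The purchase data and churn labels are hypothetical, and ranking by absolute Pearson correlation is only one simple selection heuristic; dedicated tools apply far larger libraries of transformations and more robust selection methods.

```python
import statistics
from typing import Callable, Dict, List

# Hypothetical raw data: purchase amounts per customer, plus a churn label (1 = churned)
purchases: Dict[str, List[float]] = {
    "c1": [20.0, 25.0, 30.0], "c2": [5.0], "c3": [50.0, 60.0, 55.0, 65.0], "c4": [8.0, 4.0],
}
churned = {"c1": 0, "c2": 1, "c3": 0, "c4": 1}

# Aggregation primitives, each applied automatically to the raw column
PRIMITIVES: Dict[str, Callable[[List[float]], float]] = {
    "count": len, "sum": sum, "mean": statistics.mean, "max": max, "min": min,
}

def generate_features(data: Dict[str, List[float]]) -> Dict[str, Dict[str, float]]:
    """Build one feature per primitive for each entity."""
    return {
        cid: {f"amount_{name}": float(fn(vals)) for name, fn in PRIMITIVES.items()}
        for cid, vals in data.items()
    }

def pearson(xs: List[float], ys: List[float]) -> float:
    """Pearson correlation; returns 0.0 when either input is constant."""
    mx, my = statistics.mean(xs), statistics.mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sum((x - mx) ** 2 for x in xs) ** 0.5
    sy = sum((y - my) ** 2 for y in ys) ** 0.5
    return cov / (sx * sy) if sx and sy else 0.0

def rank_features(features, labels):
    """Score each generated feature by |correlation| with the label, best first."""
    ids = sorted(features)
    names = next(iter(features.values())).keys()
    y = [labels[i] for i in ids]
    scores = {n: abs(pearson([features[i][n] for i in ids], y)) for n in names}
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)

feats = generate_features(purchases)
print(rank_features(feats, churned)[:2])
```

With only four customers the scores are not meaningful; the sketch just shows the generate-then-select workflow.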

By automating feature engineering, organizations can accelerate their data analysis processes and improve the quality of their machine learning models. At Rapid Innovation, we implement automated feature engineering to help clients achieve faster insights and better decision-making, ultimately leading to increased ROI.

3.3. Real-time Analysis Capabilities

Real-time analysis capabilities are essential for organizations that require immediate insights from their data. This functionality allows businesses to process and analyze data as it is generated, enabling timely decision-making and responsiveness to changing conditions.

- Supports industries such as finance, where real-time data analysis is crucial for trading and risk management.

- Enhances customer experience by allowing businesses to respond to customer inquiries and behaviors instantly.

- Facilitates operational efficiency by monitoring systems and processes in real-time, identifying issues before they escalate.

- Enables predictive analytics by analyzing current data trends to forecast future outcomes.

- Integrates with IoT devices, allowing for continuous data collection and analysis from various sources.
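Continuous monitoring of an IoT stream is often built on a sliding window. This sketch keeps only the last few readings from a hypothetical machine-temperature sensor and raises an alert when their average crosses a limit; the window size and limit are illustrative assumptions.

```python
from collections import deque

class SlidingWindowMonitor:
    """Keep the last `size` readings and alert when their average exceeds `limit`."""

    def __init__(self, size: int, limit: float) -> None:
        self.window = deque(maxlen=size)  # the oldest reading drops out automatically
        self.limit = limit
        self._total = 0.0

    def observe(self, value: float) -> bool:
        """Ingest one reading as it arrives; return True if an alert should fire."""
        if len(self.window) == self.window.maxlen:
            self._total -= self.window[0]  # this value is about to be evicted
        self.window.append(value)
        self._total += value
        return self._total / len(self.window) > self.limit

# Hypothetical sensor stream, machine temperature in degrees C
monitor = SlidingWindowMonitor(size=3, limit=80.0)
readings = [70.0, 75.0, 78.0, 85.0, 90.0, 72.0]
alerts = [t for t, r in enumerate(readings) if monitor.observe(r)]
print(alerts)  # [4, 5]
```

Averaging over a window rather than reacting to a single reading filters out momentary spikes, at the cost of a short detection delay.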

At Rapid Innovation, our real-time analysis capabilities empower organizations to stay competitive in fast-paced environments. For example, in retail, we help businesses analyze customer behavior in real-time, allowing for immediate adjustments to marketing strategies and inventory management, thus maximizing ROI.

Real-time analysis capabilities are a key feature in modern data analytics solutions, ensuring that our clients can make informed decisions swiftly and effectively.

3.4. Scalability Advantages

Scalability is a crucial factor in the growth and efficiency of any system, particularly in technology and business. The scalability advantages of modern systems, especially those built on cloud computing and artificial intelligence, are significant:

- Resource Allocation: Scalable systems can allocate resources dynamically based on demand. This means that during peak times, additional resources can be deployed without significant delays or costs.

- Cost Efficiency: Businesses can save on costs by only paying for the resources they use. This pay-as-you-go model allows for better financial planning and reduces waste.

- Global Reach: Scalable systems can easily expand to serve a global audience. This is particularly important for businesses looking to enter new markets without the need for extensive infrastructure investments.

- Flexibility: Organizations can quickly adapt to changing market conditions or customer needs. This flexibility, a core benefit of cloud computing, is essential in today’s fast-paced business environment.

- Performance Maintenance: As user demand increases, scalable systems maintain performance levels, ensuring that users have a consistent experience without degradation in service.
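Dynamic resource allocation usually comes down to a scaling rule. The proportional rule below mirrors the formula documented for the Kubernetes Horizontal Pod Autoscaler (desired replicas = ceil(current replicas x current metric / target metric)); the function name, bounds, and load figures are hypothetical, and the real controller adds details such as a tolerance band and stabilization windows that this sketch omits.

```python
import math

def desired_replicas(current_replicas: int, current_load: float, target_load: float,
                     min_replicas: int = 1, max_replicas: int = 20) -> int:
    """Scale replicas in proportion to observed vs. target load per replica,
    then clamp the result to [min_replicas, max_replicas]."""
    if target_load <= 0:
        raise ValueError("target_load must be positive")
    proposed = math.ceil(current_replicas * current_load / target_load)
    return max(min_replicas, min(max_replicas, proposed))

# Hypothetical: 4 replicas averaging 90% CPU against a 60% target
print(desired_replicas(4, 90.0, 60.0))    # 6
print(desired_replicas(4, 15.0, 60.0))    # 1
print(desired_replicas(10, 300.0, 60.0))  # 20 (capped at max_replicas)
```

The upper bound is what keeps pay-as-you-go costs predictable during extreme spikes, while the lower bound preserves a baseline of capacity.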

3.5. Reduced Human Bias

Human bias can significantly impact decision-making processes, often leading to unfair or inaccurate outcomes. Artificial intelligence and machine learning can help mitigate these biases. The data and objectives these systems are built on must themselves be checked for bias, however, because a model trained on skewed historical decisions will reproduce them. The main mechanisms are:

- Data-Driven Decisions: AI systems apply the same learned criteria to every record, reducing the influence of the personal biases that can affect individual human judgment.

- Standardized Processes: Automated systems follow predefined algorithms and rules, so decisions are made consistently across all cases. Consistency makes unfair treatment easier to detect, although it does not by itself guarantee fairness.

- Diverse Data Sources: By utilizing diverse datasets, AI can provide a more balanced perspective, minimizing the risk of bias that may arise from limited or homogeneous data.

- Continuous Learning: Machine learning algorithms can be trained to recognize and adjust for biases in data, leading to more equitable outcomes over time.

- Transparency: Many AI systems offer insights into their decision-making processes, allowing for greater scrutiny and accountability, which can help identify and correct biases.
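Reweighing (Kamiran and Calders) is one concrete way to make a learner adjust for bias in its training data. Each combination of group and label receives the weight P(group) × P(label) / P(group, label), so that group membership and outcome look statistically independent to the learner. The sketch below is minimal. The data is hypothetical, the function names are illustrative, and the weights only help if the downstream learner accepts per-sample weights.

```python
from collections import Counter


def reweighing_weights(groups, labels):
    """Reweighing: w(g, y) = P(g) * P(y) / P(g, y), computed from counts.

    Cells that are rarer than independence between group and label would predict
    get weights above 1; over-represented cells get weights below 1.
    """
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return {
        (g, y): group_counts[g] * label_counts[y] / (n * count)
        for (g, y), count in joint_counts.items()
    }


def positive_rate_by_group(groups, labels, weights=None):
    """(Weighted) share of positive labels per group, a simple demographic-parity check."""
    totals, positives = Counter(), Counter()
    for g, y in zip(groups, labels):
        w = 1.0 if weights is None else weights[(g, y)]
        totals[g] += w
        positives[g] += w * y
    return {g: round(positives[g] / totals[g], 3) for g in totals}


# Hypothetical historical decisions: group A was approved far more often than group B.
groups = ["A"] * 6 + ["B"] * 4
labels = [1, 1, 1, 1, 0, 0] + [1, 0, 0, 0]

weights = reweighing_weights(groups, labels)
print(positive_rate_by_group(groups, labels))           # {'A': 0.667, 'B': 0.25}
print(positive_rate_by_group(groups, labels, weights))  # {'A': 0.5, 'B': 0.5}
```

After reweighing, both groups carry the same effective positive rate. A model trained with these weights therefore has less incentive to learn group membership as a proxy for the outcome.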

3.6. Improved Accuracy and Precision

Accuracy and precision are vital in fields from healthcare to finance, and advances in technology have improved both. The key contributions are:

- Data Analysis: For well-defined tasks with sufficient training data, advanced algorithms can analyze data as accurately as, or more accurately than, human analysts, leading to more reliable insights and predictions.

- Error Reduction: Automated systems reduce the likelihood of human error, which is often a significant factor in inaccuracies. This is particularly important in critical areas such as medical diagnostics and financial transactions.

- Real-Time Processing: Technologies can analyze data in real-time, allowing for immediate adjustments and corrections. This is crucial in environments where timely decisions are essential.

- Enhanced Predictive Models: Machine learning models can improve over time, leading to increasingly accurate predictions based on historical data and trends.

- Quality Control: In manufacturing and production, automated systems can monitor processes continuously, ensuring that products meet quality standards with high precision.
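Continuous quality monitoring is often built on a Shewhart-style control chart. Limits are derived from a period when the process was known to be stable, and any new measurement outside them is flagged. The sketch below is minimal and rests on two assumptions: the measurements are hypothetical, and sigma is estimated with the sample standard deviation. Classical individuals charts usually estimate sigma from the average moving range instead.

```python
import statistics


def control_limits(baseline, k=3.0):
    """Centre line +/- k standard deviations, estimated from in-control baseline data."""
    centre = statistics.mean(baseline)
    sigma = statistics.stdev(baseline)
    return centre - k * sigma, centre + k * sigma


def out_of_control(measurements, limits):
    """Indices of measurements that fall outside the control limits."""
    low, high = limits
    return [i for i, x in enumerate(measurements) if x < low or x > high]


# Hypothetical shaft diameters (mm) from a period when the process was known to be stable.
baseline = [10.02, 9.98, 10.01, 9.99, 10.00, 10.03, 9.97, 10.01, 9.99, 10.00]
limits = control_limits(baseline)
print([round(v, 3) for v in limits])     # [9.945, 10.055]

# New parts coming off the line.
print(out_of_control([10.01, 9.96, 10.07, 10.00, 9.93], limits))   # [2, 4]
```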

By leveraging these advantages, organizations can enhance their operational efficiency, make better decisions, and ultimately achieve greater success in their respective fields. At Rapid Innovation, we specialize in harnessing the power of AI and blockchain technologies to help our clients realize these benefits, driving greater ROI and ensuring sustainable growth.

4. Technical Implementation

Technical implementation is a crucial phase in any project, particularly in software development and system design. It involves translating the conceptual framework into a functional system. This section first covers agent design principles and architecture selection, which are fundamental to creating effective and efficient agents. It then turns to algorithm choice, integration patterns, data preprocessing, model training, deployment, and performance optimization.

4.1. Agent Design Principles

Agent design principles are guidelines that help in creating intelligent agents capable of performing tasks autonomously. These principles ensure that agents are efficient, reliable, and adaptable to changing environments. Key design principles include:

- Autonomy: Agents should operate independently, making decisions without human intervention. This autonomy allows for real-time responses to dynamic conditions, which can significantly enhance operational efficiency and reduce response times for businesses.

- Reactivity: Agents must be able to perceive their environment and respond to changes promptly. This involves continuous monitoring and quick adaptation to new information, ensuring that businesses can stay ahead of market trends and customer needs.

- Proactivity: Beyond mere reaction, agents should anticipate future events and take initiative. This proactive behavior enhances their effectiveness in achieving goals, leading to improved customer satisfaction and retention.

- Social Ability: Agents should be capable of interacting with other agents and humans. This includes communication protocols and collaboration strategies to work towards common objectives, fostering a more integrated and efficient workflow.

- Learning: Incorporating machine learning techniques allows agents to improve their performance over time. Learning from past experiences enables agents to adapt to new situations and optimize their actions, ultimately driving greater ROI for clients.

- Scalability: The design should accommodate growth, allowing the system to handle an increasing number of agents or tasks without significant performance degradation. This scalability is essential for businesses looking to expand their operations without incurring excessive costs.

- Robustness: Agents must be resilient to failures and capable of recovering from errors. This involves implementing error-handling mechanisms and redundancy, ensuring that business operations remain uninterrupted.

These principles guide the development of agents that can function effectively in various applications, from customer service bots to autonomous vehicles, ultimately helping clients achieve their business goals efficiently.
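Several of these principles can be seen together in one perceive-decide-act loop, sketched minimally below. Every name here is hypothetical and labeled as such, including `BacklogAgent`, `FlakyActuator`, and `with_retries`. The backlog thresholds are illustrative, and the actuator only simulates a client that times out once. Each principle appears in the code as follows:

- Autonomy: the loop runs without human input.
- Reactivity: every new reading is perceived before a decision is made.
- Proactivity: the agent acts on a one-step trend forecast rather than waiting for a threshold to be crossed.
- Robustness: transient failures are retried with exponential backoff.

```python
import functools
import time
from dataclasses import dataclass, field


class TransientError(Exception):
    """An action failure that may succeed on retry, such as a network timeout."""


def with_retries(action, attempts=3, base_delay=0.01):
    """Robustness: retry transient failures with exponential backoff, then give up."""
    for attempt in range(attempts):
        try:
            return action()
        except TransientError:
            if attempt == attempts - 1:
                raise
            time.sleep(base_delay * 2 ** attempt)


@dataclass
class BacklogAgent:
    """Hypothetical agent that keeps a work queue's backlog between low and high."""
    low: int
    high: int
    history: list = field(default_factory=list)

    def perceive(self, backlog: int) -> None:
        # Reactivity: every new observation is recorded before deciding.
        self.history.append(backlog)

    def decide(self) -> str:
        current = self.history[-1]
        # Proactivity: extrapolate the latest change one step ahead
        # instead of waiting for a threshold to be crossed.
        trend = current - self.history[-2] if len(self.history) > 1 else 0
        forecast = current + trend
        if forecast > self.high:
            return "add_worker"
        if forecast < self.low:
            return "remove_worker"
        return "hold"


class FlakyActuator:
    """Stand-in for a real infrastructure client; fails `failures` times, then succeeds."""

    def __init__(self, failures: int = 0):
        self.failures = failures
        self.applied = []

    def apply(self, command: str) -> None:
        if self.failures > 0:
            self.failures -= 1
            raise TransientError("simulated timeout")
        self.applied.append(command)


def run(agent, actuator, readings):
    """Autonomy: the perceive-decide-act loop runs without human input."""
    decisions = []
    for backlog in readings:
        agent.perceive(backlog)
        command = agent.decide()
        decisions.append(command)
        if command != "hold":
            with_retries(functools.partial(actuator.apply, command))
    return decisions


actuator = FlakyActuator(failures=1)
print(run(BacklogAgent(low=20, high=100), actuator, [40, 70, 95, 80, 30]))
# ['hold', 'hold', 'add_worker', 'hold', 'remove_worker']
print(actuator.applied)
# ['add_worker', 'remove_worker']
```

The first `add_worker` call fails once and succeeds on retry, so the loop carries on without intervention.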

4.1.1. Architecture Selection

Choosing the right architecture is vital for the successful implementation of agents. The architecture defines how agents are structured, how they interact with their environment, and how they process information. Key considerations in architecture selection include:

- Type of Agent: Different types of agents (reactive, deliberative, hybrid) require different architectural approaches. Reactive agents respond directly to stimuli, and deliberative agents plan their actions based on goals. Hybrid agents combine a fast reactive layer with a deliberative planning layer. These options allow businesses to tailor solutions to their specific needs.

- Modularity: A modular architecture allows for easier updates and maintenance. Components can be developed, tested, and replaced independently, enhancing flexibility and reducing downtime for clients.

- Scalability: The architecture should support scalability, enabling the system to grow without significant redesign. This is particularly important for applications that may expand in user base or functionality, ensuring that clients can adapt to changing market demands.

- Performance: The architecture must ensure that agents can process information and respond quickly. Performance metrics should be established to evaluate the efficiency of the architecture, directly impacting the ROI for clients.

- Interoperability: The ability to integrate with other systems and technologies is crucial. The architecture should support standard protocols and interfaces for seamless communication, facilitating collaboration across different platforms.

- Resource Management: Efficient use of resources (CPU, memory, bandwidth) is essential for optimal performance. The architecture should include mechanisms for resource allocation and management, helping clients maximize their investments.

- Security: As agents often operate in sensitive environments, the architecture must incorporate security measures to protect against unauthorized access and data breaches, safeguarding client information and maintaining trust.

Selecting the appropriate architecture involves balancing these considerations to meet the specific needs of the application. Various architectural frameworks, such as layered architectures, agent-oriented architectures, and service-oriented architectures, can be employed based on the project requirements.
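A layered hybrid architecture is one common way to combine these considerations. Every layer implements the same small interface, which gives modularity. The layers are consulted in priority order, so a fast reactive rule can pre-empt the deliberative planner. This is a minimal sketch. The `Layer` protocol, the 0.5 m clearance rule, and the one-dimensional corridor planner are hypothetical stand-ins for real components.

```python
from typing import List, Optional, Protocol


class Layer(Protocol):
    """Common interface that keeps layers modular and independently replaceable."""

    def propose(self, percept: dict) -> Optional[str]:
        """Return an action, or None to defer to the next layer."""
        ...


class ReactiveSafetyLayer:
    """Reactive layer: fast condition-action rules that are always consulted first."""

    def __init__(self, min_clearance_m: float = 0.5):
        self.min_clearance_m = min_clearance_m

    def propose(self, percept: dict) -> Optional[str]:
        if percept.get("obstacle_distance_m", float("inf")) < self.min_clearance_m:
            return "stop"
        return None


class CorridorPlanner:
    """Deliberative layer: builds a plan toward a goal once, then follows it step by step.

    The 1-D corridor is a deliberately tiny stand-in for a real planner.
    """

    def __init__(self, start: int, goal: int):
        step = "forward" if goal >= start else "back"
        self.plan = [step] * abs(goal - start)

    def propose(self, percept: dict) -> Optional[str]:
        return self.plan.pop(0) if self.plan else "idle"


class HybridAgent:
    def __init__(self, layers: List[Layer]):
        self.layers = layers  # ordered from highest to lowest priority

    def act(self, percept: dict) -> str:
        for layer in self.layers:
            action = layer.propose(percept)
            if action is not None:
                return action
        return "idle"


agent = HybridAgent([ReactiveSafetyLayer(), CorridorPlanner(start=0, goal=3)])
percepts = [{"obstacle_distance_m": 2.0}, {"obstacle_distance_m": 0.3}, {}, {}, {}]
print([agent.act(p) for p in percepts])
# ['forward', 'stop', 'forward', 'forward', 'idle']
```

The planner is not consulted while the safety layer is stopping the agent, so no plan step is lost and the plan resumes afterwards. Swapping in a different planner only requires another class with a `propose` method.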

In conclusion, the technical implementation of agents hinges on sound design principles and careful architecture selection. By adhering to these guidelines, Rapid Innovation can help clients create robust, efficient, and adaptable agents capable of performing complex tasks in dynamic environments, ultimately driving greater ROI and achieving business goals effectively.

4.1.2. Algorithm Choice

Choosing the right algorithm is crucial for the success of any data-driven project. The algorithm you select can significantly impact the performance, accuracy, and efficiency of your model. Here are some key considerations when making your algorithm choice:

- Nature of the Problem: Identify whether your problem is a classification, regression, clustering, or time-series forecasting task. Different algorithms are suited for different types of problems, and Rapid Innovation can assist in selecting the most appropriate algorithm tailored to your specific business needs.

- Data Characteristics: Analyze the size, quality, and type of data you have. For instance, decision trees handle categorical features with little preprocessing, while linear regression models a continuous target and assumes a roughly linear relationship with the features. Our team at Rapid Innovation can help you assess your data characteristics to ensure optimal algorithm selection.

- Performance Metrics: Define what success looks like for your project. Depending on whether you prioritize accuracy, speed, or interpretability, your choice of algorithm may vary. We can guide you in establishing clear performance metrics that align with your business objectives and in comparing candidate algorithms against them on held-out data.

- Scalability: Consider how well the algorithm can handle increasing amounts of data. Some algorithms, like k-nearest neighbors, may struggle with large datasets because every prediction compares the query against the stored training data. Others, like gradient boosting machines, can scale more effectively. Rapid Innovation specializes in scalable solutions that grow with your business.

- Complexity and Interpretability: Simpler models like linear regression are easier to interpret, while complex models like deep learning may offer better performance but at the cost of interpretability. Our experts can help you balance complexity and interpretability based on your project requirements.

- Computational Resources: Assess the computational power available. Some algorithms require more resources and time to train, which can be a limiting factor. Rapid Innovation can optimize your resource allocation to ensure efficient algorithm training and deployment.
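A common way to act on these considerations is to score each candidate algorithm with the same metric under k-fold cross-validation, so that every score comes from data the model did not see during fitting. The sketch below is minimal and uses only the standard library. The two-blob dataset is hypothetical, and the two candidates (a majority-class baseline and a nearest-centroid classifier) are chosen for brevity. In practice, a library routine such as scikit-learn's `cross_val_score` would replace the hand-written loop.

```python
import random
from collections import Counter
from statistics import mean


def k_fold_indices(n, k, seed=0):
    """Shuffle row indices once and deal them into k roughly equal folds."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    return [idx[i::k] for i in range(k)]


def fit_majority(X_train, y_train):
    """Baseline: always predict the most common training label."""
    label = Counter(y_train).most_common(1)[0][0]
    return lambda x: label


def fit_nearest_centroid(X_train, y_train):
    """Predict the class whose mean feature vector is closest (squared Euclidean)."""
    counts = Counter(y_train)
    sums = {}
    for x, y in zip(X_train, y_train):
        s = sums.setdefault(y, [0.0] * len(x))
        for j, v in enumerate(x):
            s[j] += v
    centroids = {c: [v / counts[c] for v in s] for c, s in sums.items()}

    def predict(x):
        return min(centroids, key=lambda c: sum((a - b) ** 2 for a, b in zip(x, centroids[c])))

    return predict


def cross_val_accuracy(fit, X, y, k=5):
    """Mean accuracy over k folds; each fold is scored by a model that never saw it."""
    scores = []
    for test_idx in k_fold_indices(len(X), k):
        held_out = set(test_idx)
        train_idx = [i for i in range(len(X)) if i not in held_out]
        model = fit([X[i] for i in train_idx], [y[i] for i in train_idx])
        scores.append(sum(model(X[i]) == y[i] for i in test_idx) / len(test_idx))
    return mean(scores)


# Hypothetical two-class data: two Gaussian blobs in 2-D.
rng = random.Random(42)
X = [[rng.gauss(0, 1), rng.gauss(0, 1)] for _ in range(40)]
X += [[rng.gauss(3, 1), rng.gauss(3, 1)] for _ in range(40)]
y = [0] * 40 + [1] * 40

for name, fit in [("majority baseline", fit_majority),
                  ("nearest centroid", fit_nearest_centroid)]:
    print(f"{name}: {cross_val_accuracy(fit, X, y):.2f}")
```

A trivial baseline belongs in every comparison. If a candidate cannot clearly beat it, its extra complexity and compute cost are not justified.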

4.1.3. Integration Patterns

Integration patterns refer to the methods and strategies used to combine different systems, applications, or data sources. Effective integration is essential for ensuring that data flows seamlessly across platforms. Here are some common integration patterns:

- Point-to-Point Integration: This is the simplest form of integration, where two systems are directly connected. While easy to implement, it becomes hard to manage as the number of systems grows, because fully connecting n systems requires up to n(n-1)/2 separate links. Rapid Innovation can streamline this process to ensure efficient connectivity.

- Hub-and-Spoke Integration: In this pattern, a central hub connects multiple systems, so n systems need only n connections. This simplifies management and makes the integration easier to maintain. Our team can design a hub-and-spoke model that enhances your operational efficiency.

- API-Based Integration: Using APIs (Application Programming Interfaces) allows different systems to communicate with each other. This pattern is flexible and scalable, enabling real-time data exchange. Rapid Innovation excels in developing robust APIs that facilitate seamless integration.

- Event-Driven Integration: This pattern relies on events to trigger data exchanges. It is useful for real-time applications where immediate responses are required. We can implement event-driven architectures that enhance responsiveness and agility in your operations.

- Batch Processing: In scenarios where real-time integration is not necessary, batch processing can be used to transfer data at scheduled intervals. This is often more efficient for large datasets. Rapid Innovation can optimize batch processing strategies to improve data handling efficiency.

- Microservices Architecture: This modern approach involves breaking down applications into smaller, independent services that can be developed, deployed, and scaled independently. It enhances flexibility and allows for easier integration. Our expertise in microservices can help you build a resilient and scalable architecture.
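Event-driven integration can be illustrated with a minimal in-process publish/subscribe hub. Publishers and subscribers know only topic names, never each other, so adding a consumer takes one more subscription rather than a new point-to-point link. The sketch below is illustrative. `EventBus`, the `order.created` topic, and both handlers are hypothetical. A production system would use a message broker that delivers events between processes, asynchronously and durably, which this sketch omits.

```python
from collections import defaultdict
from typing import Any, Callable, Dict, List

Handler = Callable[[Dict[str, Any]], None]


class EventBus:
    """Minimal in-process publish/subscribe hub.

    Publishers and subscribers only know topic names, never each other.
    """

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, event: Dict[str, Any]) -> None:
        # Deliver synchronously, in subscription order.
        for handler in self._subscribers.get(topic, []):
            handler(event)


# Two hypothetical downstream systems react to the same business event.
inventory = {"sku-1": 10}
invoices = []


def reserve_stock(event):
    inventory[event["sku"]] -= event["qty"]


def create_invoice(event):
    invoices.append({"order_id": event["order_id"], "amount": event["qty"] * event["unit_price"]})


bus = EventBus()
bus.subscribe("order.created", reserve_stock)
bus.subscribe("order.created", create_invoice)
bus.publish("order.created", {"order_id": 1, "sku": "sku-1", "qty": 3, "unit_price": 2.5})
print(inventory)   # {'sku-1': 7}
print(invoices)    # [{'order_id': 1, 'amount': 7.5}]
```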

4.2. Data Preprocessing Strategies

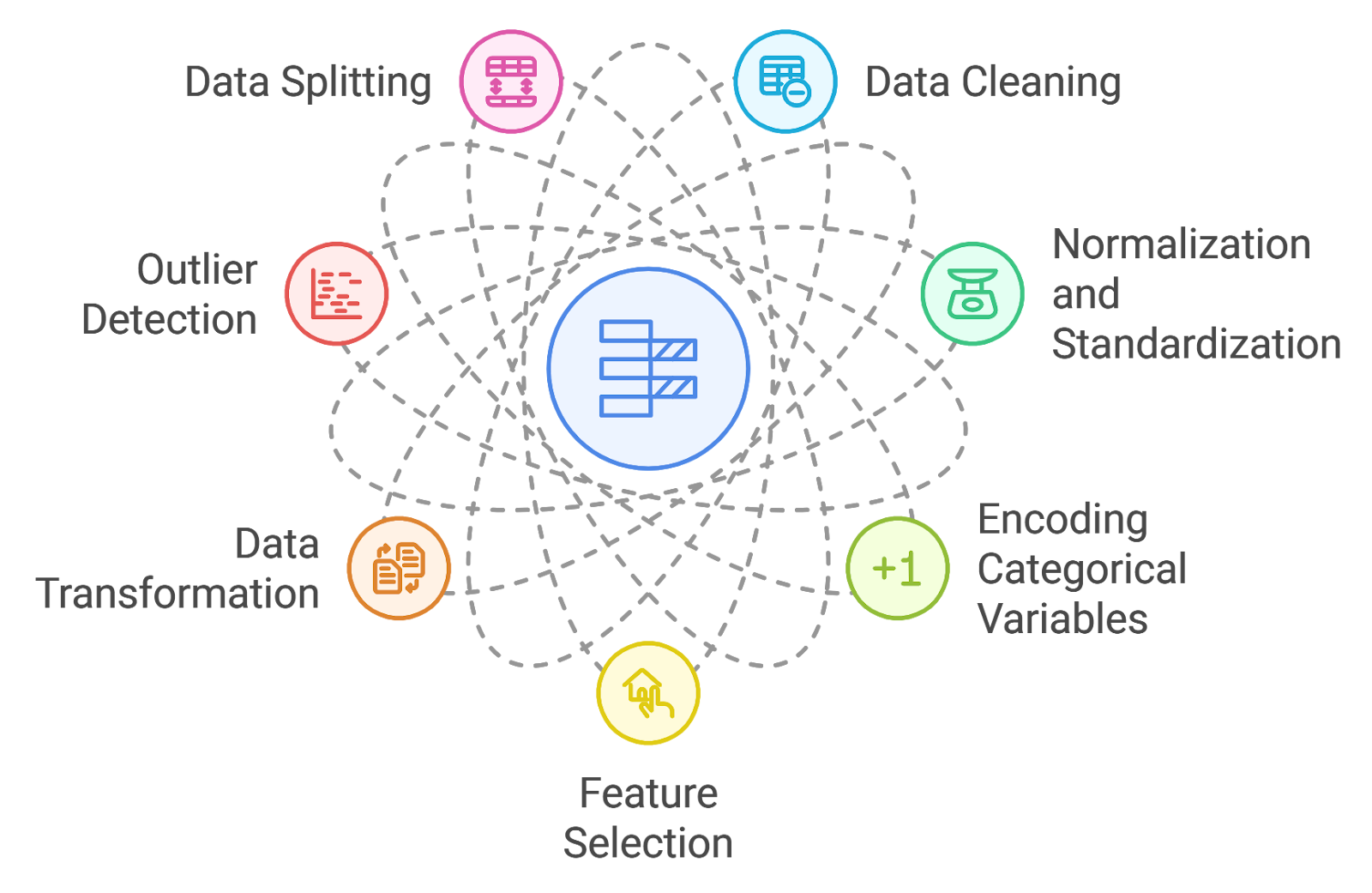

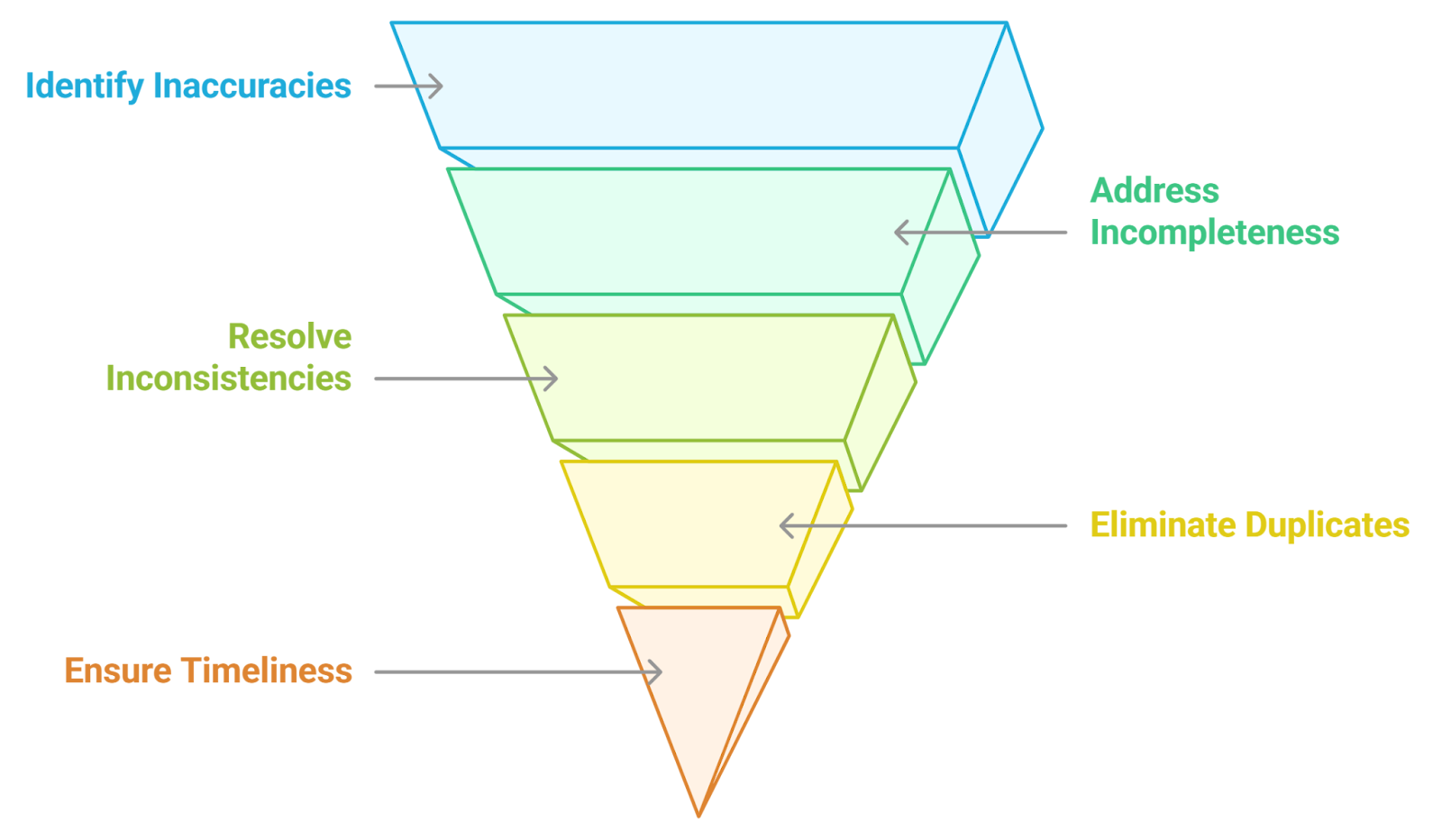

Data preprocessing is a critical step in the data analysis pipeline. It involves cleaning and transforming raw data into a format suitable for analysis. Here are some effective data preprocessing strategies:

- Data Cleaning: Remove or correct inaccuracies in the data. This includes handling missing values, correcting inconsistencies, and eliminating duplicates. Rapid Innovation can implement comprehensive data cleaning processes to ensure data integrity.

- Normalization and Standardization: Scale numerical features so that they share a comparable range. Min-max normalization rescales each feature to the range [0, 1], while standardization (z-score scaling) transforms it to have a mean of 0 and a standard deviation of 1. Our team can apply these techniques to enhance model performance.

- Encoding Categorical Variables: Convert categorical data into numerical format using techniques like one-hot encoding or label encoding. This is essential for algorithms that require numerical input. Label encoding implies an order between categories, so it suits ordinal variables, whereas one-hot encoding is the usual choice for nominal ones. We can assist in effectively encoding your data for optimal algorithm compatibility.

- Feature Selection: Identify and retain only the most relevant features for your model. This can improve model performance and reduce overfitting. Rapid Innovation employs advanced feature selection techniques to enhance your model's predictive power.

- Data Transformation: Apply transformations such as logarithmic or polynomial transformations to make the data more suitable for analysis. This can help in stabilizing variance and making relationships more linear. Our experts can determine the best transformations for your dataset.

- Outlier Detection: Identify and handle outliers that can skew your analysis. Techniques like Z-score or IQR (Interquartile Range) can be used to detect and manage outliers effectively. We can implement robust outlier detection methods to ensure accurate analysis.

- Data Splitting: Divide your dataset into training, validation, and test sets so that your model is evaluated on unseen data, providing a better estimate of its performance in real-world scenarios. Preprocessing parameters such as scaling ranges should be learned from the training set only, so that no information from the test set leaks into training. Rapid Innovation can guide you in effectively splitting your data for optimal model evaluation.
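Several of these steps can be sketched in a few lines of standard-library Python. The example covers min-max scaling, standardization, one-hot encoding, and a train/validation/test split. The scalers follow a fit-then-transform pattern, so their parameters come from training data only. All function names and the sample values are hypothetical, and the 70/15/15 split ratio is an illustrative default. Libraries such as scikit-learn provide equivalent, more complete tools.

```python
import random
import statistics


def fit_min_max(train):
    """Learn min/max on training data only; return a function that rescales to [0, 1]."""
    lo, hi = min(train), max(train)
    span = (hi - lo) or 1.0          # guard against a constant feature
    return lambda values: [(v - lo) / span for v in values]


def fit_standardizer(train):
    """Learn mean/std on training data only; return a z-score transform."""
    mu = statistics.mean(train)
    sigma = statistics.pstdev(train) or 1.0
    return lambda values: [(v - mu) / sigma for v in values]


def one_hot(values, categories=None):
    """One column per category; categories default to the sorted distinct values."""
    categories = sorted(set(values)) if categories is None else list(categories)
    return categories, [[1 if v == c else 0 for c in categories] for v in values]


def train_val_test_split(rows, val_frac=0.15, test_frac=0.15, seed=0):
    """Shuffle once, then carve off test and validation sets; the rest is training data."""
    rows = list(rows)
    random.Random(seed).shuffle(rows)
    n_test = round(len(rows) * test_frac)
    n_val = round(len(rows) * val_frac)
    return rows[n_test + n_val:], rows[n_test:n_test + n_val], rows[:n_test]


train_ages, test_ages = [22, 35, 58, 41, 29], [30, 64]
scale = fit_min_max(train_ages)
print([round(v, 2) for v in scale(train_ages)])   # [0.0, 0.36, 1.0, 0.53, 0.19]
print([round(v, 2) for v in scale(test_ages)])    # [0.22, 1.17]

standardize = fit_standardizer(train_ages)
print([round(v, 2) for v in standardize(test_ages)])   # [-0.57, 2.2]

print(one_hot(["red", "green", "red", "blue"]))
# (['blue', 'green', 'red'], [[0, 0, 1], [0, 1, 0], [0, 0, 1], [1, 0, 0]])

train, val, test = train_val_test_split(range(100))
print(len(train), len(val), len(test))   # 70 15 15
```

Unseen values can fall outside [0, 1] after min-max scaling, as the test age of 64 shows. That outcome is expected and is preferable to refitting the scaler on test data.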

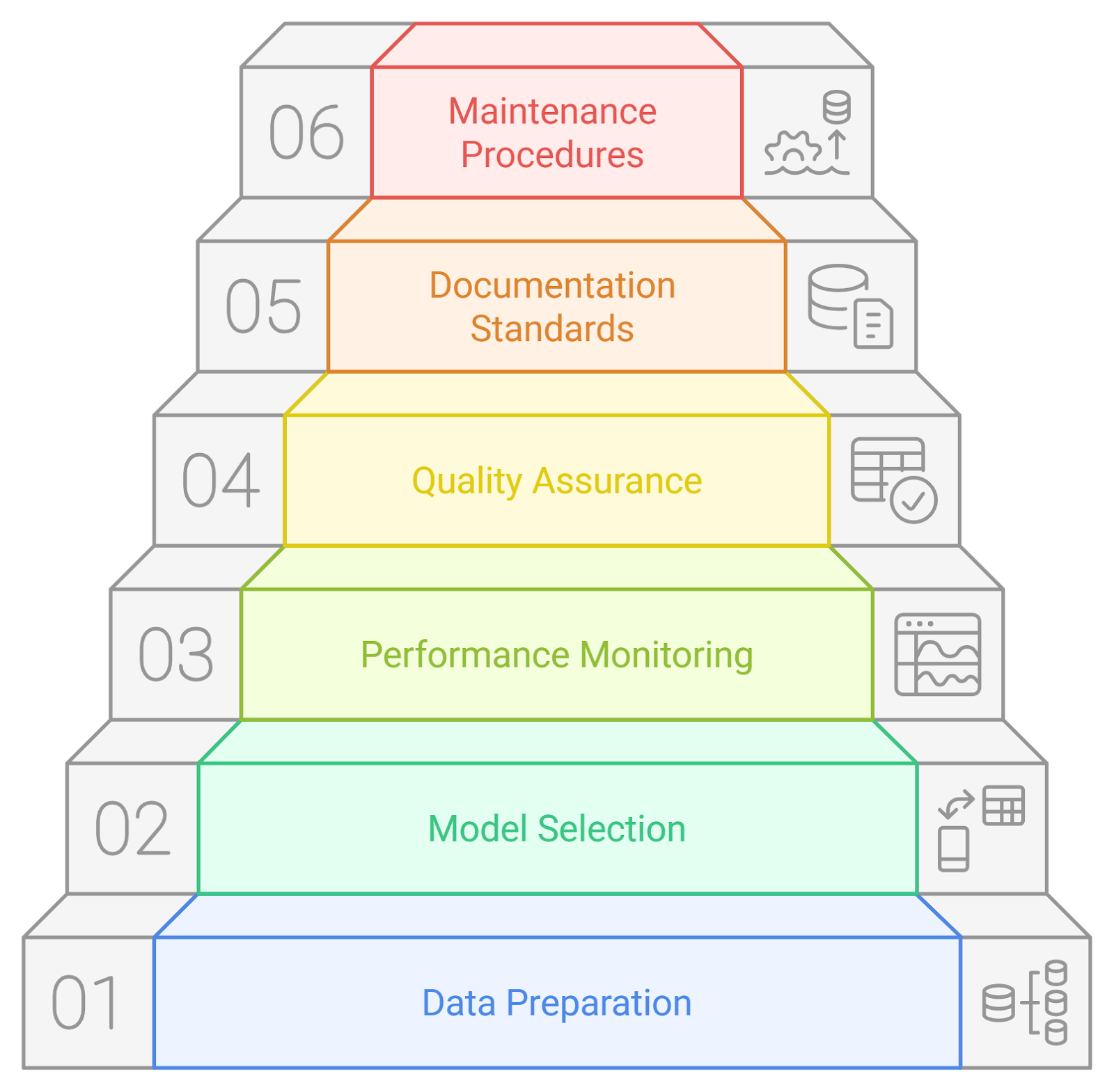

4.3. Model Training Approaches

Model training is a critical phase in the machine learning lifecycle, where algorithms learn from data to make predictions or decisions. Various approaches can be employed to enhance the training process, each with its own advantages and challenges.

- Supervised Learning: This approach involves training a model on labeled data, where the input-output pairs are known. It is widely used for tasks like classification and regression. Rapid Innovation leverages supervised learning to help clients develop predictive models that drive informed decision-making, ultimately leading to increased ROI.

- Unsupervised Learning: In this method, the model is trained on data without labels, allowing it to identify patterns and relationships. Common applications include clustering and dimensionality reduction. By utilizing unsupervised learning, Rapid Innovation assists clients in uncovering hidden insights within their data, enabling them to optimize operations and enhance customer experiences.

- Semi-Supervised Learning: This hybrid approach combines labeled and unlabeled data, leveraging the strengths of both supervised and unsupervised learning. It is particularly useful when acquiring labeled data is expensive or time-consuming. Rapid Innovation employs semi-supervised learning to maximize the value of existing data, ensuring clients achieve their business objectives efficiently.

- Reinforcement Learning: This approach focuses on training models through trial and error, where an agent learns to make decisions by receiving rewards or penalties based on its actions. It is commonly used in robotics and game playing. Rapid Innovation's expertise in reinforcement learning allows clients to develop adaptive systems that improve over time, leading to enhanced operational efficiency and cost savings.

- Transfer Learning: This technique involves taking a pre-trained model and fine-tuning it on a new, but related task. It significantly reduces training time and resource requirements, making it ideal for scenarios with limited data. Rapid Innovation utilizes transfer learning to accelerate project timelines and reduce costs for clients, ensuring they achieve greater ROI. Transfer learning is particularly effective in deep learning, where large pre-trained networks can be adapted to new tasks.

- Online Learning: This method allows models to be trained incrementally as new data becomes available, making it suitable for dynamic environments. Rapid Innovation incorporates online machine learning techniques to ensure models remain relevant and accurate over time.

- Federated Learning: This approach enables training models across multiple decentralized devices while keeping data localized. It enhances privacy and security, making it an attractive option for sensitive applications. Rapid Innovation explores federated learning strategies to address client needs in data-sensitive industries.

- XGBoost Training: XGBoost is a library for training gradient-boosted decision trees, known for its efficiency and strong performance on structured (tabular) data. Rapid Innovation employs XGBoost to deliver high-performing models for clients.
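Online learning is the easiest of these approaches to show in a few lines. The model below updates its parameters after every single observation, instead of being retrained on the full dataset, so it can follow a relationship that drifts. This is a minimal sketch under several assumptions. The model is a one-feature linear regression updated by stochastic gradient descent, the data stream is hypothetical and noise-free, and the 0.05 learning rate is illustrative. The `partial_fit` method name echoes the convention scikit-learn uses for incremental estimators.

```python
class OnlineLinearRegression:
    """Approximates y by w * x + b, updated one observation at a time by stochastic gradient descent."""

    def __init__(self, learning_rate: float = 0.05):
        self.w = 0.0
        self.b = 0.0
        self.learning_rate = learning_rate

    def predict(self, x: float) -> float:
        return self.w * x + self.b

    def partial_fit(self, x: float, y: float) -> None:
        # Gradient of the squared error 0.5 * (prediction - y)**2 with respect to w and b.
        error = self.predict(x) - y
        self.w -= self.learning_rate * error * x
        self.b -= self.learning_rate * error


def stream(n, slope, intercept):
    """Hypothetical noise-free data stream: y = slope * x + intercept."""
    for i in range(n):
        x = (i % 10) / 10
        yield x, slope * x + intercept


model = OnlineLinearRegression()
for x, y in stream(2000, slope=2.0, intercept=1.0):
    model.partial_fit(x, y)
print(round(model.w, 2), round(model.b, 2))

# The relationship drifts; the same model keeps learning without retraining from scratch.
for x, y in stream(2000, slope=-1.0, intercept=3.0):
    model.partial_fit(x, y)
print(round(model.w, 2), round(model.b, 2))
```

After each phase, the printed parameters should be close to the slope and intercept generating the stream at that point. Only the latest observation is needed for each update, which keeps memory use constant.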

4.4. Deployment Frameworks

Once a model is trained, deploying it effectively is crucial for real-world applications. Deployment frameworks provide the necessary tools and infrastructure to integrate machine learning models into production environments.

- TensorFlow Serving: This is an open-source system designed for serving machine learning models in production. It serves TensorFlow SavedModels out of the box, can be extended to other model types, and provides features such as model versioning and metrics for monitoring.

- Flask and FastAPI: These lightweight web frameworks allow developers to create APIs for their models, making it easy to integrate machine learning capabilities into web applications.

- Kubernetes: This container orchestration platform is widely used for deploying machine learning models at scale. It automates the deployment, scaling, and management of containerized applications.

- MLflow: An open-source platform that manages the machine learning lifecycle, MLflow provides tools for tracking experiments, packaging code into reproducible runs, and sharing models.

- Amazon SageMaker: A fully managed service that provides tools for building, training, and deploying machine learning models quickly. It offers built-in algorithms and supports custom model deployment.
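The core of what Flask or FastAPI provides for model serving is a route that accepts JSON features and returns a JSON prediction. The sketch below is minimal and uses only the standard library's `http.server`, so it runs without extra dependencies. Python's documentation does not recommend `http.server` for production, and in practice Flask or FastAPI would replace the hand-written handler. Several details are hypothetical: the `/predict` route, the payload shape, and the three linear-model weights that stand in for a trained model.

```python
import json
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

WEIGHTS = [0.4, -1.2, 2.0]   # hypothetical parameters of an already-trained linear model


def predict(features):
    """Stand-in for a real model loaded once at startup."""
    if len(features) != len(WEIGHTS):
        raise ValueError(f"expected {len(WEIGHTS)} features, got {len(features)}")
    return sum(w * float(x) for w, x in zip(WEIGHTS, features))


class PredictHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        if self.path != "/predict":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        try:
            payload = json.loads(self.rfile.read(length))
            body, status = {"prediction": predict(payload["features"])}, 200
        except (ValueError, KeyError, TypeError) as exc:
            # Malformed JSON, a missing "features" key, or the wrong shape.
            body, status = {"error": str(exc)}, 400
        data = json.dumps(body).encode()
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)

    def log_message(self, format, *args):
        pass  # silence the default per-request logging to stderr


def serve(host="127.0.0.1", port=8000):
    """Block forever, answering POST /predict with {"prediction": ...}."""
    with ThreadingHTTPServer((host, port), PredictHandler) as server:
        server.serve_forever()
```

After calling `serve()`, POSTing `{"features": [1.0, 0.5, 0.25]}` to `/predict` returns a prediction of about 0.3, and a malformed body returns HTTP 400. Frameworks such as FastAPI add request validation, documentation, and asynchronous workers on top of this same request/response shape.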

4.5. Performance Optimization

Optimizing the performance of machine learning models is essential to ensure they operate efficiently and effectively in production. Various strategies can be employed to enhance model performance.

- Hyperparameter Tuning: This involves adjusting the parameters that govern the training process to improve model accuracy. Techniques like grid search and random search are commonly used for this purpose.

- Feature Engineering: Selecting and transforming input features can significantly impact model performance. Techniques such as normalization, encoding categorical variables, and creating interaction terms can enhance model accuracy.

- Model Compression: Reducing the size of a model while maintaining its performance can lead to faster inference times and lower resource consumption. Techniques include pruning, quantization, and knowledge distillation.

- Ensemble Methods: Combining multiple models can lead to improved performance. Techniques like bagging, boosting, and stacking leverage the strengths of different models to achieve better results.

- Monitoring and Maintenance: Continuous monitoring of model performance in production is crucial. Implementing feedback loops and retraining models with new data can help maintain accuracy over time. Rapid Innovation emphasizes the importance of ongoing model performance monitoring to ensure that clients' systems remain effective and aligned with their evolving business goals.
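The difference between grid search and random search is easiest to see with the same evaluation budget for both. Grid search enumerates every listed combination. Random search samples configurations, drawing scale-type hyperparameters such as the learning rate log-uniformly. This is a minimal sketch. `validation_error` is a hypothetical stand-in for "train with these settings and return the validation error", and its minimum is placed at a learning rate of 0.1 and a depth of 6 purely for illustration.

```python
import itertools
import random


def validation_error(learning_rate: float, depth: int) -> float:
    """Hypothetical stand-in for 'train with these settings and return validation error'.

    Minimised at learning_rate=0.1, depth=6 with an error of 0.05.
    """
    return 100 * (learning_rate - 0.1) ** 2 + 0.01 * (depth - 6) ** 2 + 0.05


def grid_search(grid):
    """Evaluate every combination in the grid and keep the best."""
    best = None
    for lr, depth in itertools.product(grid["learning_rate"], grid["depth"]):
        err = validation_error(lr, depth)
        if best is None or err < best[0]:
            best = (err, {"learning_rate": lr, "depth": depth})
    return best


def random_search(n_trials, seed=0):
    """Sample configurations: learning rate log-uniform in [1e-3, 1], depth uniform in 2..10."""
    rng = random.Random(seed)
    best = None
    for _ in range(n_trials):
        params = {"learning_rate": 10 ** rng.uniform(-3, 0), "depth": rng.randint(2, 10)}
        err = validation_error(**params)
        if best is None or err < best[0]:
            best = (err, params)
    return best


grid = {"learning_rate": [0.001, 0.01, 0.1, 1.0], "depth": [2, 4, 6, 8]}
print(grid_search(grid))            # 16 evaluations
print(random_search(n_trials=16))   # same budget, sampled instead of enumerated
```

Grid search only finds the optimum here because the grid happens to contain it. Random search explores more distinct values of each hyperparameter for the same budget, which tends to help when only a few hyperparameters matter.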

5. Advanced Analysis Techniques

Advanced analysis techniques are essential for extracting meaningful insights from complex datasets. These methods help in simplifying data, enhancing interpretability, and improving the performance of machine learning models. Among these techniques, dimensionality reduction plays a crucial role in managing high-dimensional data, which is particularly relevant for organizations looking to leverage AI for data-driven decision-making.

5.1. Dimensionality Reduction

Dimensionality reduction refers to the process of reducing the number of random variables under consideration, obtaining a set of principal variables. This technique is particularly useful in scenarios where datasets have a large number of features, which can lead to overfitting and increased computational costs. At Rapid Innovation, we use dimensionality reduction techniques, including feature selection and feature extraction methods such as principal component analysis (PCA), to help our clients streamline their data analysis processes, ultimately leading to greater ROI.

Benefits of dimensionality reduction include:

- Improved Visualization: Reducing dimensions allows for easier visualization of data, making it possible to plot high-dimensional data in two or three dimensions. This is particularly beneficial for stakeholders who need to understand complex data insights quickly.

- Reduced Overfitting: By eliminating irrelevant features, models can generalize better to unseen data. This is crucial for businesses aiming to deploy robust AI models that perform well in real-world scenarios.

- Enhanced Performance: Fewer dimensions can lead to faster training times and improved model performance. This efficiency translates into cost savings and quicker time-to-market for AI solutions.

- Noise Reduction: Dimensionality reduction can help in filtering out noise from the data, leading to more accurate predictions. This is vital for organizations that rely on precise data analytics for strategic decision-making.

5.1.1. Principal Component Analysis

Principal Component Analysis (PCA) is one of the most widely used techniques for dimensionality reduction. It transforms the original variables into a new set of variables, known as principal components, which are orthogonal and capture the maximum variance in the data. At Rapid Innovation, we implement PCA for dimensionality reduction to enhance our clients' data processing capabilities, ensuring they derive actionable insights from their datasets.

Key aspects of PCA include:

- Variance Maximization: PCA identifies the directions (principal components) in which the data varies the most. The first principal component captures the most variance, followed by the second, and so on. This allows businesses to focus on the most significant factors influencing their operations.

- Linear Transformation: PCA is a linear technique, meaning it assumes that the relationships between variables are linear. This can be a limitation in cases where the data has non-linear relationships, prompting us to explore alternative methods when necessary.

- Feature Reduction: By selecting a subset of principal components, PCA reduces the dimensionality of the dataset while retaining most of the information. This is particularly useful for clients with large datasets, as it simplifies analysis without sacrificing quality.

- Data Preprocessing: PCA requires the data to be centered. Features are usually standardized as well when they have different units or scales, because otherwise the features with the largest numeric ranges dominate the components. Our team at Rapid Innovation ensures that data preprocessing is handled meticulously to maximize the effectiveness of PCA.
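The key aspects above map directly onto a short NumPy implementation. It centers and standardizes the data, then takes the singular value decomposition (SVD). The right singular vectors are the orthogonal principal directions, and the squared singular values give the variance each direction captures. This is a minimal sketch, not a replacement for a library implementation such as scikit-learn's `PCA`. The three-feature dataset is hypothetical: two features that are nearly multiples of each other, plus one independent noise feature.

```python
import numpy as np


def pca(X, n_components, standardize=True):
    """Principal component analysis via the singular value decomposition.

    Returns the projected data (scores), the principal directions (one per row),
    and the share of total variance each retained component explains.
    """
    X = np.asarray(X, dtype=float)
    Xc = X - X.mean(axis=0)                      # PCA always works on centred data
    if standardize:
        std = Xc.std(axis=0, ddof=1)
        Xc = Xc / np.where(std == 0, 1.0, std)   # unit variance; guard constant columns
    # Rows of Vt are orthonormal directions ordered by decreasing singular value.
    _, S, Vt = np.linalg.svd(Xc, full_matrices=False)
    variance = S ** 2 / (len(X) - 1)
    ratio = variance / variance.sum()
    components = Vt[:n_components]
    return Xc @ components.T, components, ratio[:n_components]


# Hypothetical data: feature 2 is almost a multiple of feature 1; feature 3 is independent noise.
rng = np.random.default_rng(0)
t = rng.normal(size=200)
X = np.column_stack([t, 2 * t + 0.1 * rng.normal(size=200), rng.normal(size=200)])

scores, components, ratio = pca(X, n_components=2)
print(scores.shape)          # (200, 2)
print(np.round(ratio, 2))    # roughly two thirds and one third of the variance
```

The two correlated features collapse onto the first component. Keeping two components therefore retains almost all of the information while dropping one dimension.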

Applications of PCA include:

- Image Compression: PCA can reduce the size of image files while preserving essential features, making it a valuable tool for companies in the media and entertainment sectors.

- Genomics: In bioinformatics, PCA is used to analyze gene expression data and identify patterns, aiding research institutions in their scientific endeavors.

- Finance: PCA helps in risk management by identifying key factors that influence asset prices, providing financial institutions with critical insights for investment strategies.

PCA is a powerful tool, but it is essential to understand its limitations. For instance, it may not perform well with non-linear data distributions. In such cases, other techniques like t-Distributed Stochastic Neighbor Embedding (t-SNE) or Uniform Manifold Approximation and Projection (UMAP) for dimension reduction may be more appropriate, and our experts are well-versed in selecting the right approach for each unique dataset.

In conclusion, advanced analysis techniques like dimensionality reduction and PCA are vital for effective data analysis. They enable organizations to manage high-dimensional data efficiently, leading to better insights and decision-making. At Rapid Innovation, we are committed to helping our clients harness these techniques to achieve their business goals efficiently and effectively, ultimately driving greater ROI.

5.1.2. t-SNE

t-Distributed Stochastic Neighbor Embedding (t-SNE) is a powerful technique for dimensionality reduction, particularly useful for visualizing high-dimensional data. It is widely used in machine learning and data science to help understand complex datasets.

- t-SNE converts distances between data points into joint probabilities that express how likely two points are to be neighbors. It then searches for a low-dimensional embedding whose joint probabilities, computed with a Student-t kernel, minimize the Kullback-Leibler divergence from the high-dimensional ones.

- The algorithm is particularly effective for visualizing clusters in data, making it a popular choice for exploratory data analysis.

- t-SNE is sensitive to the choice of parameters, particularly the perplexity, which can affect the resulting visualization. A typical range for perplexity is between 5 and 50.

- It is computationally intensive. The exact algorithm scales quadratically with the number of points, and even approximations such as Barnes-Hut, which reduce the cost to O(n log n), can take a long time on large datasets.

- t-SNE is often used in conjunction with other techniques, such as PCA, which first reduces the number of dimensions so that t-SNE runs faster and is less affected by noise.
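The role of perplexity becomes clearer with the first stage of t-SNE, sketched below. Each point gets its own Gaussian bandwidth, found by bisection so that 2 raised to the entropy of its neighbor distribution equals the requested perplexity. The resulting conditionals are then symmetrized into the joint probabilities that t-SNE matches in the low-dimensional space. This is a minimal NumPy sketch of that affinity computation only. The gradient-descent optimization of the embedding with the Student-t kernel is omitted, and the tolerance and iteration limits are illustrative. In practice, scikit-learn's `sklearn.manifold.TSNE(perplexity=...)` performs the whole procedure.

```python
import numpy as np


def _row_distribution(sq_dists, beta):
    """Gaussian conditional probabilities p_{j|i} for one point, and their entropy in bits."""
    p = np.exp(-(sq_dists - sq_dists.min()) * beta)   # shifting by the minimum cancels on normalising
    p /= p.sum()
    entropy = -np.sum(p * np.log2(np.maximum(p, 1e-12)))
    return p, entropy


def tsne_affinities(X, perplexity=30.0, tol=1e-5, max_iter=100):
    """Input affinities of t-SNE: calibrate each point's Gaussian so that
    2 ** entropy(p_{.|i}) equals the requested perplexity, then symmetrise."""
    X = np.asarray(X, dtype=float)
    n = X.shape[0]
    sq = np.sum(X ** 2, axis=1)
    D = np.maximum(sq[:, None] + sq[None, :] - 2 * X @ X.T, 0.0)   # squared distances
    target = np.log2(perplexity)
    P_cond = np.zeros((n, n))
    for i in range(n):
        others = np.arange(n) != i                  # a point is not its own neighbour
        beta, lo, hi = 1.0, 0.0, np.inf             # beta = 1 / (2 * sigma_i ** 2)
        for _ in range(max_iter):
            p, h = _row_distribution(D[i, others], beta)
            if abs(h - target) < tol:
                break
            if h > target:                          # too flat: narrow the Gaussian
                lo = beta
                beta = beta * 2 if hi == np.inf else (beta + hi) / 2
            else:                                   # too peaked: widen it
                hi = beta
                beta = (beta + lo) / 2
        P_cond[i, others] = p
    # Joint probabilities p_ij = (p_{j|i} + p_{i|j}) / (2n) are what t-SNE matches in 2-D.
    return (P_cond + P_cond.T) / (2 * n), P_cond


rng = np.random.default_rng(1)
X = rng.normal(size=(60, 5))
P, P_cond = tsne_affinities(X, perplexity=10.0)
print(P.shape, round(P.sum(), 6))   # (60, 60) 1.0
```

Perplexity acts roughly as an effective number of neighbors. Low values emphasize very local structure, and high values spread each point's attention more widely, which is why the visualization changes with this setting.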

5.1.3. UMAP

Uniform Manifold Approximation and Projection (UMAP) is another dimensionality reduction technique that has gained popularity for its speed and effectiveness in preserving the global structure of data.

- UMAP is based on manifold learning and topological data analysis, making it suitable for a wide range of applications, including clustering and visualization.

- It is generally faster than t-SNE, allowing it to handle larger datasets more efficiently.

- UMAP tends to preserve more of the global structure than t-SNE while still keeping local neighborhoods intact, which can lead to more meaningful visualizations.

- The algorithm requires tuning of parameters such as the number of neighbors and the minimum distance between embedded points (`n_neighbors` and `min_dist` in the umap-learn library), which can significantly influence the output.

- UMAP is increasingly being used in various fields, including genomics, image processing, and natural language processing, due to its versatility and effectiveness.
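The `n_neighbors` parameter enters UMAP when it builds its weighted neighborhood graph. For each point, rho is the distance to its nearest neighbor. Sigma is chosen so that the memberships exp(-(d - rho) / sigma) over its k neighbors sum to log2(k). The directed edges are then merged with the fuzzy union a + b - ab. The NumPy sketch below follows those definitions from the UMAP paper for this graph-construction stage only. The layout optimization, which is where `min_dist` acts, is omitted. The brute-force distance matrix and the bisection settings are simplifications that the umap-learn library does not use.

```python
import numpy as np


def umap_fuzzy_graph(X, n_neighbors=15, n_iter=64):
    """Weighted k-nearest-neighbour graph built the way the UMAP paper describes.

    For each point i: rho_i is the distance to its nearest neighbour and sigma_i is
    chosen so that sum_j exp(-(d_ij - rho_i) / sigma_i) over its k neighbours equals
    log2(k). The directed graphs are then merged with a fuzzy union.
    """
    X = np.asarray(X, dtype=float)
    n = X.shape[0]
    D = np.sqrt(((X[:, None, :] - X[None, :, :]) ** 2).sum(axis=-1))
    np.fill_diagonal(D, np.inf)                     # exclude self-matches
    knn = np.argsort(D, axis=1)[:, :n_neighbors]
    target = np.log2(n_neighbors)
    A = np.zeros((n, n))
    for i in range(n):
        d = D[i, knn[i]]
        rho = d[0]                                  # nearest neighbour gets membership 1
        lo, hi, sigma = 0.0, np.inf, 1.0
        for _ in range(n_iter):                     # bisection on sigma
            total = np.exp(-np.maximum(d - rho, 0.0) / sigma).sum()
            if total > target:                      # memberships too large: shrink sigma
                hi = sigma
                sigma = (lo + hi) / 2
            else:                                   # too small: grow sigma
                lo = sigma
                sigma = sigma * 2 if hi == np.inf else (lo + hi) / 2
        A[i, knn[i]] = np.exp(-np.maximum(d - rho, 0.0) / sigma)
    # Fuzzy set union of the two directed edge weights: a + b - a * b.
    return A + A.T - A * A.T


rng = np.random.default_rng(0)
G = umap_fuzzy_graph(rng.normal(size=(50, 4)), n_neighbors=10)
print(G.shape, bool(np.allclose(G, G.T)))   # (50, 50) True
```

Larger `n_neighbors` values connect each point to more of the dataset, shifting the emphasis from local toward global structure.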

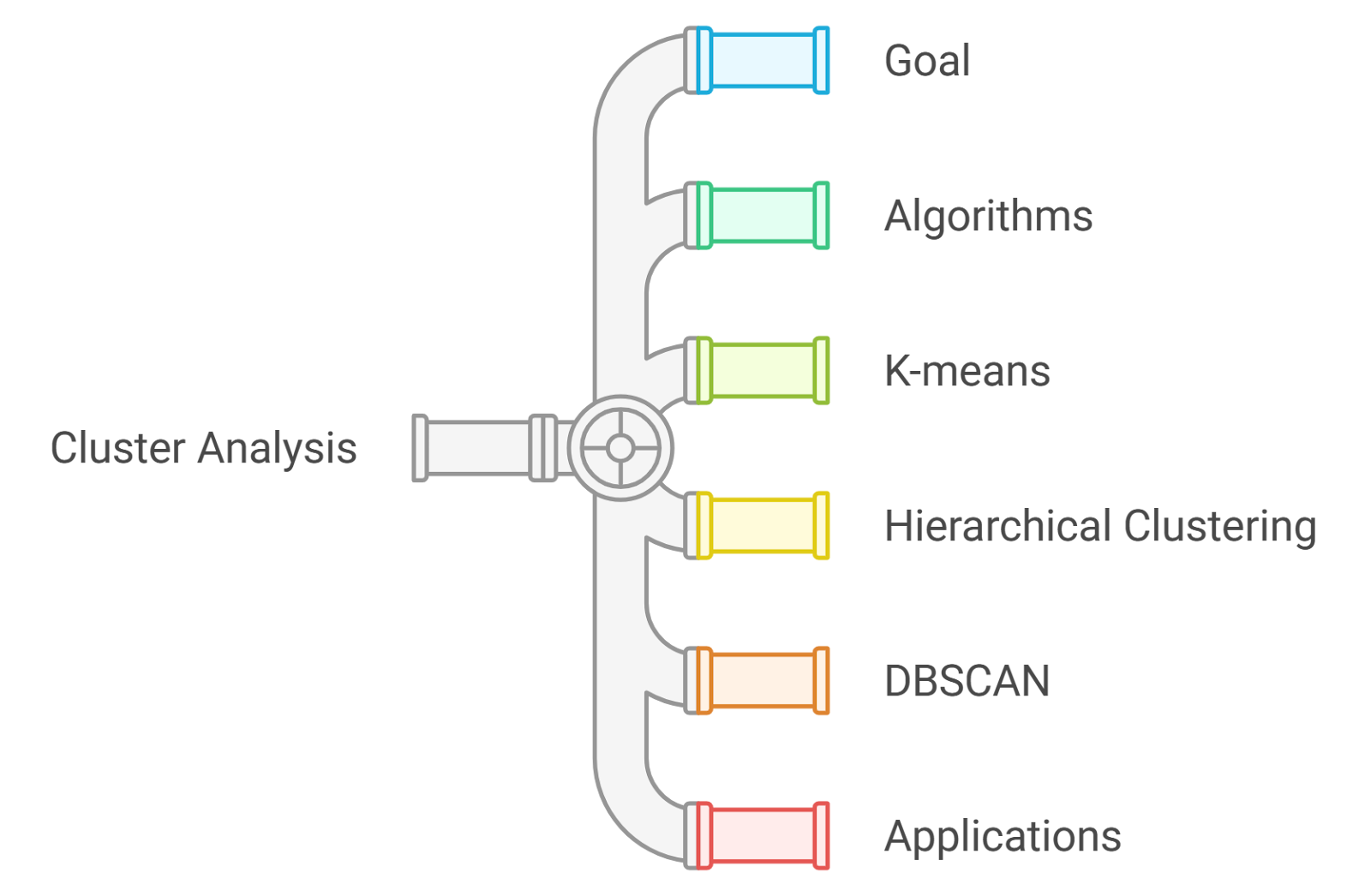

5.2. Cluster Analysis

Cluster analysis is a statistical technique used to group similar data points into clusters, allowing for better understanding and interpretation of data.

- The primary goal of cluster analysis is to identify natural groupings within a dataset, which can reveal patterns and relationships that may not be immediately apparent.

- There are several clustering algorithms available, including K-means, hierarchical clustering, and DBSCAN, each with its strengths and weaknesses.

- K-means is one of the most popular clustering methods, known for its simplicity and efficiency. It partitions data into K clusters, with K chosen in advance, by minimizing the sum of squared distances between each point and its cluster's centroid.

- Hierarchical clustering builds a tree-like structure of clusters, allowing for a more detailed view of data relationships. It can be agglomerative (bottom-up) or divisive (top-down).

- DBSCAN (Density-Based Spatial Clustering of Applications with Noise) is effective for identifying clusters of varying shapes and sizes, making it suitable for datasets with noise and outliers.

- Cluster analysis is widely used in various fields, including marketing, biology, and social sciences, to segment populations, identify trends, and make data-driven decisions.
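K-means as described above can be sketched with Lloyd's algorithm. The algorithm alternates between assigning each point to its nearest centroid and moving each centroid to the mean of its points, until the centroids stop moving. The initialization uses k-means++, which spreads the starting centroids apart, and the best of several restarts is kept because K-means can settle in a poor local optimum. scikit-learn's `KMeans` uses the same initialization and restart approach by default. This is a minimal NumPy sketch, and the three-blob dataset is hypothetical.

```python
import numpy as np


def kmeans(X, k, n_iter=100, seed=0):
    """Lloyd's algorithm with k-means++ initialisation.

    Returns cluster labels, centroids, and the within-cluster sum of squares (inertia).
    """
    X = np.asarray(X, dtype=float)
    rng = np.random.default_rng(seed)
    # k-means++: each new seed is drawn with probability proportional to its squared
    # distance from the nearest seed chosen so far, which spreads the seeds out.
    centroids = X[[rng.integers(len(X))]]
    for _ in range(1, k):
        d2 = ((X[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2).min(axis=1)
        centroids = np.vstack([centroids, X[rng.choice(len(X), p=d2 / d2.sum())]])
    for _ in range(n_iter):
        # Assignment step: each point joins its nearest centroid.
        labels = ((X[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2).argmin(axis=1)
        # Update step: each centroid moves to the mean of its points (empty clusters stay put).
        updated = np.array([X[labels == j].mean(axis=0) if np.any(labels == j) else centroids[j]
                            for j in range(k)])
        if np.allclose(updated, centroids):
            break
        centroids = updated
    labels = ((X[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2).argmin(axis=1)
    inertia = float(((X - centroids[labels]) ** 2).sum())
    return labels, centroids, inertia


# Hypothetical data: three well-separated blobs of 30 points each.
rng = np.random.default_rng(7)
X = np.vstack([rng.normal(loc=c, scale=0.3, size=(30, 2)) for c in [(0, 0), (5, 5), (0, 5)]])
# Keep the restart with the lowest inertia.
labels, centroids, inertia = min((kmeans(X, k=3, seed=s) for s in range(5)), key=lambda r: r[2])
print(np.bincount(labels))   # [30 30 30]
```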

At Rapid Innovation, we leverage advanced techniques like t-SNE and UMAP in our AI solutions to help clients visualize and interpret their data more effectively. By employing cluster analysis and dimensionality reduction in machine learning, we assist businesses in uncovering valuable insights from their datasets, ultimately driving better decision-making and enhancing ROI. Our expertise in these areas ensures that clients can harness the full potential of their data, leading to more efficient and effective business strategies.

5.3. Anomaly Detection

Anomaly detection is a critical process in data analysis that focuses on identifying unusual patterns or outliers in datasets. These anomalies can indicate significant events, errors, or fraud, making their detection essential in various fields.

- Definition: Anomaly detection refers to the identification of data points that deviate significantly from the majority of the data. These deviations can be due to noise, errors, or genuine anomalies.

- Applications:

- Fraud detection in banking and finance, where Rapid Innovation employs advanced machine learning algorithms to identify suspicious transactions in real time, significantly reducing financial losses.

- Network security, where AI-driven solutions monitor network traffic and detect anomalies that indicate cyber threats or potential breaches.

- Quality control in manufacturing processes, where our AI models analyze production data to flag defects early, ensuring product quality and reducing waste.

- Techniques:

- Statistical methods, such as Z-scores and Grubbs' test, which Rapid Innovation integrates into its analytics platforms for baseline anomaly detection.

- Machine learning approaches, both supervised and unsupervised, which learn from historical data to improve detection accuracy over time.

- Clustering methods, such as k-means and DBSCAN, which group the data so that points far from any cluster (or labeled as noise) can be flagged as outliers, enhancing the robustness of our anomaly detection systems.

- Challenges:

- High dimensionality can complicate the detection process, but our expertise in dimensionality reduction techniques helps streamline analysis.

- The need for labeled data in supervised learning can limit effectiveness; however, Rapid Innovation employs semi-supervised learning techniques to mitigate this issue.

- Balancing false positives and false negatives is crucial for practical applications, and our solutions are designed to optimize this balance, ensuring reliable outcomes.
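The Z-score technique listed above can be sketched in a few lines: standardize each value by the sample mean and standard deviation, then flag values whose absolute score exceeds a threshold. The function name `zscore_anomalies` and the default threshold of 3.0 are assumptions (3 is a common convention, not a universal rule), and the readings are made up for illustration.

```python
import statistics
from typing import List, Sequence

def zscore_anomalies(values: Sequence[float], threshold: float = 3.0) -> List[int]:
    """Hypothetical helper: indices of values whose |z-score| exceeds threshold."""
    if len(values) < 2:
        return []  # a sample standard deviation needs at least two observations
    mean = statistics.mean(values)
    std = statistics.stdev(values)  # sample (n - 1) standard deviation
    if std == 0:
        return []  # all values identical: nothing deviates
    return [i for i, v in enumerate(values) if abs(v - mean) / std > threshold]

if __name__ == "__main__":
    # Illustrative sensor readings with one injected spike at the end.
    readings = [10, 11, 9, 10, 12, 10, 11, 9, 10, 11,
                10, 9, 10, 11, 10, 9, 10, 12, 10, 60]
    print(zscore_anomalies(readings))  # → [19]
```

Two caveats explain why more robust methods are also used. An extreme value inflates the very standard deviation it is measured against, and with the sample standard deviation no point among n values can have |z| larger than (n - 1)/√n, so with ten or fewer observations nothing can exceed a threshold of 3. Grubbs' test, whose critical value depends on the sample size, or median-based scores are common remedies.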

5.4. Time Series Analysis

Time series analysis involves examining data points collected or recorded at specific time intervals. This technique is vital for understanding trends, seasonal patterns, and cyclical behaviors in data over time.

- Definition: Time series analysis comprises the statistical techniques used to analyze time-ordered data, extract meaningful insights, and forecast future values.

- Applications:

- Economic forecasting, such as predicting GDP growth, where Rapid Innovation utilizes sophisticated models to provide accurate economic insights.

- Stock market analysis to identify trends and make investment decisions, leveraging AI algorithms that analyze historical stock data for predictive analytics.

- Weather and climate forecasting, employing machine learning models that analyze historical weather data for improved accuracy.

- Components:

- Trend: The long-term movement in the data.

- Seasonality: Regular patterns that repeat over specific intervals.

- Noise: Random variations that do not follow a pattern.

- Techniques:

- Autoregressive Integrated Moving Average (ARIMA) models for forecasting, which Rapid Innovation customizes for client-specific datasets.

- Seasonal and Trend decomposition using Loess (STL) to separate the trend, seasonal, and remainder components, enhancing the interpretability of time series data.

- Exponential smoothing methods for trend analysis, providing clients with actionable insights for strategic planning.

- Challenges:

- Missing data points can skew results if handled poorly; our solutions include robust imputation techniques to address this issue.

- Non-stationarity in time series data may require transformation, and our expertise ensures that data is appropriately pre-processed for analysis.

- Overfitting models can lead to poor predictive performance, which we mitigate through rigorous model validation processes.
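Exponential smoothing, listed above, can be illustrated with its simplest form, simple exponential smoothing: each smoothed value is a weighted average of the newest observation and the previous smoothed value, s_t = α·x_t + (1 - α)·s_{t-1}, and the last smoothed value serves as a flat forecast for the next period. This is a minimal sketch; the name `simple_exponential_smoothing` and initializing with the first observation are assumptions, and series with a clear trend or seasonality usually call for the Holt or Holt-Winters extensions instead.

```python
from typing import List, Sequence

def simple_exponential_smoothing(series: Sequence[float], alpha: float) -> List[float]:
    """Hypothetical helper: smoothed series; the last element is the one-step forecast."""
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in the interval (0, 1]")
    if not series:
        return []
    smoothed = [float(series[0])]  # assumption: start the level at the first observation
    for x in series[1:]:
        # A larger alpha weights recent observations more heavily.
        smoothed.append(alpha * x + (1 - alpha) * smoothed[-1])
    return smoothed

if __name__ == "__main__":
    print(simple_exponential_smoothing([10, 12, 11, 13], alpha=0.5))  # [10.0, 11.0, 11.0, 12.0]
```

Choosing alpha is the main design decision: values near 1 track the data closely but pass noise through, while values near 0 smooth heavily but react slowly to genuine shifts.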

5.5. Pattern Mining

Pattern mining is the process of discovering interesting and useful patterns from large datasets. This technique is widely used in data mining to extract valuable insights that can inform decision-making.

- Definition: Pattern mining involves identifying regularities, correlations, or trends within data, often using algorithms to automate the process.

- Applications:

- Market basket analysis to understand consumer purchasing behavior, where Rapid Innovation helps retailers optimize inventory and marketing strategies based on consumer patterns.

- Web usage mining to analyze user interactions on websites, providing insights that enhance user experience and engagement.

- Bioinformatics for discovering patterns in genetic data, where our advanced analytics support research and development in healthcare.

- Techniques:

- Association rule mining, for example with the Apriori algorithm, to find items or attributes that frequently occur together, which we implement in various client projects to uncover hidden insights.

- Sequential pattern mining to identify patterns over time, enabling businesses to anticipate customer needs and behaviors.

- Clustering techniques to group similar data points, facilitating targeted marketing and personalized customer experiences.

- Challenges:

- The sheer volume of data can make pattern discovery computationally intensive; our scalable solutions ensure efficient processing.

- Identifying meaningful patterns without overwhelming noise is crucial, and our expertise in data cleaning and preprocessing enhances the quality of insights.

- Ensuring that discovered patterns are actionable and relevant to business objectives is a core focus of Rapid Innovation, driving tangible results for our clients.
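Association rule mining with Apriori rests on two measures: the support of an itemset (the fraction of transactions that contain it) and the confidence of a rule A → B (the support of A ∪ B divided by the support of A). The sketch below is a compact, unoptimized version of Apriori's level-wise search, which grows candidate itemsets one item at a time and prunes any candidate that has an infrequent subset. The function names and the toy shopping baskets are hypothetical.

```python
from itertools import combinations
from typing import Dict, FrozenSet, List, Set

Itemset = FrozenSet[str]

def apriori(transactions: List[Set[str]], min_support: float) -> Dict[Itemset, float]:
    """Hypothetical helper: map every itemset with support >= min_support to its support."""
    n = len(transactions)
    candidates = {frozenset([item]) for t in transactions for item in t}
    frequent: Dict[Itemset, float] = {}
    k = 1
    while candidates:
        # Count the fraction of transactions containing each size-k candidate.
        level: Dict[Itemset, float] = {}
        for c in candidates:
            support = sum(1 for t in transactions if c <= t) / n
            if support >= min_support:
                level[c] = support
        frequent.update(level)
        # Join frequent k-itemsets into (k+1)-candidates; keep a candidate only if
        # all of its k-item subsets are frequent (the Apriori property).
        k += 1
        candidates = set()
        for a, b in combinations(level, 2):
            union = a | b
            if len(union) == k and all(frozenset(s) in level
                                       for s in combinations(union, k - 1)):
                candidates.add(union)
    return frequent

def confidence(frequent: Dict[Itemset, float], antecedent: Itemset,
               consequent: Itemset) -> float:
    """Confidence of antecedent -> consequent; both itemsets must be frequent."""
    return frequent[antecedent | consequent] / frequent[antecedent]

if __name__ == "__main__":
    baskets = [{"bread", "milk"}, {"bread", "butter"},
               {"bread", "milk", "butter"}, {"milk"}]
    itemsets = apriori(baskets, min_support=0.5)
    for itemset, support in sorted(itemsets.items(),
                                   key=lambda kv: (len(kv[0]), sorted(kv[0]))):
        print(sorted(itemset), support)
    print(confidence(itemsets, frozenset({"bread"}), frozenset({"milk"})))
```

Here {milk, butter} appears in only one of four baskets, so it is dropped, and the three-item candidate is pruned without ever being counted because one of its subsets is infrequent; that pruning is what keeps Apriori tractable on larger data.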

6. Challenges and Limitations

The implementation of advanced technologies and methodologies often comes with a set of challenges and limitations. Understanding these obstacles is crucial for effective planning and execution.

6.1. Technical Challenges

Technical challenges encompass a wide range of issues that can arise during the development and deployment of technology-driven solutions. These challenges can hinder progress and affect the overall effectiveness of a project. Some of the key technical challenges include:

- Integration with existing systems can be difficult, leading to compatibility issues that may disrupt business operations, for example when rolling out electronic health record (EHR) systems.

- Data quality and availability can affect the accuracy of results, making it essential to establish robust data governance practices.

- Security concerns may arise, especially when handling sensitive information, necessitating stringent security protocols.

- Rapid technological change can make it hard to keep systems up to date, requiring ongoing investment in training and resources.

- User adoption can be slow if the technology is not user-friendly, highlighting the importance of intuitive design and user experience; this is a common issue when introducing new technology into healthcare settings.

6.1.1. Computational Complexity

Computational complexity refers to the amount of computational resources required to solve a problem or execute an algorithm. This complexity can pose significant challenges in various fields, particularly in data science, machine learning, and artificial intelligence. The challenges associated with computational complexity include:

- High computational demands can increase hardware and software costs, reducing overall ROI.

- Algorithms with high complexity may take an impractical amount of time to execute, especially on large datasets, which can delay project timelines.

- Optimization of algorithms is often necessary to reduce computational load, which can be a complex task in itself and may require specialized expertise.

- Scalability issues may arise when trying to apply solutions to larger datasets or more complex problems, necessitating a forward-thinking approach to architecture.

- The need for specialized knowledge in algorithm design and optimization can limit the pool of available talent, making it crucial to partner with experienced firms like Rapid Innovation.
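The value of optimizing an algorithm can be illustrated with a routine data-cleaning task: checking whether a list of record IDs contains duplicates. Comparing every pair costs n(n - 1)/2 comparisons in the worst case, while tracking seen IDs in a hash set needs about n lookups, trading extra memory for far less computation. The sketch counts operations rather than timing them so the comparison is deterministic; both function names are hypothetical.

```python
from typing import Hashable, Sequence, Tuple

def has_duplicates_pairwise(records: Sequence[Hashable]) -> Tuple[bool, int]:
    """Compare every pair of records: O(n^2) comparisons in the worst case."""
    comparisons = 0
    for i in range(len(records)):
        for j in range(i + 1, len(records)):
            comparisons += 1
            if records[i] == records[j]:
                return True, comparisons
    return False, comparisons

def has_duplicates_hashed(records: Sequence[Hashable]) -> Tuple[bool, int]:
    """Track seen records in a set: O(n) expected lookups, O(n) extra memory."""
    seen = set()
    lookups = 0
    for record in records:
        lookups += 1
        if record in seen:
            return True, lookups
        seen.add(record)
    return False, lookups

if __name__ == "__main__":
    ids = list(range(1000))  # illustrative: 1,000 distinct record IDs
    print(has_duplicates_pairwise(ids))  # (False, 499500)
    print(has_duplicates_hashed(ids))    # (False, 1000)
```

At 1,000 records the pairwise version already does roughly 500 times more work, and the gap widens with every additional record, which is why such rewrites matter far more than faster hardware as data grows.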

Understanding these technical challenges and computational complexities is essential for organizations looking to leverage technology effectively. Addressing these issues proactively leads to more successful outcomes and a smoother implementation process, ultimately helping clients achieve their business goals efficiently and effectively.

6.1.2. Memory Management

Memory management is a critical aspect of computer systems and software development. It involves the efficient allocation, use, and release of memory resources to ensure optimal performance and stability. Effective memory management can significantly affect the speed and efficiency of applications.

- Dynamic Memory Allocation: This allows programs to request memory at runtime, which is essential for applications that require varying amounts of memory. The malloc and free functions in C, and the new and delete operators in C++, are commonly used for this. At Rapid Innovation, we leverage dynamic memory allocation to build scalable AI solutions that adapt to varying workloads, ensuring efficient resource utilization.

- Garbage Collection: Automatic memory management techniques, such as garbage collection, reclaim memory occupied by objects that are no longer reachable. Languages like Java and Python manage memory automatically (Python combines reference counting with a cycle-detecting garbage collector), which prevents many, though not all, memory leaks: objects that remain referenced are never reclaimed. Our blockchain applications also benefit from garbage collection, as it helps maintain system performance by managing memory effectively.

- Memory Leaks: A memory leak occurs when a program allocates memory but fails to release it after use. This can lead to increased memory consumption and eventual system crashes. Regular monitoring and profiling tools can help identify and fix memory leaks. Rapid Innovation employs advanced monitoring tools to ensure our applications remain robust and efficient, minimizing the risk of memory leaks.

- Fragmentation: Memory fragmentation can occur when free memory is split into small, non-contiguous blocks. This can hinder the allocation of larger memory requests. Techniques like compaction can help mitigate fragmentation issues. Our development practices include strategies to minimize fragmentation, ensuring that our applications run smoothly even under heavy loads.

- Performance Impact: Poor memory management can lead to slow application performance, increased latency, and higher resource consumption. Optimizing memory usage is crucial for applications that handle large datasets or require real-time processing. At Rapid Innovation, we apply memory management techniques from the operating-system level upward to enhance the performance of our AI and blockchain solutions, ultimately leading to greater ROI for our clients. Rust's ownership model, which releases memory deterministically when its owner goes out of scope and needs no garbage collector, also offers valuable insights into efficient memory handling.

6.1.3. Algorithm Scalability

Algorithm scalability refers to the ability of an algorithm to maintain its performance as the size of the input data increases. A scalable algorithm can efficiently handle growing datasets without a significant increase in resource consumption or processing time.

- Time Complexity: The efficiency of an algorithm is often measured in terms of time complexity, which describes how execution time grows with the size of the input. Common complexities, from most to least scalable, include O(1), O(log n), O(n), O(n log n), and O(n²). Our team at Rapid Innovation designs algorithms with optimal time complexity to ensure that our solutions can handle large-scale data efficiently.

- Space Complexity: Similar to time complexity, space complexity measures the amount of memory an algorithm uses relative to the input size. An algorithm with low space complexity is preferable, especially in memory-constrained environments. We prioritize space-efficient algorithms in our AI models, ensuring that they perform well even in resource-limited scenarios.

- Big O Notation: Big O notation describes an asymptotic upper bound on how an algorithm's running time or memory use grows as the input size increases, ignoring constant factors. It helps developers understand how an algorithm will scale with larger inputs. Our expertise in algorithm design allows us to communicate performance expectations clearly to our clients, ensuring transparency in our development process.

- Real-World Applications: Scalable algorithms are essential in fields such as data processing, machine learning, and web services. For instance, MergeSort runs in O(n log n) time in all cases and QuickSort does so on average (its worst case is O(n²)), which is why both are widely used for large datasets. Rapid Innovation applies these principles to develop scalable AI solutions that can grow alongside our clients' businesses.

- Testing Scalability: To ensure an algorithm is scalable, developers often conduct stress tests and performance benchmarks. These tests help identify bottlenecks and areas for optimization. Our rigorous testing methodologies at Rapid Innovation ensure that our solutions are not only scalable but also resilient under varying loads.
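A simple way to test scalability, in the spirit of the benchmarks described above, is a doubling experiment: run an algorithm on inputs of size n and 2n and compare the work done. For an O(n²) algorithm the ratio approaches 4, while for an O(n log n) algorithm it stays only slightly above 2. The sketch below counts comparisons instead of measuring wall-clock time so the result is deterministic; it uses insertion sort and merge sort on reversed input (the worst case for insertion sort), and all function names are hypothetical.

```python
from typing import Callable, List, Sequence, Tuple

def insertion_sort(data: Sequence[int]) -> Tuple[List[int], int]:
    """Sort a copy of data; return (sorted list, comparisons). O(n^2) worst case."""
    a = list(data)
    comparisons = 0
    for i in range(1, len(a)):
        key = a[i]
        j = i - 1
        while j >= 0:
            comparisons += 1
            if a[j] <= key:
                break
            a[j + 1] = a[j]  # shift the larger element one slot to the right
            j -= 1
        a[j + 1] = key
    return a, comparisons

def merge_sort(data: Sequence[int]) -> Tuple[List[int], int]:
    """Sort a copy of data; return (sorted list, comparisons). O(n log n)."""
    comparisons = 0

    def sort(a: List[int]) -> List[int]:
        nonlocal comparisons
        if len(a) <= 1:
            return a
        mid = len(a) // 2
        left, right = sort(a[:mid]), sort(a[mid:])
        merged: List[int] = []
        i = j = 0
        while i < len(left) and j < len(right):
            comparisons += 1
            if left[i] <= right[j]:
                merged.append(left[i])
                i += 1
            else:
                merged.append(right[j])
                j += 1
        merged.extend(left[i:])
        merged.extend(right[j:])
        return merged

    return sort(list(data)), comparisons

def doubling_ratio(sort_fn: Callable[[Sequence[int]], Tuple[List[int], int]], n: int) -> float:
    """Comparisons at size 2n divided by comparisons at size n, on reversed input."""
    _, small = sort_fn(list(range(n, 0, -1)))
    _, large = sort_fn(list(range(2 * n, 0, -1)))
    return large / small

if __name__ == "__main__":
    for fn in (insertion_sort, merge_sort):
        print(fn.__name__, round(doubling_ratio(fn, 512), 2))
```

On this input the sketch reports a ratio of about 4.0 for insertion sort and about 2.2 for merge sort. Wall-clock benchmarks remain necessary in practice, since constant factors, memory access patterns, and hardware also affect real performance, but operation counts make the growth rate itself easy to see.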

6.2. Data Quality Issues