1. Introduction to AI in Genomics

Artificial Intelligence (AI) is revolutionizing the field of genomics by enhancing the analysis and interpretation of genomic data. The integration of AI technologies into genomics is enabling researchers and healthcare professionals to make more informed decisions, leading to improved patient outcomes and advancements in personalized medicine.

- AI algorithms can process vast amounts of genomic data quickly and accurately, allowing for more efficient research and development cycles.

- Machine learning models can identify patterns and correlations that may not be evident through traditional analysis, providing insights that drive innovation in treatment strategies.

- AI tools are increasingly being used to predict disease susceptibility, treatment responses, and potential drug interactions, ultimately leading to better patient management and care.

The application of AI in genomics is not just limited to data analysis; it also encompasses various aspects of research and clinical practice. By leveraging AI, researchers can accelerate the discovery of new biomarkers and therapeutic targets, ultimately leading to more effective treatments and a higher return on investment (ROI) for healthcare organizations.

- AI can assist in the identification of genetic variants associated with diseases, streamlining the diagnostic process and reducing time to treatment.

- Natural language processing (NLP) can be used to extract relevant information from scientific literature and clinical records, enhancing the knowledge base available to researchers and clinicians.

- AI-driven platforms can facilitate collaboration among researchers by providing shared access to genomic data, fostering innovation and accelerating breakthroughs in the field.

As the field of genomics continues to evolve, the role of AI will become increasingly significant. The combination of AI and genomics holds the potential to transform healthcare by enabling precision medicine, where treatments are tailored to the individual characteristics of each patient. The growing number of companies building AI-based genomics products reflects this shift. At Rapid Innovation, we are committed to helping our clients harness the power of AI in genomics to achieve their business goals efficiently and effectively, ultimately driving greater ROI and improving patient outcomes. The integration of AI into clinical and genomic diagnostics is paving the way for a new era in healthcare.

1.1. Evolution of Genomic Data Processing

The evolution of genomic data processing has been a remarkable journey, driven by advancements in technology and an increasing understanding of genetics.

- Early sequencing methods, such as Sanger sequencing, laid the groundwork for genomic research but were time-consuming and costly.

- The introduction of next-generation sequencing (NGS) revolutionized the field, allowing for rapid sequencing of entire genomes at a fraction of the cost. This shift enabled large-scale genomic studies and personalized medicine.

- The Human Genome Project, completed in 2003, was a landmark achievement that provided a reference genome and spurred the development of bioinformatics tools for analyzing raw genomic data.

- As genomic data grew exponentially, the need for efficient data processing became critical. High-throughput sequencing technologies generated terabytes of data, necessitating robust computational frameworks.

- Cloud computing and big data technologies emerged, allowing researchers to store, manage, and analyze vast amounts of genomic data more effectively.

- The integration of machine learning and artificial intelligence into genomic data processing has further enhanced the ability to interpret complex datasets, leading to breakthroughs in understanding genetic diseases and drug development. At Rapid Innovation, we leverage these advancements to provide tailored AI solutions that optimize genomic data processing, ensuring our clients achieve greater efficiency and ROI. Additionally, understanding the importance of data quality is crucial for successful AI implementations in this field.

1.2. Current Challenges in Genomic Analysis

Despite the advancements in genomic data processing, several challenges persist in the field of genomic analysis.

- Data Volume: The sheer volume of genomic data generated poses significant storage and processing challenges. Managing terabytes of data requires sophisticated infrastructure and resources.

- Data Quality: Ensuring the accuracy and reliability of genomic data is crucial. Errors in sequencing can lead to incorrect interpretations and conclusions, impacting research and clinical outcomes.

- Interpretation of Variants: Identifying and interpreting genetic variants remains a complex task. Many variants have unknown significance, making it difficult to determine their role in diseases.

- Integration of Multi-Omics Data: Combining genomic data with other omics data (like transcriptomics and proteomics) is essential for a comprehensive understanding of biological systems. However, integrating these diverse datasets presents technical and analytical challenges.

- Ethical and Privacy Concerns: The use of genomic data raises ethical issues, particularly regarding consent, data sharing, and privacy. Ensuring that individuals' genetic information is protected is paramount.

- Skill Gap: There is a shortage of trained professionals who can analyze and interpret genomic data effectively. Bridging this skill gap is essential for advancing genomic research and applications. Rapid Innovation addresses this challenge by providing consulting services that equip organizations with the necessary expertise and tools to navigate the complexities of genomic analysis.

1.3. Role of AI Agents in Modern Genomics

Artificial intelligence (AI) agents are playing an increasingly vital role in modern genomics, transforming how researchers analyze and interpret genomic data.

- Data Analysis: AI algorithms can process vast amounts of genomic data quickly and accurately, identifying patterns and correlations that may be missed by traditional methods.

- Predictive Modeling: Machine learning models can predict disease susceptibility based on genetic information, enabling personalized medicine approaches tailored to individual patients.

- Variant Classification: AI tools assist in the classification of genetic variants, helping researchers determine their potential impact on health and disease. This is particularly useful in clinical genomics.

- Drug Discovery: AI is being used to identify potential drug targets and predict the efficacy of new compounds based on genomic data, accelerating the drug discovery process.

- Automation: AI agents can automate repetitive tasks in genomic analysis, freeing up researchers to focus on more complex problems and enhancing overall productivity.

- Enhanced Collaboration: AI platforms facilitate collaboration among researchers by providing shared tools and resources, fostering innovation and accelerating discoveries in genomics.

The integration of AI in genomics is not just a trend; it is reshaping the landscape of genetic research and clinical applications, paving the way for more effective and personalized healthcare solutions. At Rapid Innovation, we are committed to harnessing the power of AI to help our clients overcome challenges in genomic analysis, ultimately driving greater ROI and advancing the field of genomics.

1.4. Overview of Key Technologies and Frameworks

Artificial Intelligence (AI) has become a transformative force in various fields, including genomics. The integration of AI technologies and frameworks is crucial for enhancing genomic research and applications. Here are some key technologies and frameworks that are shaping the landscape of AI in genomics:

- Machine Learning (ML): This subset of AI focuses on algorithms that allow computers to learn from and make predictions based on data. In genomics, ML is used for tasks such as gene expression analysis, variant calling, and predicting disease susceptibility. Rapid Innovation leverages ML to develop tailored solutions that help clients achieve significant improvements in their genomic research outcomes. Companies specializing in AI genomics are increasingly utilizing these techniques to drive innovation.

- Deep Learning (DL): A subset of ML, deep learning uses neural networks with many layers. It excels at processing large datasets, making it well suited to analyzing complex genomic data, such as whole-genome sequencing data. Our expertise in DL enables us to assist clients in extracting deeper insights from their genomic data, ultimately leading to better decision-making.

- Natural Language Processing (NLP): NLP techniques are employed to extract meaningful information from unstructured data sources, such as scientific literature and clinical notes. This helps in identifying relevant genomic information and trends. Rapid Innovation utilizes NLP to enhance data interpretation, allowing clients to stay ahead in their research endeavors. The integration of NLP in genomics is essential for understanding the vast amount of literature related to artificial intelligence in clinical and genomic diagnostics.

- Bioinformatics Tools: Various bioinformatics frameworks, such as Bioconductor and Galaxy, provide essential tools for data analysis and visualization in genomics. These platforms facilitate the integration of AI algorithms with genomic data. We assist clients in implementing these tools effectively, ensuring they maximize their research capabilities. The use of bioinformatics tools is critical for companies focused on AI in genomics.

- Cloud Computing: The scalability and flexibility of cloud computing enable researchers to store and analyze vast amounts of genomic data efficiently. Platforms like AWS and Google Cloud offer specialized services for genomic data processing. Rapid Innovation helps clients harness cloud solutions to optimize their data management and analysis processes, leading to enhanced operational efficiency. The cloud computing landscape is vital for the growth of AI in genomics.

- Data Integration Frameworks: Tools like Apache Spark (distributed data processing) and TensorFlow Extended (TFX, for building end-to-end ML pipelines) help combine diverse data sources, making it possible to analyze genomic data alongside clinical and environmental data. Our expertise in these frameworks enables clients to create comprehensive datasets that drive more accurate insights.

- Genomic Databases: Resources such as The Cancer Genome Atlas (TCGA) and the Genome Aggregation Database (gnomAD) provide extensive datasets that can be leveraged by AI algorithms for research and clinical applications. Rapid Innovation guides clients in utilizing these databases effectively, ensuring they derive maximum value from their genomic research. The availability of genomic databases is a cornerstone for AI in genomics, enabling researchers to access critical information.

These technologies and frameworks collectively enhance the capabilities of AI in genomics, leading to improved diagnostics, personalized medicine, and a deeper understanding of genetic diseases. The intersection of artificial intelligence and genomics is paving the way for innovative solutions in healthcare.

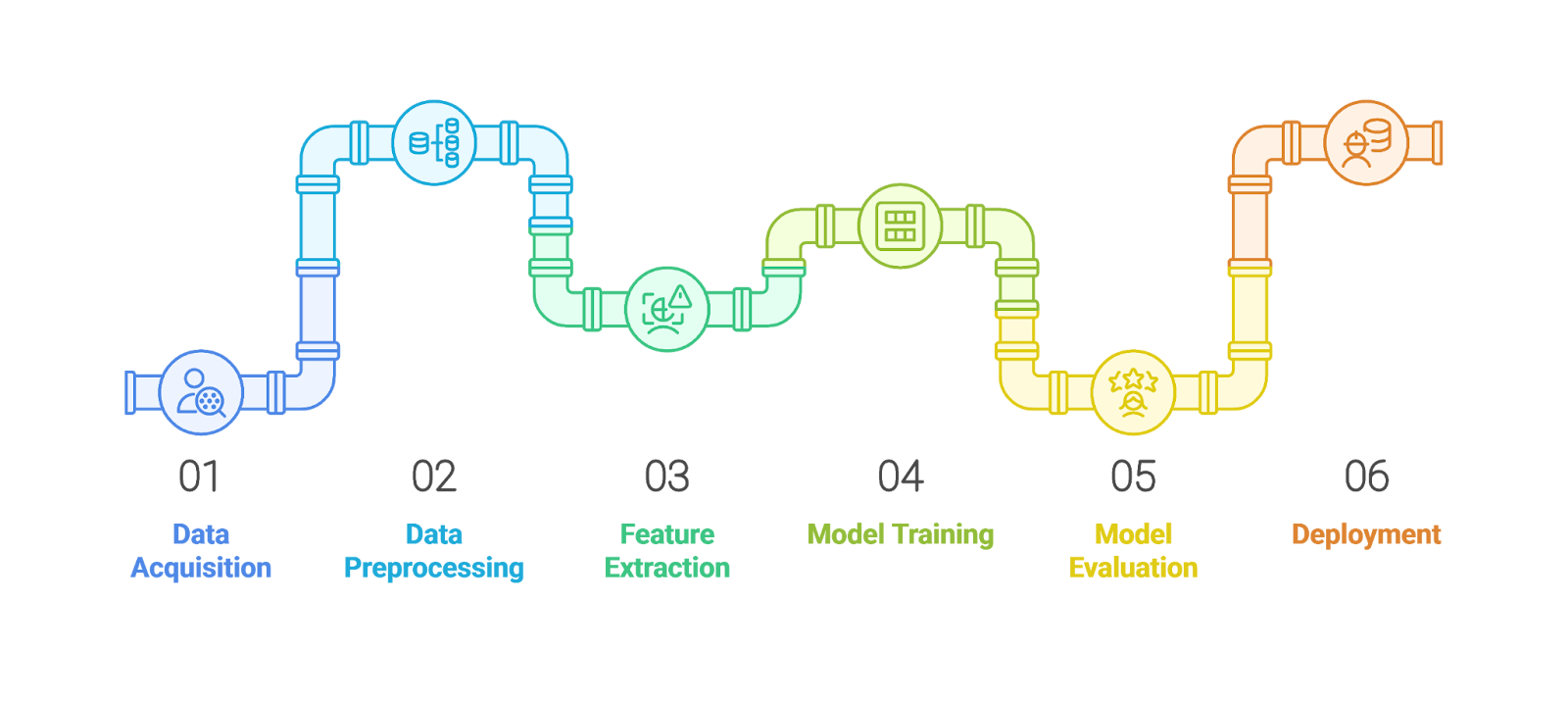

2. Fundamental Components of AI Agents in Genomics

AI agents in genomics are designed to perform specific tasks that enhance genomic research and clinical applications. Understanding the fundamental components of these agents is essential for leveraging their capabilities effectively. The key components include:

- Data Acquisition: This involves collecting genomic data from various sources, including sequencing technologies, clinical databases, and public repositories. High-quality data is crucial for training AI models. The role of AI in genomics is to streamline this process and ensure data integrity.

- Data Preprocessing: Raw genomic data often contains noise and inconsistencies. Preprocessing steps, such as normalization, filtering, and transformation, are necessary to prepare the data for analysis. Effective preprocessing is vital for the success of AI in genomics applications.

- Feature Extraction: Identifying relevant features from genomic data is critical for model performance. Techniques such as dimensionality reduction and selection algorithms help in isolating significant variables that influence outcomes. The application of machine learning and genomics is particularly relevant in this phase.

- Model Training: AI agents utilize machine learning algorithms to learn patterns from the processed data. This phase involves selecting appropriate models, tuning hyperparameters, and validating performance using training and test datasets. The training of models is a crucial step in the development of AI in genomics.

- Model Evaluation: After training, models must be evaluated to ensure their accuracy and reliability. Metrics such as precision, recall, and F1-score are commonly used to assess model performance in genomic applications. The evaluation process is essential for ensuring the effectiveness of AI in genomics.

- Deployment: Once validated, AI models can be deployed in clinical settings or research environments. This may involve integrating the models into existing workflows or developing user-friendly interfaces for researchers and clinicians. The deployment of AI solutions in genomics is a key factor in translating research into practice.

- Continuous Learning: AI agents should be designed to adapt and improve over time. Continuous learning mechanisms allow models to update their knowledge base as new genomic data becomes available, ensuring they remain relevant and effective. This adaptability is crucial for the ongoing evolution of AI in genomics.
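The evaluation metrics named above can be computed directly from predicted and true labels. The following is a minimal sketch in plain Python; the function name `precision_recall_f1` and the example labels (1 for a pathogenic variant, 0 for a benign one) are illustrative assumptions, not taken from any particular genomics toolkit.

```python
def precision_recall_f1(y_true, y_pred, positive=1):
    """Compute precision, recall, and F1-score for one positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)  # true positives
    fp = sum(1 for t, p in pairs if t != positive and p == positive)  # false positives
    fn = sum(1 for t, p in pairs if t == positive and p != positive)  # false negatives
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


# Hypothetical labels: 1 = pathogenic variant, 0 = benign variant.
y_true = [1, 1, 1, 0, 0, 0, 1, 0]
y_pred = [1, 0, 1, 0, 1, 0, 1, 0]
precision, recall, f1 = precision_recall_f1(y_true, y_pred)
print(precision, recall, f1)
```

In clinical settings the two error types rarely carry equal cost, so precision and recall are usually reported separately rather than only as a combined F1-score.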

2.1. Data Processing Architecture

The data processing architecture for AI agents in genomics is a critical component that determines how genomic data is handled, analyzed, and utilized. A well-structured architecture ensures efficient data flow and processing, enabling the effective application of AI techniques. Key elements of this architecture include:

- Data Ingestion: This is the initial step where genomic data is collected from various sources. It can include high-throughput sequencing data, clinical records, and public genomic databases. The role of AI in genomics is to facilitate this ingestion process.

- Data Storage: Efficient storage solutions are necessary to handle the large volumes of genomic data. Options include relational databases, NoSQL databases, and cloud storage solutions that provide scalability and accessibility. The storage of genomic data is a foundational aspect of AI in genomics.

- Data Processing Pipeline: A robust processing pipeline is essential for transforming raw data into usable formats. This pipeline typically includes:

  - Data Cleaning: Removing errors and inconsistencies from the data.

  - Data Transformation: Converting data into a suitable format for analysis, such as converting sequence data into numerical representations.

  - Data Integration: Combining data from multiple sources to create a comprehensive dataset for analysis. The integration of diverse data sources is a hallmark of AI in genomics.

- Computational Resources: High-performance computing resources, including GPUs and distributed computing frameworks, are often required to process large genomic datasets efficiently. The computational demands of AI in genomics necessitate robust infrastructure.

- Analysis and Modeling: This stage involves applying AI algorithms to the processed data. It includes model training, evaluation, and optimization to ensure accurate predictions and insights. The analysis phase is where the true power of AI in genomics is realized.

- Visualization and Reporting: Effective visualization tools are necessary for interpreting genomic data and AI model outputs. Dashboards and reporting tools help researchers and clinicians understand the results and make informed decisions. Visualization is a critical component of communicating findings in AI and genomics.

- Feedback Loop: Incorporating a feedback mechanism allows for continuous improvement of the data processing architecture. This can involve updating algorithms based on new findings or user feedback to enhance performance. The feedback loop is essential for the iterative nature of AI in genomics.
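As an illustration of the data transformation step, one common way to turn sequence data into a numerical representation is one-hot encoding. The sketch below assumes a four-letter DNA alphabet in A, C, G, T order and encodes ambiguous bases such as N as all zeros; both choices are assumptions made for this example.

```python
BASES = "ACGT"  # assumed column order for the encoding


def one_hot_encode(sequence):
    """Map a DNA string to a list of 4-element vectors (A, C, G, T).

    Bases outside the alphabet (for example N) become all-zero vectors.
    """
    return [[1 if base == b else 0 for b in BASES] for base in sequence.upper()]


matrix = one_hot_encode("ACGTN")
for base, row in zip("ACGTN", matrix):
    print(base, row)
```

The resulting matrix has one row per base and one column per nucleotide, which is the input shape most sequence models expect.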

By establishing a comprehensive data processing architecture, AI agents in genomics can effectively analyze complex datasets, leading to significant advancements in genomic research and personalized medicine. Rapid Innovation is committed to providing the expertise and solutions necessary for clients to achieve these advancements efficiently and effectively.

2.1.1. Raw Data Preprocessing

Raw data preprocessing is a critical step in data analysis and machine learning. It involves transforming raw data into a clean and usable format, which is essential for ensuring the accuracy and reliability of the results derived from data analysis. At Rapid Innovation, we leverage our expertise in AI to streamline this process, enabling clients to achieve greater ROI through enhanced data quality.

- Data Cleaning: This involves identifying and correcting errors or inconsistencies in the data. Common tasks include:

- Removing duplicates

- Filling in missing values

- Correcting typos or formatting issues

- Data Transformation: This step modifies the data into a suitable format for analysis. Techniques include:

- Aggregating data to summarize information

- Encoding categorical variables into numerical formats

- Scaling numerical values to a standard range

- Data Integration: Combining data from different sources can provide a more comprehensive view. This may involve:

- Merging datasets from various databases

- Ensuring consistency in data formats across sources

- Data Reduction: Reducing the volume of data while maintaining its integrity is crucial. Methods include:

- Dimensionality reduction techniques like PCA (Principal Component Analysis)

- Sampling methods to select a representative subset of the data

Effective raw data preprocessing can significantly enhance the quality of insights derived from data analysis, leading to better decision-making and ultimately driving business success.
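The cleaning and transformation steps described above can be sketched in a few lines of plain Python. The record layout (`sample_id`, `tissue`, `expression`) and the choice of median imputation are hypothetical and chosen only for illustration; a real pipeline would pick an imputation strategy suited to the assay.

```python
from statistics import median


def preprocess(records):
    """Deduplicate, impute missing values, and encode a categorical field."""
    # 1. Remove duplicates, keeping the first record seen for each sample ID.
    seen, unique = set(), []
    for rec in records:
        if rec["sample_id"] not in seen:
            seen.add(rec["sample_id"])
            unique.append(dict(rec))  # copy so the input is not mutated

    # 2. Fill missing expression values with the median of the observed ones.
    observed = [r["expression"] for r in unique if r["expression"] is not None]
    fill_value = median(observed)
    for r in unique:
        if r["expression"] is None:
            r["expression"] = fill_value

    # 3. Encode the categorical tissue label as an integer code.
    #    Integer codes imply an ordering; one-hot encoding avoids that.
    codes = {t: i for i, t in enumerate(sorted({r["tissue"] for r in unique}))}
    for r in unique:
        r["tissue_code"] = codes[r["tissue"]]
    return unique


# Hypothetical input records.
records = [
    {"sample_id": "S1", "tissue": "liver", "expression": 2.0},
    {"sample_id": "S2", "tissue": "blood", "expression": None},
    {"sample_id": "S1", "tissue": "liver", "expression": 2.0},  # duplicate
    {"sample_id": "S3", "tissue": "liver", "expression": 6.0},
]
clean = preprocess(records)
print(clean)
```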

2.1.2. Quality Control Systems

Quality control systems are essential for maintaining the integrity and reliability of data throughout its lifecycle. These systems ensure that data meets predefined standards and is suitable for analysis. Rapid Innovation implements robust quality control measures to help clients maintain high data standards, which is vital for informed decision-making.

- Data Validation: This process checks the accuracy and quality of data before it is used. Key aspects include:

- Implementing validation rules to catch errors

- Using automated tools to flag inconsistencies

- Monitoring and Auditing: Regular monitoring of data processes helps identify issues early. This includes:

- Conducting periodic audits of data sources

- Tracking changes and updates to data over time

- Error Reporting and Correction: Establishing a system for reporting and correcting errors is vital. This can involve:

- Creating a feedback loop for users to report discrepancies

- Implementing corrective actions to address identified issues

- Standard Operating Procedures (SOPs): Developing SOPs for data handling ensures consistency and quality. This includes:

- Defining roles and responsibilities for data management

- Outlining procedures for data entry, processing, and storage

Implementing robust quality control systems can lead to higher data accuracy, which is crucial for effective analysis and decision-making.
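For sequencing data, a validation rule often takes the form of a read-level quality filter. The sketch below assumes FASTQ-style quality strings in the common Phred+33 encoding; the thresholds (`min_mean_quality=20`, `max_n_fraction=0.1`) are illustrative defaults, not recommended values.

```python
def phred_scores(quality_string, offset=33):
    """Decode a FASTQ quality string (Phred+33 by default) into integer scores."""
    return [ord(ch) - offset for ch in quality_string]


def passes_qc(sequence, quality_string, min_mean_quality=20, max_n_fraction=0.1):
    """Validation rule: reject reads with low mean quality or too many N calls."""
    if not sequence or len(sequence) != len(quality_string):
        return False  # malformed record
    scores = phred_scores(quality_string)
    mean_quality = sum(scores) / len(scores)
    n_fraction = sequence.upper().count("N") / len(sequence)
    return mean_quality >= min_mean_quality and n_fraction <= max_n_fraction


# Hypothetical reads as (sequence, quality string) pairs.
reads = [
    ("ACGTACGT", "IIIIIIII"),  # 'I' encodes Phred 40: passes
    ("ACGTNNNN", "IIIIIIII"),  # half the bases are N: fails
    ("ACGTACGT", "########"),  # '#' encodes Phred 2: fails
]
kept = [seq for seq, qual in reads if passes_qc(seq, qual)]
print(kept)
```

Logging which rule rejected each read, rather than only dropping it, supports the auditing and error-reporting practices listed above.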

2.1.3. Data Normalization Techniques

Data normalization techniques are essential for preparing data for analysis, particularly in machine learning. Normalization ensures that different features contribute equally to the analysis, preventing bias towards certain variables. At Rapid Innovation, we utilize these techniques to enhance the performance of AI models, ensuring our clients achieve optimal results.

- Min-Max Normalization: This technique rescales the data to a fixed range, typically [0, 1]. It is useful for:

- Ensuring that all features have the same scale

- Making it easier to compare different features

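- Z-Score Normalization: Also known as standardization, this method transforms data to have a mean of 0 and a standard deviation of 1. It does not change the shape of the distribution. Benefits include:

- Putting features measured in different units on a comparable scale

- Avoiding a fixed output range, so a single extreme value does not compress all other values into a narrow band (although the mean and standard deviation are themselves affected by outliers)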

- Decimal Scaling: This technique involves moving the decimal point of values to normalize the data. It is particularly useful for:

- Simplifying the representation of large numbers

- Ensuring that all values fall within a specific range

- Robust Scaler: This method uses the median and interquartile range for normalization, making it less sensitive to outliers. It is beneficial for:

- Maintaining the integrity of data with extreme values

- Providing a more accurate representation of the central tendency

Utilizing appropriate data normalization techniques can enhance the performance of machine learning models and improve the interpretability of results, ultimately leading to greater ROI for our clients.
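To make the differences between these techniques concrete, here is a minimal sketch of three of them using only the Python standard library. The function names are illustrative, and the code assumes the input is not constant (otherwise the denominators would be zero).

```python
from statistics import mean, median, pstdev, quantiles


def min_max(values):
    """Rescale values linearly to the range [0, 1]."""
    lo, hi = min(values), max(values)
    return [(v - lo) / (hi - lo) for v in values]


def z_score(values):
    """Shift to mean 0 and scale to (population) standard deviation 1."""
    mu, sigma = mean(values), pstdev(values)
    return [(v - mu) / sigma for v in values]


def robust_scale(values):
    """Center on the median and scale by the interquartile range."""
    q1, _, q3 = quantiles(values, n=4, method="inclusive")
    med = median(values)
    return [(v - med) / (q3 - q1) for v in values]


# Hypothetical measurements with one extreme value.
values = [1.0, 2.0, 3.0, 4.0, 100.0]
print(min_max(values))
print(z_score(values))
print(robust_scale(values))
```

With the outlier present, min-max scaling squeezes the first four values close to 0, while robust scaling keeps them evenly spread around 0. This is why the median and interquartile range are preferred when extreme values are expected.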

2.2. Machine Learning Components

Machine learning is a subset of artificial intelligence that enables systems to learn from data, identify patterns, and make decisions with minimal human intervention. The components of machine learning can be broadly categorized into various types, each serving a unique purpose in the data analysis process. Understanding these components is crucial for anyone looking to leverage machine learning in their projects, and Rapid Innovation is here to guide you through this journey to achieve your business goals efficiently and effectively.

- Data: The foundation of any machine learning model. The quality and quantity of data significantly impact the model's performance. At Rapid Innovation, we emphasize the importance of data quality and assist clients in curating and cleaning their datasets to maximize model efficacy.

- Algorithms: The mathematical procedures that process data and learn from it. Different algorithms are suited for different types of problems. Our team at Rapid Innovation employs a tailored approach, selecting the most appropriate algorithms based on the specific needs of your project to ensure optimal results. This includes techniques such as Principal Component Analysis (PCA), which is widely used for dimensionality reduction.

- Models: The output of the training process, representing the learned patterns from the data. We help clients develop robust models that can adapt to changing data landscapes, ensuring long-term value and return on investment (ROI). For instance, we apply PCA in Python as a preprocessing step to improve model performance.

- Features: The individual measurable properties or characteristics used by the model to make predictions. Our experts work closely with clients to identify and engineer the most relevant features, enhancing model performance and accuracy. PCA can help here by condensing many correlated features into a few informative components.

- Training and Testing: The process of training a model on a dataset and then testing its performance on unseen data. Rapid Innovation employs rigorous training and testing methodologies to validate model performance, ensuring that our clients can trust the insights generated.

2.2.1. Supervised Learning Models

Supervised learning is a type of machine learning where the model is trained on labeled data. This means that the input data is paired with the correct output, allowing the model to learn the relationship between the two. Supervised learning is widely used for classification and regression tasks.

- Classification: The task of predicting a categorical label, such as determining whether an email is spam or not. Rapid Innovation has successfully implemented classification models for various clients, enhancing their operational efficiency.

- Regression: The task of predicting a continuous value, for instance, predicting house prices based on various features like size and location. Our regression models have helped clients in real estate and finance make data-driven decisions that lead to increased profitability.

- Algorithms: Common algorithms include:

- Linear Regression

- Decision Trees

- Support Vector Machines (SVM)

- Neural Networks

- Applications: Supervised learning is used in various fields, including:

- Healthcare for disease diagnosis

- Finance for credit scoring

- Marketing for predicting customer churn

The effectiveness of supervised learning models largely depends on the quality of the labeled data. A well-labeled dataset can significantly enhance the model's accuracy and reliability, and at Rapid Innovation, we ensure that our clients have access to high-quality data for their projects.
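As a small worked example of supervised regression, the sketch below fits a one-feature linear regression by ordinary least squares and checks it on a held-out point. The data (gene copy number versus expression level) are invented for illustration, and the function name `fit_linear_regression` is an assumption of this example.

```python
def fit_linear_regression(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares (one feature)."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical labeled training data: copy number (input) -> expression (label).
train_x = [1, 2, 3, 4]
train_y = [2.1, 3.9, 6.1, 7.9]
slope, intercept = fit_linear_regression(train_x, train_y)

# Evaluate on a held-out example that was not used for fitting.
test_x, test_y = 5, 10.0
prediction = slope * test_x + intercept
print(slope, intercept, prediction, abs(prediction - test_y))
```

The same fit-then-test pattern applies to the other algorithms listed; only the model family and the fitting procedure change.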

2.2.2. Unsupervised Learning Approaches

Unsupervised learning, in contrast to supervised learning, deals with unlabeled data. The model attempts to learn the underlying structure of the data without any explicit instructions on what to predict. This approach is particularly useful for exploratory data analysis and pattern recognition.

- Clustering: The process of grouping similar data points together. Common algorithms include:

- K-Means

- Hierarchical Clustering

- DBSCAN

- Dimensionality Reduction: Techniques used to reduce the number of features in a dataset while preserving its essential characteristics. Popular methods include:

- Principal Component Analysis (PCA)

- Kernel PCA

- t-Distributed Stochastic Neighbor Embedding (t-SNE)

- Anomaly Detection: Identifying unusual data points that do not fit the expected pattern. This is crucial in fraud detection and network security, areas where Rapid Innovation has delivered significant value to clients.

- Applications: Unsupervised learning is widely applied in various domains, such as:

- Market basket analysis in retail

- Customer segmentation in marketing

- Feature extraction from image data using PCA

- Image compression in computer vision

Unsupervised learning is particularly valuable when labeled data is scarce or expensive to obtain. It allows organizations to uncover hidden patterns and insights from their data, leading to more informed decision-making. At Rapid Innovation, we leverage these techniques to help our clients gain a competitive edge in their respective markets, for example by using the eigenvectors and eigenvalues that underlie PCA to find the main directions of variation in a dataset.
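The clustering idea can be illustrated with a compact K-Means implementation in plain Python. To keep the result reproducible, this sketch initializes the centroids with the first `k` points, which is an assumption of the example; practical implementations usually use random or k-means++ initialization. The sample points are hypothetical two-dimensional profiles.

```python
def squared_distance(p, q):
    """Squared Euclidean distance between two points."""
    return sum((a - b) ** 2 for a, b in zip(p, q))


def nearest(point, centroids):
    """Index of the centroid closest to the given point."""
    distances = [squared_distance(point, c) for c in centroids]
    return distances.index(min(distances))


def k_means(points, k, iterations=10):
    """Cluster points into k groups; returns (labels, centroids)."""
    centroids = [list(p) for p in points[:k]]  # deterministic init (assumption)
    for _ in range(iterations):
        # Assignment step: attach every point to its nearest centroid.
        clusters = [[] for _ in range(k)]
        for p in points:
            clusters[nearest(p, centroids)].append(p)
        # Update step: move each centroid to the mean of its members.
        for i, members in enumerate(clusters):
            if members:
                centroids[i] = [sum(dim) / len(members) for dim in zip(*members)]
    labels = [nearest(p, centroids) for p in points]
    return labels, centroids


# Hypothetical unlabeled samples forming two obvious groups.
points = [(1.0, 1.0), (9.0, 9.0), (1.2, 0.8), (8.8, 9.2), (0.8, 1.2), (9.2, 8.8)]
labels, centroids = k_means(points, k=2)
print(labels, centroids)
```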

2.2.3. Deep Learning Architectures

Deep learning architectures are a subset of machine learning that utilize neural networks with multiple layers to analyze various types of data. These architectures have gained significant traction in recent years due to their ability to model complex patterns and relationships in large datasets, providing businesses with the tools to enhance decision-making and operational efficiency.

- Convolutional Neural Networks (CNNs):

- Primarily used for image processing tasks.

- Effective in identifying spatial hierarchies in data.

- Commonly applied in genomics for analyzing genomic sequences and microscopy images, enabling researchers to derive insights that can lead to breakthroughs in personalized medicine. VGG16 and VGG19 are popular examples of CNN architectures used in various applications.

- Recurrent Neural Networks (RNNs):

- Designed for sequential data analysis.

- Useful in tasks where context and order matter, such as time series data or natural language.

- In genomics, RNNs can be employed to predict gene expression levels based on previous sequences, allowing for more accurate forecasting of biological behaviors. The recurrent architecture, which carries state from one position to the next, is well suited to these tasks.

- Generative Adversarial Networks (GANs):

- Comprise two neural networks, a generator and a discriminator, that work against each other.

- Effective in generating new data samples that resemble the training data.

- In genomics, GANs can be used to synthesize new genomic sequences for research purposes, thus accelerating the pace of discovery and innovation. Variational autoencoders (VAEs) are another generative model that can be used in similar contexts.

- Transformer Models:

- Utilize self-attention mechanisms to process data in parallel.

- Highly effective in natural language processing tasks.

- Their application in genomics is emerging, particularly in understanding complex relationships in genomic data, which can lead to more informed research outcomes. Alongside transformers, CNN architectures such as Inception v3 are also being explored for genomic applications.

Deep learning architectures such as residual networks (for example, ResNet-18) are transforming the landscape of genomic research by enabling more accurate predictions and insights from vast amounts of data, ultimately helping organizations achieve greater ROI through enhanced research capabilities and faster time-to-market for new therapies.
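The core operation of a CNN applied to sequence data is a one-dimensional convolution that slides a small filter along the sequence. The sketch below shows that operation in plain Python with a ReLU activation. The filter weights are set by hand to match the motif TATA purely for illustration; in a trained network these weights would be learned from data.

```python
BASES = "ACGT"


def one_hot(seq):
    """One-hot encode a DNA string as rows of [A, C, G, T] indicators."""
    return [[1.0 if base == b else 0.0 for b in BASES] for base in seq]


def conv1d(encoded, kernel):
    """Slide the kernel along the sequence; apply ReLU to each window sum."""
    width = len(kernel)
    activations = []
    for start in range(len(encoded) - width + 1):
        total = 0.0
        for offset in range(width):
            row, weights = encoded[start + offset], kernel[offset]
            total += sum(x * w for x, w in zip(row, weights))
        activations.append(max(0.0, total))  # ReLU
    return activations


# Hand-set filter (assumption): scores +1 for each base matching "TATA".
kernel = one_hot("TATA")
activations = conv1d(one_hot("GCTATAGC"), kernel)
best_position = activations.index(max(activations))  # global max pooling
print(activations, best_position)
```

The activation peaks where the window matches the motif exactly, which is how convolutional filters act as position-independent motif detectors.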

2.3. Natural Language Processing for Genomic Literature

Natural Language Processing (NLP) is a field of artificial intelligence that focuses on the interaction between computers and human language. In the context of genomic literature, NLP plays a crucial role in extracting meaningful information from vast amounts of unstructured text data, thereby streamlining research processes.

- Information Extraction:

- NLP techniques can identify and extract relevant information from scientific papers, such as gene names, mutations, and disease associations. This helps researchers quickly gather insights from literature without manually reading each paper, saving time and resources.

- Text Mining:

- NLP enables the analysis of large volumes of genomic literature to identify trends, relationships, and gaps in research. Text mining tools can summarize findings, making it easier for researchers to stay updated on the latest developments and focus on high-impact areas.

- Sentiment Analysis:

- NLP can assess the sentiment of research articles, helping to gauge the scientific community's perception of specific genomic studies or findings. This can inform funding decisions and research directions, ensuring that investments are aligned with promising areas of study.

- Ontology Development:

- NLP aids in the creation of ontologies that standardize terminology in genomics, facilitating better data sharing and collaboration. Ontologies help in organizing knowledge and improving the interoperability of genomic databases, which is essential for collaborative research efforts.

By leveraging NLP, researchers can enhance their understanding of genomic literature, leading to more informed decisions and innovative discoveries that drive business growth and improve patient outcomes.
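A very simple form of information extraction can be sketched with regular expressions and a gene lexicon. This is a rule-based illustration, not a trained NLP model. The lexicon `KNOWN_GENES`, the variant pattern (a letter, digits, a letter, as in V600E), and the sample sentence are all assumptions made for this example.

```python
import re

# Hypothetical lexicon; a real system would load a curated gene list.
KNOWN_GENES = {"BRAF", "TP53", "EGFR"}

# Matches protein-level substitutions such as "V600E" or "p.V600E".
VARIANT_PATTERN = re.compile(r"\b(?:p\.)?([A-Z]\d+[A-Z])\b")
# Candidate tokens: runs of two or more capital letters or digits.
TOKEN_PATTERN = re.compile(r"\b[A-Z0-9]{2,}\b")


def extract_mentions(text):
    """Return gene symbols and variant mentions found in a text."""
    tokens = TOKEN_PATTERN.findall(text)
    genes = sorted({t for t in tokens if t in KNOWN_GENES})
    variants = sorted(set(VARIANT_PATTERN.findall(text)))
    return {"genes": genes, "variants": variants}


abstract = (
    "The BRAF V600E mutation is common in melanoma, "
    "while EGFR L858R occurs in lung cancer."
)
mentions = extract_mentions(abstract)
print(mentions)
```

Pattern matching of this kind misses synonyms and context, which is where statistical and neural NLP models add value over hand-written rules.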

2.4. Visualization and Reporting Systems

Visualization and reporting systems are essential tools in genomics, enabling researchers to interpret complex data and communicate findings effectively. These systems transform raw data into visual formats that are easier to understand and analyze, thereby enhancing stakeholder engagement and decision-making.

- Data Visualization:

- Graphical representations of genomic data, such as heatmaps, scatter plots, and genome browsers, help researchers identify patterns and anomalies. Visualization tools can illustrate relationships between genes, mutations, and phenotypes, making it easier to draw conclusions that can inform strategic initiatives.

- Interactive Dashboards:

- These systems allow users to explore genomic data dynamically, providing filters and options to customize views. Interactive dashboards can facilitate real-time data analysis and collaboration among researchers, promoting a culture of innovation and agility.

- Reporting Tools:

- Automated reporting systems can generate comprehensive reports summarizing genomic findings, methodologies, and conclusions. These reports can be tailored for different audiences, including researchers, clinicians, and policymakers, ensuring that insights are communicated effectively.

- Integration with Other Systems:

- Visualization and reporting systems can integrate with databases and analytical tools, streamlining workflows. This integration enhances data accessibility and promotes collaboration across research teams, ultimately driving advancements in genomics research and personalized medicine.

Effective visualization and reporting systems are vital for translating complex genomic data into actionable insights, ultimately helping organizations achieve their business goals efficiently and effectively.

3. Core AI Technologies in Genomic Processing

The integration of artificial intelligence (AI) in genomic processing has revolutionized the field of genomics. Core AI technologies, particularly deep learning, have enabled researchers to analyze vast amounts of genomic data efficiently. This section delves into the significance of deep neural networks and their specific application through convolutional neural networks in genomic processing.

3.1. Deep Neural Networks

Deep neural networks (DNNs) are a class of machine learning models loosely inspired by the structure of the human brain. They consist of multiple layers of interconnected nodes (neurons) that process data in a hierarchical manner. DNNs are particularly effective in handling complex datasets, making them well suited to genomic processing.

DNNs can learn intricate patterns in genomic data, which traditional algorithms may overlook. They are capable of performing tasks such as classification, regression, and clustering, which are essential in genomics. Additionally, DNNs can process unstructured data, such as DNA sequences, allowing for more comprehensive analyses.

The application of DNNs in genomics has led to significant advancements, including improved accuracy in predicting genetic disorders, enhanced understanding of gene expression and regulation, and accelerated drug discovery processes by identifying potential drug targets. At Rapid Innovation, we leverage DNNs to help our clients achieve greater ROI by streamlining their genomic research and development processes, ultimately leading to faster and more accurate results.

3.1.1. Convolutional Neural Networks

Convolutional neural networks (CNNs) are a specialized type of deep neural network designed to process structured grid data, such as images. In genomic processing, CNNs have gained traction due to their ability to analyze and interpret complex biological data, including DNA sequences and protein structures.

CNNs utilize convolutional layers to automatically extract features from input data, reducing the need for manual feature engineering. They excel in recognizing spatial hierarchies, making them suitable for tasks like identifying motifs in DNA sequences.
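To make the motif-detection idea concrete, here is a small sketch of what a single convolutional filter does to a one-hot encoded DNA sequence. Everything in it is an assumption for illustration: the helper names (`one_hot`, `conv1d`, `relu`), the example sequence, and especially the filter weights, which are set by hand to match the motif `TATA`. In a trained CNN these weights would be learned from data.

```python
BASES = "ACGT"


def one_hot(seq):
    """Encode a DNA string as a list of 4-element one-hot vectors (order A, C, G, T)."""
    return [[1.0 if base == b else 0.0 for b in BASES] for base in seq.upper()]


def conv1d(encoded, kernel):
    """Slide a (width x 4) kernel along the sequence without padding and return one score per position."""
    width = len(kernel)
    scores = []
    for start in range(len(encoded) - width + 1):
        window = encoded[start:start + width]
        scores.append(
            sum(x * w for row, krow in zip(window, kernel) for x, w in zip(row, krow))
        )
    return scores


def relu(values):
    """Rectified linear activation: negative scores are clipped to zero."""
    return [max(0.0, v) for v in values]


# Hand-set filter that responds most strongly to "TATA" (an assumption, not a learned filter).
motif_kernel = one_hot("TATA")

sequence = "GCGTATAAGC"
activations = relu(conv1d(one_hot(sequence), motif_kernel))

# Position (0-based) where the filter fires most strongly.
best_position = max(range(len(activations)), key=activations.__getitem__)
print(best_position, activations)
```

The highest activation marks where the window matches the filter best, which is how a convolutional layer localizes a motif without manual feature engineering.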

Key applications of CNNs in genomic processing include:

- Genomic Sequence Analysis: CNNs can analyze DNA sequences to identify patterns associated with specific traits or diseases. This capability is crucial for personalized medicine and understanding genetic predispositions.

- Protein Structure Prediction: CNN-based models have been used to help predict the three-dimensional structure of proteins from their amino acid sequences. This is vital for drug design and understanding biological functions.

- Image Analysis in Genomics: CNNs are also used in analyzing genomic images, such as those from microscopy or sequencing technologies. They can help in identifying cellular structures or anomalies in tissue samples.

The effectiveness of CNNs in genomic processing is supported by their ability to handle large datasets and their robustness in feature extraction. As genomic data continues to grow exponentially, the role of CNNs in this field is expected to expand, leading to more breakthroughs in understanding genetic information and its implications for health and disease. Rapid Innovation is committed to harnessing the power of CNNs to provide our clients with innovative solutions that enhance their genomic research capabilities and drive significant returns on investment.

3.1.2. Recurrent Neural Networks

Recurrent Neural Networks (RNNs) are a class of artificial neural networks designed for processing sequential data. Unlike traditional feedforward neural networks, RNNs have connections that loop back on themselves, allowing them to maintain a form of memory. This characteristic makes RNNs particularly effective for tasks involving time series data, natural language processing, and other sequential inputs.

- Key Features of RNNs:

- Memory: RNNs can remember previous inputs due to their internal state, which is updated at each time step.

- Sequence Processing: They excel in tasks where the order of data points is crucial, such as speech recognition and language translation.

- Variable Input Length: RNNs can handle input sequences of varying lengths, making them versatile for different applications.

- Types of RNNs:

- Vanilla RNNs: The basic form of RNNs, which can struggle with long-term dependencies due to issues like vanishing gradients.

- Long Short-Term Memory (LSTM): A specialized type of RNN designed to remember information for longer periods, effectively addressing the vanishing gradient problem.

- Gated Recurrent Units (GRU): A simpler alternative to LSTMs, GRUs also manage long-term dependencies but with fewer parameters.
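The memory and variable-length properties listed above can be illustrated with a bare-bones vanilla RNN forward pass, in which the hidden state is updated as h_t = tanh(W_x x_t + W_h h_(t-1) + b). This is only a sketch: the weights are arbitrary fixed numbers rather than trained parameters, and the names `encode` and `rnn_forward` are hypothetical.

```python
import math

BASES = "ACGT"


def encode(seq):
    """One-hot encode a DNA string (order A, C, G, T)."""
    return [[1.0 if base == b else 0.0 for b in BASES] for base in seq.upper()]


def rnn_forward(inputs, w_x, w_h, bias):
    """Run a vanilla RNN over a sequence and return the final hidden state."""
    hidden = [0.0] * len(bias)
    for x in inputs:
        new_hidden = []
        for j in range(len(bias)):
            total = bias[j]
            total += sum(w_x[j][i] * x[i] for i in range(len(x)))            # input contribution
            total += sum(w_h[j][k] * hidden[k] for k in range(len(hidden)))  # memory contribution
            new_hidden.append(math.tanh(total))
        hidden = new_hidden
    return hidden


# Arbitrary illustrative weights: 4 inputs, 2 hidden units (not trained).
W_X = [[0.5, -0.5, 0.3, -0.3], [0.1, 0.2, -0.2, -0.1]]
W_H = [[0.4, -0.1], [0.2, 0.3]]
BIAS = [0.0, 0.0]

state_forward = rnn_forward(encode("ACGT"), W_X, W_H, BIAS)
state_reversed = rnn_forward(encode("TGCA"), W_X, W_H, BIAS)
state_longer = rnn_forward(encode("ACGTAC"), W_X, W_H, BIAS)
print(state_forward, state_reversed, state_longer)
```

The same bases in a different order yield a different final state, and the same function handles inputs of any length, which is what distinguishes a recurrent model from a fixed-size feedforward one.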

RNNs have been widely used in various applications, including text generation, sentiment analysis, and time series forecasting. At Rapid Innovation, we leverage RNNs to develop tailored solutions that enhance user engagement and improve predictive capabilities, ultimately driving greater ROI for our clients. Additionally, RNNs can be applied in advanced analytics, such as predictive analytics and geospatial analytics, to extract insights from complex datasets.

3.1.3. Transformer Models

Transformer models have revolutionized the field of natural language processing (NLP) and beyond. Introduced in the 2017 paper "Attention Is All You Need," transformers utilize a mechanism called self-attention, allowing them to weigh the importance of different words in a sentence regardless of their position. This architecture has led to significant improvements in performance across various tasks.

- Key Features of Transformer Models:

- Self-Attention Mechanism: This allows the model to focus on relevant parts of the input sequence, enhancing context understanding.

- Parallelization: Unlike RNNs, transformers can process entire sequences simultaneously, leading to faster training times.

- Scalability: Transformers can be scaled up with more layers and parameters, resulting in models like BERT and GPT that achieve state-of-the-art results.

- Applications of Transformer Models:

- Language Translation: Transformers have set new benchmarks in translating text between languages.

- Text Summarization: They can condense lengthy articles into concise summaries while retaining essential information.

- Question Answering: Transformers excel in understanding context and providing accurate answers to user queries.
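The self-attention mechanism described above can be sketched in a few lines as scaled dot-product attention, softmax(QK^T / sqrt(d)) V. To keep the example short it makes a simplifying assumption: queries, keys, and values are all taken to be the input vectors themselves, whereas a real transformer applies learned projection matrices first. The function names and the toy token vectors are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x):
    """Scaled dot-product self-attention with Q = K = V = x (no learned projections)."""
    d = len(x[0])
    weights = []
    for query in x:
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(d)
            for key in x
        ]
        weights.append(softmax(scores))
    # Each output vector is a weighted average of all input vectors.
    outputs = [
        [sum(w * value[j] for w, value in zip(row, x)) for j in range(d)]
        for row in weights
    ]
    return outputs, weights


tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # toy 2-dimensional token embeddings
outputs, weights = self_attention(tokens)
print(weights)
```

Every row of attention weights is computed independently of the others, which is why the whole sequence can be processed in parallel rather than step by step as in an RNN.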

The impact of transformer models extends beyond NLP, influencing fields such as computer vision and reinforcement learning, showcasing their versatility and effectiveness. Rapid Innovation harnesses the power of transformer models to create advanced applications that enhance customer experiences and streamline operations, leading to improved business outcomes.

3.2. Advanced Analytics

Advanced analytics refers to the use of sophisticated techniques and tools to analyze data and extract valuable insights. This approach goes beyond traditional data analysis methods, incorporating machine learning, predictive modeling, and statistical algorithms to uncover patterns and trends. These tools underpin analytics strategies in areas ranging from marketing to large-scale (big data) analysis.

- Key Components of Advanced Analytics:

- Predictive Analytics: This involves using historical data to forecast future outcomes, helping organizations make informed decisions.

- Prescriptive Analytics: This goes a step further by recommending actions based on predictive insights, optimizing decision-making processes.

- Descriptive Analytics: This provides insights into past performance, helping organizations understand what happened and why.

- Benefits of Advanced Analytics:

- Improved Decision-Making: Organizations can make data-driven decisions, reducing reliance on intuition.

- Enhanced Operational Efficiency: By identifying inefficiencies and bottlenecks, businesses can streamline operations.

- Competitive Advantage: Companies leveraging advanced analytics can gain insights that lead to innovative products and services.

- Applications of Advanced Analytics:

- Customer Segmentation: Businesses can analyze customer data to identify distinct segments, tailoring marketing strategies accordingly.

- Fraud Detection: Advanced analytics can help detect unusual patterns indicative of fraudulent activities in real-time.

- Supply Chain Optimization: Organizations can forecast demand and optimize inventory levels, reducing costs and improving service levels.

Incorporating advanced analytics into business strategies can lead to significant improvements in performance and profitability, making it an essential component of modern data-driven organizations. At Rapid Innovation, we empower our clients to leverage advanced analytics for strategic decision-making, ultimately enhancing their operational effectiveness and driving higher returns on investment. This includes utilizing data and advanced analytics to inform business strategies and improve outcomes in various sectors, including healthcare and marketing.

3.2.1. Predictive Modeling

Predictive modeling is a statistical technique that uses historical data to forecast future outcomes. It is widely used across various industries, including finance, healthcare, marketing, and more. The primary goal of predictive modeling is to identify patterns and trends that can help organizations make informed decisions.

- Key components of predictive modeling include:

- Data collection: Gathering relevant historical data is crucial for building an effective model.

- Feature selection: Identifying the most significant variables that influence the outcome.

- Model selection: Choosing the appropriate algorithm, such as regression analysis, decision trees, or neural networks.

- Validation: Testing the model against a separate dataset to ensure its accuracy and reliability.

- Applications of predictive modeling:

- Customer segmentation: Businesses can predict customer behavior and tailor marketing strategies accordingly, leading to improved engagement and higher conversion rates.

- Risk assessment: Financial institutions use predictive models to evaluate the creditworthiness of applicants, reducing default rates and enhancing profitability.

- Healthcare outcomes: Predictive modeling helps in forecasting patient outcomes and optimizing treatment plans, ultimately improving patient care and reducing costs.
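The components listed above (data, model selection, validation) can be sketched with the simplest possible model: a least-squares line fitted on a training split and checked on held-out points. The data values below are made up purely for illustration, and the helper names are hypothetical; a real project would use far more data and usually a library implementation.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def mean_squared_error(xs, ys, slope, intercept):
    """Average squared difference between predictions and observed values."""
    return sum((slope * x + intercept - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Made-up historical observations that roughly follow y = 2x + 1.
xs = [1, 2, 3, 4, 5, 6, 7, 8]
ys = [3.1, 4.9, 7.2, 8.8, 11.1, 12.9, 15.0, 17.1]

# Hold out the last two points for validation.
train_x, train_y = xs[:6], ys[:6]
valid_x, valid_y = xs[6:], ys[6:]

slope, intercept = fit_line(train_x, train_y)
validation_mse = mean_squared_error(valid_x, valid_y, slope, intercept)
print(slope, intercept, validation_mse)
```

Scoring the model only on points it never saw during fitting is what the validation step is for: a low error on the training split alone says little about how well the model will forecast new cases.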

At Rapid Innovation, we leverage advanced predictive modeling techniques to help our clients achieve greater ROI by enabling data-driven decision-making and enhancing operational efficiency. Our expertise in machine learning ensures that organizations can harness the full potential of their data.

3.2.2. Pattern Recognition

Pattern recognition is a branch of machine learning that focuses on identifying regularities and patterns in data. It plays a crucial role in various applications, from image and speech recognition to fraud detection and medical diagnosis.

- Key aspects of pattern recognition include:

- Data preprocessing: Cleaning and transforming raw data into a suitable format for analysis.

- Feature extraction: Identifying the most relevant features that represent the data effectively.

- Classification: Assigning labels to data points based on learned patterns, using algorithms like support vector machines or neural networks.

- Common applications of pattern recognition:

- Image recognition: Used in facial recognition systems and autonomous vehicles, enhancing security and user experience.

- Speech recognition: Powers virtual assistants like Siri and Google Assistant, streamlining user interactions and improving accessibility.

- Medical diagnosis: Assists in identifying diseases from medical images or patient data, leading to timely interventions and better health outcomes.
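The feature-extraction and classification steps above can be sketched with a nearest-centroid classifier, used here in place of the support vector machines or neural networks mentioned in the text because it fits in a few lines. The features are simply the base composition of a DNA sequence; the training sequences, labels, and function names are all illustrative assumptions.

```python
BASES = "ACGT"


def composition(seq):
    """Feature extraction: fraction of each base (A, C, G, T) in the sequence."""
    seq = seq.upper()
    return [seq.count(b) / len(seq) for b in BASES]


def train_centroids(labeled_sequences):
    """Average the feature vectors of each class to obtain one centroid per label."""
    grouped = {}
    for seq, label in labeled_sequences:
        grouped.setdefault(label, []).append(composition(seq))
    return {
        label: [sum(col) / len(vectors) for col in zip(*vectors)]
        for label, vectors in grouped.items()
    }


def classify(seq, centroids):
    """Assign the label whose centroid is closest in squared Euclidean distance."""
    features = composition(seq)
    return min(
        centroids,
        key=lambda label: sum((f - c) ** 2 for f, c in zip(features, centroids[label])),
    )


# Tiny made-up training set.
training = [
    ("GCGCGGCC", "gc_rich"),
    ("CCGGGCGA", "gc_rich"),
    ("ATATTAAT", "at_rich"),
    ("TTAAATAG", "at_rich"),
]
centroids = train_centroids(training)
print(classify("GGCCGCAT", centroids), classify("AATTTAGA", centroids))
```

The same three-stage shape (preprocess, extract features, classify) carries over when the centroid rule is replaced by a more capable model.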

At Rapid Innovation, we implement cutting-edge pattern recognition solutions that empower businesses to automate processes, enhance customer experiences, and drive innovation. Our tailored approaches ensure that clients can effectively adapt to changing market demands.

3.2.3. Anomaly Detection

Anomaly detection, also known as outlier detection, is the process of identifying rare items, events, or observations that differ significantly from the majority of the data. It is crucial for various applications, including fraud detection, network security, and fault detection in manufacturing.

- Key elements of anomaly detection include:

- Data collection: Gathering a comprehensive dataset that includes both normal and anomalous instances.

- Model training: Using historical data to train models that can distinguish between normal and abnormal behavior.

- Evaluation: Assessing the model's performance using metrics like precision, recall, and F1 score.

- Applications of anomaly detection:

- Fraud detection: Financial institutions use anomaly detection to identify unusual transactions that may indicate fraud, protecting assets and maintaining trust.

- Network security: Monitoring network traffic to detect potential security breaches or attacks, safeguarding sensitive information.

- Predictive maintenance: Identifying equipment failures before they occur by detecting anomalies in sensor data, reducing downtime and maintenance costs.
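As a small sketch of an unsupervised detector together with the evaluation metrics named above, the code below flags readings whose modified z-score (based on the median and the median absolute deviation) exceeds a threshold, then computes precision, recall, and F1 against known labels. The readings, the labels, the threshold of 3.5, and the function names are assumptions chosen for illustration.

```python
import statistics


def mad_outliers(values, threshold=3.5):
    """Return indices whose modified z-score exceeds the threshold."""
    median = statistics.median(values)
    deviations = [abs(v - median) for v in values]
    mad = statistics.median(deviations)
    if mad == 0:
        return []  # no spread in the data, so nothing can be flagged this way
    return [
        i for i, v in enumerate(values)
        if abs(0.6745 * (v - median) / mad) > threshold
    ]


def precision_recall_f1(predicted, actual):
    """Compare predicted and true anomaly indices."""
    predicted, actual = set(predicted), set(actual)
    true_pos = len(predicted & actual)
    precision = true_pos / len(predicted) if predicted else 0.0
    recall = true_pos / len(actual) if actual else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Made-up sensor readings; indices 5 and 8 are the known anomalies.
readings = [10.1, 9.8, 10.0, 10.3, 9.9, 25.0, 10.2, 10.0, -4.0, 10.1]
known_anomalies = [5, 8]

flagged = mad_outliers(readings)
precision, recall, f1 = precision_recall_f1(flagged, known_anomalies)
print(flagged, precision, recall, f1)
```

The median-based score is used instead of the mean and standard deviation because extreme values inflate the latter and can hide the very outliers being sought.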

Anomaly detection techniques can be categorized into supervised, unsupervised, and semi-supervised methods. Each approach has its advantages and is chosen based on the specific requirements of the application. The ability to detect anomalies effectively is vital for maintaining security and operational efficiency in various domains. At Rapid Innovation, we provide robust anomaly detection solutions that enhance risk management and operational resilience for our clients.

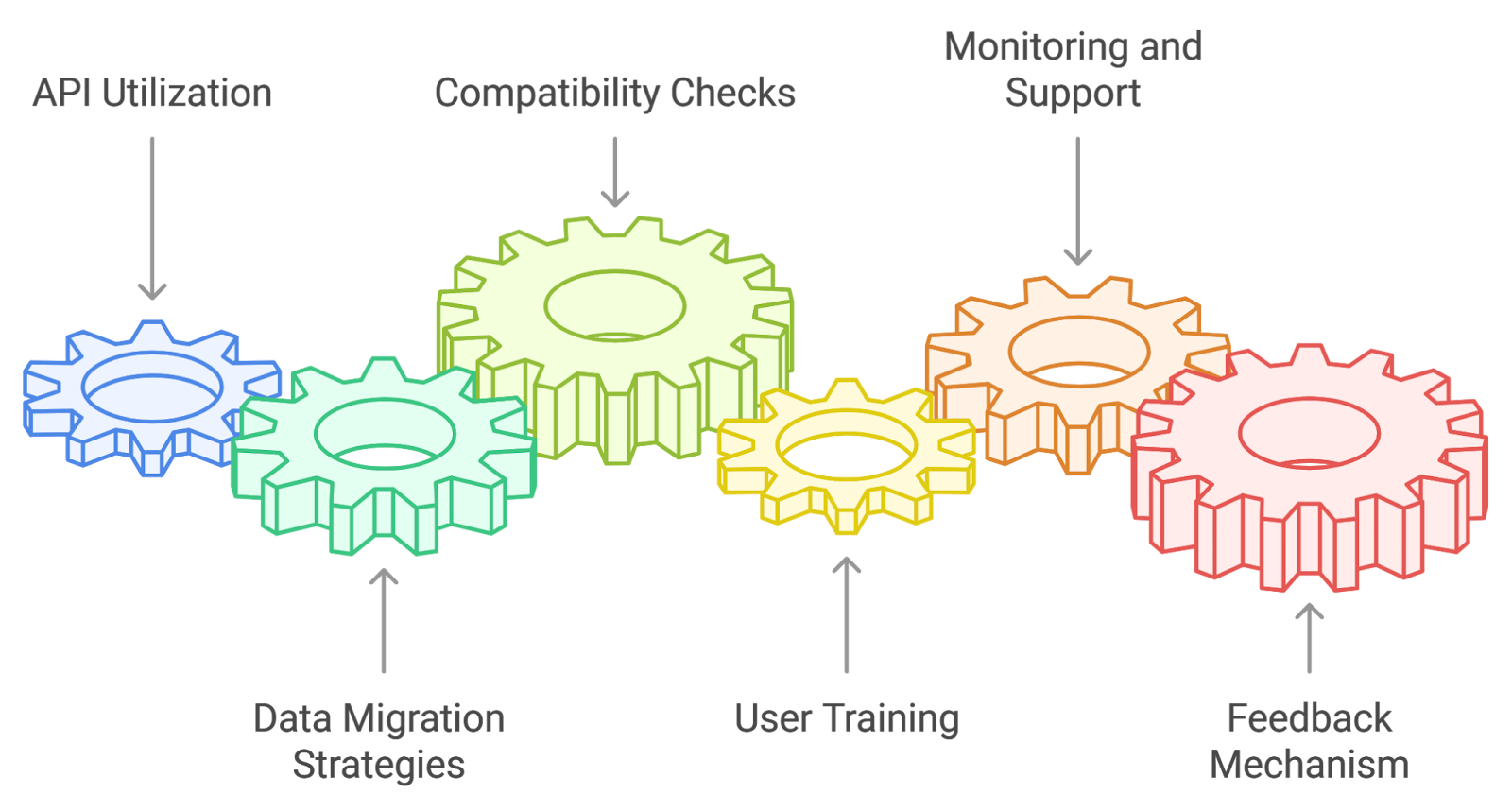

3.3. Integration with Bioinformatics Tools

The integration of bioinformatics tools with various biological and medical research processes is crucial for advancing our understanding of complex biological systems. This integration allows researchers to analyze large datasets efficiently and derive meaningful insights.

- Bioinformatics tools facilitate the analysis of genomic, proteomic, and metabolomic data, enabling researchers to identify patterns and correlations that would be difficult to discern manually.

- These tools often include software for sequence alignment, gene expression analysis, and structural bioinformatics, which are essential for interpreting biological data.

- By integrating bioinformatics tools with laboratory techniques, researchers can streamline workflows, reduce errors, and enhance reproducibility in experiments.

- The use of databases and algorithms in bioinformatics allows for the storage and retrieval of vast amounts of biological data, making it easier to conduct comparative studies and meta-analyses.

- Collaborative platforms that combine bioinformatics with machine learning and artificial intelligence are emerging, providing powerful resources for predictive modeling and hypothesis generation. Rapid Innovation specializes in developing such platforms, ensuring that our clients can leverage cutting-edge technology to enhance their research capabilities.

4. Key Benefits and Advantages

The integration of bioinformatics tools into research and clinical practices offers numerous benefits that enhance the overall effectiveness of scientific inquiry and medical diagnostics.

- Enhanced data management capabilities allow for the organization and analysis of large datasets.

- Improved collaboration among researchers through shared platforms and tools.

- Accelerated discovery of new biomarkers and therapeutic targets, leading to advancements in personalized medicine.

4.1. Improved Accuracy and Precision

One of the most significant advantages of integrating bioinformatics tools is the improved accuracy and precision in data analysis and interpretation.

- Bioinformatics tools utilize sophisticated algorithms that minimize human error, leading to more reliable results.

- High-throughput sequencing technologies generate vast amounts of data, and bioinformatics tools are essential for processing and analyzing this data accurately.

- The ability to perform complex statistical analyses helps in identifying significant biological signals amidst noise, enhancing the reliability of findings.

- Integration with machine learning techniques allows for the development of predictive models that can forecast outcomes with high accuracy. Rapid Innovation's expertise in AI ensures that our clients can harness these advanced techniques effectively.

- Improved accuracy in bioinformatics can lead to better clinical decision-making, as healthcare providers can rely on precise data for diagnosis and treatment planning.

By integrating bioinformatics tools into their workflows, researchers and clinicians can achieve a higher level of accuracy and precision, ultimately leading to more effective interventions and a deeper understanding of biological processes. Rapid Innovation is committed to helping clients navigate this complex landscape, ensuring they achieve greater ROI through innovative solutions tailored to their specific needs.

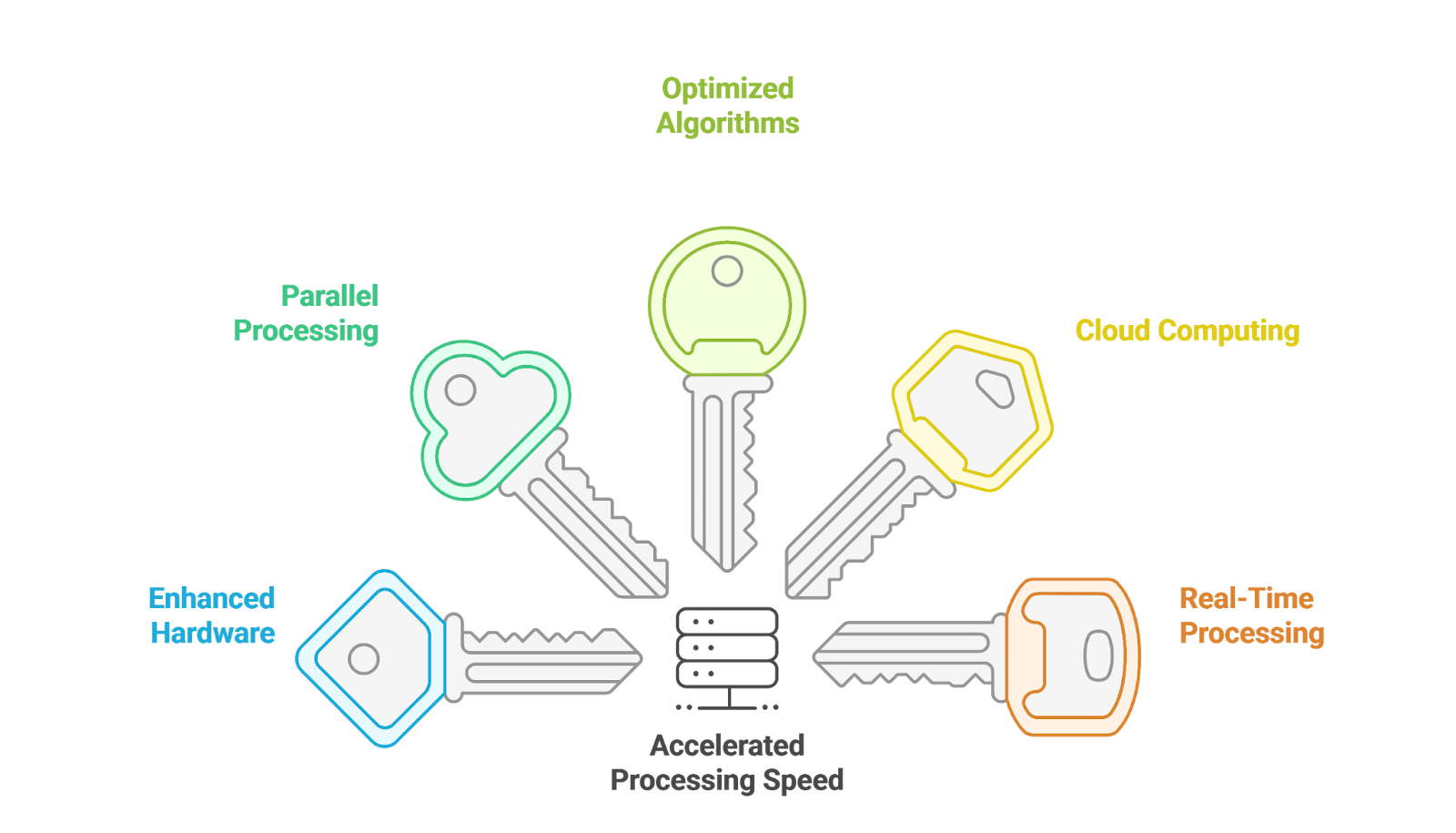

4.2. Accelerated Processing Speed

Accelerated processing speed is a critical factor in enhancing the efficiency of various systems, particularly in computing and data management. This speed is achieved through advancements in technology, including hardware improvements and optimized software algorithms. At Rapid Innovation, we leverage these advancements to help our clients achieve their business goals effectively.

- Enhanced hardware capabilities, such as multi-core processors and solid-state drives (SSDs), significantly boost processing speed, allowing businesses to handle larger datasets and complex computations with ease.

- Parallel processing allows multiple tasks to be executed simultaneously, reducing the time required for data processing. This is particularly beneficial for industries that rely on real-time analytics, such as finance and e-commerce.

- Optimized algorithms can streamline operations, leading to faster data retrieval and processing times. Our team specializes in developing custom algorithms tailored to specific business needs, ensuring maximum efficiency.

- Technologies like cloud computing enable on-demand resources, allowing for rapid scaling of processing power as needed. This flexibility is essential for businesses experiencing fluctuating workloads.

- Real-time data processing is becoming increasingly important in industries like finance and healthcare, where timely information is crucial. Rapid Innovation implements solutions that facilitate real-time insights, empowering clients to make informed decisions swiftly.

4.3. Cost Reduction

Cost reduction is a primary goal for businesses looking to improve their bottom line. By implementing efficient systems and technologies, organizations can significantly lower their operational costs. Rapid Innovation focuses on delivering solutions that drive cost efficiency for our clients.

- Automation of repetitive tasks reduces the need for manual labor, leading to lower labor costs. Our AI-driven automation tools help businesses streamline operations and minimize human error.

- Cloud services often provide a more cost-effective solution compared to traditional on-premises infrastructure, as they eliminate the need for extensive hardware investments. We guide clients in selecting the right cloud solutions that align with their budget and operational needs.

- Energy-efficient technologies can lead to substantial savings on utility bills, especially in data centers and large-scale operations. Our consulting services include recommendations for energy-efficient practices that can enhance sustainability and reduce costs.

- Outsourcing non-core functions can help businesses focus on their primary objectives while reducing overhead costs. We assist clients in identifying functions that can be outsourced effectively, allowing them to concentrate on growth.

- Streamlined supply chain management can minimize waste and improve resource allocation, further driving down costs. Our blockchain solutions enhance transparency and efficiency in supply chains, leading to significant cost savings.

4.4. Scalability and Flexibility

Scalability and flexibility are essential attributes for modern businesses, allowing them to adapt to changing market demands and growth opportunities. Rapid Innovation provides scalable and flexible solutions that empower organizations to thrive in dynamic environments.

- Scalable solutions enable organizations to increase or decrease resources based on current needs without significant downtime or investment. Our cloud-based solutions are designed to grow with your business.

- Cloud computing offers unparalleled flexibility, allowing businesses to access resources and services from anywhere, at any time. We help clients implement cloud strategies that enhance operational agility.

- Modular systems can be easily expanded or modified, ensuring that businesses can adapt to new technologies or market conditions. Our development approach emphasizes modularity, allowing for seamless upgrades and integrations.

- The ability to quickly pivot strategies in response to market changes is crucial for maintaining a competitive edge. We provide strategic consulting that helps clients navigate market shifts effectively.

- Flexible work environments, supported by remote access technologies, can enhance employee satisfaction and productivity, leading to better overall performance. Our solutions facilitate remote work capabilities, ensuring teams remain connected and productive regardless of location.

At Rapid Innovation, we are committed to helping our clients achieve greater ROI through our expertise in AI and blockchain technologies. Our tailored solutions are designed to enhance efficiency, reduce costs, and provide the scalability needed for future growth.

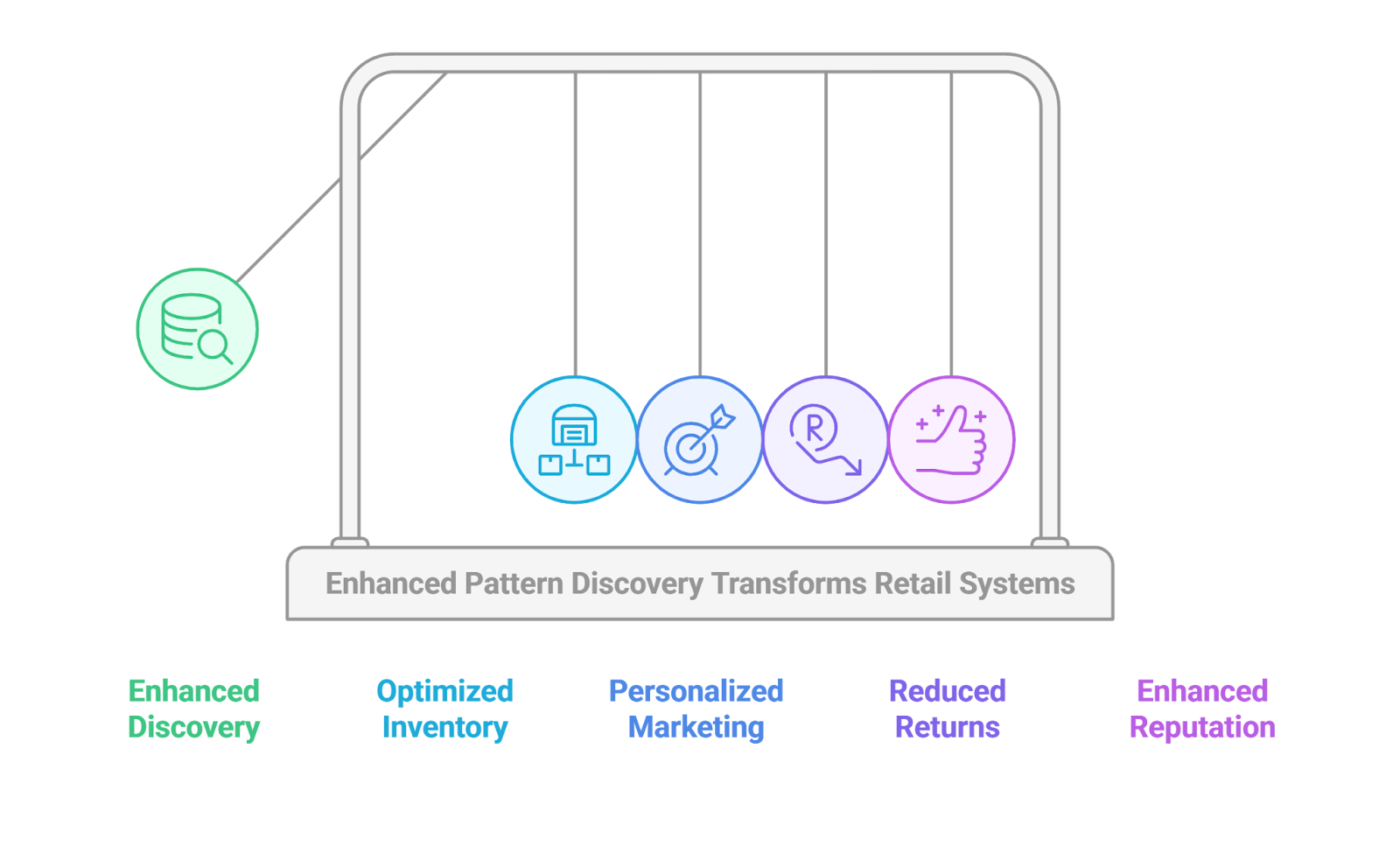

4.5. Enhanced Pattern Discovery

Enhanced pattern discovery refers to the advanced techniques and methodologies used to identify trends, correlations, and anomalies within large datasets. This process is crucial for businesses and researchers who seek to derive actionable insights from complex information.

- Utilizes machine learning algorithms to analyze data more efficiently.

- Employs data mining techniques to uncover hidden patterns that traditional methods might miss.

- Involves the use of visualization tools to present data in an easily interpretable format.

- Supports predictive analytics, allowing organizations to forecast future trends based on historical data.

- Enhances decision-making by providing a deeper understanding of customer behavior and market dynamics.

The significance of enhanced pattern discovery lies in its ability to transform raw data into valuable insights. For instance, businesses can identify customer preferences, optimize marketing strategies, and improve product offerings. This capability is increasingly important in a data-driven world where organizations must adapt quickly to changing market conditions. At Rapid Innovation, we leverage our expertise in AI to implement enhanced pattern discovery solutions that drive greater ROI for our clients by enabling them to make informed decisions based on data-driven insights.

4.6. Automated Quality Control

Automated quality control (AQC) is a systematic approach that leverages technology to monitor and ensure the quality of products and services throughout the production process. This method reduces human error and increases efficiency, leading to higher standards of quality.

- Integrates sensors and IoT devices to collect real-time data on production processes.

- Utilizes machine learning algorithms to analyze data and detect anomalies or defects.

- Implements automated testing procedures to ensure products meet specified standards.

- Reduces the need for manual inspections, saving time and resources.

- Enhances traceability by maintaining detailed records of quality checks and outcomes.

The benefits of automated quality control are substantial. Companies can achieve consistent quality, reduce waste, and improve customer satisfaction. By automating quality checks, organizations can also respond more swiftly to issues, minimizing the impact on production and delivery timelines. Rapid Innovation's AQC solutions empower businesses to maintain high-quality standards while optimizing operational efficiency, ultimately leading to increased profitability.

5. Primary Use Cases

The primary use cases for enhanced pattern discovery and automated quality control span various industries, showcasing their versatility and effectiveness in improving operations and outcomes.

- Retail and E-commerce: Enhanced pattern discovery helps retailers analyze customer purchasing behavior, optimize inventory management, and personalize marketing efforts. Automated quality control ensures that products meet quality standards before reaching consumers, reducing returns and enhancing brand reputation.

- Manufacturing: In manufacturing, enhanced pattern discovery can identify inefficiencies in production processes, leading to improved operational efficiency. Automated quality control systems monitor production lines in real-time, detecting defects early and ensuring that only high-quality products are shipped.

- Healthcare: Enhanced pattern discovery is used to analyze patient data, leading to better diagnosis and treatment plans. Automated quality control in healthcare ensures that medical devices and pharmaceuticals meet stringent regulatory standards, safeguarding patient safety.

- Finance: Financial institutions utilize enhanced pattern discovery techniques to detect fraudulent activities and assess risk. Automated quality control processes help maintain compliance with regulations and ensure the accuracy of financial reporting.

- Telecommunications: Enhanced pattern discovery aids in analyzing customer usage patterns, enabling service providers to tailor offerings and improve customer satisfaction. Automated quality control ensures that network services meet performance standards, reducing downtime and enhancing user experience.

These use cases illustrate the transformative potential of enhanced pattern discovery and automated quality control across various sectors. By leveraging these technologies, organizations can drive innovation, improve efficiency, and deliver superior products and services. At Rapid Innovation, we are committed to helping our clients harness the power of AI and blockchain to achieve their business goals effectively and efficiently.

5.1. Sequence Analysis

Sequence analysis is a critical component of genomics and molecular biology, focusing on the examination of nucleotide sequences in DNA and RNA. This process allows researchers to understand genetic information, identify mutations, and explore gene functions. Sequence analysis can be performed using various techniques and tools, enabling scientists to derive meaningful insights from biological data.

- Helps in identifying genetic variations.

- Aids in understanding evolutionary relationships.

- Facilitates the discovery of new genes and regulatory elements.
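Identifying genetic variations, the first point above, can be illustrated in its simplest form by comparing a sample sequence against a reference position by position. This sketch assumes the two sequences are already aligned and of equal length, and it reports only single-base substitutions; insertions, deletions, and sequencing errors are ignored. The function name and sequences are hypothetical, and real analyses use dedicated aligners and variant callers.

```python
def find_substitutions(reference, sample):
    """Return (1-based position, reference base, sample base) for each mismatch."""
    if len(reference) != len(sample):
        raise ValueError("sequences must be pre-aligned and of equal length")
    return [
        (position, ref_base, sample_base)
        for position, (ref_base, sample_base) in enumerate(
            zip(reference.upper(), sample.upper()), start=1
        )
        if ref_base != sample_base
    ]


reference_seq = "ACGTACGT"
sample_seq = "ACGAACGC"
variants = find_substitutions(reference_seq, sample_seq)
print(variants)
```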

5.1.1. DNA Sequencing

DNA sequencing is the process of determining the precise order of nucleotides within a DNA molecule. This technique has revolutionized genetics, enabling researchers to decode the genetic blueprint of organisms. There are several methods of DNA sequencing, including Sanger sequencing and next-generation sequencing (NGS).

- Sanger Sequencing: Developed in the 1970s, it uses chain-terminating dideoxynucleotides (ddNTPs) to produce fragments of varying lengths. It is ideal for sequencing small DNA fragments and is often used for targeted sequencing.

- Next-Generation Sequencing (NGS): This method allows for massive parallel sequencing, enabling the analysis of millions of DNA fragments simultaneously. It provides high-throughput capabilities, making it suitable for whole-genome sequencing and large-scale studies.

- Applications of DNA Sequencing:

- Identifying genetic disorders and mutations.

- Understanding cancer genomics.

- Exploring microbial diversity and evolution.

The advancements in DNA sequencing technologies have significantly reduced costs and increased accessibility, leading to a surge in genomic research. According to a report by the National Human Genome Research Institute, the cost of sequencing a human genome has dropped from approximately $100 million in 2001 to around $1,000 in recent years.

5.1.2. RNA Sequencing

RNA sequencing (RNA-seq) is a powerful technique used to analyze the transcriptome, which is the complete set of RNA transcripts produced by the genome at any given time. RNA-seq provides insights into gene expression levels, alternative splicing events, and non-coding RNA functions.

- Key Features of RNA Sequencing:

- High sensitivity and specificity in detecting low-abundance transcripts.

- Ability to capture a wide range of RNA types, including mRNA, lncRNA, and miRNA.

- Steps in RNA Sequencing:

- RNA extraction: Isolating RNA from cells or tissues.

- Library preparation: Converting RNA into complementary DNA (cDNA) and preparing it for sequencing.

- Sequencing: Using NGS platforms to read the cDNA fragments.

- Data analysis: Employing bioinformatics tools to interpret the sequencing data and quantify gene expression.

- Applications of RNA Sequencing:

- Identifying differentially expressed genes in various conditions.

- Understanding the regulatory mechanisms of gene expression.

- Investigating the role of non-coding RNAs in cellular processes.

RNA-seq has become a standard method in genomics, providing a comprehensive view of gene expression and regulation. A study published in Nature Biotechnology highlighted that RNA-seq can detect over 90% of expressed genes in a given sample, making it a reliable tool for transcriptomic analysis.
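As a rough illustration of the data analysis step, the sketch below converts raw read counts into transcripts per million (TPM), one common way to quantify gene expression. The gene names, counts, and lengths are made-up toy values, and `tpm` is a hypothetical helper rather than part of any specific RNA-seq toolkit.

```python
def tpm(counts, lengths_bp):
    """Convert raw read counts to transcripts per million (TPM).

    counts     -- dict of gene -> raw read count
    lengths_bp -- dict of gene -> gene (transcript) length in base pairs
    """
    # Step 1: reads per kilobase, which corrects for gene length.
    rpk = {gene: counts[gene] / (lengths_bp[gene] / 1000) for gene in counts}
    total = sum(rpk.values())
    if total == 0:
        raise ValueError("no reads to normalize")
    # Step 2: rescale so that all values in the sample sum to one million.
    scale = total / 1_000_000
    return {gene: value / scale for gene, value in rpk.items()}


# Toy input (illustrative values only).
counts = {"geneA": 500, "geneB": 1000, "geneC": 250}
lengths = {"geneA": 1000, "geneB": 4000, "geneC": 500}
result = tpm(counts, lengths)
```

Because TPM values sum to the same total in every sample, they are easier to compare across samples than raw counts, although formal differential expression testing usually relies on dedicated statistical tools.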

In summary, both DNA and RNA sequencing are integral to sequence analysis, offering valuable insights into genetic and transcriptomic landscapes. Sequence elements such as the T7 RNA polymerase promoter, which is widely used to drive in vitro transcription, are important for understanding and controlling transcription. The conversion of a DNA sequence to RNA (transcription) and the reverse conversion of RNA to DNA (reverse transcription) are likewise fundamental in molecular biology. These techniques continue to evolve, driving advancements in personalized medicine, evolutionary biology, and biotechnology.
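At the sequence level, the DNA-to-RNA and RNA-to-DNA conversions mentioned above come down to simple string operations. The following is a minimal sketch that assumes the input is the coding (sense) strand written 5' to 3'; the function names are illustrative.

```python
# Complement table for DNA bases.
_COMPLEMENT = str.maketrans("ACGT", "TGCA")


def transcribe(dna_coding):
    """Coding-strand DNA -> RNA: same sequence with T replaced by U."""
    return dna_coding.upper().replace("T", "U")


def back_transcribe(rna):
    """RNA -> DNA sequence (as in cDNA synthesis): U replaced by T."""
    return rna.upper().replace("U", "T")


def reverse_complement(dna):
    """Sequence of the opposite DNA strand, read 5' to 3'."""
    return dna.upper().translate(_COMPLEMENT)[::-1]


rna = transcribe("ATGGCC")            # "AUGGCC"
dna = back_transcribe(rna)            # "ATGGCC"
template = reverse_complement(dna)    # "GGCCAT"
```

Libraries such as Biopython provide equivalent operations with additional handling of ambiguous bases; the plain-string version here is only meant to show the idea.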

At Rapid Innovation, we leverage our expertise in AI and blockchain to enhance the efficiency and accuracy of sequence analysis. By integrating AI algorithms, we can automate data analysis processes, leading to faster and more reliable results. Additionally, utilizing blockchain technology ensures the integrity and security of genomic data, providing clients with a robust framework for managing sensitive information. Our solutions not only streamline research workflows but also maximize ROI by reducing time-to-insight and enhancing data trustworthiness.

5.1.3. Protein Structure Prediction

Protein structure prediction is a crucial aspect of bioinformatics and molecular biology. Understanding the three-dimensional structure of proteins is essential for elucidating their function and role in biological processes. The prediction of protein structures can be achieved through various computational methods, which can be broadly categorized into:

- Homology Modeling: This method relies on the known structures of homologous proteins. By aligning the target protein sequence with a template protein of known structure, researchers can predict the 3D structure of the target. This approach is effective when there is a high degree of sequence similarity.

- Ab Initio Methods: These methods predict protein structures from scratch, without relying on templates. They use physical principles and statistical potentials to explore possible conformations. While ab initio methods can be computationally intensive, they are essential for predicting the structures of novel proteins.

- Threading: This technique involves fitting the target sequence into a known structure by optimizing the alignment. It is particularly useful for proteins with low sequence similarity to known structures.

- Machine Learning Approaches: Recent advancements in artificial intelligence have led to machine learning models that can predict protein structures with remarkable accuracy. For instance, AlphaFold, developed by DeepMind, has shown unprecedented success in predicting protein structures from amino acid sequences. The AlphaFold database gives researchers access to precomputed predictions, while AlphaFold 2 and AlphaFold-Multimer extend prediction to higher accuracy and to multi-chain protein complexes.
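Homology modeling and threading both start from a sequence alignment between the target and a candidate template. The sketch below shows one way to compute a global alignment (Needleman-Wunsch) and the resulting percent identity. The scoring values (match, mismatch, gap) are simplified assumptions for illustration; production pipelines typically use substitution matrices and dedicated alignment tools.

```python
def global_align(a, b, match=1, mismatch=-1, gap=-2):
    """Needleman-Wunsch global alignment; returns the two aligned strings."""
    n, m = len(a), len(b)
    # score[i][j] = best score aligning a[:i] with b[:j]
    score = [[0] * (m + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        score[i][0] = i * gap
    for j in range(1, m + 1):
        score[0][j] = j * gap
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            sub = match if a[i - 1] == b[j - 1] else mismatch
            score[i][j] = max(score[i - 1][j - 1] + sub,
                              score[i - 1][j] + gap,
                              score[i][j - 1] + gap)
    # Trace back from the bottom-right corner to recover one optimal alignment.
    out_a, out_b = [], []
    i, j = n, m
    while i > 0 or j > 0:
        sub = match if i > 0 and j > 0 and a[i - 1] == b[j - 1] else mismatch
        if i > 0 and j > 0 and score[i][j] == score[i - 1][j - 1] + sub:
            out_a.append(a[i - 1]); out_b.append(b[j - 1]); i -= 1; j -= 1
        elif i > 0 and score[i][j] == score[i - 1][j] + gap:
            out_a.append(a[i - 1]); out_b.append("-"); i -= 1
        else:
            out_a.append("-"); out_b.append(b[j - 1]); j -= 1
    return "".join(reversed(out_a)), "".join(reversed(out_b))


def percent_identity(aln_a, aln_b):
    """Percentage of alignment columns holding identical residues."""
    if not aln_a:
        return 0.0
    matches = sum(x == y and x != "-" for x, y in zip(aln_a, aln_b))
    return 100.0 * matches / len(aln_a)


# Toy target and template sequences (made up for illustration).
target, template = "MKTAYIAK", "MKTAYAK"
aln_target, aln_template = global_align(target, template)
identity = percent_identity(aln_target, aln_template)
```

A high identity suggests the template may be suitable for homology modeling, while low identity points toward threading or template-free methods.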

The implications of accurate protein structure prediction are vast, impacting drug design, understanding disease mechanisms, and developing therapeutic interventions. As research continues to evolve, the integration of experimental data with computational predictions will enhance our understanding of protein dynamics and interactions. At Rapid Innovation, we leverage AI-driven solutions, including AI protein folding techniques, to optimize these predictions, enabling our clients to accelerate their research and development processes, ultimately leading to greater ROI through faster time-to-market for new therapeutics. Additionally, the role of generative AI in accelerating drug discovery and personalized medicine is becoming increasingly significant, further enhancing the capabilities of protein structure prediction.

5.2. Disease Research

Disease research is a multidisciplinary field that aims to understand the underlying mechanisms of diseases, identify potential therapeutic targets, and develop effective treatments. This area of research encompasses various approaches, including genomics, proteomics, and systems biology. Key aspects of disease research include:

- Genomic Studies: Analyzing the genetic basis of diseases helps identify mutations and variations that contribute to disease susceptibility and progression.

- Biomarker Discovery: Identifying biomarkers can aid in early diagnosis, prognosis, and monitoring of disease progression.

- Therapeutic Development: Understanding disease mechanisms allows researchers to develop targeted therapies that can improve patient outcomes.

- Clinical Trials: Testing new treatments in clinical settings is essential for validating the efficacy and safety of therapeutic interventions.

Advancements in technology, such as next-generation sequencing and high-throughput screening, have accelerated disease research, enabling researchers to uncover complex interactions within biological systems. Rapid Innovation employs cutting-edge AI and blockchain technologies to enhance data integrity and streamline the research process, ensuring that our clients can achieve their business goals efficiently.

5.2.1. Cancer Genomics

Cancer genomics is a specialized field within disease research that focuses on the genetic alterations associated with cancer. By studying the genomic landscape of tumors, researchers can gain insights into the mechanisms driving cancer development and progression. Key components of cancer genomics include:

- Mutational Analysis: Identifying mutations in oncogenes and tumor suppressor genes helps understand the genetic basis of cancer. For example, mutations in the TP53 gene are commonly associated with various cancers.

- Copy Number Variations: Changes in the number of copies of specific genes can contribute to cancer. Analyzing these variations can reveal potential therapeutic targets.

- Gene Expression Profiling: Understanding how gene expression changes in cancer cells compared to normal cells can provide insights into tumor biology and potential treatment strategies.

- Personalized Medicine: Cancer genomics enables the development of personalized treatment plans based on the unique genetic profile of an individual's tumor. This approach can lead to more effective therapies with fewer side effects.

- Clinical Applications: Genomic data can inform clinical decisions, such as selecting targeted therapies or determining prognosis. For instance, the presence of specific mutations can guide the use of targeted therapies like EGFR inhibitors in lung cancer.
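Copy number analysis is often expressed as a log2 ratio of tumor to matched-normal sequencing depth. The sketch below assumes the depths are already normalized for library size; the depth values, the pseudocount, and the gain/loss thresholds are illustrative assumptions, not clinical cutoffs.

```python
import math


def copy_number_log2_ratios(tumor_depth, normal_depth, pseudocount=1.0):
    """log2(tumor / normal) per gene; the pseudocount avoids division by zero."""
    return {
        gene: math.log2((tumor_depth[gene] + pseudocount)
                        / (normal_depth[gene] + pseudocount))
        for gene in tumor_depth
    }


def call_copy_number(ratios, gain_cutoff=0.5, loss_cutoff=-0.5):
    """Label each gene as gain, loss, or neutral using simple thresholds."""
    calls = {}
    for gene, ratio in ratios.items():
        if ratio >= gain_cutoff:
            calls[gene] = "gain"
        elif ratio <= loss_cutoff:
            calls[gene] = "loss"
        else:
            calls[gene] = "neutral"
    return calls


# Toy depths (illustrative numbers, not real patient data).
tumor = {"ERBB2": 400, "TP53": 50, "GAPDH": 100}
normal = {"ERBB2": 100, "TP53": 100, "GAPDH": 100}
calls = call_copy_number(copy_number_log2_ratios(tumor, normal))
```

Real copy number callers also correct for GC content, tumor purity, and ploidy, and segment the genome before calling, which this sketch leaves out.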

The integration of cancer genomics with other omics technologies, such as proteomics and metabolomics, is paving the way for a more comprehensive understanding of cancer biology and the development of innovative therapeutic strategies. As research progresses, the potential for improving patient outcomes through genomics-based approaches continues to expand. Rapid Innovation is committed to supporting this evolution by providing advanced AI solutions that enhance data analysis and interpretation, ultimately driving better clinical outcomes and maximizing ROI for our clients.

5.2.2. Rare Disease Analysis

Rare diseases, often defined as conditions affecting fewer than 200,000 individuals in the United States, present unique challenges in diagnosis and treatment. The analysis of rare diseases is crucial for understanding their etiology, improving patient outcomes, and developing targeted therapies.

- Understanding the genetic basis: Many rare diseases have a genetic component, making genetic testing and analysis essential. Identifying mutations can lead to better diagnostic tools and potential treatments. Rapid Innovation leverages AI algorithms to analyze genetic data, enabling more accurate identification of mutations and facilitating the development of personalized treatment plans.

- Data collection and sharing: Collaboration among researchers, healthcare providers, and patients is vital. Platforms like the National Organization for Rare Disorders (NORD) facilitate data sharing, which can accelerate research efforts. Rapid Innovation can implement blockchain solutions to ensure secure and transparent data sharing, enhancing collaboration while maintaining patient privacy.

- Patient registries: Establishing registries helps in tracking disease prevalence, patient demographics, and treatment responses. This data is invaluable for clinical trials and understanding disease progression. Our expertise in AI can optimize the analysis of registry data, providing insights that drive better clinical decision-making.

- Innovative research methods: Utilizing advanced technologies such as CRISPR and next-generation sequencing can enhance our understanding of rare diseases and lead to novel therapeutic approaches. Rapid Innovation supports clients in integrating AI-driven analytics to streamline research processes and improve outcomes.

- Regulatory support: Agencies like the FDA provide incentives for developing treatments for rare diseases, including orphan drug designation, which offers benefits such as tax credits for clinical testing, fee waivers, and a period of market exclusivity after approval. Our consulting services can guide clients through regulatory pathways, ensuring compliance and maximizing the potential for successful drug approval.
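One common first step when searching genetic data for rare-disease mutations is to discard variants that are frequent in the general population and keep those predicted to affect the protein. The sketch below illustrates that filter. The `Variant` fields, gene names, frequency cutoff, and impact labels are all hypothetical and chosen for illustration only.

```python
from dataclasses import dataclass


@dataclass
class Variant:
    gene: str             # hypothetical gene symbol
    change: str           # description of the change, e.g. "c.100A>G"
    population_af: float  # allele frequency in a reference population
    impact: str           # predicted impact: "HIGH", "MODERATE", or "LOW"


def prioritize(variants, max_af=0.001, impacts=("HIGH", "MODERATE")):
    """Keep rare, potentially damaging variants, rarest first."""
    kept = [v for v in variants
            if v.population_af <= max_af and v.impact in impacts]
    return sorted(kept, key=lambda v: v.population_af)


# Toy variant list (made-up values).
variants = [
    Variant("GENE1", "c.100A>G", 0.20, "MODERATE"),   # too common
    Variant("GENE2", "c.250del", 0.0001, "HIGH"),     # rare and damaging
    Variant("GENE3", "c.75C>T", 0.0005, "LOW"),       # rare but low impact
    Variant("GENE4", "c.310G>A", 0.0008, "MODERATE"), # rare and moderate
]
candidates = prioritize(variants)
```

The shortlist produced this way is only a starting point; candidate variants still need review against the patient's phenotype and family data.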

5.2.3. Pathogen Detection

Pathogen detection is a critical aspect of public health, especially in the context of infectious diseases. Rapid and accurate identification of pathogens can significantly impact treatment outcomes and control measures.

- Diagnostic technologies: Advances in molecular diagnostics, such as PCR (Polymerase Chain Reaction) and next-generation sequencing, allow for the rapid detection of pathogens at a genetic level. Rapid Innovation employs AI to enhance diagnostic accuracy and speed, ensuring timely interventions.

- Surveillance systems: Implementing robust surveillance systems helps in monitoring outbreaks and understanding pathogen spread. This includes both human and environmental monitoring. Our blockchain solutions can provide secure and immutable records of surveillance data, facilitating better public health responses.

- Point-of-care testing: Developing portable diagnostic tools enables healthcare providers to detect pathogens quickly, even in remote areas. This is crucial for timely intervention and treatment. Rapid Innovation can assist in the development of AI-powered point-of-care devices that deliver rapid results.

- Bioinformatics: Analyzing genomic data from pathogens can provide insights into their evolution, resistance patterns, and potential vulnerabilities, aiding in the development of targeted therapies. Our AI capabilities can process vast amounts of genomic data, identifying trends and patterns that inform treatment strategies.

- Global collaboration: International partnerships and data sharing among countries enhance the ability to respond to emerging infectious diseases and improve global health security. Rapid Innovation can facilitate these collaborations through secure blockchain networks that promote data integrity and trust.
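Sequence-based pathogen detection often works by comparing short subsequences (k-mers) of a sequencing read against reference genomes. The sketch below shows the idea with two made-up reference sequences. The names, the value of `k`, and the match threshold are assumptions for illustration, and real classifiers use far larger indexed databases.

```python
def kmers(seq, k):
    """Set of all length-k substrings of a sequence."""
    seq = seq.upper()
    return {seq[i:i + k] for i in range(len(seq) - k + 1)}


def classify_read(read, reference_kmers, k=8, min_fraction=0.5):
    """Return the reference sharing the most k-mers with the read, or None."""
    read_kmers = kmers(read, k)
    if not read_kmers:
        return None
    best_name, best_fraction = None, 0.0
    for name, ref in reference_kmers.items():
        fraction = len(read_kmers & ref) / len(read_kmers)
        if fraction > best_fraction:
            best_name, best_fraction = name, fraction
    return best_name if best_fraction >= min_fraction else None


# Toy reference sequences (invented, not real genomes).
references = {
    "pathogen_A": "ATGCGTACGTTAGCCTAGGATCCGATCGTAGCTAGCTAGGCTA",
    "pathogen_B": "TTGACCGGTTAACCGGATATCGCGCGATATTTAAACCCGGGTT",
}
index = {name: kmers(seq, 8) for name, seq in references.items()}

read_from_a = references["pathogen_A"][7:28]   # simulated read from pathogen_A
label = classify_read(read_from_a, index)
unknown = classify_read("A" * 20, index)       # matches neither reference
```

Requiring a minimum fraction of shared k-mers keeps reads with only chance matches from being assigned to a pathogen.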

5.3. Drug Discovery and Development

The drug discovery and development process is a complex and lengthy journey that transforms scientific research into effective therapies. This process involves several stages, each critical for ensuring the safety and efficacy of new medications.

- Target identification: The first step involves identifying biological targets associated with diseases. This can include proteins, genes, or pathways that play a role in disease progression. Rapid Innovation utilizes AI to analyze biological data, accelerating the identification of promising drug targets.

- High-throughput screening: Utilizing automated systems to test thousands of compounds against the identified targets allows researchers to identify potential drug candidates quickly. Our expertise in AI can optimize screening processes, increasing efficiency and reducing costs.

- Preclinical studies: Before human trials, drugs undergo rigorous testing in laboratory and animal models to assess their safety, efficacy, and pharmacokinetics. Rapid Innovation can support clients in designing and analyzing preclinical studies using advanced AI models.

- Clinical trials: Testing in humans proceeds through three main phases (Phase I, II, and III) that evaluate the drug's safety and effectiveness. Each phase has specific objectives and participant criteria. Our consulting services can help streamline trial design and patient recruitment, enhancing the likelihood of success.

- Regulatory approval: After successful clinical trials, drug developers submit their findings to regulatory agencies like the FDA for approval. This process ensures that only safe and effective drugs reach the market. Rapid Innovation provides guidance on regulatory strategies, helping clients navigate complex approval processes.

- Post-marketing surveillance: Once a drug is approved, ongoing monitoring is essential to identify any long-term effects or rare side effects that may not have been evident during clinical trials. Our AI solutions can assist in analyzing post-marketing data, ensuring ongoing safety and efficacy.
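To make the high-throughput screening step more concrete, the sketch below flags candidate hits by comparing each compound's assay signal with the spread of untreated control wells using a z-score. It assumes an inhibition assay in which a lower signal indicates activity. The compound names, signal values, and cutoff are illustrative assumptions.

```python
import statistics


def call_hits(signals, control_signals, z_cutoff=-3.0):
    """Return compounds whose signal lies far below the control distribution.

    signals         -- dict of compound -> measured assay signal
    control_signals -- list of signals from untreated (negative control) wells
    """
    mean = statistics.mean(control_signals)
    sd = statistics.stdev(control_signals)
    if sd == 0:
        raise ValueError("control wells show no variation")
    hits = {}
    for compound, signal in signals.items():
        z = (signal - mean) / sd
        if z <= z_cutoff:  # strongly reduced signal suggests inhibition
            hits[compound] = z
    return hits


# Toy plate data (made-up numbers).
controls = [100, 98, 102, 101, 99]
compounds = {"cmpd_1": 99, "cmpd_2": 60, "cmpd_3": 97}
hits = call_hits(compounds, controls)
```

Compounds flagged this way would normally be retested in dose-response experiments before moving on to preclinical studies.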

The drug discovery process is not only time-consuming but also costly, with estimates suggesting that it can take over a decade and billions of dollars to bring a new drug to market. Rapid Innovation is committed to leveraging AI and blockchain technologies to streamline this process, ultimately enhancing ROI for our clients.

5.3.1. Target Identification