1. Introduction to AI-Powered Damage Evaluation

Artificial Intelligence (AI) is transforming many industries, and damage evaluation is no exception. AI-powered damage evaluation uses machine learning models and related algorithms to assess and quantify damage in settings such as insurance claims, property assessments, and disaster recovery. Applied well, this approach can make assessments more consistent, speed up the evaluation process, and reduce the errors that come with manual, subjective inspection. As businesses and organizations adopt AI more widely, damage evaluation is becoming faster and more reliable.

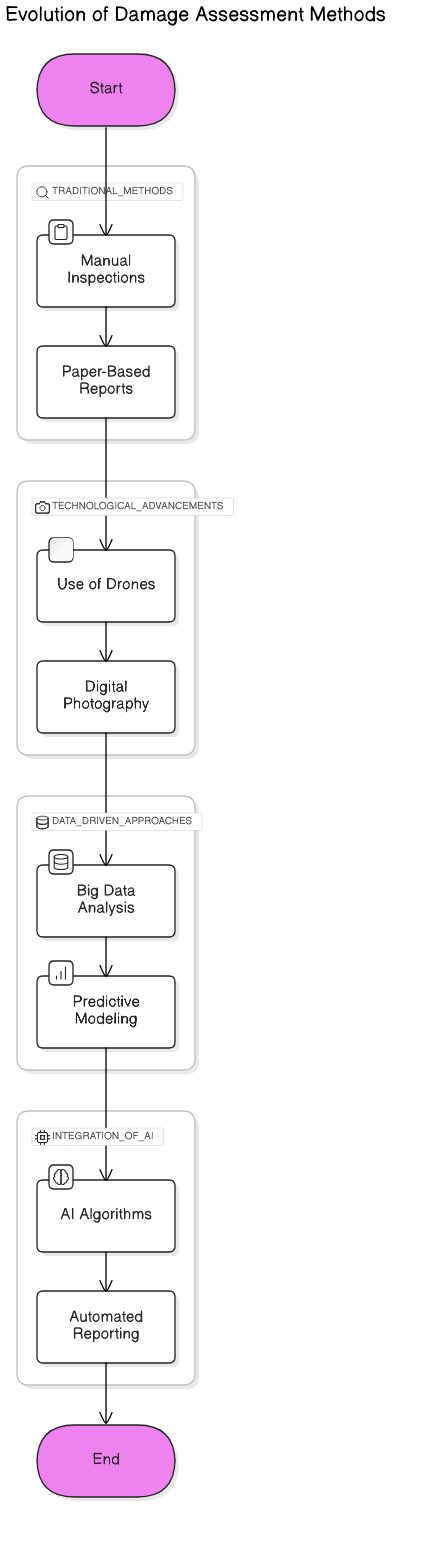

1.1. Evolution of Damage Assessment Methods

The methods of damage assessment have evolved significantly over the years, transitioning from manual processes to automated systems.

- Traditional Methods: Initially, damage assessment relied heavily on manual inspections and subjective evaluations by experts. These methods were time-consuming and often led to inconsistencies in assessments due to human bias.

- Technological Advancements: The introduction of digital tools, such as drones and imaging technology, marked a significant shift. These tools allowed for more precise measurements and visual documentation of damage.

- Data-Driven Approaches: The rise of big data analytics enabled the collection and analysis of vast amounts of information related to damage events. This data-driven approach improved the accuracy of assessments and facilitated better decision-making.

- Integration of AI: The latest stage integrates AI models that learn patterns from historical and sensor data. These models can analyze images, help assess structural integrity, and predict future risks from historical data, with accuracy that depends on the quality and coverage of their training data.

1.2. Role of AI in Modern Damage Evaluation

AI plays a crucial role in modern damage evaluation, offering numerous advantages over traditional methods.

- Enhanced Accuracy: On well-defined tasks with representative training data, AI models can analyze images and data as precisely as, or more precisely than, manual inspection, leading to more accurate assessments and fewer errors.

- Speed and Efficiency: AI-powered systems can process large volumes of data quickly, significantly reducing the time required for damage evaluations. This efficiency is particularly beneficial in emergency situations where timely assessments are critical.

- Predictive Analytics: AI can utilize historical data to predict potential damage scenarios, allowing organizations to prepare and mitigate risks effectively. This proactive approach can save time and resources in the long run.

- Cost-Effectiveness: By automating the evaluation process, AI reduces the need for extensive manpower, leading to cost savings for businesses and organizations. This efficiency can also translate to faster claim processing in the insurance industry.

- Improved Decision-Making: AI provides data-driven insights that enhance decision-making processes. Stakeholders can make informed choices based on accurate assessments and predictive analytics.

- Real-Time Monitoring: AI technologies enable real-time monitoring of assets and properties, allowing for immediate damage assessments after incidents. This capability is particularly valuable in industries such as construction and insurance.

In conclusion, the integration of AI in damage evaluation represents a significant advancement in the field. By leveraging technology, organizations can achieve more accurate, efficient, and cost-effective assessments, ultimately leading to better outcomes in damage management and recovery. At Rapid Innovation, we specialize in implementing AI solutions tailored to your specific needs, ensuring that your organization can harness the full potential of AI-powered damage evaluation to achieve greater ROI and operational efficiency.

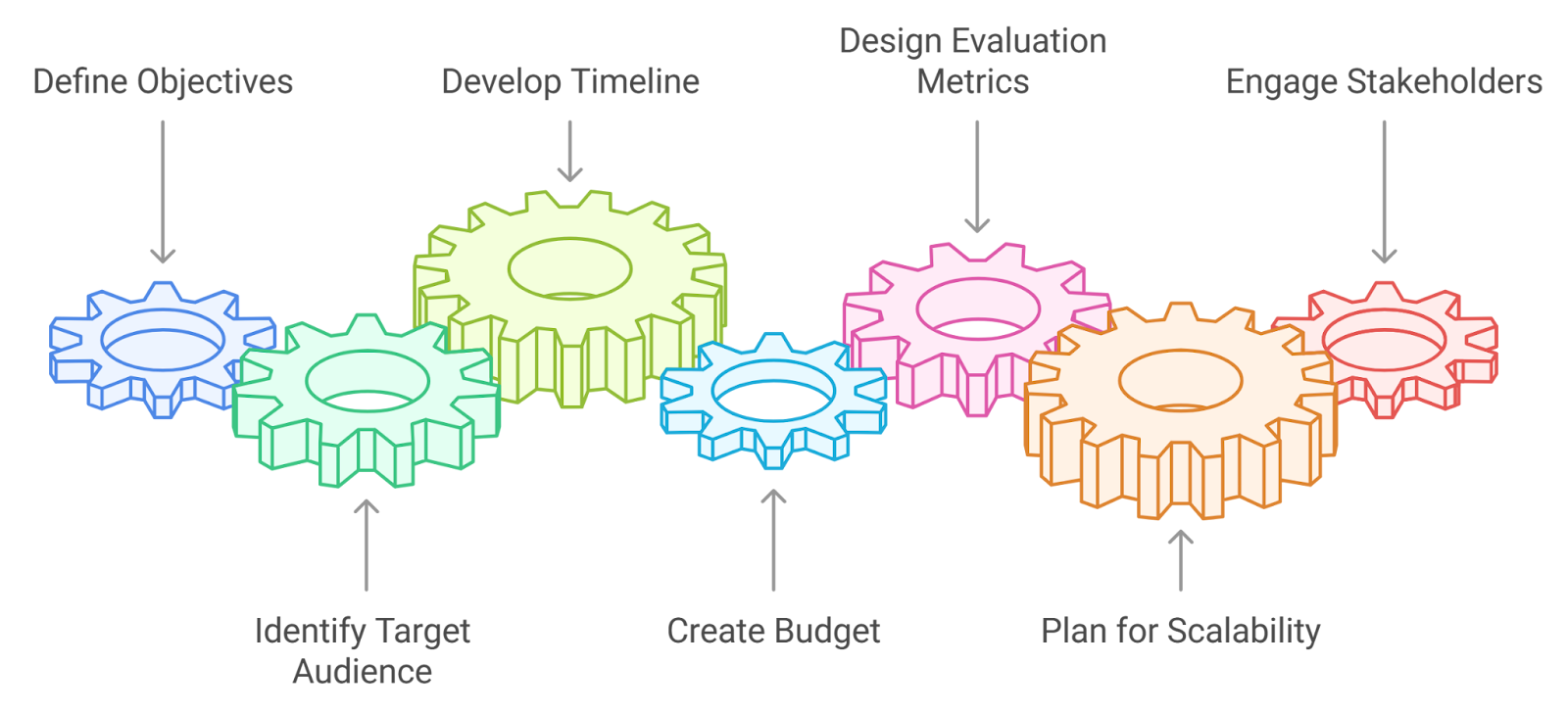

Refer to the image for a visual representation of the evolution and role of AI in damage evaluation:

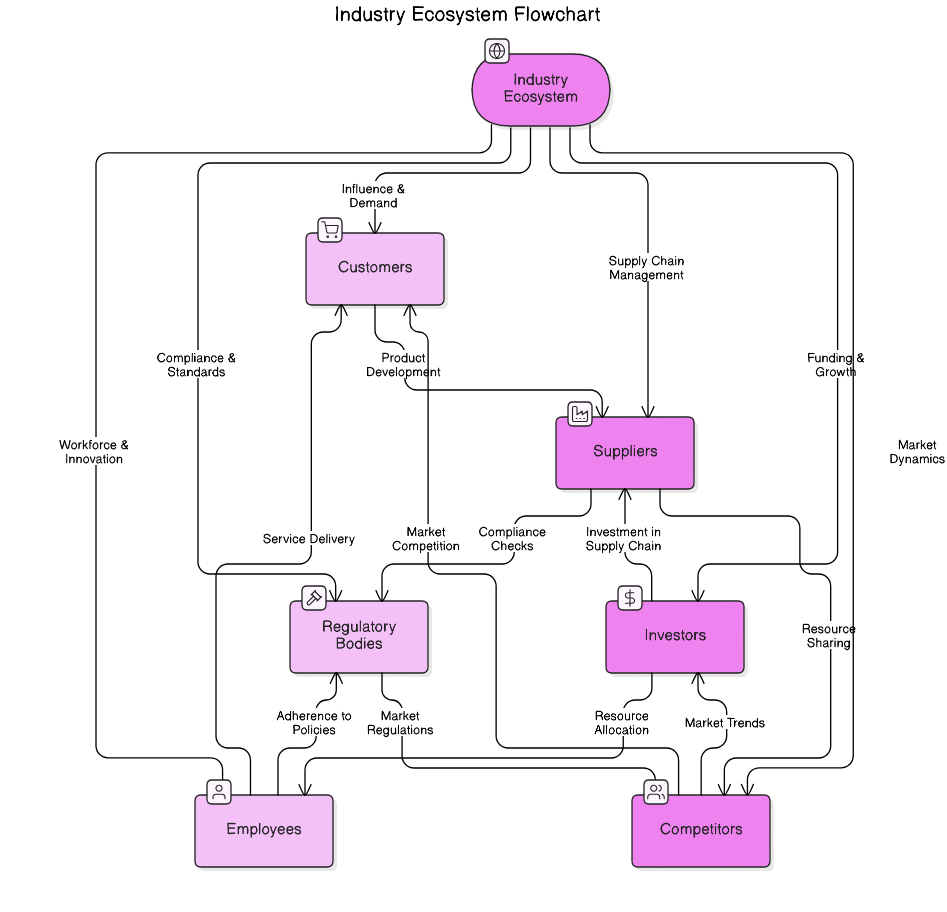

1.3. Key Stakeholders and Use Cases

In any industry, identifying key stakeholders is crucial for understanding the ecosystem and driving innovation. Stakeholders can vary widely depending on the sector, but they generally include:

- Customers: The end-users of products or services who drive demand and influence market trends. Their feedback is essential for product development and improvement.

- Suppliers: Entities that provide raw materials, components, or services necessary for production. Strong relationships with suppliers can lead to better pricing and quality control.

- Regulatory Bodies: Government agencies that enforce laws and regulations. Compliance with these regulations is vital for operational legitimacy and market access.

- Investors: Individuals or institutions that provide capital for business operations. Their expectations for returns can shape strategic decisions and growth trajectories.

- Employees: The workforce that drives the company’s operations. Employee satisfaction and engagement are critical for productivity and retention.

- Competitors: Other businesses in the same market. Understanding competitors’ strategies can help in positioning and differentiation.

Use cases for these stakeholders can include:

- Product Development: Engaging customers in the design process to create products that meet their needs, leveraging AI for insights and feedback analysis.

- Supply Chain Management: Collaborating with suppliers to optimize logistics and reduce costs, utilizing blockchain for transparency and efficiency.

- Regulatory Compliance: Working with regulatory bodies to ensure adherence to industry standards, employing AI to monitor compliance in real-time.

- Investment Strategies: Aligning business goals with investor expectations to secure funding, using data analytics to present compelling ROI forecasts.

- Employee Training: Implementing programs that enhance employee skills and job satisfaction, supported by AI-driven personalized learning paths.

- Market Analysis: Conducting competitive analysis to identify market gaps and opportunities, utilizing big data analytics for informed decision-making.

In this context, stakeholder management becomes essential. A stakeholder management plan defines how stakeholders are identified, prioritized, and engaged. The engagement strategy should include a stakeholder engagement assessment matrix that compares each stakeholder's current and desired level of involvement, along with a communication plan describing how stakeholders will be kept informed throughout the project lifecycle.

1.4. Current Industry Challenges

The landscape of any industry is fraught with challenges that can hinder growth and innovation. Some of the most pressing challenges include:

- Technological Disruption: Rapid advancements in technology can render existing business models obsolete. Companies must adapt quickly to stay relevant.

- Regulatory Compliance: Navigating complex regulations can be time-consuming and costly. Non-compliance can lead to severe penalties and reputational damage.

- Supply Chain Issues: Global supply chain disruptions, often exacerbated by geopolitical tensions or natural disasters, can impact production timelines and costs.

- Talent Acquisition and Retention: Finding and keeping skilled employees is increasingly difficult in a competitive job market. Companies must invest in employee development and workplace culture.

- Sustainability Pressures: Growing consumer demand for sustainable practices forces companies to rethink their operations and supply chains.

- Market Volatility: Economic fluctuations can affect consumer spending and investment, leading to uncertainty in business planning.

Addressing these challenges requires strategic planning, investment in technology, and a focus on building resilient business models. A clear stakeholder management and involvement strategy provides a framework for working through these challenges effectively.

2. Core Technologies and Components

Core technologies and components are the backbone of any industry, enabling efficiency, innovation, and competitiveness. Key technologies include:

- Artificial Intelligence (AI): AI can automate processes, analyze data, and enhance decision-making. It is increasingly used in customer service, predictive analytics, and personalized marketing.

- Internet of Things (IoT): IoT devices collect and exchange data, providing real-time insights into operations. This technology is vital for smart manufacturing and supply chain optimization.

- Blockchain: This technology offers secure and transparent transaction records. It is particularly useful in supply chain management and financial services.

- Cloud Computing: Cloud services provide scalable resources and storage solutions, enabling businesses to operate more flexibly and cost-effectively.

- Big Data Analytics: Analyzing large datasets helps companies understand market trends, customer behavior, and operational efficiencies.

- Cybersecurity Solutions: As digital threats grow, robust cybersecurity measures are essential to protect sensitive data and maintain customer trust.

Components that support these technologies include:

- Software Platforms: Applications that facilitate data management, customer relationship management (CRM), and enterprise resource planning (ERP).

- Hardware Infrastructure: Servers, networking equipment, and IoT devices that support technology deployment.

- Data Management Systems: Tools for storing, processing, and analyzing data to derive actionable insights.

Investing in these core technologies and components is essential for businesses that want to thrive in a competitive landscape. Rapid Innovation helps clients apply these technologies to achieve greater ROI and address industry challenges, and effective stakeholder communication further improves the results of such initiatives. For instance, our expertise in AI Copilot Development can significantly improve product development and operational efficiency.

Refer to the image for a visual representation of key stakeholders and use cases in the industry.

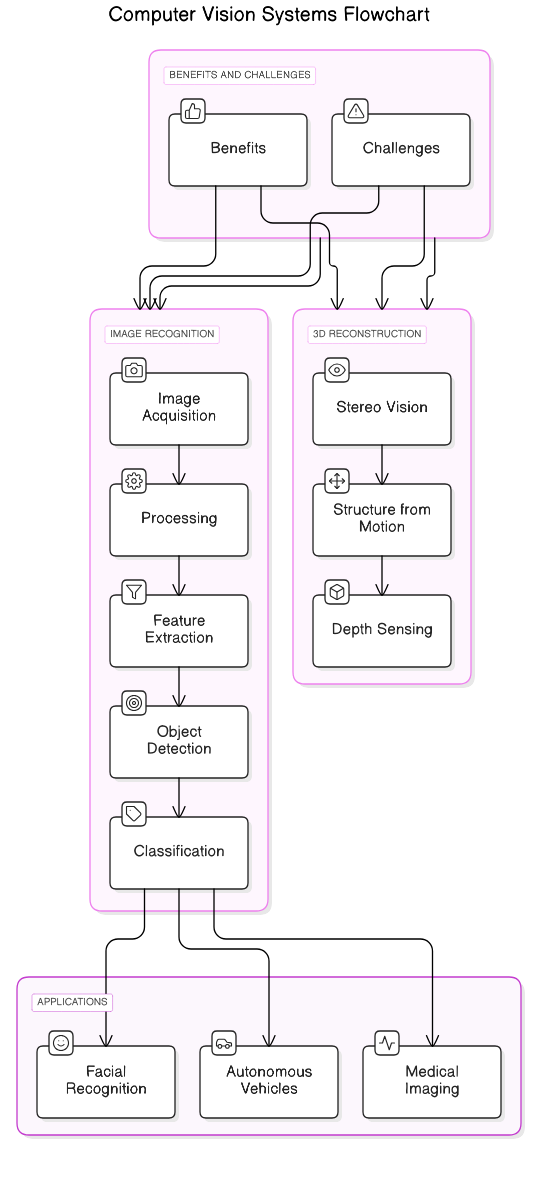

2.1. Computer Vision Systems

Computer vision systems are a subset of artificial intelligence that enable machines to interpret and understand visual information. These systems use algorithms and models to process images and videos, allowing computers to perform tasks that typically require human vision. Applications of computer vision are broad, ranging from autonomous vehicles and manufacturing inspection to medical imaging and facial recognition.

Key components of computer vision systems include:

- Image acquisition

- Image processing

- Feature extraction

- Object detection

- Image classification

2.1.1. Image Recognition

Image recognition is a critical aspect of computer vision that involves identifying and classifying objects within an image. This technology has advanced significantly thanks to deep learning, particularly convolutional neural networks (CNNs). Image recognition systems analyze visual data and make decisions based on image content; open-source libraries such as OpenCV provide building blocks for tasks like face detection and recognition.

Applications of image recognition include:

- Facial recognition for security systems

- Object detection in autonomous vehicles

- Medical image analysis for disease detection

- Image tagging in social media platforms

Benefits of image recognition:

- Increased accuracy in identifying objects

- Automation of repetitive tasks

- Enhanced user experience in applications like augmented reality

Challenges in image recognition:

- Variability in lighting and angles

- Occlusion of objects

- Need for large datasets for training models

Recent advances have pushed image recognition accuracy above 95% on some specific, well-defined tasks, although real-world performance still depends on lighting, viewpoint, occlusion, and how closely the data matches the training set. Rapid Innovation builds on these advances to help clients implement computer vision and image recognition software that improves operational efficiency and drives greater ROI.
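CNNs build image recognition on top of the convolution operation. As a minimal sketch in pure Python (no deep-learning framework), the function below slides a fixed 3x3 Sobel kernel over a tiny grayscale patch containing a hypothetical dark vertical crack. A trained CNN learns many such kernels from data rather than using a hand-picked one, and, like most deep-learning frameworks, this function actually computes cross-correlation (the kernel is not flipped).

```python
def convolve2d(image, kernel):
    """Valid-mode 2D cross-correlation, the operation CNN layers call 'convolution'."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Weighted sum of the kernel-sized window whose top-left corner is (i, j).
            acc = 0.0
            for a in range(kh):
                for b in range(kw):
                    acc += image[i + a][j + b] * kernel[a][b]
            row.append(acc)
        output.append(row)
    return output


# Sobel kernel that responds to horizontal intensity changes (vertical edges).
SOBEL_X = [[-1, 0, 1],
           [-2, 0, 2],
           [-1, 0, 1]]

# Hypothetical 5x5 grayscale patch: bright surface with a dark vertical crack in column 2.
patch = [[200, 200, 50, 200, 200] for _ in range(5)]

edges = convolve2d(patch, SOBEL_X)
for row in edges:
    print(row)  # [-600.0, 0.0, 600.0] for every row
```

The strong responses of opposite sign mark the two edges of the crack; the zero in the middle is where the window sits symmetrically over the crack.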

2.1.2. 3D Reconstruction

3D reconstruction is the process of capturing the shape and appearance of real objects to create a three-dimensional model. This technology is essential in fields including robotics, virtual reality, and computer graphics. It can be achieved through methods such as stereo vision, structure from motion, and depth sensing, all core techniques of 3D computer vision.

Key techniques in 3D reconstruction:

- Stereo vision: Uses two or more images to estimate depth.

- Structure from motion: Uses images taken from different viewpoints to jointly estimate the camera motion and the 3D structure of the scene.

- Depth sensors: Devices like LiDAR and time-of-flight cameras measure distance to create 3D maps.

Applications of 3D reconstruction:

- Creating realistic environments in video games and simulations

- Architectural modeling and design

- Medical imaging for surgical planning

- Cultural heritage preservation through digital archiving

Benefits of 3D reconstruction:

- Enhanced visualization of complex structures

- Improved accuracy in measurements and modeling

- Ability to simulate real-world scenarios for training and analysis

Challenges in 3D reconstruction:

- Computational intensity and processing time

- Difficulty in capturing fine details

- Variability in object textures and materials

3D reconstruction technologies continue to evolve, and recent developments enable real-time processing and higher-fidelity models. Rapid Innovation provides clients with tailored 3D reconstruction solutions, including open-source machine vision software and edge computer vision deployments, that meet their specific needs and maximize their return on investment.
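For stereo vision, the standard relation for a rectified camera pair is Z = f * B / d, where f is the focal length in pixels, B is the baseline between the two cameras, and d is the disparity (horizontal pixel shift of a point between the two images). A minimal sketch, assuming the images are already rectified and the disparity comes from a matching step not shown here; the camera parameters are hypothetical.

```python
def depth_from_disparity(disparity_px: float, focal_length_px: float, baseline_m: float) -> float:
    """Depth in metres of a point seen by a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        # Zero disparity corresponds to a point at infinity; negative values indicate a matching error.
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px


# Hypothetical camera: 700 px focal length, 12 cm baseline.
for d in (84, 42, 21):
    print(d, "px ->", round(depth_from_disparity(d, 700.0, 0.12), 3), "m")
```

Halving the disparity doubles the depth, so a one-pixel matching error costs far more accuracy for distant points than for nearby ones.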

Refer to the image for a visual representation of the components and processes involved in computer vision systems.

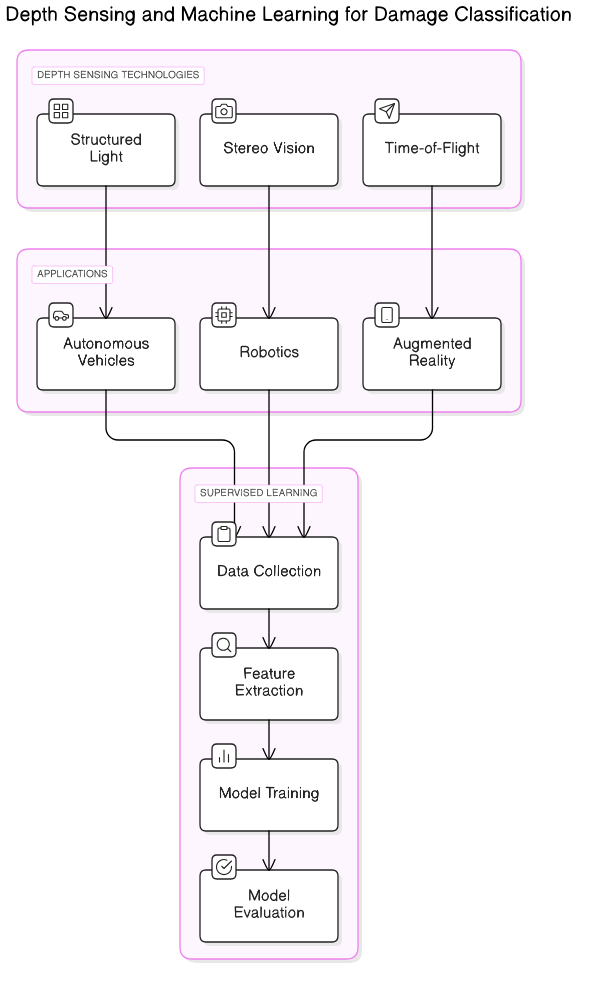

2.1.3. Depth Sensing

Depth sensing is a crucial technology that enables machines to perceive the world in three dimensions. It involves measuring the distance between the sensor and objects in the environment, providing valuable spatial information. This technology is widely used in various applications, including robotics, augmented reality, and autonomous vehicles.

Depth sensors can be categorized into several types:

- Stereo vision: Utilizes two cameras to capture images from different angles, mimicking human binocular vision.

- Time-of-flight (ToF): Measures how long a light signal takes to travel to an object and back, then calculates distance from that round-trip time.

- Structured light: Projects a known pattern onto a scene and analyzes the deformation of the pattern to determine depth.

Applications of depth sensing include:

- Robotics: Enhances navigation and obstacle avoidance by providing spatial awareness, which is essential for efficient operation in dynamic environments.

- Augmented reality (AR): Enables realistic interaction between virtual objects and the real world by understanding the environment's depth, thereby improving user experience and engagement. Depth sensing cameras are often used in AR applications.

- Autonomous vehicles: Assists in safe navigation by detecting distances to other vehicles, pedestrians, and obstacles, significantly reducing the risk of accidents. Lidar depth sensors are particularly effective in this context.

Depth sensing technology continues to evolve, with advances improving accuracy and reducing costs. As a result, it is becoming accessible to more industries and expanding what machines and devices can do. At Rapid Innovation, we use 3D depth sensors to develop tailored solutions that help our clients achieve their business goals efficiently and effectively, ultimately driving greater ROI.
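The time-of-flight principle reduces to simple arithmetic: light covers the sensor-to-object distance twice, so d = c * t / 2. Many ToF cameras instead modulate their light continuously and measure a phase shift, giving d = c * phase / (4 * pi * f_mod), which is unambiguous only up to c / (2 * f_mod). A minimal sketch of both formulas; the 20 ns and 20 MHz inputs are illustrative values, not taken from a specific sensor.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0


def distance_from_round_trip(time_s: float) -> float:
    """Pulsed ToF: the light travels to the object and back, so halve the path."""
    return SPEED_OF_LIGHT_M_S * time_s / 2.0


def distance_from_phase(phase_rad: float, modulation_hz: float) -> float:
    """Continuous-wave ToF: distance from the phase shift of the modulated signal."""
    return SPEED_OF_LIGHT_M_S * phase_rad / (4.0 * math.pi * modulation_hz)


def unambiguous_range(modulation_hz: float) -> float:
    """Beyond this distance the phase wraps around and distances alias."""
    return SPEED_OF_LIGHT_M_S / (2.0 * modulation_hz)


print(distance_from_round_trip(20e-9))          # about 3.0 m
print(distance_from_phase(math.pi / 2, 20e6))   # about 1.87 m
print(unambiguous_range(20e6))                  # about 7.49 m
```

Raising the modulation frequency improves depth resolution but shrinks the unambiguous range, which is why some sensors combine several frequencies.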

2.2. Machine Learning Models

Machine learning models are algorithms that enable computers to learn from data and make predictions or decisions without being explicitly programmed. These models are essential in analyzing complex datasets and extracting meaningful insights, making them invaluable in various fields, including finance, healthcare, and engineering.

Key components of machine learning models include:

- Data: The foundation of any machine learning model, consisting of features (input variables) and, for supervised learning, labels (output variables).

- Training: The process of feeding data into the model to adjust its parameters and improve its accuracy.

- Evaluation: Assessing the model's performance using metrics such as accuracy, precision, and recall.

Types of machine learning models:

- Supervised learning: Involves training a model on labeled data, where the desired output is known.

- Unsupervised learning: Deals with unlabeled data, focusing on finding patterns or groupings within the data.

- Reinforcement learning: Involves training an agent to make decisions by rewarding desired actions and penalizing undesired ones.

Machine learning models are transforming industries by automating processes, enhancing decision-making, and improving efficiency. As the volume of data continues to grow, the importance of these models will only increase. Rapid Innovation specializes in developing and implementing machine learning solutions that empower businesses to harness their data effectively, leading to improved operational efficiency and increased ROI.
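The evaluation metrics mentioned above (accuracy, precision, and recall) can be computed directly from true and predicted labels. A minimal sketch for a binary task, assuming 1 means "damaged" and 0 means "undamaged"; the labels below are invented for illustration.

```python
def binary_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision and recall for a binary classifier."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("y_true and y_pred must be non-empty and the same length")
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)  # damage correctly found
    fp = sum(1 for t, p in pairs if t != positive and p == positive)  # false alarms
    fn = sum(1 for t, p in pairs if t == positive and p != positive)  # missed damage
    correct = sum(1 for t, p in pairs if t == p)
    return {
        "accuracy": correct / len(pairs),
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }


y_true = [1, 1, 1, 1, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 1, 1, 0, 0]
print(binary_metrics(y_true, y_pred))
# {'accuracy': 0.625, 'precision': 0.6, 'recall': 0.75}
```

Precision answers "how many flagged items were really damaged?", while recall answers "how much of the real damage was found?". In inspection work, missed damage (low recall) is often the costlier failure.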

2.2.1. Supervised Learning for Damage Classification

Supervised learning is a subset of machine learning where models are trained on labeled datasets. In the context of damage classification, supervised learning algorithms can effectively identify and categorize damage in various materials or structures, such as buildings, vehicles, and machinery.

The process of supervised learning for damage classification typically involves several steps:

- Data collection: Gathering labeled data that includes examples of damaged and undamaged instances.

- Feature extraction: Identifying relevant features from the data that will help the model distinguish between different types of damage.

- Model training: Using algorithms like decision trees, support vector machines, or neural networks to train the model on the labeled data.

- Model evaluation: Testing the model on a separate dataset to assess its accuracy and ability to generalize to new data.
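The four steps above can be sketched end to end. To stay dependency-free, this example swaps in a nearest-centroid classifier (simpler than the decision trees, support vector machines, or neural networks mentioned) and uses two hypothetical hand-extracted features per sample: dent depth in millimetres and scratch length in centimetres. All data points are invented for illustration.

```python
import math

# Step 1 (data collection): hypothetical labelled samples.
# Step 2 (feature extraction) is assumed done: each sample is (dent_depth_mm, scratch_length_cm).
train = [
    ((0.0, 0.5), "none"), ((0.2, 1.0), "none"),
    ((1.0, 12.0), "scratch"), ((0.5, 15.0), "scratch"),
    ((8.0, 2.0), "dent"), ((10.0, 3.0), "dent"),
]
test = [((0.1, 0.3), "none"), ((0.8, 10.0), "scratch"), ((7.0, 1.0), "dent")]


def train_nearest_centroid(samples):
    """Step 3 (training): average the feature vectors of each class."""
    sums, counts = {}, {}
    for features, label in samples:
        if label not in sums:
            sums[label] = [0.0] * len(features)
            counts[label] = 0
        for i, value in enumerate(features):
            sums[label][i] += value
        counts[label] += 1
    return {label: [s / counts[label] for s in sums[label]] for label in sums}


def predict(centroids, features):
    """Assign the class whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda label: math.dist(features, centroids[label]))


# Step 4 (evaluation): accuracy on samples the model has not seen.
centroids = train_nearest_centroid(train)
predictions = [predict(centroids, features) for features, _ in test]
accuracy = sum(p == label for p, (_, label) in zip(predictions, test)) / len(test)
print(predictions, accuracy)  # ['none', 'scratch', 'dent'] 1.0
```

Because the two features use different units, real projects usually standardize features before computing distances, and a three-sample test set is far too small to trust; both are deliberate simplifications here.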

The benefits of using supervised learning for damage classification are significant:

- High accuracy: Supervised learning models can achieve high levels of accuracy when trained on sufficient and representative data.

- Automation: These models can automate the damage assessment process, reducing the need for manual inspections and saving time and resources.

- Scalability: Once trained, supervised learning models can be deployed across various applications, making them versatile tools for damage classification.

Common applications of supervised learning in damage classification include:

- Infrastructure monitoring: Assessing the condition of bridges, roads, and buildings to identify potential hazards.

- Insurance claims: Automating the evaluation of damage in vehicles or properties for faster claims processing.

- Manufacturing: Detecting defects in products during the production process to ensure quality control.

Supervised learning for damage classification is a powerful approach that leverages the capabilities of machine learning to enhance safety, efficiency, and decision-making across multiple industries. At Rapid Innovation, we harness these advanced machine learning techniques to provide our clients with innovative solutions that drive operational excellence and maximize ROI.

Refer to the image for a visual representation of depth sensing technology and its applications.

2.2.2. Unsupervised Learning for Anomaly Detection

Unsupervised learning is a powerful approach in machine learning that does not require labeled data. It is particularly useful for anomaly detection, where the goal is to identify unusual patterns or outliers in data. This method is essential in various fields, including finance, healthcare, and cybersecurity.

- Definition: Unsupervised learning algorithms analyze data without prior labels, allowing them to discover hidden structures.

- Techniques: Common techniques include clustering, dimensionality reduction, and density estimation.

  - Clustering: Algorithms like K-means and DBSCAN group similar data points. Points that lie far from every K-means centroid, or that DBSCAN labels as noise, are candidate outliers.

  - Dimensionality Reduction: Techniques such as PCA (Principal Component Analysis) reduce the number of features, which makes the data easier to visualize. Points that are poorly reconstructed from the reduced representation are candidate anomalies.

  - Density Estimation: Methods like Gaussian Mixture Models (GMM) estimate the probability distribution of the data, so points in low-density regions can be flagged as anomalies. This idea underlies many statistical anomaly detection methods.

- Applications: Unsupervised anomaly detection is widely used in fraud detection, network security, and fault detection in manufacturing processes. Libraries such as scikit-learn provide ready-made outlier detectors, for example IsolationForest and LocalOutlierFactor. At Rapid Innovation, we use these techniques to help clients strengthen their security and operational efficiency, ultimately leading to greater ROI.
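As a minimal sketch of density estimation for anomaly detection, the code below fits a single Gaussian to unlabeled reference readings (the one-component special case of a GMM) and flags new readings that fall in low-density regions. The vibration values and the 3-sigma cutoff are illustrative assumptions; a real system would typically use several features and a multi-component model.

```python
import math
import statistics


def fit_gaussian(values):
    """Estimate mean and standard deviation from (mostly normal) reference data."""
    mu = statistics.fmean(values)
    sigma = statistics.pstdev(values)
    if sigma == 0:
        raise ValueError("reference data has zero variance")
    return mu, sigma


def log_density(x, mu, sigma):
    """Log of the normal probability density at x."""
    return -0.5 * math.log(2 * math.pi * sigma ** 2) - (x - mu) ** 2 / (2 * sigma ** 2)


def flag_anomalies(reference, new_points, z_threshold=3.0):
    """Flag points whose density is lower than the density at mu +/- z_threshold * sigma."""
    mu, sigma = fit_gaussian(reference)
    cutoff = log_density(mu + z_threshold * sigma, mu, sigma)
    return [x for x in new_points if log_density(x, mu, sigma) < cutoff]


# Hypothetical vibration amplitudes from a healthy machine, then new readings.
reference = [1.0, 1.1, 0.9, 1.05, 0.95, 1.0, 1.02, 0.98]
new_readings = [1.03, 0.92, 1.6, 0.2]
print(flag_anomalies(reference, new_readings))  # [1.6, 0.2]
```

For a single Gaussian, the density cutoff is equivalent to flagging points more than three standard deviations from the mean; the density formulation is what generalizes to mixtures.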

2.2.3. Deep Learning Architectures

Deep learning architectures are a subset of machine learning that utilize neural networks with multiple layers to model complex patterns in data. These architectures have gained popularity due to their ability to handle large datasets and extract intricate features.

Types of Architectures:

- Convolutional Neural Networks (CNNs): Primarily used for image processing, CNNs excel at recognizing spatial hierarchies in images.

- Recurrent Neural Networks (RNNs): Ideal for sequential data, RNNs are used in natural language processing and time series analysis.

- Autoencoders: Networks trained to reconstruct their input from a compressed internal representation. In anomaly detection, inputs the model reconstructs poorly (high reconstruction error) are flagged as unusual.

Advantages:

- Feature Extraction: Deep learning models automatically learn features from raw data, reducing the need for manual feature engineering.

- Scalability: They can handle vast amounts of data, making them suitable for big data applications.

- Performance: Deep learning architectures often outperform traditional machine learning models in tasks like image and speech recognition.

Challenges:

- Data Requirements: They typically require large datasets to train effectively.

- Computational Resources: Training deep learning models can be resource-intensive, requiring powerful hardware.

- Interpretability: Deep learning models are often seen as "black boxes," making it difficult to understand their decision-making processes.

At Rapid Innovation, we assist clients in implementing deep learning solutions tailored to their specific needs, ensuring they can harness the full potential of their data for improved decision-making and increased ROI.

2.3. Sensor Technologies

Sensor technologies play a crucial role in the collection of data for various applications, including IoT (Internet of Things), environmental monitoring, and industrial automation. These technologies enable the real-time gathering of information, which can be analyzed for insights and decision-making.

Types of Sensors:

- Temperature Sensors: Used in HVAC systems, weather stations, and industrial processes to monitor temperature changes.

- Pressure Sensors: Essential in automotive and aerospace applications, these sensors measure pressure levels in various environments.

- Motion Sensors: Commonly used in security systems and smart homes, they detect movement and can trigger alarms or notifications.

Applications:

- Smart Cities: Sensors are used to monitor traffic, air quality, and energy consumption, contributing to urban planning and sustainability.

- Healthcare: Wearable sensors track vital signs, enabling remote patient monitoring and personalized healthcare.

- Manufacturing: Sensors in production lines help monitor equipment health, predict failures, and optimize processes.

Challenges:

- Data Management: The vast amount of data generated by sensors requires efficient storage and processing solutions.

- Interoperability: Different sensor technologies may not easily communicate with each other, leading to integration challenges.

- Security: As sensors become more connected, they are vulnerable to cyber threats, necessitating robust security measures.

In conclusion, unsupervised learning for anomaly detection, deep learning architectures, and sensor technologies are integral components of modern data analysis and machine learning applications. Each area presents unique opportunities and challenges, driving innovation across various industries. Rapid Innovation is committed to helping clients navigate these complexities, ensuring they achieve their business goals efficiently and effectively while maximizing their return on investment.

2.3.1. LiDAR Systems

LiDAR (Light Detection and Ranging) systems are remote sensing technologies that use laser light to measure distances and create high-resolution maps. They are widely used in fields such as geography, forestry, and urban planning.

How it works: LiDAR emits laser pulses toward the ground or another target surface and measures how long each pulse takes to return after hitting an object. Because the pulse travels to the object and back, the distance is half the round-trip time multiplied by the speed of light. Many such measurements are combined into detailed 3D models of the terrain.

Applications:

- Topographic mapping

- Vegetation analysis

- Infrastructure monitoring

- Civil engineering surveys

Advantages:

- High accuracy and precision

- Multiple returns per pulse, which let some of the light reach the ground through gaps in the vegetation canopy

- Rapid data collection over large areas

Limitations:

- High initial costs

- Requires skilled personnel for data interpretation

Recent advancements: Mounting LiDAR on UAVs (drones) has made data collection more accessible and efficient, and LiDAR applications in civil engineering and urban planning continue to expand. At Rapid Innovation, we use these advances to provide clients with tailored solutions that improve operational efficiency and reduce costs, ultimately leading to greater ROI. AI agents for IoT sensor integration are also becoming increasingly relevant for optimizing these systems.
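Each LiDAR return can be turned into one point of a 3D point cloud: the round-trip time gives the range (r = c * t / 2), and the beam's azimuth and elevation angles place that range in space. A minimal sketch in the scanner's own coordinate frame, assuming x points forward at zero azimuth, z points up, and angles are in degrees; real scanners document their own conventions, and georeferencing with the platform's GPS/IMU pose is not shown.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0


def lidar_return_to_point(round_trip_s, azimuth_deg, elevation_deg):
    """Convert one LiDAR return into an (x, y, z) point in the sensor frame, in metres."""
    r = SPEED_OF_LIGHT_M_S * round_trip_s / 2.0   # range: half the round-trip path
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = r * math.cos(el)                  # projection onto the x-y plane
    return (horizontal * math.cos(az), horizontal * math.sin(az), r * math.sin(el))


# Hypothetical returns: (round-trip time in seconds, azimuth, elevation).
returns = [(200e-9, 0.0, 0.0), (200e-9, 90.0, 0.0), (100e-9, 0.0, -30.0)]
cloud = [lidar_return_to_point(*ret) for ret in returns]
for x, y, z in cloud:
    print(f"{x:7.3f} {y:7.3f} {z:7.3f}")
```

A scanner repeats this conversion for every pulse as its mirror or rotating head sweeps the beam, which is how the detailed terrain models described above are built up.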

2.3.2. Thermal Imaging

Thermal imaging technology detects infrared radiation emitted by objects, allowing for the visualization of temperature differences. This technology is crucial in various sectors, including security, building inspections, and medical diagnostics.

How it works: Thermal cameras capture infrared radiation and convert it into an electronic signal, which is processed into a thermal image showing temperature variations. In the common "white-hot" display palette, warmer areas appear brighter.

Applications:

- Building diagnostics (insulation and energy loss detection)

- Firefighting (locating hotspots)

- Medical imaging (detecting fevers or inflammation)

Advantages:

- Non-invasive and safe

- Provides real-time data

- Effective in low-light conditions

Limitations:

- Limited range compared to visible light cameras

- Requires calibration for accurate readings

Recent trends: Thermal imaging is increasingly used in smart home technology to improve security and energy efficiency. Rapid Innovation can help clients integrate thermal imaging solutions into their operations, improving safety and reducing energy costs.
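A common first step in thermal inspection, for example when looking for insulation gaps or hotspots, is to flag pixels that are much warmer than the rest of the scene. A minimal sketch, assuming the camera already delivers calibrated per-pixel temperatures in °C (emissivity correction is out of scope); the frame values and the 5 °C margin above the scene median are hypothetical choices. Using the median rather than a fixed threshold makes the check less sensitive to ambient temperature.

```python
import statistics


def find_hotspots(temps_c, margin_c=5.0):
    """Return (row, col, temp) for pixels more than margin_c above the scene median."""
    median = statistics.median(t for row in temps_c for t in row)
    threshold = median + margin_c
    return [
        (r, c, t)
        for r, row in enumerate(temps_c)
        for c, t in enumerate(row)
        if t > threshold
    ]


# Hypothetical 3x4 thermal frame of a wall section, in °C.
frame = [
    [21.0, 21.5, 22.0, 21.8],
    [21.2, 35.0, 22.1, 21.9],
    [21.1, 21.4, 21.7, 48.5],
]
print(find_hotspots(frame))  # [(1, 1, 35.0), (2, 3, 48.5)]
```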

2.3.3. Acoustic Sensors

Acoustic sensors are devices that detect sound waves and convert them into electrical signals. These sensors are increasingly used in environmental monitoring, industrial applications, and security systems.

How it works: Acoustic sensors capture sound waves through microphones or piezoelectric materials. The captured signal is then analyzed to determine its frequency, amplitude, and other characteristics.

Applications:

- Wildlife monitoring (tracking animal movements)

- Structural health monitoring (detecting cracks or failures)

- Security systems (intrusion detection)

Advantages:

- Can operate in various environmental conditions

- Capable of detecting a wide range of frequencies

- Relatively low cost compared to other sensor types

Limitations:

- Susceptible to background noise

- Limited range for certain applications

Emerging technologies: Combining machine learning with acoustic sensors improves their ability to analyze complex sound patterns and increases accuracy across applications. At Rapid Innovation, we apply AI to optimize acoustic sensor performance, enabling clients to achieve more accurate monitoring and analysis and maximize their return on investment. Combining acoustic sensors with LiDAR is also being explored for richer environmental monitoring.
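Frequency and amplitude analysis can be sketched with a discrete Fourier transform (DFT). The code below uses a naive O(N^2) DFT so that it needs only the standard library (production code would use an FFT) and tests it on a synthetic 1 kHz tone sampled at 8 kHz; the tone is an assumed stand-in for a real microphone or piezoelectric recording.

```python
import cmath
import math


def dominant_frequency(samples, sample_rate_hz):
    """Frequency in Hz of the strongest non-DC DFT bin, using a naive DFT."""
    n = len(samples)
    best_k, best_mag = 0, -1.0
    for k in range(1, n // 2 + 1):  # positive-frequency bins up to Nyquist, skipping DC
        coeff = sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(samples))
        if abs(coeff) > best_mag:
            best_k, best_mag = k, abs(coeff)
    return best_k * sample_rate_hz / n  # bin spacing is sample_rate / n


def rms_amplitude(samples):
    """Root-mean-square amplitude of the signal."""
    return math.sqrt(sum(x * x for x in samples) / len(samples))


SAMPLE_RATE = 8000
tone = [0.5 * math.sin(2 * math.pi * 1000 * t / SAMPLE_RATE) for t in range(64)]
print(dominant_frequency(tone, SAMPLE_RATE))  # 1000.0
print(round(rms_amplitude(tone), 4))          # 0.3536
```

The frequency estimate is only as fine as the bin spacing (here 8000 / 64 = 125 Hz); the test tone was chosen to fall exactly on a bin.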

2.4. Data Processing Pipeline

A data processing pipeline is a series of data processing steps that transform raw data into a usable format. This pipeline is crucial for ensuring that data is clean, organized, and ready for analysis. The stages of a typical data processing pipeline include:

- Data Collection: Gathering data from sources such as databases, APIs, or user inputs. This can include both structured data (like spreadsheets) and unstructured data (like text or images). In an ETL (extract, transform, load) pipeline, whether hand-built or run on a cloud platform such as AWS, this is the extract step.

- Data Cleaning: Removing inaccuracies, duplicates, and irrelevant information. This step is essential to ensure the quality of the data, as poor-quality data can lead to misleading results.

- Data Transformation: Converting data into a format suitable for analysis, for example through normalization, aggregation, or encoding categorical variables. This corresponds to the transform step of an ETL pipeline.

- Data Storage: Storing the processed data in a database or data warehouse. This allows for efficient retrieval and management of data for future analysis.

- Data Analysis: Applying statistical methods or machine learning algorithms to extract insights from the data. This is the step where the actual value of the data is realized.

- Data Visualization: Presenting the analyzed data in a visual format, such as charts or graphs, to make it easier to understand and communicate findings.

- Data Monitoring and Maintenance: Continuously monitoring the data pipeline for performance and making necessary adjustments to ensure it runs smoothly. This is crucial for maintaining an effective data pipeline management system.
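The core stages above can be sketched as small, composable functions. This minimal example covers collection (a hard-coded list standing in for a database or API), cleaning, transformation, and analysis; the record layout and the min-max normalization are illustrative choices, and storage, visualization, and monitoring are omitted.

```python
import statistics


def clean(records):
    """Drop records with a missing value and keep only the first copy of each id."""
    seen, cleaned = set(), []
    for record in records:
        if record.get("value") is None or record["id"] in seen:
            continue
        seen.add(record["id"])
        cleaned.append(record)
    return cleaned


def transform(records):
    """Min-max normalise values to the range [0, 1]."""
    if not records:
        return []
    values = [r["value"] for r in records]
    lo, hi = min(values), max(values)
    span = hi - lo
    return [{**r, "value": (r["value"] - lo) / span if span else 0.0} for r in records]


def analyze(records):
    """Summarise the transformed values."""
    return {"count": len(records), "mean": statistics.fmean(r["value"] for r in records)}


# Collection: stand-in for rows pulled from a database or API.
raw = [
    {"id": 1, "value": 10.0},
    {"id": 2, "value": 30.0},
    {"id": 2, "value": 30.0},   # duplicate
    {"id": 3, "value": None},   # missing reading
    {"id": 4, "value": 20.0},
]
result = analyze(transform(clean(raw)))
print(result)  # {'count': 3, 'mean': 0.5}
```

Keeping each stage a pure function makes it easy to test stages in isolation and to swap one out, for example replacing min-max scaling with standardization, without touching the rest of the pipeline.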

The effectiveness of a data processing pipeline directly affects the quality of the insights derived from data. Organizations that implement robust data processing pipelines can make more informed decisions and drive better business outcomes. At Rapid Innovation, we design and implement customized data processing pipelines, including Python ETL pipelines and data ingestion pipelines, that align with your business objectives, helping you achieve greater ROI through data-driven insights.

3. Industry-Specific Applications

Industry-specific applications of data processing and analysis are becoming increasingly important as businesses seek to leverage data for competitive advantage. Different industries utilize data in unique ways to address their specific challenges and opportunities. Some key applications include:

- Healthcare: Analyzing patient data to improve treatment outcomes and operational efficiency.

- Finance: Using data analytics for risk assessment, fraud detection, and investment strategies.

- Retail: Personalizing customer experiences through data-driven marketing and inventory management.

- Manufacturing: Implementing predictive maintenance and quality control through data analysis.

- Telecommunications: Enhancing customer service and network optimization using data insights.

These applications demonstrate the versatility of data processing across various sectors, highlighting the importance of tailored solutions to meet industry-specific needs.

3.1. Automotive Damage Assessment

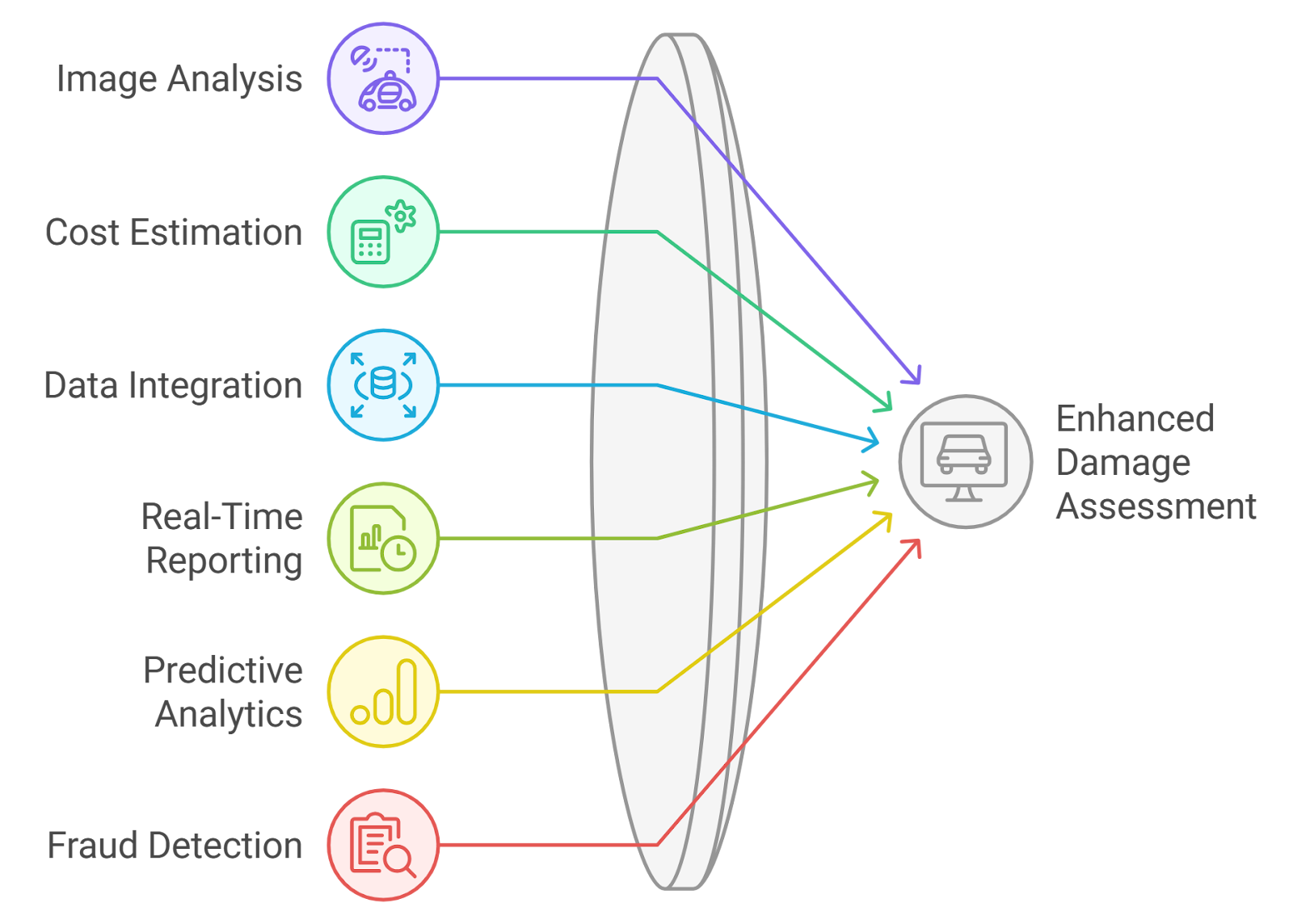

Automotive damage assessment is a critical application of data processing in the automotive industry. This process involves evaluating the extent of damage to vehicles after accidents or incidents. The integration of technology and data analytics has transformed how damage assessments are conducted. Key aspects include:

- Image Analysis: Utilizing computer vision and machine learning algorithms to analyze images of damaged vehicles. This technology can identify and quantify visible damage faster and more consistently than manual inspection alone.

- Cost Estimation: Automating the estimation of repair costs based on the assessed damage, which can streamline the claims process for insurance companies and improve customer satisfaction.

- Data Integration: Combining data from various sources, such as repair history, parts availability, and labor costs, to provide a comprehensive assessment.

- Real-Time Reporting: Offering instant reports to stakeholders, including insurance adjusters and repair shops, which can expedite decision-making.

- Predictive Analytics: Using historical data to predict future repair needs and costs, helping businesses manage resources more effectively.

- Fraud Detection: Analyzing patterns in damage claims to identify potential fraud, protecting insurance companies from losses.
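As a rough illustration of automated cost estimation, the sketch below turns damage detections (as an image model might report them) into a repair estimate. The `Detection` type, the price and labor tables, the labor rate, and the repair-versus-replace threshold are all hypothetical placeholders, not real industry figures.

```python
from dataclasses import dataclass

# Hypothetical price and labor tables; real values would come from parts
# catalogs and regional labor rates (the "Data Integration" step).
PART_PRICES = {"front_bumper": 450.0, "hood": 700.0, "left_door": 900.0}
REPAIR_HOURS = {"scratch": 1.5, "dent": 3.0}
REPLACE_HOURS = {"front_bumper": 2.0, "hood": 2.5, "left_door": 4.0}
LABOR_RATE = 60.0          # assumed currency units per hour
REPLACE_THRESHOLD = 0.6    # assumed severity at or above which a part is replaced


@dataclass
class Detection:
    """One damaged region reported by a (hypothetical) image-analysis model."""
    part: str
    damage_type: str
    severity: float  # 0.0 (cosmetic) to 1.0 (destroyed)


def estimate_line(d: Detection) -> tuple[str, float]:
    """Return (action, cost) for a single detection."""
    if d.severity >= REPLACE_THRESHOLD:
        return "replace", PART_PRICES[d.part] + REPLACE_HOURS[d.part] * LABOR_RATE
    # Scale repair labor with severity, assuming minor damage still takes some time.
    hours = REPAIR_HOURS[d.damage_type] * max(d.severity, 0.25)
    return "repair", hours * LABOR_RATE


def estimate(detections: list[Detection]) -> float:
    total = 0.0
    for d in detections:
        action, cost = estimate_line(d)
        print(f"{d.part:<13} {d.damage_type:<8} {action:<8} {cost:8.2f}")
        total += cost
    return round(total, 2)


claim = [
    Detection("front_bumper", "dent", 0.8),
    Detection("hood", "scratch", 0.3),
]
print("Estimated total:", estimate(claim))
```

A production estimator would pull these tables from parts and labor databases rather than hard-coding them.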

The use of data processing in automotive damage assessment not only enhances accuracy but also improves efficiency in the claims process. As technology continues to evolve, the automotive industry will likely see further advancements in damage assessment methodologies, leading to better outcomes for all parties involved. Rapid Innovation is committed to leveraging these advancements to help our clients optimize their operations and achieve significant returns on their investments.

3.1.1. Collision Analysis

Collision analysis is a critical process in understanding the dynamics of vehicle collisions. It involves examining the circumstances surrounding a vehicle collision to determine the cause and impact of the incident. This analysis is essential for insurance claims, legal proceedings, and improving vehicle safety standards. Key components of collision analysis include:

- Data Collection: Gathering data from the accident scene, including photographs, witness statements, and police reports.

- Vehicle Dynamics: Analyzing the speed, direction, and impact angles of the vehicles involved.

- Crash Reconstruction: Utilizing computer simulations and physical evidence to recreate the accident scenario, often enhanced by AI algorithms that can predict outcomes based on various parameters.

- Injury Assessment: Evaluating the injuries sustained by occupants to understand the collision's severity, which can be further analyzed using AI-driven predictive models to assess long-term impacts.

- Expert Testimony: Engaging accident reconstruction experts to provide insights in legal cases, supported by data analytics to strengthen arguments.

Collision analysis not only helps in determining liability but also aids in identifying trends that can lead to improved road safety measures, ultimately contributing to a reduction in accident rates and enhancing overall public safety.
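Vehicle dynamics analysis often starts from physical evidence such as skid marks. One widely used relation estimates the minimum speed at the start of a skid that ends at rest: `v = sqrt(2 * g * d * (mu + G))`, where `d` is the skid distance, `mu` the tire-road friction coefficient, and `G` the road grade as a decimal. The sketch below assumes constant friction over the whole skid; the friction value in the example is illustrative only.

```python
import math

G_ACCEL = 9.81  # gravitational acceleration, m/s^2


def speed_from_skid(distance_m: float, friction: float, grade: float = 0.0) -> float:
    """Minimum speed (m/s) at the start of a skid that ends at rest.

    grade is the road slope as a decimal (positive uphill). Assumes the
    friction coefficient is constant for the full skid distance.
    """
    if distance_m < 0 or friction + grade <= 0:
        raise ValueError("distance must be >= 0 and friction + grade must be > 0")
    return math.sqrt(2 * G_ACCEL * distance_m * (friction + grade))


v = speed_from_skid(distance_m=20.0, friction=0.7)
print(f"{v:.1f} m/s = {v * 3.6:.0f} km/h")
```

Because skids rarely capture all braking (for example, ABS leaves faint marks), results from this relation are usually treated as a lower bound.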

3.1.2. Paint and Surface Damage

Paint and surface damage assessment is crucial in vehicle repair and restoration. This evaluation helps in determining the extent of damage and the necessary steps for repair. Understanding the types of paint and surface damage can also provide insights into the accident's severity. The assessment includes:

- Types of Damage: Identifying scratches, dents, and chips in the paintwork.

- Paint Layer Examination: Analyzing the layers of paint to determine if the damage penetrated to the metal.

- Color Matching: Ensuring that any repairs match the original paint color for aesthetic consistency, potentially utilizing AI tools for precise color matching.

- Corrosion Risk: Assessing the potential for rust and corrosion due to exposed metal surfaces.

- Repair Techniques: Exploring options such as touch-up paint, repainting, or professional detailing.

Proper assessment of paint and surface damage is essential for maintaining the vehicle's value and appearance, as well as ensuring long-term durability.
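Color matching is commonly quantified in the CIELAB color space. The sketch below uses the simple CIE76 formula, ΔE = sqrt((ΔL*)² + (Δa*)² + (Δb*)²), and treats a difference of about 2.3 (an often-cited just-noticeable difference) as acceptable. That tolerance and the sample readings are assumptions, and many refinish systems use newer formulas such as CIEDE2000 instead.

```python
import math

# Often-cited just-noticeable difference for CIE76; real refinish tolerances
# vary by manufacturer, so this value is an assumption.
JND = 2.3


def delta_e_cie76(lab1, lab2):
    """CIE76 color difference: Euclidean distance between (L*, a*, b*) triples."""
    return math.dist(lab1, lab2)


def is_acceptable_match(original, refinish, tolerance=JND):
    return delta_e_cie76(original, refinish) <= tolerance


# Hypothetical spectrophotometer readings for the undamaged panel and the new mix.
original_panel = (52.0, 42.5, 20.1)
refinish_mix = (53.1, 41.0, 21.0)
print(round(delta_e_cie76(original_panel, refinish_mix), 2),
      is_acceptable_match(original_panel, refinish_mix))
```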

3.1.3. Structural Integrity Evaluation

Structural integrity evaluation is a vital aspect of vehicle safety assessment following a vehicle collision. This process involves examining the vehicle's frame and body to ensure it can withstand future impacts and provide adequate protection to occupants. The evaluation includes:

- Frame Inspection: Checking for bends, cracks, or misalignments in the vehicle's frame.

- Material Analysis: Evaluating the materials used in the vehicle's construction for strength and durability, potentially leveraging blockchain technology for traceability of material quality.

- Safety Features: Assessing the functionality of safety features such as airbags and crumple zones.

- Repair Feasibility: Determining whether the vehicle can be safely repaired or if it is a total loss.

- Regulatory Compliance: Ensuring that any repairs meet safety standards set by regulatory bodies.

A thorough structural integrity evaluation is essential for ensuring that a vehicle remains safe for operation after a vehicle collision, protecting both the driver and passengers. By integrating AI and blockchain technologies, Rapid Innovation can enhance the accuracy and reliability of these assessments, ultimately leading to greater ROI for clients in the automotive industry.
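For the repair-feasibility decision, the economic side is often expressed as a rule comparing repair cost with the vehicle's actual cash value (ACV). The sketch supports two common styles: a percentage threshold, and the "total loss formula" (repair cost plus salvage value versus ACV). Which rule applies, and the threshold value, vary by jurisdiction and insurer, so both parameters here are assumptions. A vehicle whose structure cannot be restored to safety standards may be a total loss regardless of cost.

```python
def is_total_loss(repair_cost, actual_cash_value, salvage_value=0.0, threshold=None):
    """Return True if repairing the vehicle is not economical.

    With threshold (e.g. 0.75): repair_cost >= threshold * actual_cash_value.
    Without it: repair_cost + salvage_value >= actual_cash_value.
    """
    if actual_cash_value <= 0:
        raise ValueError("actual_cash_value must be positive")
    if threshold is not None:
        return repair_cost >= threshold * actual_cash_value
    return repair_cost + salvage_value >= actual_cash_value


# Hypothetical claim: 9,000 in repairs on a vehicle worth 12,000.
print(is_total_loss(9_000, 12_000, salvage_value=3_500))  # formula rule
print(is_total_loss(9_000, 12_000, threshold=0.8))        # percentage rule
```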

3.2. Property Damage Evaluation

Property damage evaluation is a critical process that assesses the extent of damage to a property, often following events such as natural disasters, accidents, or other unforeseen incidents. This evaluation is essential for insurance claims, restoration efforts, and ensuring the safety of occupants. The evaluation process typically involves two main components: natural disaster assessment and building structural analysis.

3.2.1. Natural Disaster Assessment

Natural disaster assessment focuses on evaluating the impact of natural events such as hurricanes, floods, earthquakes, and wildfires on properties. This assessment is crucial for understanding the extent of damage and determining the necessary recovery steps.

- Types of Natural Disasters:
  - Hurricanes can cause wind damage and flooding.
  - Earthquakes may lead to structural failures.
  - Floods can result in water damage and mold growth.

- Assessment Process:
  - Initial inspections are conducted to identify visible damage.
  - The use of drones and satellite imagery can provide a broader view of affected areas, enhancing the accuracy of assessments.
  - Detailed reports are generated, documenting the type and extent of damage.

- Data Collection:
  - Gathering data on the disaster's intensity and duration.
  - Utilizing historical data to predict potential future risks.
  - Engaging with local authorities and emergency services for accurate information.

- Impact on Property Value:
  - Properties in disaster-prone areas may experience a decrease in market value.
  - Insurance premiums may rise due to increased risk assessments.
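When historical records are used to estimate future risk, a common starting point is the return period T of an event: a "100-year flood" has an annual probability of 1/T. Assuming independent years with a constant annual probability, the chance of at least one such event in n years is 1 - (1 - 1/T)^n. A minimal sketch:

```python
def prob_at_least_one(return_period_years: float, horizon_years: int) -> float:
    """Probability of at least one event with the given return period within
    the horizon, assuming independent years and a constant annual probability."""
    annual_p = 1.0 / return_period_years
    return 1.0 - (1.0 - annual_p) ** horizon_years


for T in (10, 100, 500):
    print(f"{T}-year event over 30 years: {prob_at_least_one(T, 30):.0%}")
```

Under these assumptions, a 100-year flood has roughly a 26% chance of occurring at least once in 30 years, which is why such events weigh on long-term property value and insurance pricing.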

3.2.2. Building Structural Analysis

Building structural analysis is a systematic evaluation of a building's integrity and safety following damage. This analysis is essential to ensure that the structure can withstand future stresses and is safe for occupancy.

- Key Components of Structural Analysis:
  - Foundation Assessment: Evaluating the foundation for cracks, settling, or shifting.
  - Load-Bearing Walls: Inspecting walls that support the structure for any signs of damage.
  - Roof Integrity: Checking for leaks, sagging, or missing shingles that could compromise the building.

- Methods of Analysis:
  - Visual inspections by qualified engineers or inspectors.
  - Non-destructive testing methods, such as ultrasonic testing, to assess material integrity.
  - Load testing to determine if the structure can support expected loads.

- Safety Considerations:
  - Identifying hazards that could pose risks to occupants.
  - Ensuring compliance with local building codes and regulations.
  - Recommending repairs or reinforcements to enhance structural integrity.

- Documentation and Reporting:
  - Creating detailed reports that outline findings and recommendations.
  - Providing documentation for insurance claims and legal purposes.
  - Offering guidance on necessary repairs and timelines for restoration.
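One way to summarize analysis and load-testing results is the demand-to-capacity ratio (DCR) of each structural member: a ratio above 1.0 means the expected load exceeds what the member can carry. The sketch below ranks members by DCR; the member names, kN values, and the 0.9 review threshold are hypothetical, and a real assessment relies on a qualified engineer applying the governing building code.

```python
from dataclasses import dataclass


@dataclass
class Member:
    name: str
    demand_kn: float    # expected load effect from analysis or load testing
    capacity_kn: float  # assessed capacity, reduced for any observed damage

    @property
    def dcr(self) -> float:
        return self.demand_kn / self.capacity_kn


def rank_members(members, limit=1.0, review=0.9):
    """Return (name, DCR, status) tuples, worst member first."""
    report = []
    for m in sorted(members, key=lambda m: m.dcr, reverse=True):
        if m.dcr > limit:
            status = "FAIL"
        elif m.dcr >= review:
            status = "REVIEW"
        else:
            status = "OK"
        report.append((m.name, round(m.dcr, 2), status))
    return report


members = [
    Member("footing F1", 600.0, 1000.0),
    Member("load-bearing wall W2", 410.0, 380.0),
    Member("roof truss T3", 95.0, 100.0),
]
for row in rank_members(members):
    print(row)
```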

In conclusion, property damage evaluation through natural disaster assessment and building structural analysis is vital for ensuring safety, facilitating recovery, and maintaining property value. Proper evaluation helps property owners make informed decisions about repairs and future risk management. At Rapid Innovation, we leverage advanced AI technologies and blockchain solutions to streamline the property damage evaluation process, ensuring accuracy and efficiency, ultimately leading to greater ROI for our clients.

3.2.3. Interior Damage Documentation

Interior damage documentation is a critical process in assessing the extent of damage within a structure, particularly after events such as natural disasters, fires, or vandalism. This documentation serves multiple purposes, including insurance claims, legal proceedings, and restoration planning.

- Visual Evidence: Photographs and videos should be taken to capture the condition of the interior spaces. This includes:
  - Walls, ceilings, and floors
  - Fixtures and fittings
  - Any visible mold or water damage

- Detailed Descriptions: Each documented area should include:
  - A written description of the damage
  - The location within the building
  - The estimated size and severity of the damage

- Inventory of Damaged Items: Create a list of all damaged items, including:
  - Furniture
  - Appliances
  - Personal belongings

- Professional Assessments: In some cases, it may be necessary to involve professionals such as:
  - Structural engineers
  - Restoration specialists
  - Insurance adjusters

- Documentation Tools: Utilize various tools and technologies to enhance documentation, such as:
  - Drones for aerial views
  - 3D scanning for detailed modeling
  - Mobile apps for real-time reporting

Proper interior damage documentation not only aids in recovery efforts but also ensures that all necessary information is available for future reference. Rapid Innovation can assist in this process by leveraging AI-driven image recognition and data analysis tools to streamline documentation, ensuring accuracy and efficiency, ultimately leading to a greater return on investment (ROI) for clients.
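A consistent data structure makes the visual evidence, descriptions, and inventory above easier to store and share with adjusters. The sketch below uses Python dataclasses and exports to JSON; the class names, fields, and severity scale are hypothetical choices, not an industry schema.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class DamageEntry:
    room: str
    element: str          # e.g. wall, ceiling, floor, fixture
    description: str
    area_m2: float        # estimated size of the damaged area
    severity: str         # hypothetical scale: minor / moderate / severe
    photos: list[str] = field(default_factory=list)


@dataclass
class DamagedItem:
    name: str
    category: str         # furniture, appliance, or personal belonging
    est_value: float


@dataclass
class InteriorReport:
    property_id: str
    entries: list[DamageEntry] = field(default_factory=list)
    inventory: list[DamagedItem] = field(default_factory=list)

    def total_item_value(self) -> float:
        return sum(item.est_value for item in self.inventory)

    def to_json(self) -> str:
        # asdict() recurses into nested dataclasses and lists.
        return json.dumps(asdict(self), indent=2)


report = InteriorReport("PROP-001")
report.entries.append(DamageEntry("kitchen", "ceiling", "Water stain with sagging drywall",
                                  2.5, "moderate", ["IMG_0101.jpg"]))
report.inventory += [DamagedItem("sofa", "furniture", 1200.0),
                     DamagedItem("microwave", "appliance", 250.0)]
print(report.total_item_value())
print(report.to_json())
```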

3.3. Infrastructure Assessment

Infrastructure assessment is essential for maintaining the safety and functionality of public and private structures. This process involves evaluating the condition of various infrastructure components to identify any necessary repairs or upgrades.

- Types of Infrastructure: Key areas of focus include:
  - Roads and highways
  - Bridges and tunnels
  - Utilities such as water, gas, and electricity

- Assessment Techniques: Various methods can be employed to assess infrastructure, including:
  - Visual inspections
  - Non-destructive testing
  - Load testing for structural integrity

- Data Collection: Collecting data is crucial for a comprehensive assessment. This can involve:
  - Surveys and questionnaires
  - Historical data analysis
  - Geographic Information Systems (GIS) for mapping

- Prioritization of Repairs: After assessment, prioritize repairs based on:
  - Safety concerns
  - Cost-effectiveness
  - Impact on the community

- Regulatory Compliance: Ensure that all assessments meet local, state, and federal regulations, which may include:
  - Building codes
  - Environmental regulations
  - Safety standards

Regular infrastructure assessments help prevent catastrophic failures and ensure that communities remain safe and functional. Rapid Innovation employs blockchain technology to create immutable records of assessments, enhancing transparency and trust among stakeholders, which can lead to improved funding opportunities and project approvals.
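The repair prioritization step is often implemented as a weighted score across criteria. The sketch below scores assets on the three criteria named above (each rated 0 to 10, higher meaning more urgent or more favorable); the weights, asset IDs, and ratings are hypothetical and would normally come from agency policy.

```python
# Hypothetical weights; an agency would set these through its own policy.
WEIGHTS = {"safety": 0.5, "cost_effectiveness": 0.3, "community_impact": 0.2}

assets = [
    {"id": "Bridge-12", "safety": 9, "cost_effectiveness": 5, "community_impact": 8},
    {"id": "Road-A7", "safety": 4, "cost_effectiveness": 8, "community_impact": 6},
    {"id": "WaterMain-3", "safety": 7, "cost_effectiveness": 7, "community_impact": 9},
]


def priority_score(asset):
    """Weighted sum of the criterion ratings."""
    return sum(weight * asset[criterion] for criterion, weight in WEIGHTS.items())


ranked = sorted(assets, key=priority_score, reverse=True)
for asset in ranked:
    print(f"{asset['id']:<12} {priority_score(asset):.1f}")
```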

3.3.1. Bridge and Road Inspection

Bridge and road inspections are vital components of infrastructure assessment, focusing specifically on the safety and usability of these critical transportation networks. Regular inspections help identify potential issues before they escalate into serious problems.

- Inspection Frequency: The frequency of inspections can vary based on:
  - The age of the structure
  - Traffic volume
  - Environmental conditions

- Inspection Methods: Common methods for inspecting bridges and roads include:
  - Visual inspections by trained personnel
  - Use of drones for hard-to-reach areas
  - Advanced technologies like ultrasonic testing for material integrity

- Key Inspection Elements: Inspectors should focus on several key areas, such as:
  - Structural components (beams, girders, and supports)
  - Surface conditions (cracks, potholes, and erosion)
  - Safety features (guardrails, signage, and lighting)

- Documentation and Reporting: After inspections, it is essential to document findings thoroughly:
  - Create detailed reports outlining the condition of the structure
  - Include photographs and diagrams for clarity
  - Recommend necessary repairs or maintenance

- Long-term Planning: Use inspection data to inform long-term infrastructure planning, including:
  - Budgeting for repairs and upgrades
  - Scheduling future inspections
  - Implementing preventative maintenance programs

Regular bridge and road inspections are crucial for ensuring the safety of travelers and the longevity of infrastructure. By integrating AI analytics and blockchain for data integrity, Rapid Innovation enhances the inspection process, ensuring timely interventions and maximizing ROI for infrastructure projects.
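Inspection frequency rules can be encoded so that scheduling follows the factors listed above. The sketch assumes a 24-month baseline, the routine interval commonly used for highway bridges in the United States, and then applies hypothetical reductions for age, traffic, and environment; the cut-off values are illustrative only.

```python
def inspection_interval_months(age_years, daily_traffic, harsh_environment,
                               base_months=24, min_months=6):
    """Hypothetical scheduling rule: shorten a baseline interval for risk factors."""
    interval = base_months
    if age_years >= 50:          # older structures: halve the interval
        interval //= 2
    if daily_traffic >= 50_000:  # heavy traffic: inspect 6 months sooner
        interval -= 6
    if harsh_environment:        # e.g. de-icing salt or coastal exposure
        interval -= 6
    return max(interval, min_months)


for name, age, traffic, harsh in [("B-101", 10, 5_000, False),
                                  ("B-202", 20, 60_000, False),
                                  ("B-303", 60, 80_000, True)]:
    print(name, inspection_interval_months(age, traffic, harsh), "months")
```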

3.3.2. Pipeline Damage Detection

Pipeline damage detection is crucial for maintaining the integrity and safety of pipeline systems. These systems transport essential resources like oil, gas, and water, and any damage can lead to significant environmental hazards and economic losses. Advanced technologies are employed to monitor pipelines continuously. Sensors and IoT devices are strategically placed along pipelines to detect leaks or structural weaknesses. Data analytics plays a vital role in interpreting sensor data to identify potential issues before they escalate. Machine learning algorithms can analyze historical data to predict failure points and optimize maintenance schedules. Regular inspections using drones and robotic systems enhance the ability to detect damage in hard-to-reach areas. Real-time monitoring systems can alert operators immediately when anomalies are detected, allowing for swift action.

The integration of AI in pipeline damage detection not only improves accuracy but also reduces operational costs. By leveraging predictive maintenance strategies, companies can minimize downtime and extend the lifespan of their infrastructure. At Rapid Innovation, we specialize in implementing these advanced AI solutions, ensuring that our clients achieve greater ROI through enhanced operational efficiency and reduced risk.
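A simple form of the sensor analytics described above is a rolling z-score: each new reading is compared with the mean and standard deviation of the preceding window, and large deviations raise an alert. The sketch below applies it to hypothetical pressure readings; the window size and threshold are assumptions, and real leak-detection systems typically combine several methods.

```python
from collections import deque
from statistics import mean, stdev


def detect_anomalies(readings, window=5, threshold=3.0):
    """Flag (index, value) pairs that deviate from the trailing window by
    more than `threshold` standard deviations (rolling z-score)."""
    history = deque(maxlen=window)
    alerts = []
    for i, value in enumerate(readings):
        if len(history) == window:
            mu, sigma = mean(history), stdev(history)
            if sigma > 0 and abs(value - mu) / sigma > threshold:
                alerts.append((i, value))
        history.append(value)
    return alerts


# Hypothetical pressure readings (bar) from one sensor; index 7 is a sudden drop.
pressure = [50.1, 50.3, 49.9, 50.2, 50.0, 50.1, 50.2, 46.8, 50.1, 50.0]
print(detect_anomalies(pressure))
```

Note that the anomalous value enters the window and temporarily widens it; a production system might exclude flagged readings from the baseline.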

3.3.3. Power Grid Infrastructure

Power grid infrastructure is the backbone of energy distribution, ensuring that electricity reaches homes and businesses efficiently. As the demand for energy increases, the need for a robust and resilient power grid becomes more critical. Smart grid technology enhances the efficiency of power distribution. Real-time data collection allows for better demand forecasting and load balancing. The integration of renewable energy sources, such as solar and wind, requires advanced grid management systems. Cybersecurity measures are essential to protect the grid from potential threats and attacks. Energy storage solutions, like batteries, help manage supply and demand fluctuations. Automated systems can quickly isolate faults, reducing the duration of outages.

Investing in modernizing power grid infrastructure is essential for sustainability and reliability. The transition to smart grids not only improves operational efficiency but also supports the integration of clean energy technologies. Rapid Innovation offers consulting and development services to help clients navigate this transition, ensuring they maximize their investments in smart grid technologies.
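Demand forecasting for load balancing can start from something as simple as exponential smoothing, where each new observation updates a running level. The sketch below forecasts the next hour's load and flags when it approaches capacity; the readings, smoothing factor, capacity, and 90% margin are hypothetical, and utilities generally use richer models that account for weather and seasonality.

```python
CAPACITY_MW = 380.0   # hypothetical feeder capacity
MARGIN = 0.9          # act when the forecast exceeds 90% of capacity (assumed)


def exponential_smoothing(series, alpha=0.5):
    """Simple exponential smoothing; the final level is the next-step forecast."""
    if not series:
        raise ValueError("series must not be empty")
    level = series[0]
    for value in series[1:]:
        level = alpha * value + (1 - alpha) * level
    return level


hourly_load_mw = [310.0, 325.0, 340.0, 360.0, 355.0]
forecast = exponential_smoothing(hourly_load_mw)
needs_action = forecast > MARGIN * CAPACITY_MW
print(f"Forecast: {forecast:.1f} MW, dispatch storage: {needs_action}")
```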

4. AI Agent Architecture

AI agent architecture refers to the design and structure of systems that utilize artificial intelligence to perform tasks autonomously. This architecture is fundamental in developing intelligent systems capable of learning, reasoning, and decision-making. Modular design allows for flexibility and scalability in AI applications. Components typically include perception, reasoning, learning, and action modules. Perception modules gather data from the environment through sensors and cameras. Reasoning modules analyze the data to make informed decisions based on predefined rules or learned experiences. Learning modules enable the system to adapt and improve over time through machine learning techniques. Action modules execute decisions, interacting with the environment or other systems.

The effectiveness of AI agent architecture lies in its ability to process vast amounts of data and derive actionable insights. This architecture is widely used in various applications, including robotics, autonomous vehicles, and smart home systems. By optimizing AI agent architecture, developers can create more efficient and capable intelligent systems that enhance productivity and innovation. At Rapid Innovation, we leverage our expertise in AI agent architecture to deliver tailored solutions that drive business success for our clients.
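The perception, reasoning, learning, and action modules can be sketched as four small classes wired together by an agent loop. The example below models a hypothetical smart-home thermostat; the class names, the setpoint logic, and the learning rule are illustrative assumptions rather than a reference design.

```python
class Perception:
    """Perception module: reads the current temperature from the environment."""

    def sense(self, env: dict) -> float:
        return env["temperature"]


class Reasoning:
    """Reasoning module: rule-based choice around the current setpoint."""

    def decide(self, observation: float, setpoint: float, band: float = 1.0) -> str:
        if observation > setpoint + band:
            return "cool"
        if observation < setpoint - band:
            return "heat"
        return "idle"


class Learning:
    """Learning module: moves the setpoint toward user feedback."""

    def __init__(self, setpoint: float, rate: float = 0.5):
        self.setpoint = setpoint
        self.rate = rate

    def update(self, preferred: float) -> None:
        self.setpoint += self.rate * (preferred - self.setpoint)


class Action:
    """Action module: applies the decision to the (simulated) environment."""

    EFFECTS = {"cool": -1.0, "heat": 1.0, "idle": 0.0}

    def act(self, env: dict, decision: str) -> None:
        env["temperature"] += self.EFFECTS[decision]


class Agent:
    def __init__(self, setpoint: float):
        self.perception = Perception()
        self.reasoning = Reasoning()
        self.learning = Learning(setpoint)
        self.action = Action()

    def step(self, env: dict, feedback=None) -> str:
        """One perceive-reason-act cycle, learning first if feedback is given."""
        if feedback is not None:
            self.learning.update(feedback)
        observation = self.perception.sense(env)
        decision = self.reasoning.decide(observation, self.learning.setpoint)
        self.action.act(env, decision)
        return decision


env = {"temperature": 25.0}
agent = Agent(setpoint=21.0)
print([agent.step(env) for _ in range(4)], env["temperature"])
```

Keeping each module behind its own small interface is what makes the design modular: the rule-based `Reasoning` class could be replaced by a trained model without changing the loop.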

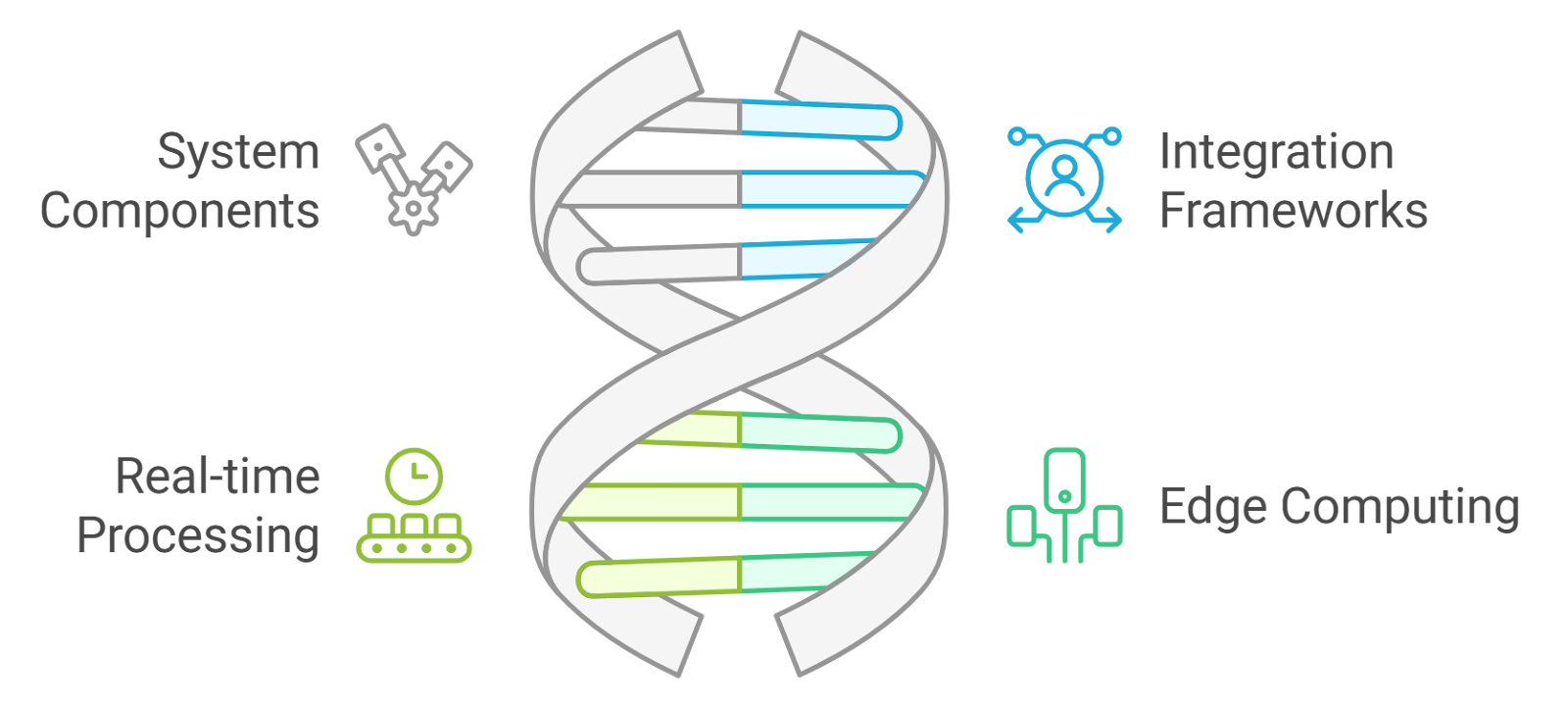

4.1. System Components

In any robust system, especially one that handles data processing and analytics, the architecture is typically composed of several key components. Understanding these components is crucial for optimizing performance and ensuring seamless operation. The primary components covered here are the Data Collection Module, the Analysis Engine, and the Reporting System.

4.1.1. Data Collection Module

The Data Collection Module is the foundational element of any data-driven system. It is responsible for gathering data from various sources, ensuring that the information is accurate, timely, and relevant. This module plays a critical role in the overall effectiveness of the system.

- Data Sources: The module can collect data from multiple sources, including:
  - IoT devices
  - Social media platforms
  - Databases
  - APIs
  - User inputs

- Data Types: It handles various types of data, such as:
  - Structured data (e.g., databases)
  - Unstructured data (e.g., text, images)
  - Semi-structured data (e.g., JSON, XML)

- Data Quality: Ensuring data quality is paramount. The module typically includes:
  - Validation checks to ensure accuracy
  - Cleaning processes to remove duplicates and errors
  - Standardization to maintain consistency across datasets

- Real-time vs. Batch Processing: The module can operate in different modes:
  - Real-time data collection for immediate analysis, feeding real-time processing and integration downstream
  - Batch processing for periodic data aggregation

- Scalability: As data volumes grow, the module must be scalable to handle increased loads without compromising performance.

- Security: Data collection must adhere to security protocols to protect sensitive information, including:
  - Encryption during transmission
  - Access controls to limit data exposure
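The validation, cleaning, and standardization steps can be sketched for semi-structured JSON readings as follows. The field names, the required-field schema, and the rule that two readings from the same device at the same UTC instant are duplicates are all hypothetical; timestamps are assumed to carry an explicit UTC offset.

```python
import json
from datetime import datetime, timezone

# Hypothetical schema: field name -> accepted type(s).
REQUIRED_FIELDS = {"device_id": str, "timestamp": str, "value": (int, float)}


def validate(record: dict) -> list[str]:
    """Return a list of validation errors (empty if the record is valid)."""
    errors = []
    for name, expected in REQUIRED_FIELDS.items():
        if name not in record:
            errors.append(f"missing {name}")
        elif not isinstance(record[name], expected):
            errors.append(f"bad type for {name}")
    return errors


def standardize(record: dict) -> dict:
    """Normalize device IDs and convert offset-aware ISO-8601 timestamps to UTC."""
    ts = datetime.fromisoformat(record["timestamp"]).astimezone(timezone.utc)
    return {
        "device_id": record["device_id"].strip().upper(),
        "timestamp": ts.isoformat(),
        "value": float(record["value"]),
    }


def collect(raw_lines):
    seen, accepted, rejected = set(), [], []
    for line in raw_lines:
        record = json.loads(line)
        errors = validate(record)
        if errors:
            rejected.append((record, errors))
            continue
        clean = standardize(record)
        key = (clean["device_id"], clean["timestamp"])
        if key in seen:
            continue  # duplicate reading after standardization
        seen.add(key)
        accepted.append(clean)
    return accepted, rejected


raw_lines = [
    '{"device_id": " cam-01", "timestamp": "2024-05-01T10:00:00+02:00", "value": 21}',
    '{"device_id": "CAM-01", "timestamp": "2024-05-01T08:00:00+00:00", "value": 21.0}',
    '{"device_id": "cam-02", "value": "high"}',
]
accepted, rejected = collect(raw_lines)
print(len(accepted), "accepted,", len(rejected), "rejected")
```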

4.1.2. Analysis Engine

The Analysis Engine is the core component that processes the collected data, transforming it into actionable insights. This engine employs various algorithms and methodologies to analyze data, making it a critical part of any data analytics system.

- Data Processing: The engine processes data through:
  - Statistical analysis to identify trends and patterns
  - Machine learning algorithms for predictive analytics
  - Natural language processing for text analysis

- Visualization: The engine often includes tools for data visualization, allowing users to:
  - Create dashboards
  - Generate reports
  - Use graphs and charts to represent data findings

- Performance Optimization: To ensure efficient processing, the engine may utilize:
  - Parallel processing to handle large datasets
  - Caching mechanisms to speed up repeated queries

- Integration: The Analysis Engine must integrate seamlessly with other system components, including:
  - Data storage solutions (e.g., databases, data lakes)
  - User interfaces for end-user interaction

- Feedback Loop: A well-designed engine incorporates a feedback mechanism to:
  - Continuously improve algorithms based on new data
  - Adjust models to enhance accuracy over time

- Scalability and Flexibility: The engine should be scalable to accommodate growing data needs and flexible enough to adopt new analytical methods or technologies, working with both analytical (OLAP) and transactional (OLTP) systems.
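To illustrate trend analysis and caching together, the sketch below computes a least-squares trend for each region and memoizes the result with `functools.lru_cache`, so repeated dashboard queries skip recomputation. The region data and function names are hypothetical.

```python
from functools import lru_cache
from statistics import fmean

# Hypothetical monthly claim counts per region (oldest first).
DATA = {
    "north": (120, 132, 128, 141, 150, 158),
    "south": (90, 88, 85, 87, 80, 78),
}


def least_squares_slope(values):
    """Slope of the ordinary least-squares line through (index, value) points."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two points")
    x_bar = (n - 1) / 2
    y_bar = fmean(values)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in enumerate(values))
    sxx = sum((x - x_bar) ** 2 for x in range(n))
    return sxy / sxx


@lru_cache(maxsize=128)
def trend(region: str) -> float:
    """Cached per-region trend (change in claims per month)."""
    return least_squares_slope(DATA[region])


for region in DATA:
    print(region, round(trend(region), 2))
```

When new data arrives through the feedback loop, the cache must be invalidated (for example with `trend.cache_clear()`) so that results reflect the updated data.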

In summary, the Data Collection Module and the Analysis Engine are essential components of a data-driven system. Together, they ensure that data is accurately collected, processed, and transformed into valuable insights, driving informed decision-making and strategic planning. At Rapid Innovation, we leverage these components to help our clients achieve greater ROI by implementing tailored solutions that enhance data integrity, optimize analytics, and ultimately support their business objectives, including data migration from legacy systems and data warehouse ETL (extract, transform, load) processes.

4.1.3. Reporting System

A robust reporting system is essential for organizations to make informed decisions based on data analysis. It serves as a bridge between raw data and actionable insights. An effective reporting system not only enhances transparency but also fosters accountability within the organization. By leveraging advanced analytics, businesses can uncover hidden insights that drive strategic initiatives and ultimately achieve greater ROI.

- Provides comprehensive data visualization tools to present information clearly, enabling stakeholders to grasp complex data quickly.

- Enables users to generate customized reports tailored to specific needs, ensuring that decision-makers have the most relevant information at their fingertips.

- Supports various formats for report generation, including PDF, Excel, and web-based dashboards, allowing for flexibility in data presentation.

- Facilitates real-time data access, allowing for timely decision-making that can significantly impact business outcomes.

- Incorporates automated reporting features, like those in dedicated automated reporting systems and safety incident management software, to reduce manual effort and errors and streamline the reporting process.

- Ensures data accuracy and integrity through validation processes, which is crucial for maintaining trust in the data-driven decision-making process.

- Offers historical data analysis to identify trends and patterns over time, empowering organizations to make proactive strategic decisions, similar to the capabilities of HR reporting software and Epic reporting tools.
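Customized, Excel-friendly reports and trend summaries can be produced with the standard library alone. The sketch aggregates hypothetical claim records by month and renders them as CSV; the record fields and function names are assumptions, and PDF or dashboard output would need additional libraries.

```python
import csv
import io
from collections import defaultdict

# Hypothetical claim records; in practice these come from the Analysis Engine.
claims = [
    {"month": "2024-01", "type": "collision", "amount": 1800.0},
    {"month": "2024-01", "type": "hail", "amount": 950.0},
    {"month": "2024-02", "type": "collision", "amount": 2200.0},
    {"month": "2024-02", "type": "collision", "amount": 1400.0},
]


def monthly_summary(rows):
    """Aggregate claim count and total amount per month, in month order."""
    summary = defaultdict(lambda: {"count": 0, "total": 0.0})
    for row in rows:
        entry = summary[row["month"]]
        entry["count"] += 1
        entry["total"] += row["amount"]
    return dict(sorted(summary.items()))


def to_csv(summary) -> str:
    """Render the summary as CSV text, which spreadsheet tools open directly."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["month", "claims", "total_amount"])
    for month, entry in summary.items():
        writer.writerow([month, entry["count"], f"{entry['total']:.2f}"])
    return buffer.getvalue()


print(to_csv(monthly_summary(claims)))
```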

4.2. Integration Frameworks

Integration frameworks are critical for ensuring seamless communication between different systems and applications within an organization. They enable data sharing and process automation, enhancing overall operational efficiency. By implementing a robust integration framework, organizations can streamline workflows, reduce operational silos, and improve data accessibility across departments, leading to increased productivity and ROI.

- Supports various integration methods, including API, ETL (Extract, Transform, Load), and middleware solutions, allowing for versatile data connectivity.

- Facilitates interoperability between legacy systems and modern applications, ensuring that organizations can leverage existing investments while adopting new technologies.

- Allows for real-time data synchronization across platforms, ensuring consistency and accuracy in data reporting.

- Provides a centralized hub for managing data flows and integrations, simplifying the oversight of complex data environments.

- Enhances scalability by allowing organizations to add new applications without disrupting existing processes, thus supporting growth initiatives.

- Reduces integration complexity through pre-built connectors and templates, accelerating deployment times and reducing costs.

- Promotes data governance by ensuring compliance with regulatory standards, which is essential for maintaining operational integrity.
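A common integration pattern is a connector (adapter) that translates a legacy format into the schema newer services expect, registered in a central lookup so that new sources can be added without touching existing ones. The pipe-delimited legacy layout, field names, and connector names below are hypothetical.

```python
from datetime import datetime


def legacy_claims_connector(line: str) -> dict:
    """Translate a pipe-delimited legacy record (date as YYYYMMDD, amount in cents)."""
    claim_id, date_str, cents, status = line.strip().split("|")
    return {
        "claimId": claim_id,
        "filedOn": datetime.strptime(date_str, "%Y%m%d").date().isoformat(),
        "amount": int(cents) / 100,
        "status": status.lower(),
    }


def modern_api_connector(payload: dict) -> dict:
    """Newer systems already use the target schema, so pass data through unchanged."""
    return payload


# Central registry: adding a source means registering one more connector.
CONNECTORS = {
    "legacy_claims": legacy_claims_connector,
    "modern_api": modern_api_connector,
}


def ingest(source: str, payload):
    try:
        connector = CONNECTORS[source]
    except KeyError:
        raise ValueError(f"no connector registered for {source!r}") from None
    return connector(payload)


print(ingest("legacy_claims", "CLM0001|20240115|00125050|OPEN"))
```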

4.3. Real-time Processing Capabilities

Real-time processing capabilities are essential for organizations that require immediate insights and actions based on data. This capability allows businesses to respond swiftly to changing conditions and customer needs. With real-time processing capabilities, organizations can gain a competitive edge by leveraging data to drive innovation and improve operational efficiency, ultimately enhancing ROI.

- Enables instant data ingestion from various sources, including IoT devices, social media, and transactional systems, ensuring that organizations have access to the latest information.

- Supports real-time analytics, allowing organizations to derive insights as data is generated rather than after a delayed batch run.

- Facilitates immediate decision-making, which is crucial in industries like finance, healthcare, and e-commerce, where rapid responses can lead to significant advantages.

- Enhances customer experience by providing timely responses and personalized services, fostering customer loyalty and satisfaction.

- Reduces latency in data processing, ensuring that information is always up-to-date and actionable.

- Integrates with machine learning algorithms to enable predictive analytics in real-time, allowing organizations to anticipate trends and make informed decisions.

- Supports event-driven architectures, allowing systems to react to specific triggers or events, further enhancing operational responsiveness.

Additionally, tools such as PowerScribe, home inspection reporting software, and Oracle Business Intelligence Publisher can further enhance the reporting capabilities within organizations.
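Event-driven architectures are often built on a publish/subscribe mechanism: producers publish events, and any number of handlers react to them. The in-process sketch below shows the idea; the `EventBus` class, the event names, and the 80-degree alert rule are hypothetical, and production systems typically use a message broker rather than an in-memory bus.

```python
from collections import defaultdict


class EventBus:
    """Minimal in-process publish/subscribe bus."""

    def __init__(self):
        self._handlers = defaultdict(list)

    def subscribe(self, event_type, handler):
        self._handlers[event_type].append(handler)

    def publish(self, event_type, payload):
        """Deliver payload to every handler for event_type; return the handler count."""
        handlers = self._handlers.get(event_type, [])
        for handler in handlers:
            handler(payload)
        return len(handlers)


alerts = []


def high_temperature_rule(event):
    # Hypothetical trigger: react only when a reading crosses the threshold.
    if event["value"] > 80:
        alerts.append(f"ALERT {event['sensor']}: {event['value']}")


bus = EventBus()
bus.subscribe("sensor.reading", high_temperature_rule)
for sensor, value in [("s1", 72), ("s2", 85), ("s3", 79)]:
    bus.publish("sensor.reading", {"sensor": sensor, "value": value})
print(alerts)
```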

4.4. Edge Computing Implementation

Edge computing is a distributed computing paradigm that brings computation and data storage closer to the location where it is needed. This approach reduces latency, enhances speed, and improves the overall efficiency of data processing. Implementing edge computing involves several key steps:

- Infrastructure Setup: Establishing the necessary hardware and software infrastructure at the edge, which includes edge devices like IoT sensors, gateways, and local servers that can process data near the source.

- Data Management: Developing a robust data management strategy to handle data generated at the edge. This includes data filtering, aggregation, and storage solutions that minimize bandwidth usage and ensure quick access to relevant data.

- Application Development: Creating applications that can leverage edge computing capabilities. These applications should be designed to operate in real time, processing data locally and sending only essential information to the cloud for further analysis. Pairing IoT devices with edge nodes is central to this step, since the devices generate the data that edge applications process.

- Security Measures: Implementing security protocols to protect data at the edge, including encryption, secure access controls, and regular updates to safeguard against vulnerabilities.

- Integration with Cloud Services: Ensuring seamless integration between edge devices and cloud services. This allows for a hybrid approach where critical data is processed locally, while less urgent data can be sent to the cloud for deeper analysis.

- Monitoring and Maintenance: Establishing a system for monitoring edge devices and applications to ensure they are functioning correctly. Regular maintenance and updates are crucial to keep the system secure and efficient.
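The data filtering, aggregation, and hybrid edge/cloud steps can be sketched as an edge node that forwards urgent readings immediately and otherwise sends only periodic summaries. The `EdgeNode` class, batch size, and alert threshold are hypothetical, and the `outbox` list stands in for messages that would be sent to a cloud service.

```python
from statistics import fmean


class EdgeNode:
    """Processes readings locally; forwards alerts at once and summaries per batch."""

    def __init__(self, batch_size=10, alert_threshold=90.0):
        self.batch_size = batch_size
        self.alert_threshold = alert_threshold
        self.buffer = []
        self.outbox = []  # stands in for messages sent to a cloud endpoint

    def ingest(self, value):
        if value >= self.alert_threshold:
            # Urgent readings skip batching and are forwarded immediately.
            self.outbox.append({"type": "alert", "value": value})
        self.buffer.append(value)
        if len(self.buffer) == self.batch_size:
            # Only an aggregate of the batch leaves the edge.
            self.outbox.append({
                "type": "summary",
                "count": len(self.buffer),
                "mean": round(fmean(self.buffer), 2),
                "max": max(self.buffer),
            })
            self.buffer.clear()


node = EdgeNode(batch_size=5, alert_threshold=90.0)
for reading in [70.0, 72.0, 71.0, 95.0, 73.0, 70.0]:
    node.ingest(reading)
print(node.outbox)
```

In the example, six raw readings produce two outbound messages, which is the kind of bandwidth saving the data management step aims for.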

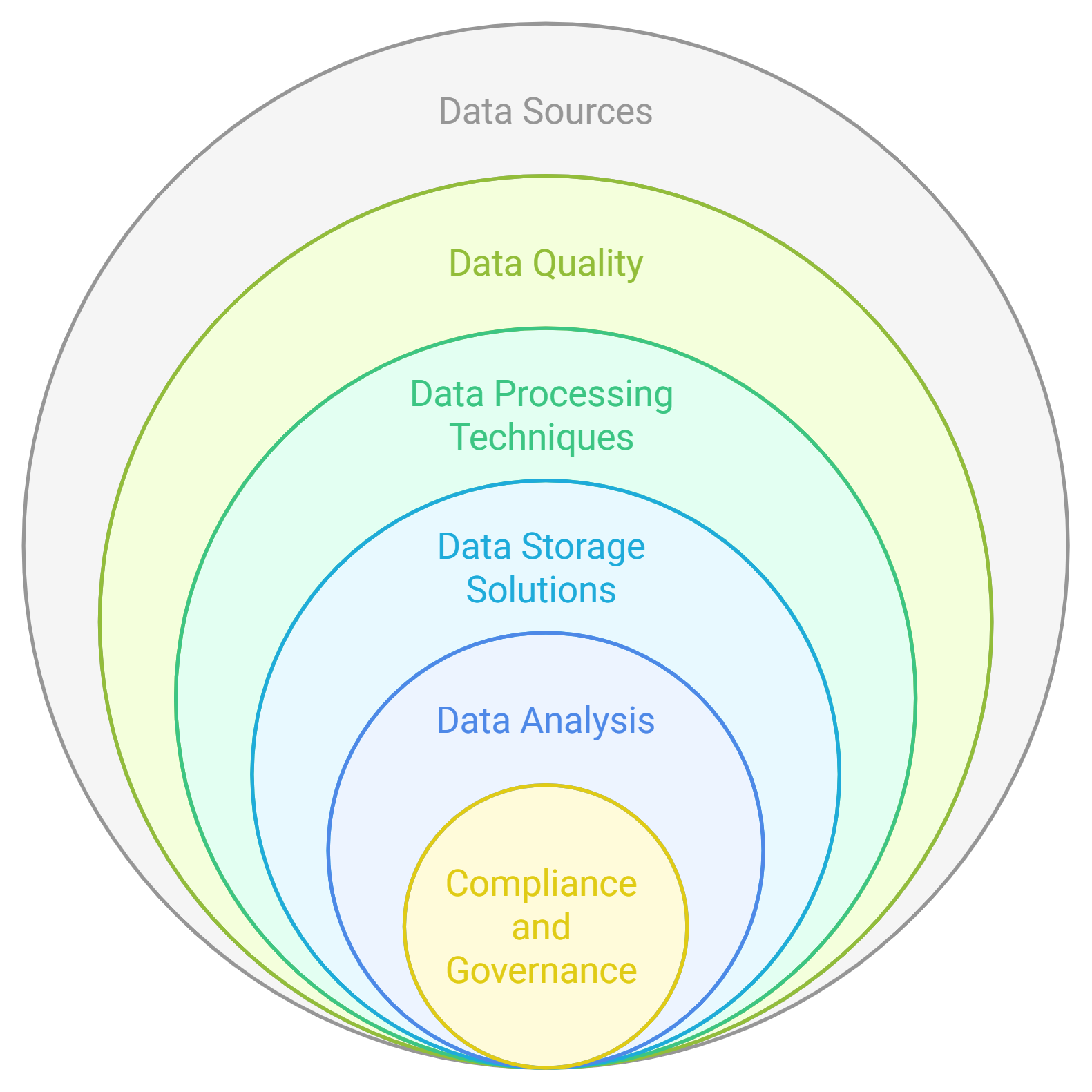

5. Data Collection and Processing

Data collection and processing are fundamental components of any data-driven strategy. This process involves gathering data from various sources, processing it for analysis, and deriving insights that can inform decision-making. Key aspects include:

- Data Sources: Identifying and utilizing various data sources, such as IoT devices, social media, and enterprise systems. Each source can provide valuable information that contributes to a comprehensive data set.

- Data Quality: Ensuring the quality of collected data is paramount. This involves validating data accuracy, consistency, and completeness to ensure reliable analysis.

- Data Processing Techniques: Employing various data processing techniques, such as batch processing and real-time processing, depending on the use case. Real-time processing is essential for applications requiring immediate insights, while batch processing is suitable for large volumes of data.

- Data Storage Solutions: Choosing appropriate storage solutions that can handle the volume and velocity of data being collected. Options include cloud storage, on-premises databases, and hybrid solutions.

- Data Analysis: Utilizing analytical tools and techniques to extract insights from the processed data. This can involve statistical analysis, machine learning algorithms, and data visualization tools.

- Compliance and Governance: Adhering to data governance policies and regulations, such as GDPR or HIPAA, to ensure data privacy and security throughout the collection and processing stages.
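The difference between batch and real-time processing shows up even in basic statistics: a batch job computes over the complete dataset, while a streaming job updates its result as each record arrives. The sketch below uses Welford's online algorithm for the streaming case and compares it with the standard library's batch functions; the `RunningStats` class and the readings are hypothetical.

```python
import statistics


class RunningStats:
    """Streaming mean and sample variance via Welford's online algorithm."""

    def __init__(self):
        self.n = 0
        self.mean = 0.0
        self._m2 = 0.0  # running sum of squared deviations from the mean

    def update(self, x):
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self._m2 += delta * (x - self.mean)

    @property
    def variance(self):
        """Sample variance (n - 1 denominator); 0.0 until two values are seen."""
        return self._m2 / (self.n - 1) if self.n > 1 else 0.0


readings = [12.0, 15.5, 11.0, 14.5, 13.0]

# Real-time style: update as each reading "arrives", using O(1) memory.
stream = RunningStats()
for value in readings:
    stream.update(value)

# Batch style: compute once over the full dataset.
print(stream.mean, statistics.mean(readings))
print(round(stream.variance, 3), statistics.variance(readings))
```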

5.1. Image and Video Capture

Image and video capture are critical components of data collection, especially in fields like surveillance, healthcare, and marketing. The process involves several key considerations:

- Capture Devices: Utilizing high-quality cameras and sensors for capturing images and videos. The choice of device can significantly impact the quality of the data collected.

- Resolution and Quality: Ensuring that the images and videos captured are of high resolution and quality, which is essential for accurate analysis and interpretation of the data.

- Storage and Management: Implementing effective storage solutions for the large volumes of image and video data generated, including cloud storage options that allow for easy access and retrieval.

- Processing Techniques: Applying image and video processing techniques to enhance the quality of the captured data, which can include noise reduction, image stabilization, and color correction.

- Real-time Processing: For applications requiring immediate insights, real-time processing of video feeds is crucial. This can involve using edge computing to analyze video data on-site, reducing latency.

- Data Annotation: Annotating images and videos for training machine learning models. This process involves labeling data to help algorithms learn and improve their accuracy.

- Privacy Considerations: Addressing privacy concerns related to image and video capture, including obtaining consent from individuals being recorded and ensuring compliance with relevant regulations.
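As a rough illustration of the resolution and quality checks above, the sketch below accepts a captured frame only if it meets minimum dimensions and a sharpness score. It assumes a grayscale image given as a list of rows of 0-255 integers. The variance-of-the-Laplacian measure is one common blur heuristic. The default thresholds (`min_width`, `min_height`, `min_sharpness`) are illustrative assumptions that would need tuning per camera and use case.

```python
from statistics import pvariance


def laplacian_variance(gray):
    """Sharpness score: variance of the 4-neighbor Laplacian over interior pixels.

    Blurry images have soft edges, so the Laplacian response varies little.
    """
    height, width = len(gray), len(gray[0])
    responses = []
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            lap = (gray[y - 1][x] + gray[y + 1][x]
                   + gray[y][x - 1] + gray[y][x + 1]
                   - 4 * gray[y][x])
            responses.append(lap)
    return pvariance(responses) if responses else 0.0


def passes_capture_check(gray, min_width=1280, min_height=720, min_sharpness=100.0):
    """Reject frames that are too small or too blurry for downstream analysis."""
    height = len(gray)
    width = len(gray[0]) if height else 0
    if width < min_width or height < min_height:
        return False
    return laplacian_variance(gray) >= min_sharpness


# Toy 6x6 frames: a flat gray patch and a high-contrast checkerboard.
flat = [[128] * 6 for _ in range(6)]
checker = [[255 if (x + y) % 2 else 0 for x in range(6)] for y in range(6)]
print(passes_capture_check(flat, min_width=6, min_height=6))     # False
print(passes_capture_check(checker, min_width=6, min_height=6))  # True
```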

By focusing on these aspects, organizations can effectively implement edge computing, streamline data collection and processing, and enhance their image and video capture capabilities. Rapid Innovation is well-equipped to assist clients in these areas, leveraging our expertise in AI and blockchain to drive efficiency and maximize ROI. Through tailored solutions, we help businesses harness adaptive AI development and data analytics to achieve their strategic objectives.

5.2. Sensor Data Integration

Sensor data integration is a crucial process in the realm of data analytics, particularly in industries such as IoT, healthcare, and smart cities. This process involves combining data from multiple sensors to create a comprehensive dataset that can be analyzed for insights.

- Types of Sensors: Various sensors, including temperature, humidity, motion, and pressure sensors, generate sensor data that can be integrated.

- Data Fusion: This technique combines sensor data from different sources to improve accuracy and reliability. For instance, merging data from GPS and accelerometers can enhance location tracking, which is vital for applications like fleet management and logistics optimization.

- Real-time Processing: Integrating sensor data in real-time allows for immediate analysis and response, which is vital in applications like autonomous vehicles and industrial automation. Rapid Innovation leverages advanced AI algorithms to facilitate real-time sensor data processing, enabling clients to make informed decisions swiftly.

- Standardization: To effectively integrate sensor data, it is essential to standardize formats and protocols. This ensures that data from different sensors can be easily combined and analyzed, leading to more coherent insights and better decision-making.

- Challenges: Issues such as data redundancy, latency, and varying data quality can complicate sensor data integration. Addressing these challenges is key to successful implementation. Rapid Innovation employs robust methodologies to tackle these issues, ensuring that clients can achieve greater ROI through efficient sensor data integration.
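Data fusion can be illustrated with inverse-variance weighting, one of the simplest fusion rules. It is equivalent to the measurement-update step of a one-dimensional Kalman filter. The sketch assumes each sensor supplies an independent estimate with a known variance. The GPS and dead-reckoning numbers are made-up values, and a real tracker would also model motion between fixes.

```python
def fuse_estimates(estimates):
    """Inverse-variance weighted fusion of independent (value, variance) pairs.

    Less noisy sensors (smaller variance) get more weight, and the fused
    variance is never larger than the smallest input variance.
    """
    if not estimates:
        raise ValueError("need at least one estimate")
    if any(var <= 0 for _, var in estimates):
        raise ValueError("variances must be positive")
    weights = [1.0 / var for _, var in estimates]
    total = sum(weights)
    fused_value = sum(w * value for w, (value, _) in zip(weights, estimates)) / total
    return fused_value, 1.0 / total


# Hypothetical 1-D positions in meters along a delivery route.
gps_fix = (105.0, 25.0)        # (value, variance): about 5 m standard deviation
dead_reckoning = (100.0, 4.0)  # integrated accelerometer estimate, assumed tighter over a short interval
position, variance = fuse_estimates([gps_fix, dead_reckoning])
print(round(position, 2), round(variance, 2))
```

The fused position lands closer to the less noisy source, and its variance is lower than either input. This is the basic payoff of fusion that the fleet-management example above relies on.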

5.3. Data Preprocessing

Data preprocessing is a fundamental step in data analysis that prepares raw data for further analysis. This stage is critical for ensuring the quality and usability of the data.

- Data Cleaning: This involves removing inaccuracies, duplicates, and irrelevant information from the dataset. Techniques include identifying and correcting errors, removing outliers, and filling in missing values, which are essential for maintaining data integrity.

- Data Transformation: This step modifies the data into a suitable format for analysis. Common transformations include normalization (scaling data to a specific range) and encoding categorical variables (converting categories into numerical values). These transformations enhance the performance of AI models developed by Rapid Innovation.

- Data Reduction: Reducing the volume of data while maintaining its integrity is essential for efficient processing. Techniques include dimensionality reduction (using methods such as Principal Component Analysis, or PCA, to reduce the number of features) and aggregation (summarizing data points to create a more manageable dataset).

- Feature Engineering: Creating new features from existing data can enhance model performance. This may involve combining multiple features and extracting date and time components, which can lead to more accurate predictions and insights.

- Importance: Proper data preprocessing can significantly improve the accuracy of predictive models and the overall quality of insights derived from the data, ultimately driving better business outcomes for our clients.
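The preprocessing steps above can be combined into a small pipeline. This pure-Python sketch assumes claim records with hypothetical fields `claim_id`, `repair_cost`, `damage_type`, and an ISO-8601 `reported_at` timestamp. It deduplicates records, imputes missing costs with the median, applies min-max scaling to cost, one-hot encodes the damage type, and extracts date features. Dimensionality reduction such as PCA is left out to keep it dependency-free, and at least one record must have a known cost.

```python
from datetime import datetime
from statistics import median


def preprocess(records):
    # 1. Cleaning: drop records with a duplicate claim_id, keeping the first occurrence.
    seen, cleaned = set(), []
    for rec in records:
        if rec["claim_id"] not in seen:
            seen.add(rec["claim_id"])
            cleaned.append(dict(rec))

    # 2. Cleaning: fill missing costs with the median of the observed costs.
    observed = [r["repair_cost"] for r in cleaned if r["repair_cost"] is not None]
    fill = median(observed)
    for r in cleaned:
        if r["repair_cost"] is None:
            r["repair_cost"] = fill

    # 3. Transformation: min-max normalization of cost into [0, 1].
    lo = min(r["repair_cost"] for r in cleaned)
    hi = max(r["repair_cost"] for r in cleaned)
    span = (hi - lo) or 1.0  # avoid division by zero when all costs are equal
    categories = sorted({r["damage_type"] for r in cleaned})

    rows = []
    for r in cleaned:
        ts = datetime.fromisoformat(r["reported_at"])
        row = {"claim_id": r["claim_id"], "cost_scaled": (r["repair_cost"] - lo) / span}
        # 4. Transformation: one-hot encode the categorical damage type.
        for cat in categories:
            row[f"type_{cat}"] = 1 if r["damage_type"] == cat else 0
        # 5. Feature engineering: extract date and time components.
        row["month"] = ts.month
        row["weekday"] = ts.weekday()  # Monday == 0
        rows.append(row)
    return rows


raw = [
    {"claim_id": "A1", "repair_cost": 1000.0, "damage_type": "hail", "reported_at": "2024-03-04T09:30:00"},
    {"claim_id": "A1", "repair_cost": 1000.0, "damage_type": "hail", "reported_at": "2024-03-04T09:30:00"},
    {"claim_id": "B2", "repair_cost": None, "damage_type": "flood", "reported_at": "2024-03-09T14:00:00"},
    {"claim_id": "C3", "repair_cost": 3000.0, "damage_type": "hail", "reported_at": "2024-04-01T08:15:00"},
]
for row in preprocess(raw):
    print(row)
```

In practice, a library such as pandas or scikit-learn would usually handle these steps. The hand-written version makes each transformation visible.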

5.4. Quality Assurance Methods

Quality assurance (QA) methods are essential for ensuring the reliability and accuracy of data throughout its lifecycle. Implementing robust QA practices can prevent errors and enhance data integrity.

- Data Validation: This process checks the accuracy and quality of data before it is used. Techniques include range checks (ensuring data falls within specified limits) and consistency checks (verifying that data is consistent across different sources).

- Automated Testing: Utilizing automated tools to test data quality can save time and reduce human error. This includes regular audits of data quality and automated scripts to identify anomalies, ensuring that clients can trust the data they are working with.

- Statistical Methods: Employing statistical techniques to assess data quality can provide insights into potential issues. Common methods include descriptive statistics (analyzing mean, median, and mode to understand data distribution) and control charts (monitoring data over time to identify trends and variations).

- Documentation and Standards: Establishing clear documentation and standards for data collection and processing helps maintain quality. This includes creating data dictionaries and defining data entry protocols, which are crucial for compliance and operational efficiency.

- Continuous Improvement: QA should be an ongoing process. Regularly reviewing and updating QA methods ensures that data quality remains high as systems and technologies evolve, allowing Rapid Innovation to provide clients with cutting-edge solutions that adapt to their changing needs.
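Range checks and control charts can be sketched as follows. The limits here use a simplified Shewhart-style calculation: the baseline mean plus or minus three population standard deviations. A textbook individuals chart would instead use a moving-range estimate. The humidity readings and the 0-100 range are illustrative assumptions.

```python
from statistics import fmean, pstdev


def range_check(values, low, high):
    """Indices of readings that fall outside the physically plausible [low, high] range."""
    return [i for i, v in enumerate(values) if not low <= v <= high]


def control_limits(baseline, sigmas=3.0):
    """Simplified control limits: baseline mean +/- `sigmas` population standard deviations."""
    center = fmean(baseline)
    spread = pstdev(baseline)
    return center - sigmas * spread, center + sigmas * spread


def out_of_control(values, limits):
    """Indices of readings outside the control limits (candidates for investigation)."""
    lower, upper = limits
    return [i for i, v in enumerate(values) if v < lower or v > upper]


# Illustrative relative-humidity readings (%) from one sensor.
baseline = [50, 52, 48, 51, 49, 50]      # period known to be in control
new_readings = [51, 47, 58, 49, 120, -3]

print(range_check(new_readings, 0, 100))                       # [4, 5]
print(out_of_control(new_readings, control_limits(baseline)))  # [2, 4, 5]
```

The two checks catch different problems. The range check flags impossible values such as 120% humidity. The control chart also flags 58%, which is physically plausible but unusual for this sensor.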

5.5. Data Storage and Management

Data storage and management are critical components in any organization, especially in the age of big data. Effective data storage ensures that information is easily accessible, secure, and organized. Here are some key aspects to consider:

- Types of Data Storage:

- On-Premises Storage: Physical servers located within the organization, providing direct control over data.

- Cloud Storage: Data stored on remote servers and accessed over the internet, offering the scalability and flexibility to adapt to changing business needs. Cloud storage management and cloud file management practices govern how this data is organized.

- Hybrid Solutions: A combination of on-premises and cloud storage, allowing a tailored approach that meets specific organizational requirements, typically coordinated through hybrid cloud storage management.

- Data Management Practices:

- Data Governance: Establishing policies and standards for data management to ensure data quality and regulatory compliance, often enforced through electronic data management systems.

- Data Backup and Recovery: Regularly backing up data to prevent loss and maintaining recovery plans for emergencies to ensure business continuity. Cloud backup management services can automate much of this work.

- Data Lifecycle Management: Managing data from creation to deletion so that it is archived or disposed of appropriately, which keeps storage costs down. Many data storage management solutions support this directly.

- Security Measures:

- Encryption: Protecting data by converting it into a secure format that can only be read with a decryption key, safeguarding sensitive information.

- Access Controls: Implementing user permissions to restrict access to sensitive data, ensuring that only authorized personnel can view or modify information.

- Regular Audits: Conducting audits to ensure compliance with data management policies and identify potential vulnerabilities, enhancing overall security posture.

- Emerging Technologies:

- Artificial Intelligence: Using AI for data organization, predictive analytics, and anomaly detection, which helps organizations extract insights from big data storage and management systems and make informed decisions.

- Blockchain: Ensuring data integrity and security through decentralized storage, providing a transparent and tamper-evident way to manage data, since any alteration of a stored record can be detected.

Effective data storage and management, supported by tools such as electronic document and records management systems and broader information storage management, not only enhances operational efficiency but also supports informed decision-making and strategic planning, ultimately leading to greater ROI for organizations.
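The tamper-evidence property behind blockchain-based integrity can be shown with a plain hash chain. Each record's SHA-256 hash covers its own payload and the previous record's hash, so editing any stored record invalidates the chain from that point on. This sketch omits everything that makes a real blockchain decentralized, such as replication, consensus, and signatures. Without those features, an attacker who can rewrite every later hash could still forge the chain. The function names are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def record_hash(payload, prev_hash):
    """SHA-256 over the record payload plus the previous record's hash."""
    body = json.dumps({"payload": payload, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


def build_chain(payloads):
    """Link records so each one commits to everything stored before it."""
    chain, prev = [], GENESIS
    for payload in payloads:
        digest = record_hash(payload, prev)
        chain.append({"payload": payload, "prev": prev, "hash": digest})
        prev = digest
    return chain


def verify_chain(chain):
    """Recompute every link; an edited record no longer matches its stored hash."""
    prev = GENESIS
    for entry in chain:
        if entry["prev"] != prev or record_hash(entry["payload"], prev) != entry["hash"]:
            return False
        prev = entry["hash"]
    return True


ledger = build_chain([{"claim": "A1", "cost": 1000}, {"claim": "B2", "cost": 2500}])
print(verify_chain(ledger))        # True
ledger[0]["payload"]["cost"] = 10  # simulate tampering with a stored record
print(verify_chain(ledger))        # False
```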

6. Analysis and Assessment Techniques

Analysis and assessment techniques are essential for interpreting data and deriving actionable insights. These techniques help organizations understand trends, make predictions, and evaluate performance. Key techniques include:

- Descriptive Analysis:

- Summarizes historical data to identify patterns and trends.

- Utilizes statistical measures such as mean, median, and mode.

- Predictive Analysis:

- Uses historical data to forecast future outcomes.

- Employs machine learning algorithms to identify potential risks and opportunities.

- Prescriptive Analysis:

- Recommends actions based on data analysis.

- Combines predictive analytics with optimization techniques to suggest the best course of action.

- Qualitative Analysis:

- Focuses on non-numerical data to understand underlying motivations and behaviors.

- Involves methods such as interviews, focus groups, and content analysis.

- Quantitative Analysis:

- Involves numerical data to quantify relationships and test hypotheses.

- Utilizes statistical tools and software for data analysis.

- Visualization Techniques:

- Employs charts, graphs, and dashboards to present data in an easily digestible format.

- Enhances understanding and communication of complex data sets.

By implementing these analysis and assessment techniques, organizations can make data-driven decisions that enhance performance and drive growth, ultimately maximizing their return on investment.
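The following minimal sketch shows descriptive and predictive analysis on a single time series. An ordinary least-squares trend line stands in for the machine learning models mentioned above, because it is the simplest possible predictive model. The monthly claim counts are made-up data, and `fit_linear_trend` needs at least two points.

```python
from statistics import fmean, median, multimode


def describe(values):
    """Descriptive analysis: summarize historical values."""
    return {"mean": fmean(values), "median": median(values), "modes": multimode(values)}


def fit_linear_trend(ys):
    """Ordinary least-squares line y = a + b*t over time steps t = 0, 1, 2, ..."""
    ts = range(len(ys))
    t_mean, y_mean = fmean(ts), fmean(ys)
    sxx = sum((t - t_mean) ** 2 for t in ts)
    sxy = sum((t - t_mean) * (y - y_mean) for t, y in zip(ts, ys))
    slope = sxy / sxx  # requires at least two time steps
    return y_mean - slope * t_mean, slope


def forecast(ys, steps_ahead=1):
    """Predictive analysis in its simplest form: extrapolate the fitted trend."""
    intercept, slope = fit_linear_trend(ys)
    return intercept + slope * (len(ys) - 1 + steps_ahead)


monthly_claims = [3, 5, 7, 9, 11]   # made-up counts of damage claims per month
print(describe([2, 3, 3, 8]))       # {'mean': 4.0, 'median': 3.0, 'modes': [3]}
print(forecast(monthly_claims))     # 13.0
```

Real predictive models add more inputs and uncertainty estimates. The basic pattern stays the same: fit on history, then extrapolate.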

6.1. Damage Classification Algorithms

Damage classification algorithms are vital in various fields, including engineering, healthcare, and environmental science. These algorithms help in identifying and categorizing damage based on specific criteria. Key points include:

- Types of Damage Classification Algorithms:

- Supervised Learning Algorithms:

- Require labeled data for training.

- Common algorithms include Support Vector Machines (SVM), Decision Trees, and Neural Networks.

- Unsupervised Learning Algorithms:

- Analyze data without pre-existing labels.

- Techniques like clustering (e.g., K-means) are used to identify patterns in data.

- Applications:

- Structural Health Monitoring: Used to assess the integrity of buildings and bridges by classifying damage types.

- Medical Imaging: Algorithms classify types of injuries or diseases based on imaging data (e.g., X-rays, MRIs).

- Environmental Assessment: Classifies damage to ecosystems or habitats due to natural disasters or human activities.

- Performance Metrics:

- Accuracy: Measures the proportion of correctly classified instances.

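- Precision and Recall: Precision is the share of predicted damage instances that are correct, so it falls as false positives rise. Recall is the share of actual damage instances that the algorithm finds, so it falls as false negatives rise.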

- F1 Score: The harmonic mean of precision and recall, providing a single metric that is high only when both are high.

- Challenges:

- Data Quality: Poor quality data can lead to inaccurate classifications.

- Overfitting: Algorithms may perform well on training data but poorly on unseen data.

- Scalability: Ensuring algorithms can handle large datasets efficiently.

By leveraging damage classification algorithms, organizations can enhance their ability to assess and respond to various types of damage, ultimately improving safety and operational efficiency and driving better business outcomes. Sound research data management and electronic database management systems help keep the labeled data these algorithms depend on organized and accessible.
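The performance metrics above can be computed directly from true and predicted labels. This sketch scores one damage class at a time, treating it as the positive class and all others as negative (one-vs-rest). The class names and labels are illustrative. Returning 0.0 when a ratio is undefined is a convention chosen here, not a standard.

```python
def classification_metrics(y_true, y_pred, positive):
    """Accuracy, plus precision/recall/F1 for one damage class treated as positive."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    accuracy = sum(1 for t, p in pairs if t == p) / len(pairs)
    # Convention used here: report 0.0 when a ratio is undefined.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Illustrative labels from a structural-inspection model.
y_true = ["crack", "crack", "corrosion", "none", "crack", "none"]
y_pred = ["crack", "none", "corrosion", "crack", "crack", "none"]
for label in ("crack", "corrosion"):
    print(label, classification_metrics(y_true, y_pred, positive=label))
```

Per-class precision and recall are worth reporting for damage data, because errors on rare but severe damage classes can be hidden in a high overall accuracy.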

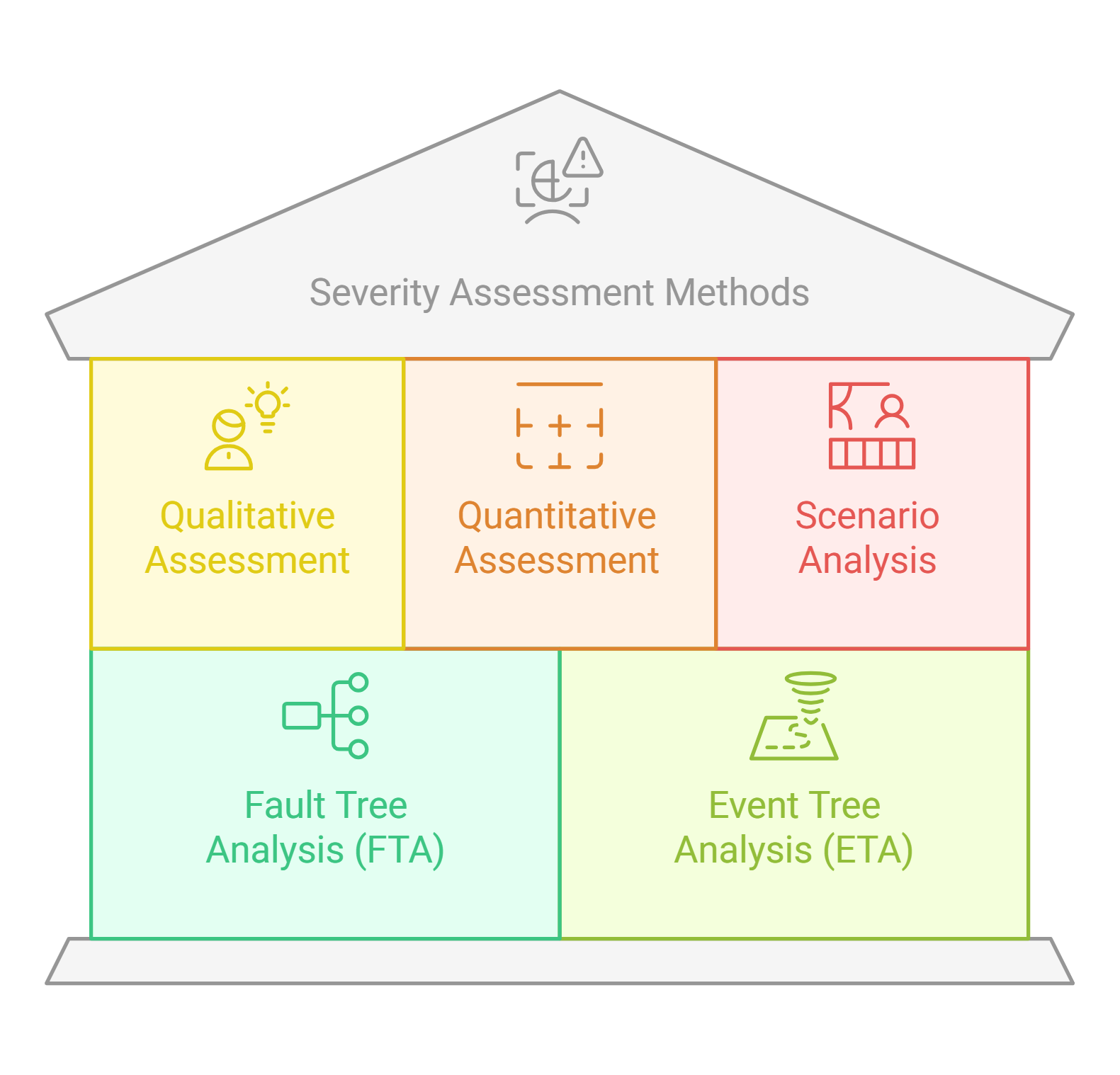

6.2. Severity Assessment Methods

Severity assessment methods are crucial in evaluating the potential impact of risks on projects, systems, or processes. These methods help organizations prioritize risks based on their severity, allowing for more effective risk management strategies.

- Qualitative Assessment: This method uses subjective judgment to categorize risks by their potential impact and likelihood. Common qualitative risk analysis tools include risk matrices and expert opinions.