
1. Introduction to Predictive Device Failure Detection

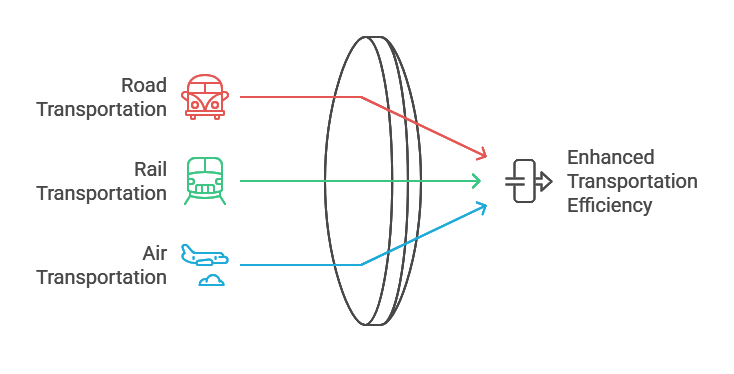

Predictive device failure detection is a crucial aspect of modern industrial operations, leveraging advanced technologies to foresee potential equipment failures before they occur. This proactive approach minimizes downtime, reduces maintenance costs, and enhances overall operational efficiency. Predictive maintenance uses data analytics, machine learning, and artificial intelligence (AI) to monitor equipment health by analyzing historical data and real-time sensor inputs. Because predictive models can identify patterns that indicate impending failures, organizations can move from reactive maintenance, which addresses issues after they arise, to a more strategic, predictive approach.
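To make "identifying patterns in sensor data" concrete, here is a minimal sketch of one simple technique: a rolling z-score check that flags a reading when it sits far outside the recent baseline. The function name, window size, threshold, and sample data are illustrative assumptions and do not describe any particular product. Real systems typically combine several features and learned models.

```python
from collections import deque
from statistics import mean, stdev


def rolling_zscore_alerts(readings, window=5, threshold=3.0):
    """Return indices of readings that deviate sharply from the recent baseline.

    readings:  sensor values (e.g. vibration amplitude), oldest first
    window:    number of preceding readings that form the baseline
    threshold: z-score above which a reading is flagged
    """
    history = deque(maxlen=window)  # sliding window of recent readings
    flagged = []
    for i, value in enumerate(readings):
        if len(history) == window:
            baseline = list(history)
            mu = mean(baseline)
            sigma = stdev(baseline)
            # Skip the check when the baseline is perfectly flat (sigma == 0).
            if sigma > 0 and abs(value - mu) / sigma > threshold:
                flagged.append(i)
        history.append(value)
    return flagged


# Hypothetical vibration readings with one spike at index 7.
vibration = [0.50, 0.52, 0.49, 0.51, 0.50, 0.51, 0.50, 1.40, 0.52]
print(rolling_zscore_alerts(vibration))  # [7]
```

In this sketch, a flagged reading still enters the baseline window. A production detector might exclude it so that a single spike does not inflate the baseline.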

The rise of the Internet of Things (IoT) has significantly contributed to the effectiveness of predictive device failure detection. With numerous sensors embedded in machinery, organizations can collect vast amounts of data, which can be analyzed to predict when a device is likely to fail. This technology is applicable across various industries, including manufacturing, energy, transportation, and healthcare. Companies can save substantial costs by preventing unexpected breakdowns and optimizing maintenance schedules through IoT predictive maintenance.

At Rapid Innovation, we specialize in integrating AI-driven predictive maintenance solutions tailored to your specific operational needs. Drawing on our expertise in predictive maintenance, we help clients enhance their predictive capabilities, leading to improved ROI through reduced operational disruptions and optimized resource allocation.

Incorporating AI agents into predictive maintenance systems further extends their capabilities. These agents can process large datasets quickly, identify anomalies, and provide actionable insights. Because the underlying algorithms can learn from new data, their predictive accuracy can keep improving over time. This adaptability is essential in dynamic environments where equipment conditions change rapidly, and it is what makes IoT and predictive maintenance such a powerful combination.
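The idea of "learning from new data" can be illustrated, in a deliberately simplified form, by a baseline that updates itself with every reading. The sketch below uses Welford's online algorithm to track the running mean and variance. The `OnlineBaseline` class and the 3-sigma threshold are hypothetical choices. This is a stand-in for the far richer models an AI agent would use, not a description of them.

```python
import math


class OnlineBaseline:
    """Running mean/variance of a sensor signal (Welford's online algorithm).

    Each reading updates the statistics in O(1) time without storing the
    full history, so the 'normal' baseline adapts as conditions change.
    """

    def __init__(self):
        self.n = 0
        self.mean = 0.0
        self._m2 = 0.0  # sum of squared deviations from the running mean

    def update(self, x):
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self._m2 += delta * (x - self.mean)  # uses the updated mean

    @property
    def std(self):
        # Sample standard deviation; reported as 0.0 until two readings exist.
        return math.sqrt(self._m2 / (self.n - 1)) if self.n > 1 else 0.0

    def is_anomaly(self, x, threshold=3.0):
        return self.std > 0 and abs(x - self.mean) / self.std > threshold


baseline = OnlineBaseline()
for temp in [70.1, 70.4, 69.8, 70.2, 70.0]:  # hypothetical temperatures
    baseline.update(temp)
print(round(baseline.mean, 2), baseline.is_anomaly(85.0))  # 70.1 True
```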

Overall, predictive device failure detection represents a significant advancement in maintenance strategies, enabling organizations to operate more efficiently and effectively. With Rapid Innovation's consulting and development services, including partnerships with predictive maintenance companies like IBM, SAP, and AWS, businesses can harness the power of AI to transform their maintenance practices and achieve their strategic goals.

1.1. Overview and Importance

Failure detection systems are critical components in various industries, including manufacturing, telecommunications, and IT. These systems are designed to identify and diagnose failures in equipment or processes, ensuring that operations run smoothly and efficiently. They help minimize downtime, which can lead to significant financial losses. Early detection of failures can prevent catastrophic events, enhancing safety. Additionally, failure detection systems contribute to improved maintenance strategies, allowing for predictive maintenance rather than reactive approaches.

The importance of failure detection systems cannot be overstated. They play a vital role in enhancing operational efficiency by ensuring that equipment is functioning optimally, reducing maintenance costs through timely interventions, and increasing customer satisfaction by ensuring consistent service delivery. At Rapid Innovation, we leverage our expertise in AI to develop tailored failure detection solutions that align with your business goals, ultimately driving greater ROI. For more insights on how AI can enhance procurement intelligence, check out our article on AI Agent Procurement Intelligence Engine.

1.2. Evolution of Failure Detection Systems

The evolution of failure detection systems has been marked by significant technological advancements. Initially, these systems relied on basic monitoring techniques, such as manual inspections and simple alarms. Over time, the following developments have occurred:

- Introduction of automated monitoring systems that utilize sensors to collect data in real-time.

- Development of sophisticated algorithms that analyze data to predict potential failures before they occur.

- Integration of Internet of Things (IoT) technology, allowing for remote monitoring and control of devices.

The shift from reactive to proactive maintenance strategies has been a game-changer. Modern failure detection systems can now:

- Utilize machine learning to improve accuracy in failure predictions.

- Leverage big data analytics to process vast amounts of information for better decision-making.

- Incorporate cloud computing for enhanced data storage and accessibility.

At Rapid Innovation, we harness these advancements to create customized solutions that not only detect failures but also optimize maintenance schedules, ensuring that your operations remain efficient and cost-effective.

1.3. Role of AI in Modern Device Monitoring

Artificial Intelligence (AI) has revolutionized the landscape of device monitoring and failure detection systems. By harnessing the power of AI, organizations can achieve unprecedented levels of efficiency and accuracy in their monitoring systems. AI algorithms can analyze historical data to identify patterns and anomalies that may indicate potential failures. Machine learning models can continuously improve their predictions based on new data, leading to more reliable outcomes. Furthermore, AI-driven failure detection systems can automate responses to detected failures, reducing the need for human intervention.

The integration of AI in failure detection systems offers several advantages:

- Enhanced predictive maintenance capabilities, allowing organizations to schedule maintenance activities based on actual equipment conditions rather than fixed schedules.

- Improved resource allocation, as AI can help prioritize which devices require immediate attention.

- Increased operational resilience, as AI systems can adapt to changing conditions and provide real-time insights.
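Condition-based scheduling and prioritization, the first two advantages above, can be sketched as a risk-ranked maintenance queue. The `DeviceStatus` fields, the 1 to 5 criticality scale, and the `min_risk` cut-off are hypothetical. In practice, the failure probability would come from a trained model.

```python
from dataclasses import dataclass


@dataclass
class DeviceStatus:
    device_id: str
    failure_probability: float  # model output in [0, 1] (hypothetical field)
    criticality: int            # 1 = low impact .. 5 = stops the line (hypothetical scale)


def maintenance_queue(devices, min_risk=0.5):
    """Return IDs of devices needing attention, highest risk first.

    Risk = failure probability x criticality, so a likely failure on a
    critical asset outranks an equally likely failure on a minor one.
    """
    at_risk = [d for d in devices if d.failure_probability * d.criticality >= min_risk]
    at_risk.sort(key=lambda d: d.failure_probability * d.criticality, reverse=True)
    return [d.device_id for d in at_risk]


fleet = [
    DeviceStatus("pump-01", 0.15, 5),
    DeviceStatus("fan-07", 0.60, 1),
    DeviceStatus("press-03", 0.40, 4),
    DeviceStatus("conveyor-2", 0.05, 3),
]
print(maintenance_queue(fleet))  # ['press-03', 'pump-01', 'fan-07']
```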

At Rapid Innovation, we specialize in developing AI-driven failure detection systems that empower organizations to not only monitor their devices effectively but also to make informed decisions that enhance operational efficiency and reduce costs.

In conclusion, the combination of advanced failure detection systems and AI technology is transforming how organizations monitor and maintain their devices, leading to greater efficiency, safety, and cost savings. By partnering with Rapid Innovation, you can leverage these advancements to achieve your business goals efficiently and effectively.

1.4. Business Impact and ROI

Understanding the business impact and return on investment (ROI) of a project or initiative is crucial for organizations aiming to maximize their resources. The business impact refers to the tangible and intangible effects that a project has on an organization, while ROI measures the financial return relative to the investment made.

- Increased Efficiency: Implementing new systems or processes can streamline operations, reducing time and costs. For example, Rapid Innovation has helped clients automate their workflows, leading to faster processing times and fewer errors, ultimately enhancing productivity.

- Enhanced Customer Satisfaction: Improved services or products can lead to higher customer satisfaction, resulting in increased loyalty and repeat business. Rapid Innovation's AI-driven solutions have enabled clients to personalize their offerings, and satisfied customers are more likely to recommend a business to others.

- Competitive Advantage: Organizations that leverage innovative technologies or processes can gain a competitive edge in the market. Rapid Innovation assists clients in adopting cutting-edge AI solutions, which can lead to increased market share and profitability.

- Data-Driven Decision Making: Investments in analytics and reporting tools can provide insights that drive better business decisions. Rapid Innovation empowers clients with advanced analytics capabilities, leading to more effective strategies and improved performance.

- Financial Metrics: Calculating ROI involves comparing the net profit from an investment to its cost. A positive ROI indicates that the benefits outweigh the costs, making the investment worthwhile. According to a study, companies that invest in technology can see an ROI of up to 300% over three years.

- Long-Term Growth: Investments that may not show immediate returns can lead to long-term growth opportunities, including developing new markets or enhancing product offerings.
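The calculation described under Financial Metrics reduces to ROI = (total benefit - total cost) / total cost. Here is a minimal sketch using purely hypothetical figures:

```python
def roi(total_benefit, total_cost):
    """Return on investment as a fraction: (benefit - cost) / cost."""
    if total_cost <= 0:
        raise ValueError("total_cost must be positive")
    return (total_benefit - total_cost) / total_cost


# Hypothetical three-year figures for a predictive-maintenance rollout.
cost = 200_000              # sensors, software, integration
avoided_downtime = 450_000  # estimated value of production not lost
reduced_repairs = 150_000   # estimated savings on emergency repairs
print(f"{roi(avoided_downtime + reduced_repairs, cost):.0%}")  # 200%
```

A result of 2.0 (200%) means each dollar invested returned two dollars of net benefit over the period. In practice, the hard part is valuing benefits such as avoided downtime credibly.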

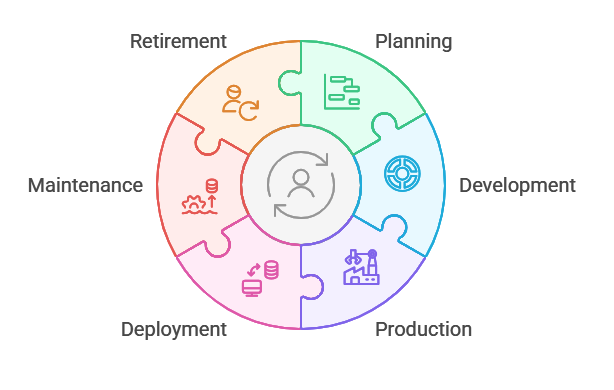

2. System Architecture

System architecture refers to the conceptual model that defines the structure, behavior, and various views of a system. It serves as a blueprint for both the system and the project developing it. A well-defined system architecture is essential for ensuring that all components work together effectively.

- Scalability: A good system architecture allows for easy scaling of resources to meet growing demands, which is crucial for businesses that anticipate growth or fluctuating workloads.

- Integration: Effective architecture facilitates the integration of various components, ensuring that they communicate seamlessly. This is vital for maintaining data consistency and operational efficiency.

- Security: A robust architecture incorporates security measures to protect sensitive data and systems from threats. This includes implementing firewalls, encryption, and access controls.

- Flexibility: The architecture should be adaptable to changes in technology or business needs, allowing organizations to pivot quickly in response to market demands.

- Performance: System architecture impacts the overall performance of applications. Optimized architecture can lead to faster response times and improved user experiences.

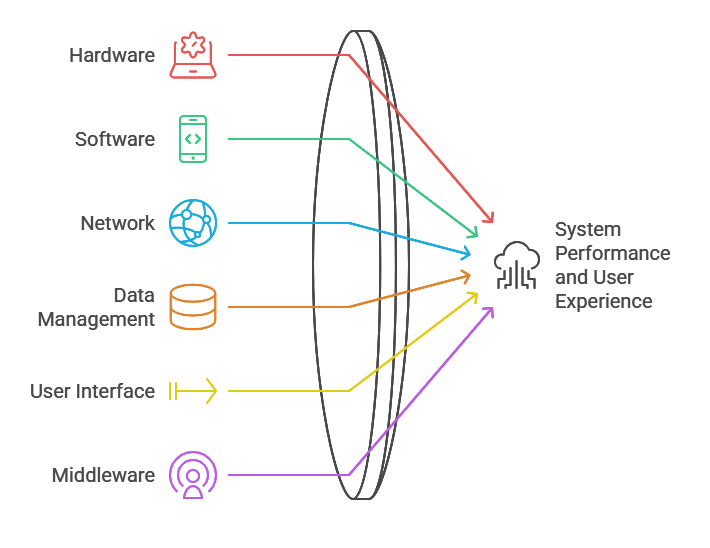

2.1. Core Components

Core components of system architecture are the fundamental building blocks that define how a system operates. Understanding these components is essential for designing effective systems.

- Hardware: This includes the physical devices such as servers, computers, and networking equipment that support the system. The choice of hardware can significantly affect performance and scalability.

- Software: The applications and operating systems that run on the hardware are critical. This includes both system software (like operating systems) and application software (like databases and user interfaces).

- Network: The network infrastructure connects different components of the system, enabling communication and data transfer. A reliable network is essential for system performance and user accessibility.

- Data Management: Effective data management practices ensure that data is stored, retrieved, and processed efficiently. This includes databases, data warehouses, and data lakes.

- User Interface: The user interface (UI) is how users interact with the system. A well-designed UI enhances user experience and can lead to higher productivity.

- Middleware: Middleware acts as a bridge between different software applications, allowing them to communicate and share data. It is essential for integrating disparate systems and ensuring smooth operations.

2.1.1. Data Collection Layer

The Data Collection Layer is the foundational component of any data-driven system. It is responsible for gathering raw data from various sources, ensuring that the data is accurate, relevant, and timely. This layer plays a crucial role in the overall data pipeline, as the quality of data collected directly impacts the effectiveness of subsequent processing and analysis.

- Sources of Data:

- IoT devices

- Social media platforms

- Transactional databases

- Web scraping

- APIs from third-party services

- Key Functions:

- Data ingestion: Collecting data in real-time or batch mode.

- Data validation: Ensuring the accuracy and integrity of the data collected.

- Data storage: Temporarily holding data before it is processed.

- Technologies Used:

- Apache Kafka for real-time data streaming.

- Apache NiFi for data flow automation.

- ETL (Extract, Transform, Load) tools for batch processing.
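The ingestion, validation, and temporary storage functions listed above can be sketched in a few lines. This is a simplified, in-memory illustration built on assumptions: the field names, valid ranges, and `IngestionBuffer` class are hypothetical. A production pipeline would typically hand accepted readings to a system such as Apache Kafka rather than a Python deque.

```python
import time
from collections import deque

# Hypothetical plausible ranges per metric; real limits come from sensor datasheets.
VALID_RANGES = {"temperature_c": (-40.0, 125.0), "vibration_mm_s": (0.0, 50.0)}
REQUIRED_FIELDS = ("device_id", "metric", "value", "ts")


def validate(reading):
    """Return a list of problems with a raw reading (empty list means valid)."""
    problems = [f"missing {f}" for f in REQUIRED_FIELDS if f not in reading]
    if problems:
        return problems
    bounds = VALID_RANGES.get(reading["metric"])
    value = reading["value"]
    if bounds is None:
        problems.append(f"unknown metric {reading['metric']}")
    elif not isinstance(value, (int, float)):
        problems.append("non-numeric value")
    elif not bounds[0] <= value <= bounds[1]:
        problems.append(f"value {value} out of range")
    # ts is assumed to be Unix epoch seconds; allow 60 s of clock skew.
    if reading["ts"] > time.time() + 60:
        problems.append("timestamp in the future")
    return problems


class IngestionBuffer:
    """Holds validated readings until the processing layer drains them."""

    def __init__(self, maxlen=10_000):
        self.accepted = deque(maxlen=maxlen)  # oldest readings drop when full
        self.rejected = []                    # kept for data-quality review

    def ingest(self, reading):
        problems = validate(reading)
        if problems:
            self.rejected.append((reading, problems))
        else:
            self.accepted.append(reading)
        return not problems


buf = IngestionBuffer()
now = time.time()
buf.ingest({"device_id": "m-1", "metric": "temperature_c", "value": 71.5, "ts": now})
buf.ingest({"device_id": "m-1", "metric": "temperature_c", "value": 900.0, "ts": now})
print(len(buf.accepted), len(buf.rejected))  # 1 1
```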

The effectiveness of the Data Collection Layer is critical for businesses aiming to leverage big data analytics. A robust data collection strategy leads to better insights and more informed decision-making. At Rapid Innovation, we assist clients in implementing efficient data collection frameworks, including automated data capture, that enhance data quality and accessibility, ultimately driving greater ROI.

2.1.2. Processing Engine

The Processing Engine is the core component that transforms raw data into a structured format suitable for analysis. This layer is responsible for executing various data processing tasks, including data cleaning, transformation, and aggregation. The efficiency of the Processing Engine can significantly affect the speed and accuracy of data analysis.

- Key Functions:

- Data cleaning: Removing duplicates, correcting errors, and handling missing values.

- Data transformation: Converting data into a usable format, such as normalizing or encoding.

- Data aggregation: Summarizing data to provide insights at different levels.

- Processing Techniques:

- Batch processing: Handling large volumes of data at once, often used for historical data analysis.

- Stream processing: Analyzing data in real-time as it is collected, ideal for time-sensitive applications.

- Technologies Used:

- Apache Spark for distributed data processing.

- Apache Flink for real-time stream processing.

- Hadoop MapReduce for batch processing.
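The three key functions (cleaning, transformation, aggregation) can be illustrated on a handful of records with plain Python. The record layout, the Fahrenheit-to-Celsius conversion used as the transformation step, and the hourly buckets are assumptions chosen for the example. At scale, the same steps would run in an engine such as Apache Spark or Flink.

```python
from collections import defaultdict
from statistics import mean


def clean(records):
    """Drop duplicate (device_id, ts) rows and rows with a missing value."""
    seen, out = set(), []
    for r in records:
        key = (r["device_id"], r["ts"])
        if key in seen or r.get("value") is None:
            continue
        seen.add(key)
        out.append(r)
    return out


def to_celsius(records):
    """Transform: express every temperature reading in Celsius."""
    out = []
    for r in records:
        value = (r["value"] - 32) * 5 / 9 if r.get("unit") == "F" else r["value"]
        out.append({**r, "value": value, "unit": "C"})
    return out


def hourly_means(records):
    """Aggregate: mean value per (device_id, hour) bucket; ts in epoch seconds."""
    buckets = defaultdict(list)
    for r in records:
        buckets[(r["device_id"], r["ts"] // 3600)].append(r["value"])
    return {key: mean(values) for key, values in buckets.items()}


raw = [
    {"device_id": "m-1", "ts": 0, "value": 68.0, "unit": "F"},
    {"device_id": "m-1", "ts": 0, "value": 68.0, "unit": "F"},     # duplicate
    {"device_id": "m-1", "ts": 1800, "value": 22.0, "unit": "C"},
    {"device_id": "m-1", "ts": 2400, "value": None, "unit": "C"},  # missing value
    {"device_id": "m-1", "ts": 3600, "value": 25.0, "unit": "C"},
]
result = hourly_means(to_celsius(clean(raw)))
print(result)  # {('m-1', 0): 21.0, ('m-1', 1): 25.0}
```

Dropping rows with missing values is only one option. Depending on the data, imputation (for example, carrying the last valid reading forward) may be preferable.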

The Processing Engine is essential for ensuring that data is processed not only efficiently but also accurately. A well-optimized processing engine leads to faster insights and improved operational efficiency. Rapid Innovation helps clients streamline their processing capabilities, from data collection through analysis, enabling them to make timely, informed decisions that strengthen their competitive edge.

2.1.3. AI Analysis Module

The AI Analysis Module is the advanced layer that applies artificial intelligence and machine learning algorithms to the processed data. This module is designed to extract meaningful insights, identify patterns, and make predictions based on historical data. The integration of AI into data analysis enhances the capability to derive actionable insights from complex datasets.

- Key Functions:

- Predictive analytics: Using historical data to forecast future trends.

- Pattern recognition: Identifying trends and anomalies in data.

- Natural language processing: Analyzing text data for sentiment analysis and topic modeling.

- Techniques Used:

- Supervised learning: Training models on labeled data to make predictions.

- Unsupervised learning: Discovering hidden patterns in unlabeled data.

- Reinforcement learning: Training models through trial and error to optimize decision-making.

- Technologies Used:

- TensorFlow and PyTorch for building machine learning models.

- Scikit-learn for traditional machine learning algorithms.

- Apache Mahout for scalable machine learning.
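Supervised learning on labeled failure history can be shown with a small logistic regression trained by gradient descent. The features, labels, and hyperparameters below are hypothetical. In practice, one would normally use a library implementation such as scikit-learn's `LogisticRegression`; this from-scratch version only exposes the mechanics.

```python
import math


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_logistic(X, y, lr=0.5, epochs=2000):
    """Fit P(failure | features) on labeled history by batch gradient descent."""
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for xi, yi in zip(X, y):
            # Gradient of the log-loss with respect to the linear score z.
            err = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - yi
            for j in range(d):
                grad_w[j] += err * xi[j]
            grad_b += err
        w = [wj - lr * g / n for wj, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def predict_proba(w, b, x):
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)


# Hypothetical labeled history: [scaled vibration, scaled temperature],
# label 1 = the device failed within 30 days of the reading.
X = [[0.1, 0.2], [0.2, 0.1], [0.15, 0.3], [0.8, 0.9], [0.9, 0.7], [0.85, 0.8]]
y = [0, 0, 0, 1, 1, 1]
w, b = train_logistic(X, y)
print(predict_proba(w, b, [0.9, 0.9]) > 0.5, predict_proba(w, b, [0.1, 0.1]) > 0.5)  # True False
```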

The AI Analysis Module is pivotal for organizations looking to harness data-driven decision-making. By leveraging AI, businesses can gain deeper insights, improve customer experiences, and drive innovation. At Rapid Innovation, we help our clients apply advanced AI techniques across the full cycle of data gathering and analysis, so they can stay ahead in their industries and achieve substantial returns on their investments.

2.1.4. Alert and Reporting System

An effective alert and reporting system is crucial for monitoring and managing operations within any organization. This system serves as a proactive measure to identify issues before they escalate, ensuring that stakeholders are informed and can take timely action.

- Real-time Alerts: The alert and reporting system should provide real-time notifications for critical events, such as system failures, security breaches, or performance degradation. This allows teams to respond quickly to mitigate potential damage, ultimately enhancing operational efficiency and reducing downtime.

- Customizable Reporting: Users should have the ability to customize reports based on specific metrics and KPIs relevant to their roles. This ensures that the information presented is actionable and tailored to the needs of different departments, leading to more informed decision-making and improved performance.

- Historical Data Analysis: The alert and reporting system should maintain historical data to analyze trends over time. This can help in identifying recurring issues and understanding the root causes of problems, enabling organizations to implement preventive measures and optimize processes.

- User-Friendly Interface: A simple and intuitive interface is essential for users to navigate the alert and reporting system effectively. This reduces the learning curve and increases adoption rates among team members, fostering a culture of data-driven decision-making.

- Integration with Other Tools: The alert and reporting system should seamlessly integrate with other tools and platforms used within the organization, such as project management software, CRM systems, and communication tools. This enhances collaboration and ensures that all relevant data is accessible in one place, streamlining workflows and improving overall productivity.
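Real-time alerts with role-specific routing can be expressed as simple threshold rules. The metrics, threshold values, and channel names below are hypothetical placeholders. Actual limits depend on the equipment and its manufacturer's guidance.

```python
from dataclasses import dataclass


@dataclass
class AlertRule:
    metric: str
    warn_above: float
    critical_above: float


# Hypothetical thresholds and routing table.
RULES = [
    AlertRule("temperature_c", warn_above=80.0, critical_above=95.0),
    AlertRule("vibration_mm_s", warn_above=7.0, critical_above=11.0),
]
ROUTES = {
    "warning": ["maintenance-dashboard"],
    "critical": ["maintenance-dashboard", "on-call-pager"],
}


def evaluate(device_id, metrics):
    """Return (severity, message, channels) tuples for one device snapshot."""
    alerts = []
    for rule in RULES:
        value = metrics.get(rule.metric)
        if value is None:
            continue  # metric not reported in this snapshot
        if value > rule.critical_above:
            severity = "critical"
        elif value > rule.warn_above:
            severity = "warning"
        else:
            continue
        message = f"{device_id}: {rule.metric}={value} ({severity})"
        alerts.append((severity, message, ROUTES[severity]))
    return alerts


for alert in evaluate("press-03", {"temperature_c": 97.5, "vibration_mm_s": 8.0}):
    print(alert)
```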

At Rapid Innovation, we specialize in developing and implementing alert and reporting systems to help our clients achieve their business goals efficiently and effectively, ultimately driving greater ROI through enhanced operational capabilities. If you're looking to enhance your alert and reporting system, consider hiring our Action Transformer Developers to assist you in this process. Additionally, you can explore the benefits of generative AI in automated financial reporting for further insights.

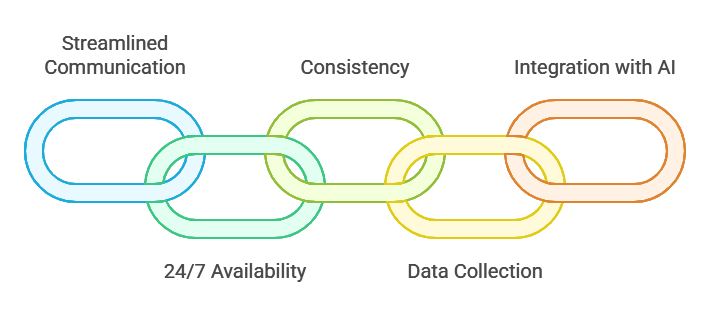

2.2. Integration Points

Integration points are critical for ensuring that various systems and applications within an organization work together efficiently. Identifying and implementing these integration points can lead to improved workflows and data consistency.

- API Connectivity: Application Programming Interfaces (APIs) are essential for enabling different software applications to communicate with each other. Organizations should leverage APIs to connect disparate systems, allowing for data exchange and functionality sharing, which can significantly enhance operational efficiency.

- Data Synchronization: Ensuring that data is synchronized across systems is vital for maintaining accuracy and consistency. Integration points should facilitate real-time data updates, reducing the risk of discrepancies and errors, thereby improving data integrity.

- Third-Party Integrations: Many organizations rely on third-party applications for specific functions, such as marketing automation or customer support. Identifying integration points with these tools can enhance overall efficiency and provide a more comprehensive view of operations, leading to better strategic planning.

- Workflow Automation: Integration points can enable workflow automation, reducing manual tasks and streamlining processes. This can lead to increased productivity and reduced operational costs, ultimately contributing to a higher return on investment.

- Security Considerations: When establishing integration points, it is essential to consider security implications. Data should be encrypted during transmission, and access controls should be implemented to protect sensitive information, ensuring compliance with industry standards.
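Data synchronization between two systems often requires a rule for resolving conflicts. One common, simple choice is last-write-wins, sketched below. It assumes that every record carries an `updated_at` timestamp from reasonably synchronized clocks. The record names and statuses are hypothetical.

```python
def merge_last_write_wins(local, remote):
    """Merge two {record_id: record} maps; the newer 'updated_at' wins.

    Records present in only one copy are kept as-is. On a timestamp tie,
    the local copy is retained.
    """
    merged = dict(local)
    for key, record in remote.items():
        if key not in merged or record["updated_at"] > merged[key]["updated_at"]:
            merged[key] = record
    return merged


# Hypothetical asset statuses held by a maintenance system and a monitoring platform.
local = {
    "pump-01": {"status": "in_service", "updated_at": 100},
    "fan-07": {"status": "in_service", "updated_at": 300},
}
remote = {
    "pump-01": {"status": "under_repair", "updated_at": 200},
    "fan-07": {"status": "retired", "updated_at": 250},
    "press-03": {"status": "in_service", "updated_at": 150},
}
merged = merge_last_write_wins(local, remote)
print({k: v["status"] for k, v in merged.items()})
```

Last-write-wins is easy to reason about, but it silently discards the older of two concurrent edits. Systems where both sides edit the same fields may need a richer merge strategy.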

2.3. Scalability Considerations

Scalability is a critical factor for any organization looking to grow and adapt to changing market conditions. A scalable system can accommodate increased workloads without compromising performance or requiring significant reconfiguration.

- Infrastructure Flexibility: Organizations should invest in flexible infrastructure that can easily scale up or down based on demand. This includes cloud-based solutions that allow for on-demand resource allocation, ensuring that businesses can respond swiftly to market changes.

- Load Balancing: Implementing load balancing techniques can help distribute workloads evenly across servers, ensuring that no single server becomes a bottleneck. This enhances performance and reliability as user demand increases, supporting sustained growth.

- Modular Architecture: A modular system architecture allows organizations to add or remove components as needed. This flexibility enables businesses to adapt to new requirements without overhauling the entire system, facilitating innovation and agility.

- Performance Monitoring: Continuous performance monitoring is essential for identifying potential scalability issues before they impact operations. Organizations should implement monitoring tools to track system performance and resource utilization, ensuring optimal performance at all times.

- Cost Management: As organizations scale, it is crucial to manage costs effectively. This includes evaluating the cost implications of scaling up infrastructure and ensuring that the return on investment justifies the expenses incurred, allowing for sustainable growth and profitability.
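Load balancing can follow several policies. The sketch below uses least connections, which sends each new request to the server currently handling the fewest. The server names are hypothetical, and real deployments would use a dedicated load balancer rather than application code.

```python
class LeastConnectionsBalancer:
    """Send each request to the server with the fewest in-flight requests."""

    def __init__(self, servers):
        self.active = {server: 0 for server in servers}

    def acquire(self):
        # min() returns the first server (in insertion order) among ties.
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server):
        # Call when a request finishes so the server's count goes back down.
        self.active[server] -= 1


lb = LeastConnectionsBalancer(["node-a", "node-b", "node-c"])
first_three = [lb.acquire() for _ in range(3)]  # one request per node
lb.release("node-b")                            # node-b finishes its request
print(first_three, lb.acquire())  # ['node-a', 'node-b', 'node-c'] node-b
```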

2.4. Security Architecture

Security architecture is a critical component in the design and implementation of any system, particularly in the context of information technology and data management. It encompasses the framework and strategies that protect data integrity, confidentiality, and availability.

- Components of Security Architecture:

- Policies and Standards: Establishing clear security policies and standards is essential for guiding the behavior of users and systems.

- Access Control: Implementing robust access control mechanisms ensures that only authorized users can access sensitive data.

- Network Security: Utilizing firewalls, intrusion detection systems, and secure communication protocols helps protect data in transit.

- Data Encryption: Encrypting data both at rest and in transit safeguards it from unauthorized access and breaches.

- Monitoring and Auditing: Continuous monitoring and regular audits help identify vulnerabilities and ensure compliance with security policies.

- Frameworks and Models:

- Zero Trust Architecture: This model assumes that threats could be internal or external, requiring strict verification for every user and device.

- Defense in Depth: This strategy involves multiple layers of security controls to protect data, making it harder for attackers to penetrate the system.

- SASE (Secure Access Service Edge) Architecture: This framework combines network security functions with wide area network (WAN) capabilities to support the secure access needs of users and devices.

- Compliance and Regulations: Adhering to regulations such as GDPR, HIPAA, and PCI-DSS is crucial for maintaining security and protecting user data.

- Emerging Technologies: Integrating AI and machine learning into security architecture can enhance threat detection and response, allowing organizations to address potential security risks proactively. This is particularly relevant for cloud security architecture, where organizations must secure their cloud environments against evolving threats. For more on how we can assist, see our insights on AI agents for IoT sensor integration.
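Access control combined with the zero-trust principle of verifying every request can be sketched as a deny-by-default role check. The roles, permission strings, and `device_verified` flag are hypothetical. Real systems would obtain identity and device posture from an identity provider and certificates rather than plain dictionaries.

```python
# Hypothetical roles and permissions for a device-monitoring platform.
ROLE_PERMISSIONS = {
    "viewer": {"read:telemetry"},
    "technician": {"read:telemetry", "ack:alert"},
    "admin": {"read:telemetry", "ack:alert", "write:device_config"},
}


def is_authorized(user, permission, device_verified):
    """Deny by default and check every request, not just the first.

    user:            dict with an 'authenticated' flag and a list of 'roles'
    permission:      the action being requested, e.g. "ack:alert"
    device_verified: whether the calling device passed its own check;
                     zero trust verifies the device as well as the user
    """
    if not user.get("authenticated") or not device_verified:
        return False
    return any(permission in ROLE_PERMISSIONS.get(role, set())
               for role in user.get("roles", []))


tech = {"authenticated": True, "roles": ["technician"]}
print(is_authorized(tech, "ack:alert", device_verified=True))            # True
print(is_authorized(tech, "write:device_config", device_verified=True))  # False
print(is_authorized(tech, "ack:alert", device_verified=False))           # False
```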

3. Data Collection and Preprocessing

Data collection and preprocessing are vital steps in the data analysis pipeline, ensuring that the data used for analysis is accurate, relevant, and ready for processing. This phase involves gathering data from various sources and preparing it for further analysis.

- Importance of Data Collection:

- Accurate data collection is essential for making informed decisions and helps in identifying trends, patterns, and anomalies in the data.

- Methods of Data Collection:

- Surveys and Questionnaires: Collecting data directly from users or stakeholders.

- Web Scraping: Extracting data from websites for analysis.

- APIs: Utilizing application programming interfaces to gather data from other software applications.

- Data Preprocessing Steps:

- Data Cleaning: Removing duplicates, correcting errors, and handling missing values to ensure data quality.

- Data Transformation: Converting data into a suitable format or structure for analysis, such as normalization or standardization.

- Data Integration: Combining data from different sources to create a unified dataset.
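The two transformation techniques named above differ in what they preserve. Normalization (min-max scaling) maps values into [0, 1], while standardization rescales them to zero mean and unit variance. Here is a minimal sketch with illustrative inputs:

```python
from statistics import mean, pstdev


def min_max_normalize(values):
    """Rescale to [0, 1]: (x - min) / (max - min)."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]  # constant input: no spread to scale
    return [(v - lo) / (hi - lo) for v in values]


def standardize(values):
    """Rescale to zero mean and unit (population) variance: (x - mean) / std."""
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]


temps = [20.0, 25.0, 30.0]
print(min_max_normalize(temps))  # [0.0, 0.5, 1.0]
print(standardize(temps))
```

Min-max scaling is sensitive to a single extreme value, because that value sets the range. Standardization is often preferred when outliers are present or when a model assumes centered inputs.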

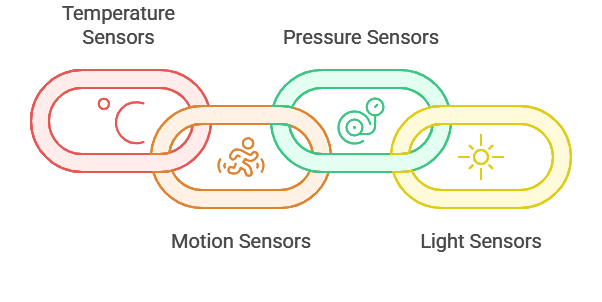

3.1. Sensor Types and Deployment

Sensors play a crucial role in data collection, especially in the context of the Internet of Things (IoT) and smart systems. Understanding the types of sensors and their deployment strategies is essential for effective data gathering.

- Types of Sensors:

- Temperature Sensors: Measure ambient temperature and are widely used in climate control systems.

- Motion Sensors: Detect movement and are commonly used in security systems and smart homes.

- Pressure Sensors: Monitor pressure levels in various applications, including industrial processes and weather stations.

- Light Sensors: Measure light intensity and are used in smart lighting systems.

- Deployment Strategies:

- Fixed Deployment: Sensors are installed in a specific location for continuous monitoring, such as in smart buildings.

- Mobile Deployment: Sensors are placed on mobile devices or vehicles to collect data on the go, useful in logistics and transportation.

- Distributed Networks: A network of sensors is deployed across a wide area to gather comprehensive data, often used in environmental monitoring.

- Challenges in Sensor Deployment:

- Connectivity Issues: Ensuring reliable communication between sensors and data collection systems can be challenging.

- Power Management: Many sensors operate on battery power, necessitating efficient energy management strategies.

- Data Security: Protecting the data collected by sensors from unauthorized access and breaches is crucial, particularly in the context of computer security architecture.

- Applications of Sensors:

- Smart Cities: Sensors are used for traffic management, waste management, and environmental monitoring.

- Healthcare: Wearable sensors monitor patient health metrics in real-time.

- Agriculture: Soil moisture sensors help optimize irrigation and improve crop yields.
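One common energy-management strategy for battery-powered sensors is report-by-exception: the sensor transmits only when a value has changed meaningfully, because radio transmission is typically a large share of a wireless node's energy budget. The deadband filter below sketches that idea. The threshold and sample data are hypothetical.

```python
def deadband_filter(readings, deadband):
    """Yield (ts, value) pairs worth transmitting.

    A reading is sent only if it differs from the last *transmitted* value by
    more than `deadband`; the first reading is always sent.
    """
    last_sent = None
    for ts, value in readings:
        if last_sent is None or abs(value - last_sent) > deadband:
            last_sent = value
            yield ts, value


# Hypothetical temperature samples taken once a minute.
samples = [(0, 20.0), (60, 20.1), (120, 20.2), (180, 20.6), (240, 20.7), (300, 21.5)]
sent = list(deadband_filter(samples, deadband=0.5))
print(sent)  # [(0, 20.0), (180, 20.6), (300, 21.5)]
```

The filter compares against the last transmitted value rather than the previous sample. This ensures that a slow drift is eventually reported even if each individual step stays below the deadband.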

At Rapid Innovation, we leverage our expertise in AI and data management to enhance security architecture and data collection processes for our clients. By implementing advanced security measures and efficient data handling strategies, we help organizations achieve greater ROI and ensure their systems are robust against emerging threats, including those related to cyber security architecture and cloud computing security architecture.

3.2. Data Sources

Data sources are critical for gathering insights and making informed decisions in various fields, including technology, environmental science, and business analytics. Understanding the types of data sources available can enhance the quality of analysis and improve outcomes. Two significant categories of data sources are device telemetry and environmental metrics.

3.2.1. Device Telemetry

Device telemetry refers to the automated process of collecting and transmitting data from devices to a central system for monitoring and analysis. This data can come from various devices, including sensors, IoT devices, and machinery.

Key aspects of device telemetry include:

- Real-time data collection: Telemetry systems can provide immediate insights, allowing for quick decision-making.

- Remote monitoring: Devices can be monitored from anywhere, reducing the need for physical presence.

- Data accuracy: Automated data collection minimizes human error, ensuring more reliable data.

- Scalability: Telemetry systems can easily scale to accommodate more devices as needed.

Applications of device telemetry are vast and include:

- Healthcare: Wearable devices collect health metrics like heart rate and activity levels, providing valuable data for patient monitoring. Rapid Innovation can help healthcare providers implement AI-driven analytics to interpret this data, leading to improved patient outcomes and operational efficiencies.

- Manufacturing: Machinery telemetry helps in predictive maintenance, reducing downtime and improving efficiency. By integrating AI algorithms, Rapid Innovation enables manufacturers to predict equipment failures before they occur, thus maximizing ROI through reduced maintenance costs.

- Transportation: Fleet management systems use telemetry to track vehicle performance and optimize routes. Our AI solutions can analyze this data to enhance route planning, reduce fuel consumption, and improve overall fleet efficiency.
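A telemetry pipeline needs an agreed message format. The sketch below assumes a hypothetical JSON schema with a per-device sequence number, which lets the central system detect dropped messages. Dropped messages are a common concern for remote devices on unreliable links.

```python
import json
import time


def build_telemetry_message(device_id, metrics, seq):
    """Serialize one snapshot of device metrics as compact JSON.

    Hypothetical schema: 'seq' increments per message so the receiver can
    spot gaps; 'ts' is Unix epoch seconds at the device.
    """
    return json.dumps(
        {"device_id": device_id, "seq": seq, "ts": int(time.time()), "metrics": metrics},
        separators=(",", ":"),
    )


def detect_gaps(received_seqs):
    """Return sequence numbers missing between the lowest and highest received."""
    if not received_seqs:
        return []
    expected = set(range(min(received_seqs), max(received_seqs) + 1))
    return sorted(expected - set(received_seqs))


print(build_telemetry_message("truck-12", {"speed_kmh": 82.5, "engine_temp_c": 91.0}, seq=7))
print(detect_gaps([1, 2, 4, 5, 7]))  # [3, 6]
```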

3.2.2. Environmental Metrics

Environmental metrics involve the collection and analysis of data related to environmental conditions. This data is crucial for understanding the impact of human activities on the environment and for developing strategies for sustainability.

Important components of environmental metrics include:

- Air quality: Measurements of pollutants and particulate matter help assess the health of the atmosphere.

- Water quality: Data on chemical composition and biological indicators of water bodies inform conservation efforts.

- Climate data: Temperature, humidity, and precipitation metrics are essential for climate modeling and forecasting.

The significance of environmental metrics can be seen in various sectors:

- Urban planning: Data on air and noise pollution can guide the development of greener cities. Rapid Innovation can assist urban planners by providing AI tools that analyze environmental data to create sustainable urban designs.

- Agriculture: Farmers use environmental metrics to optimize irrigation and crop management, enhancing yield while conserving resources. Our AI solutions can analyze weather patterns and soil conditions to provide actionable insights, leading to increased agricultural productivity and sustainability.

- Policy-making: Governments rely on environmental data to create regulations aimed at reducing pollution and protecting natural resources. Rapid Innovation can support policymakers by developing AI models that simulate the impact of various regulations, helping to create more effective environmental policies.

In conclusion, both device telemetry and environmental metrics serve as vital data sources that contribute to informed decision-making across multiple domains. By leveraging these data sources, organizations can enhance operational efficiency, promote sustainability, and improve overall outcomes. Rapid Innovation is committed to helping clients harness the power of AI to achieve these goals effectively and efficiently, ultimately driving greater ROI.

Hands-on projects can also build data analysis capabilities, from SQL and Python data analysis projects for beginners to larger big data analytics and procurement data analytics initiatives. Open source data analytics tools can benefit organizations seeking cost-effective solutions. Platforms such as Azure Synapse (for data warehousing) and Adobe Analytics data feeds can further streamline data management and analysis.

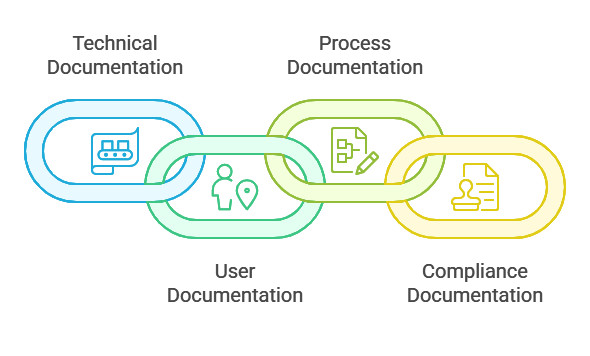

3.2.3. Historical Failure Data

Historical failure data is crucial for understanding the reliability and performance of systems and components over time. This data provides insights into past failures, enabling organizations to identify patterns and trends that can inform future maintenance and operational strategies.

- Helps in predictive maintenance: By analyzing historical failure data, organizations can predict when a component is likely to fail, allowing for timely interventions. Rapid Innovation leverages advanced AI algorithms to analyze this data, enabling clients to implement predictive maintenance strategies that significantly reduce downtime and maintenance costs.

- Identifies root causes: Understanding the reasons behind past failures can help in addressing underlying issues, reducing the likelihood of recurrence. Our AI-driven analytics tools assist clients in pinpointing root causes, leading to more effective solutions and improved system reliability.

- Supports risk assessment: Historical failure data can be used to evaluate the risk associated with specific components or systems, aiding in prioritization of maintenance efforts. Rapid Innovation's data analytics capabilities empower organizations to assess risks accurately, ensuring that resources are allocated where they are needed most.

- Enhances decision-making: Access to comprehensive failure data allows for informed decision-making regarding resource allocation and operational changes. Our AI solutions provide actionable insights that help clients make strategic decisions, ultimately driving greater ROI.

- Facilitates compliance: Many industries require documentation of historical failure data for regulatory compliance, making it essential for organizations to maintain accurate records. Rapid Innovation assists clients in automating compliance processes, ensuring that they meet regulatory requirements efficiently. For more information on how we can help, check out our Enterprise AI Development services.
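As a minimal sketch of how historical failure data can feed predictive maintenance and risk assessment, the snippet below computes mean time between failures (MTBF) per component and ranks components by it. The log layout, the component names and the operating hours are all hypothetical, and MTBF is only one common reliability metric, not necessarily the one a given maintenance program uses.

```python
from collections import defaultdict

# Hypothetical failure log: (component_id, operating_hours_at_failure)
failure_log = [
    ("pump-A", 120.0), ("pump-A", 410.0), ("pump-A", 700.0),
    ("fan-B", 300.0), ("fan-B", 900.0),
]

def mtbf_by_component(log):
    """Mean time between failures per component, from sorted failure times.

    Components with fewer than two recorded failures are skipped, because
    at least one interval is needed to estimate a mean.
    """
    times = defaultdict(list)
    for component, hours in log:
        times[component].append(hours)
    result = {}
    for component, ts in times.items():
        ts.sort()
        if len(ts) < 2:
            continue
        gaps = [b - a for a, b in zip(ts, ts[1:])]
        result[component] = sum(gaps) / len(gaps)
    return result

mtbf = mtbf_by_component(failure_log)
# Rank components by risk: a shorter MTBF means more frequent failures.
ranked = sorted(mtbf, key=mtbf.get)
print(mtbf)    # {'pump-A': 290.0, 'fan-B': 600.0}
print(ranked)  # ['pump-A', 'fan-B']
```

Ranking by MTBF is a simple way to decide where maintenance effort should go first; a fuller analysis would also weigh the cost and safety impact of each failure.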

3.2.4. Maintenance Records

Maintenance records are essential for tracking the upkeep and servicing of equipment and systems. These records provide a detailed history of maintenance activities, which is vital for ensuring operational efficiency and safety.

- Provides a maintenance history: Detailed records help in understanding the frequency and type of maintenance performed on equipment, which can inform future maintenance schedules. Our solutions enable clients to maintain comprehensive maintenance histories, optimizing their maintenance planning.

- Aids in warranty claims: Accurate maintenance records are often necessary for validating warranty claims, ensuring that organizations can recover costs for repairs or replacements. Rapid Innovation's systems streamline record-keeping, making it easier for clients to manage warranty claims effectively.

- Supports performance analysis: By reviewing maintenance records, organizations can assess the effectiveness of their maintenance strategies and make necessary adjustments. Our AI tools provide insights that help clients refine their maintenance approaches, leading to improved performance.

- Enhances accountability: Maintenance records create a clear trail of responsibility, ensuring that all maintenance activities are documented and can be reviewed if issues arise. Rapid Innovation's solutions promote accountability by providing transparent and accessible maintenance records.

- Improves resource management: Understanding maintenance needs through records allows organizations to allocate resources more effectively, optimizing both time and budget. Our data-driven insights help clients manage their resources efficiently, maximizing their operational effectiveness.
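To illustrate how maintenance records can be turned into a maintenance history, here is a small pandas sketch that summarizes a log by asset: how often each type of work was done, when each asset was last serviced, and the average interval between services. The column names (`asset_id`, `date`, `type`) and the records themselves are hypothetical.

```python
import pandas as pd

# Hypothetical maintenance log; column names are illustrative only.
records = pd.DataFrame({
    "asset_id": ["press-1", "press-1", "press-1", "lathe-2", "lathe-2"],
    "date": pd.to_datetime(["2024-01-05", "2024-03-01", "2024-04-20",
                            "2024-02-10", "2024-05-10"]),
    "type": ["inspection", "repair", "inspection", "inspection", "inspection"],
})

# How often each type of work was done on each asset.
counts = records.groupby(["asset_id", "type"]).size().unstack(fill_value=0)

# Most recent service date per asset, e.g. to check schedule adherence.
last_service = records.groupby("asset_id")["date"].max()

# Average days between consecutive maintenance events per asset.
records = records.sort_values(["asset_id", "date"])
records["days_since_prev"] = records.groupby("asset_id")["date"].diff().dt.days
avg_interval = records.groupby("asset_id")["days_since_prev"].mean()

print(counts)
print(last_service)
print(avg_interval)
```

The first event for each asset has no predecessor, so its interval is missing and is simply ignored by the mean.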

3.3. Data Quality Management

Data quality management (DQM) is a systematic approach to ensuring that data is accurate, consistent, and reliable. In the context of maintenance and operational data, DQM is essential for making informed decisions and optimizing processes.

- Ensures data accuracy: DQM processes help in identifying and correcting errors in data, ensuring that organizations are working with reliable information. Rapid Innovation employs AI techniques to enhance data accuracy, enabling clients to trust their data for critical decision-making.

- Promotes consistency: By standardizing data entry and management practices, DQM helps maintain consistency across datasets, which is crucial for analysis and reporting. Our solutions ensure that clients' data management practices are aligned, facilitating seamless analysis.

- Facilitates data integration: High-quality data can be easily integrated from various sources, enabling comprehensive analysis and insights. Rapid Innovation's expertise in data integration allows clients to harness the full potential of their data, driving better business outcomes.

- Supports compliance: Many industries have strict data quality standards; effective DQM ensures that organizations meet these requirements, avoiding potential penalties. Our DQM solutions help clients navigate compliance challenges with ease, safeguarding their operations.

- Enhances decision-making: Reliable data is the foundation of effective decision-making, allowing organizations to make strategic choices based on accurate information. Rapid Innovation empowers clients with high-quality data, enabling them to make informed decisions that drive growth and efficiency.
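A minimal sketch of the accuracy and consistency checks described above, assuming temperature readings keyed by sensor and timestamp: it counts duplicates, missing values and physically implausible values, then applies a simple cleaning step. The column names and the valid range of -40 to 150 °C are assumptions for illustration, not a standard.

```python
import numpy as np
import pandas as pd

# Hypothetical sensor readings containing typical quality problems.
df = pd.DataFrame({
    "sensor_id": ["s1", "s1", "s2", "s2", "s2"],
    "timestamp": pd.to_datetime(["2024-01-01 00:00", "2024-01-01 00:00",
                                 "2024-01-01 00:00", "2024-01-01 00:01",
                                 "2024-01-01 00:02"]),
    "temp_c": [21.5, 21.5, np.nan, 450.0, 22.1],
})

def quality_report(frame, valid_range=(-40.0, 150.0)):
    """Count common data-quality issues; the valid range is an assumed spec."""
    lo, hi = valid_range
    temp = frame["temp_c"]
    return {
        "duplicates": int(frame.duplicated(["sensor_id", "timestamp"]).sum()),
        "missing": int(temp.isna().sum()),
        "out_of_range": int(((temp < lo) | (temp > hi)).sum()),
    }

def clean(frame, valid_range=(-40.0, 150.0)):
    """Drop duplicate readings and mask implausible values as missing."""
    lo, hi = valid_range
    out = frame.drop_duplicates(["sensor_id", "timestamp"]).copy()
    out.loc[~out["temp_c"].between(lo, hi), "temp_c"] = np.nan
    return out

print(quality_report(df))       # {'duplicates': 1, 'missing': 1, 'out_of_range': 1}
cleaned = clean(df)
print(quality_report(cleaned))  # {'duplicates': 0, 'missing': 2, 'out_of_range': 0}
```

Masking implausible values as missing, rather than deleting rows, keeps the timestamp in the record so that a later imputation step can decide how to fill it.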

3.4. Real-time Data Processing

Real-time data processing refers to the immediate processing of data as it is generated or received. This capability is crucial for businesses that rely on timely information to make decisions.

- Enables instant insights: Organizations can analyze data as it arrives, allowing for quick decision-making. For instance, a retail company can adjust its inventory in real time based on customer purchasing patterns, reducing stockouts and increasing sales. This is a key aspect of real-time data integration.

- Supports various applications: Industries such as finance, healthcare, and e-commerce use real-time analytics to monitor transactions, patient data, and customer behavior. For example, financial institutions can use real-time data analysis to detect fraudulent transactions as they occur, minimizing losses.

- Enhances customer experience: Businesses can provide personalized services and recommendations based on real-time data, improving customer satisfaction. A streaming service, for example, can use real-time stream analytics to suggest content based on a user's current viewing habits.

- Relies on specialized tools: Tools like Apache Kafka, Apache Flink, and Amazon Kinesis are popular for handling real-time data streams, enabling organizations to harness the power of their data effectively. Kafka, in particular, is noted for its scalability and reliability in real-time streaming.

- Facilitates predictive analytics: By processing data in real time, companies can predict trends and behaviors and act on them proactively. For instance, a healthcare provider can anticipate patient needs and allocate resources accordingly, which depends on reliable real-time ETL processes. Rapid Innovation offers MLOps consulting services to help organizations optimize their real-time data processing capabilities. High-quality data annotation also plays an important role in improving the AI and machine learning models that consume these data streams.
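Stream processors such as Kafka Streams or Flink typically run windowed computations over each incoming event. The pure-Python sketch below shows only that core idea, a fixed-size sliding window that flags a reading far above the recent mean; it does not use or imitate the API of any of the tools named above, and the window size and tolerance are hypothetical.

```python
from collections import deque

class RollingMonitor:
    """Keeps a fixed-size window over a stream and flags readings that
    exceed the window mean by more than `tolerance` (an assumed rule)."""

    def __init__(self, window=5, tolerance=10.0):
        self.values = deque(maxlen=window)  # oldest value drops out automatically
        self.tolerance = tolerance

    def update(self, value):
        # Compare against the window *before* adding the new value.
        alert = False
        if len(self.values) == self.values.maxlen:
            mean = sum(self.values) / len(self.values)
            alert = value - mean > self.tolerance
        self.values.append(value)
        return alert

stream = [50, 51, 49, 50, 52, 51, 75, 50]
monitor = RollingMonitor(window=5, tolerance=10.0)
alerts = [i for i, v in enumerate(stream) if monitor.update(v)]
print(alerts)  # [6]
```

Each update costs O(window) time and memory is bounded by the window size, which is why windowed state like this scales to unbounded streams.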

3.5. Data Storage Solutions

Data storage solutions are essential for managing the vast amounts of data generated by organizations. Choosing the right storage solution can significantly impact data accessibility, security, and cost.

- Types of storage:

- On-premises storage: Physical servers located within the organization, offering control but requiring maintenance. This option is suitable for businesses with strict data compliance requirements.

- Cloud storage: Services like Amazon S3, Google Cloud Storage, and Microsoft Azure Blob Storage provide scalable and flexible storage options, allowing businesses to pay only for what they use.

- Hybrid storage: Combines on-premises and cloud solutions, allowing businesses to optimize costs and performance while maintaining control over sensitive data.

- Considerations for selection:

- Scalability: The ability to grow with the organization’s data needs is crucial for long-term success.

- Security: Ensuring data is protected from breaches and unauthorized access is paramount, especially in industries like finance and healthcare.

- Cost-effectiveness: Balancing performance with budget constraints helps organizations maximize their ROI.

- Accessibility: Ensuring data can be easily accessed by authorized users enhances operational efficiency.

- Emerging technologies:

- Object storage: Ideal for unstructured data, offering high scalability and durability, which is essential for big data applications.

- Data lakes: Centralized repositories that allow for the storage of structured and unstructured data at scale, enabling advanced analytics and machine learning initiatives.

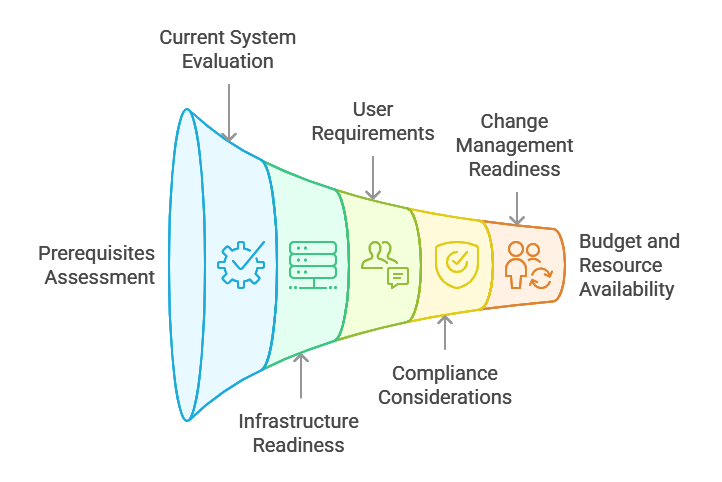

4. AI and Machine Learning Implementation

AI and machine learning (ML) are transforming how businesses operate by enabling data-driven decision-making and automating processes. Implementing these technologies can lead to significant competitive advantages.

- Key components of implementation:

- Data collection: Gathering high-quality data is crucial for training AI models. Rapid Innovation assists clients in establishing robust data pipelines to ensure data integrity.

- Model selection: Choosing the right algorithms based on the specific use case, such as supervised or unsupervised learning, is essential for achieving desired outcomes.

- Training and validation: Training models on historical data and validating their performance to ensure accuracy is a critical step in the implementation process.

- Applications of AI and ML:

- Predictive analytics: Forecasting trends and behaviors to inform business strategies, such as predicting customer churn and optimizing marketing efforts.

- Natural language processing (NLP): Enhancing customer interactions through chatbots and sentiment analysis, leading to improved customer engagement and satisfaction.

- Image recognition: Automating quality control in manufacturing or enhancing security systems, which can significantly reduce operational costs.

- Challenges in implementation:

- Data quality: Ensuring the data used is clean and relevant for accurate model training is a challenge that Rapid Innovation helps clients overcome through data cleansing and preprocessing techniques.

- Integration: Seamlessly incorporating AI solutions into existing systems and workflows is vital for maximizing the benefits of AI technologies.

- Talent acquisition: Finding skilled professionals who can develop and manage AI initiatives is a common hurdle, and Rapid Innovation offers consulting services to bridge this gap.

- Future trends:

- Increased automation: More processes will be automated, reducing human intervention and increasing efficiency.

- Ethical AI: Growing focus on responsible AI practices to mitigate bias and ensure fairness will shape the future of AI development.

- Edge computing: Processing data closer to the source to improve response times and reduce latency will become increasingly important as IoT devices proliferate.

By leveraging Rapid Innovation's expertise in real-time data processing (including real-time stream processing and data ingestion), data storage solutions, and AI implementation, organizations can achieve their business goals efficiently and effectively, ultimately leading to greater ROI.

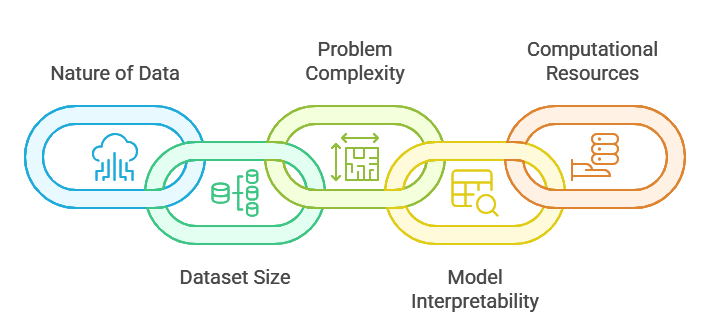

4.1. Model Selection

Model selection is a critical step in the machine learning process. It involves choosing the most appropriate algorithm or model to solve a specific problem based on the data available. The right model can significantly impact the performance and accuracy of predictions, ultimately leading to greater ROI for your business. Factors to consider during model selection include:

- Nature of the data: structured vs. unstructured

- Size of the dataset

- Complexity of the problem

- Required interpretability of the model

- Computational resources available

Choosing the right model often requires experimentation and validation to ensure that it generalizes well to unseen data. At Rapid Innovation, we leverage our expertise to guide clients through this process, ensuring that the selected model aligns with their business objectives and maximizes efficiency. This includes feature selection and a range of machine learning model selection strategies.
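One common way to run the experimentation described above is to score several candidate models with the same cross-validation scheme and compare them. The sketch below does this with scikit-learn on a synthetic dataset from `make_classification`; the three candidates and the F1 metric are illustrative choices, not a recommendation for any particular problem.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in for a labelled dataset (no real data implied).
X, y = make_classification(n_samples=500, n_features=10, random_state=0)

candidates = {
    "logistic_regression": LogisticRegression(max_iter=1000),
    "decision_tree": DecisionTreeClassifier(random_state=0),
    "random_forest": RandomForestClassifier(n_estimators=100, random_state=0),
}

# Score each candidate with 5-fold cross-validation on the same data.
scores = {name: cross_val_score(model, X, y, cv=5, scoring="f1").mean()
          for name, model in candidates.items()}

best = max(scores, key=scores.get)
for name, s in sorted(scores.items(), key=lambda kv: -kv[1]):
    print(f"{name}: mean F1 = {s:.3f}")
print("selected:", best)
```

The highest cross-validated score is only one input to the decision; interpretability and available computational resources, listed above, can justify choosing a slightly lower-scoring model.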

4.1.1. Supervised Learning Approaches

Supervised learning is a type of machine learning where the model is trained on labeled data. This means that the input data is paired with the correct output, allowing the model to learn the relationship between the two. Common supervised learning approaches include:

- Regression: Used for predicting continuous values. Examples include linear regression and polynomial regression.

- Classification: Used for predicting categorical outcomes. Examples include logistic regression, decision trees, and support vector machines (SVM).

- Ensemble Methods: Techniques like random forests and gradient boosting combine multiple models to improve accuracy and robustness.

Key characteristics of supervised learning include:

- Requires a labeled dataset for training.

- Performance can be evaluated using metrics like accuracy, precision, recall, and F1 score.

- Suitable for problems where historical data with known outcomes (labels) is available, so the model can learn the mapping from inputs to outputs.

By employing supervised learning techniques, Rapid Innovation has helped clients in various industries enhance their predictive capabilities, leading to improved decision-making and increased profitability. This often involves choosing a machine learning model that best fits the data and problem context.
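As a minimal supervised-learning sketch, the snippet below trains a decision tree on synthetic labelled data and reports the metrics listed above. The data come from scikit-learn's `make_classification`, with label 1 standing in for a hypothetical "failed" class that makes up about 10% of samples.

```python
from sklearn.datasets import make_classification
from sklearn.metrics import accuracy_score, f1_score, precision_score, recall_score
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Synthetic labelled data: label 1 stands in for "failed", 0 for "healthy".
X, y = make_classification(n_samples=1000, n_features=8, weights=[0.9, 0.1],
                           random_state=42)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, stratify=y, random_state=42)

clf = DecisionTreeClassifier(max_depth=5, random_state=42).fit(X_train, y_train)
pred = clf.predict(X_test)

metrics = {
    "accuracy": accuracy_score(y_test, pred),
    "precision": precision_score(y_test, pred, zero_division=0),
    "recall": recall_score(y_test, pred, zero_division=0),
    "f1": f1_score(y_test, pred, zero_division=0),
}
print(metrics)
```

With an imbalanced target like this one, accuracy can look high even when many failures are missed, which is why precision, recall and F1 are reported alongside it.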

4.1.2. Unsupervised Learning Methods

Unsupervised learning, in contrast, deals with unlabeled data. The model attempts to learn the underlying structure or distribution of the data without any explicit guidance on what the output should be. Common unsupervised learning methods include:

- Clustering: Groups similar data points together. Examples include k-means clustering and hierarchical clustering.

- Dimensionality Reduction: Reduces the number of features while preserving essential information. Techniques include Principal Component Analysis (PCA) and t-distributed Stochastic Neighbor Embedding (t-SNE).

- Anomaly Detection: Identifies unusual data points that do not conform to expected patterns. This is useful in fraud detection and network security.

Key characteristics of unsupervised learning include:

- Does not require labeled data, making it useful for exploratory data analysis.

- Performance evaluation can be challenging since there are no predefined labels.

- Suitable for discovering hidden patterns or intrinsic structures in data.

Both supervised and unsupervised learning approaches have their unique advantages and applications. The choice between them depends on the specific problem, the nature of the data, and the desired outcomes. Rapid Innovation's tailored approach ensures that clients can effectively harness these methodologies to uncover insights and drive strategic initiatives, ultimately achieving their business goals efficiently and effectively. This includes rigorous model evaluation and selection, adaptive deep learning model selection on embedded systems, and established best practices for model selection and validation in machine learning. For a more comprehensive understanding of these concepts, refer to our machine learning resources.
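The sketch below combines two of the unsupervised methods above: PCA reduces six features to two, and k-means then groups the unlabelled points. The data are synthetic readings from two hypothetical operating regimes; no labels are passed to either algorithm.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.decomposition import PCA
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(0)
# Unlabelled synthetic readings from two hypothetical operating regimes,
# each with 6 features.
regime_a = rng.normal(loc=0.0, scale=1.0, size=(100, 6))
regime_b = rng.normal(loc=5.0, scale=1.0, size=(100, 6))
X = np.vstack([regime_a, regime_b])

X_scaled = StandardScaler().fit_transform(X)

# Dimensionality reduction: keep 2 components for inspection or plotting.
pca = PCA(n_components=2).fit(X_scaled)
X_2d = pca.transform(X_scaled)

# Clustering: look for 2 groups without using any labels.
km = KMeans(n_clusters=2, n_init=10, random_state=0).fit(X_2d)
labels = km.labels_

print("explained variance ratio:", pca.explained_variance_ratio_.round(3))
print("cluster sizes:", np.bincount(labels))
```

Here the number of clusters is set to 2 because the synthetic data were built that way; on real data it would itself have to be chosen, for example with silhouette scores.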

4.1.3. Deep Learning Models

Deep learning models are a subset of machine learning that utilize neural networks with multiple layers to analyze various forms of data. These models have gained immense popularity due to their ability to automatically learn representations from raw data, making them particularly effective for complex tasks.

- Neural Networks: Deep learning primarily relies on artificial neural networks, which are designed to mimic the way the human brain processes information. At Rapid Innovation, we leverage these networks, including deep neural networks and deep belief networks, to create tailored solutions that enhance decision-making processes for our clients.

- Convolutional Neural Networks (CNNs): These are particularly effective for image processing tasks, as they can capture spatial hierarchies in images. CNNs, also referred to as convolutional neural nets, are widely used in applications such as facial recognition and object detection. By implementing CNNs, we have helped clients in retail optimize their inventory management through visual recognition systems.

- Recurrent Neural Networks (RNNs): RNNs are designed for sequential data, making them ideal for tasks like natural language processing and time series analysis. Because they maintain a hidden state that carries information from previous inputs, they can capture context, which is crucial for understanding language. Our expertise in RNNs has enabled clients in the finance sector to enhance their predictive analytics for stock market trends.

- Transfer Learning: This technique allows models trained on one task to be adapted for another, significantly reducing the amount of data and time required for training. For instance, a model trained on a large dataset can be fine-tuned for a specific application, enhancing performance. Rapid Innovation has successfully utilized transfer learning to accelerate project timelines for clients in healthcare, improving diagnostic accuracy with limited data.

- Applications: Deep learning models, including convolutional networks and deep neural nets, are used in various fields, including healthcare for disease diagnosis, finance for fraud detection, and autonomous vehicles for navigation. Our tailored deep learning solutions have consistently delivered greater ROI for clients by streamlining operations and enhancing service delivery. For advanced solutions, consider our transformer model development and learn more about the types of artificial neural networks.
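To make the idea of an RNN "remembering previous inputs" concrete, here is a toy forward pass of a basic (Elman) recurrent cell in NumPy. The weights are random and untrained, the sizes are hypothetical, and this is not how a framework layer is implemented; in practice one would use a trained recurrent layer from a library such as PyTorch or Keras.

```python
import numpy as np

def rnn_forward(inputs, W_xh, W_hh, b_h):
    """Run a minimal Elman RNN over a sequence and return every hidden state.

    inputs: array of shape (T, input_dim). The hidden state h_t depends on
    x_t and on h_{t-1}, which is how the network carries earlier context.
    """
    h = np.zeros(W_hh.shape[0])
    states = []
    for x_t in inputs:
        h = np.tanh(W_xh @ x_t + W_hh @ h + b_h)
        states.append(h)
    return np.stack(states)

rng = np.random.default_rng(0)
input_dim, hidden_dim, T = 3, 4, 5
W_xh = rng.normal(scale=0.5, size=(hidden_dim, input_dim))
W_hh = rng.normal(scale=0.5, size=(hidden_dim, hidden_dim))
b_h = np.zeros(hidden_dim)

seq = rng.normal(size=(T, input_dim))  # e.g. 5 time steps of 3 sensor channels
H = rnn_forward(seq, W_xh, W_hh, b_h)
print(H.shape)  # (5, 4)

# Changing only the first input also changes the final hidden state.
seq2 = seq.copy()
seq2[0] += 1.0
H2 = rnn_forward(seq2, W_xh, W_hh, b_h)
print(np.abs(H2[-1] - H[-1]).max())
```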

4.1.4. Hybrid Approaches

Hybrid approaches combine different machine learning techniques to leverage the strengths of each method. This can lead to improved performance and robustness in various applications.

- Ensemble Learning: This technique involves combining multiple models to produce a single output. Methods like bagging and boosting are common ensemble techniques that can enhance predictive accuracy. At Rapid Innovation, we implement ensemble learning to improve the reliability of our clients' predictive models.

- Combining Deep Learning with Traditional Methods: Hybrid models may integrate deep learning with traditional machine learning algorithms. For example, using deep learning for feature extraction followed by a traditional classifier can yield better results. Our clients have benefited from this approach, achieving higher accuracy in their data-driven decisions.

- Multi-Modal Learning: This approach involves integrating data from different sources or modalities, such as text, images, and audio. By combining these diverse data types, hybrid models can provide a more comprehensive understanding of the problem at hand. Rapid Innovation has successfully applied multi-modal learning in projects that require a holistic view of customer behavior, leading to improved marketing strategies.

- Applications: Hybrid approaches are particularly useful in fields like healthcare, where combining clinical data with imaging data can lead to better diagnostic models. They are also used in recommendation systems, where user behavior and item characteristics are analyzed together. Our expertise in hybrid models has enabled clients to achieve significant improvements in their operational efficiency and customer satisfaction.
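One simple, widely available form of hybrid model is stacking, where different base models feed their predictions into a final model that learns how to combine them. The scikit-learn sketch below stacks a random forest and a support vector machine under a logistic regression on synthetic data; it illustrates the ensemble side of hybrid modeling only, not the deep-feature-extraction or multi-modal variants described above.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier, StackingClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.svm import SVC

X, y = make_classification(n_samples=600, n_features=12, random_state=1)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=1)

# Base learners of different kinds; a logistic regression combines their
# out-of-fold predictions (cv=5) into the final output.
stack = StackingClassifier(
    estimators=[
        ("rf", RandomForestClassifier(n_estimators=100, random_state=1)),
        ("svc", SVC(random_state=1)),
    ],
    final_estimator=LogisticRegression(max_iter=1000),
    cv=5,
)
stack.fit(X_train, y_train)
print("held-out accuracy:", round(stack.score(X_test, y_test), 3))
```

Training the final model on out-of-fold predictions, rather than on predictions for data the base models have already seen, keeps the combiner from simply trusting whichever base model overfits most.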

4.2. Feature Engineering

Feature engineering is the process of selecting, modifying, or creating new features from raw data to improve the performance of machine learning models. It plays a crucial role in the success of any machine learning project.

- Importance of Features: The quality of features directly impacts the model's ability to learn and make accurate predictions. Well-engineered features can significantly enhance model performance. At Rapid Innovation, we prioritize feature engineering to ensure our clients' models are robust and effective.

- Techniques:

- Feature Selection: This involves identifying the most relevant features from the dataset, which can reduce overfitting and improve model interpretability. Our team employs advanced techniques to ensure that only the most impactful features are utilized in model training.

- Feature Transformation: Techniques like normalization, scaling, and encoding categorical variables help prepare data for modeling. For instance, scaling numerical features puts them on comparable ranges, so that features with large raw values do not dominate distance-based or gradient-based models. We guide our clients through these transformations to optimize their data for analysis.

- Creating New Features: Sometimes, combining existing features or deriving new ones can provide additional insights. For example, creating interaction terms or aggregating data can reveal hidden patterns. Our innovative feature creation strategies have led to enhanced model performance for our clients.

- Tools: Various tools and libraries, such as Scikit-learn and Pandas, facilitate feature engineering by providing functions for data manipulation and transformation. Rapid Innovation utilizes these tools to streamline the feature engineering process, ensuring efficiency and effectiveness.

- Impact on Model Performance: Effective feature engineering can lead to significant improvements in model accuracy and efficiency, making it a critical step in the machine learning pipeline. Our commitment to excellence in feature engineering has consistently resulted in higher ROI for our clients, enabling them to achieve their business goals with greater efficiency.
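The techniques above map onto a few lines of pandas and scikit-learn. This sketch, using hypothetical machine readings and column names, creates a per-machine rolling mean and an interaction term, then scales the numeric columns and one-hot encodes a categorical one with a `ColumnTransformer`.

```python
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.preprocessing import OneHotEncoder, StandardScaler

# Hypothetical per-machine readings; column names are illustrative.
df = pd.DataFrame({
    "machine": ["m1", "m1", "m1", "m2", "m2", "m2"],
    "line": ["A", "A", "A", "B", "B", "B"],
    "vibration": [0.20, 0.25, 0.40, 0.10, 0.12, 0.11],
    "temp_c": [60.0, 62.0, 70.0, 55.0, 55.5, 56.0],
})

# Creating new features: rolling mean per machine and an interaction term.
df["vib_roll3"] = (df.groupby("machine")["vibration"]
                     .transform(lambda s: s.rolling(3, min_periods=1).mean()))
df["vib_x_temp"] = df["vibration"] * df["temp_c"]

# Transformation: scale numeric columns and one-hot encode the categorical one.
numeric = ["vibration", "temp_c", "vib_roll3", "vib_x_temp"]
prep = ColumnTransformer([
    ("num", StandardScaler(), numeric),
    ("cat", OneHotEncoder(handle_unknown="ignore"), ["line"]),
])
features = prep.fit_transform(df)
print(features.shape)  # (6, 6): 4 scaled numeric + 2 one-hot columns
```

Grouping the rolling mean by machine prevents readings from one machine leaking into another machine's feature; in a real pipeline the transformer would be fitted on training data only.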

4.3. Model Training Process

The model training process is a critical phase in machine learning where the algorithm learns from the training data. This process involves several key steps:

- Data Preparation: Before training, data must be cleaned and preprocessed. This includes handling missing values, normalizing data, and encoding categorical variables. Proper data preparation ensures that the model can learn effectively. Rapid Innovation excels in this area, helping clients streamline their data for optimal model performance. Careful preparation of training data is particularly important when training machine learning models.

- Choosing a Model: Selecting the right algorithm is essential. Different models, such as linear regression, decision trees, or neural networks, have unique strengths and weaknesses. The choice depends on the problem type, data characteristics, and desired outcomes. Rapid Innovation provides expert consulting to guide clients in selecting the most suitable model for their specific needs, from classical machine learning algorithms to neural networks.

- Splitting the Data: The dataset is typically divided into training, validation, and test sets. The training set is used to train the model, while the validation set helps tune hyperparameters. The test set evaluates the model's performance on unseen data. Our team ensures that this process is executed correctly to maximize the model's effectiveness, especially in machine learning training and testing.

- Training the Model: During training, the model learns by adjusting its parameters to minimize the error between predicted and actual outcomes, typically using optimization algorithms such as gradient descent. Rapid Innovation employs advanced techniques to enhance the training process, helping clients achieve better results in less time. These include gradient-boosting frameworks such as XGBoost, online machine learning, and fine-tuning of language models.

- Monitoring Performance: Throughout the training process, it’s crucial to monitor performance metrics such as accuracy, precision, recall, and loss. This helps in understanding how well the model is learning and if adjustments are needed. Our analytics tools provide real-time insights, allowing clients to make informed decisions and adjustments swiftly, particularly during training and testing in machine learning.
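The steps above can be seen end to end in a small NumPy sketch: synthetic data are split 60/20/20 into training, validation and test sets, a linear model is fitted by batch gradient descent on mean squared error, and training and validation loss are monitored along the way. The true relationship `y = 3x + 2`, the learning rate and the epoch count are arbitrary choices for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
# Synthetic data from y = 3x + 2 plus noise (values chosen for illustration).
X = rng.uniform(0, 10, size=200)
y = 3.0 * X + 2.0 + rng.normal(scale=1.0, size=200)

# Split indices 60/20/20 into training, validation and test sets.
idx = rng.permutation(len(X))
train, val, test = idx[:120], idx[120:160], idx[160:]

def mse(w, b, xs, ys):
    """Mean squared error of the line w * x + b on the given points."""
    return float(np.mean((w * xs + b - ys) ** 2))

# Batch gradient descent on MSE, using the training set only.
w, b, lr = 0.0, 0.0, 0.01
for epoch in range(2000):
    err = w * X[train] + b - y[train]
    w -= lr * 2 * np.mean(err * X[train])  # d(MSE)/dw
    b -= lr * 2 * np.mean(err)             # d(MSE)/db
    if epoch % 500 == 0:
        print(f"epoch {epoch}: train MSE {mse(w, b, X[train], y[train]):.3f}, "
              f"val MSE {mse(w, b, X[val], y[val]):.3f}")

print(f"learned w={w:.2f}, b={b:.2f}; test MSE {mse(w, b, X[test], y[test]):.3f}")
```

The test set is touched only once, at the end; the validation loss is what one would watch while tuning the learning rate or deciding when to stop.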

4.4. Validation Techniques

Validation techniques are essential for assessing the performance of a machine learning model. They help ensure that the model generalizes well to new, unseen data. Key validation techniques include:

- Cross-Validation: This technique involves dividing the dataset into multiple subsets or folds. The model is trained on some folds and validated on others. K-fold cross-validation is a popular method where the data is split into K subsets, and the model is trained K times, each time using a different fold for validation.

- Holdout Method: In this simpler approach, the dataset is split into two parts: a training set and a test set. The model is trained on the training set and evaluated on the test set. This method is straightforward but can lead to variability in results based on how the data is split.

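- Leave-One-Out Cross-Validation (LOOCV): This is a special case of K-fold cross-validation in which K equals the number of data points: each model is trained on all points except one, and the held-out point is used for validation. This method is computationally expensive, since it requires one training run per data point, but provides a thorough evaluation of the model.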

- Stratified Sampling: When dealing with imbalanced datasets, stratified sampling ensures that each class is represented proportionally in both training and validation sets. This helps in achieving a more reliable evaluation of the model's performance.
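All four techniques are available in scikit-learn. The sketch below applies them to one logistic regression model on an imbalanced synthetic dataset (about 10% positives, generated with `make_classification`), and checks that stratified folds keep the positive-class fraction close to the overall rate. The model and data sizes are arbitrary illustrative choices.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import (KFold, LeaveOneOut, StratifiedKFold,
                                     cross_val_score, train_test_split)

# Imbalanced synthetic data (about 10% positives), for illustration only.
X, y = make_classification(n_samples=200, n_features=5, weights=[0.9, 0.1],
                           random_state=0)
model = LogisticRegression(max_iter=1000)

# Holdout: a single stratified 80/20 split.
X_tr, X_te, y_tr, y_te = train_test_split(X, y, test_size=0.2, stratify=y,
                                          random_state=0)
holdout = model.fit(X_tr, y_tr).score(X_te, y_te)

# K-fold vs stratified K-fold with K = 5.
kfold = cross_val_score(model, X, y, cv=KFold(5, shuffle=True, random_state=0))
strat_cv = StratifiedKFold(5, shuffle=True, random_state=0)
strat = cross_val_score(model, X, y, cv=strat_cv)

# LOOCV: one fit per sample (200 fits here), each scored on a single point.
loo = cross_val_score(model, X, y, cv=LeaveOneOut())

print(f"holdout {holdout:.3f}")
print(f"5-fold {kfold.mean():.3f}, stratified 5-fold {strat.mean():.3f}")
print(f"LOOCV {loo.mean():.3f} over {len(loo)} fits")

# Positive-class fraction in each stratified validation fold.
fold_fracs = [y[val_idx].mean() for _, val_idx in strat_cv.split(X, y)]
print([round(f, 3) for f in fold_fracs], "overall", round(y.mean(), 3))
```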

4.5. Model Optimization

Model optimization is the process of improving a machine learning model's performance. It involves fine-tuning various aspects of the model to achieve better accuracy and efficiency. Key strategies for model optimization include:

- Hyperparameter Tuning: Hyperparameters are settings that govern the training process, such as learning rate, batch size, and the number of layers in a neural network. Techniques like grid search, random search, and Bayesian optimization can be used to find the best hyperparameter values.

- Feature Selection: Selecting the most relevant features can significantly enhance model performance. Techniques such as recursive feature elimination, LASSO regression, and tree-based feature importance can help identify and retain the most impactful features.

- Regularization: To prevent overfitting, regularization techniques like L1 (LASSO) and L2 (Ridge) can be applied. These methods add a penalty for larger coefficients, encouraging simpler models that generalize better to new data.

- Ensemble Methods: Combining multiple models can lead to improved performance. Techniques like bagging, boosting, and stacking leverage the strengths of different models to create a more robust final prediction.

- Early Stopping: During training, monitoring the model's performance on a validation set can help prevent overfitting. If the performance starts to degrade, training can be halted early to retain the best model.

- Data Augmentation: In scenarios with limited data, data augmentation techniques can artificially expand the training dataset. This is particularly useful in image processing, where transformations like rotation, scaling, and flipping can create new training examples.
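Several of these strategies can be combined in a short scikit-learn sketch: a grid search tunes the regularization type (L1 or L2) and strength `C` of a logistic regression, the L1 option shows how regularization can double as feature selection, and a gradient-boosting model uses built-in early stopping. The grid values, dataset and stopping settings are illustrative assumptions.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GridSearchCV

X, y = make_classification(n_samples=400, n_features=20, n_informative=5,
                           random_state=0)

# Hyperparameter tuning + regularization: search L1/L2 penalties and strength C
# (smaller C = stronger penalty). The liblinear solver supports both penalties.
grid = GridSearchCV(
    LogisticRegression(solver="liblinear"),
    param_grid={"penalty": ["l1", "l2"], "C": [0.01, 0.1, 1.0, 10.0]},
    cv=5,
)
grid.fit(X, y)
print("best params:", grid.best_params_, "CV accuracy:", round(grid.best_score_, 3))

# An L1 penalty can set coefficients exactly to zero (embedded feature selection).
n_zero = int((grid.best_estimator_.coef_ == 0).sum())
print("zeroed coefficients:", n_zero)

# Early stopping: hold out 10% internally and stop adding trees once the
# validation score has not improved for 10 consecutive iterations.
gb = GradientBoostingClassifier(n_estimators=1000, validation_fraction=0.1,
                                n_iter_no_change=10, random_state=0)
gb.fit(X, y)
print("boosting rounds actually used:", gb.n_estimators_)
```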

By implementing these strategies, practitioners can make their machine learning models both accurate and efficient. Rapid Innovation is committed to helping clients navigate these processes, ultimately leading to greater ROI and successful project outcomes, including work with advanced techniques such as transfer learning in deep learning and federated learning.

5. Failure Detection Mechanisms

Failure detection mechanisms are essential in various industries, particularly in manufacturing, IT, and engineering. These failure detection mechanisms help identify potential failures before they escalate into significant issues, ensuring operational efficiency and safety. Two critical components of failure detection mechanisms are early warning indicators and pattern recognition.

5.1. Early Warning Indicators

Early warning indicators (EWIs) are metrics or signals that provide advance notice of potential failures. They are crucial for proactive maintenance and risk management. By monitoring these early warning indicators, organizations can take corrective actions before a failure occurs.

- Types of Early Warning Indicators:

- Performance Metrics: These include key performance indicators (KPIs) that track the efficiency and effectiveness of systems. For example, a sudden drop in production output may signal equipment malfunction.

- Environmental Factors: Changes in temperature, humidity, or vibration levels can indicate potential failures in machinery. Monitoring these factors can help in predicting equipment breakdowns.

- User Feedback: Regular feedback from users can serve as an early warning. If multiple users report issues with a system, it may indicate an underlying problem that needs attention.

- Benefits of Early Warning Indicators:

- Proactive Maintenance: By identifying issues early, organizations can schedule maintenance before a failure occurs, reducing downtime.

- Cost Savings: Early detection can lead to significant cost savings by preventing extensive repairs or replacements.

- Improved Safety: Identifying potential failures early can enhance workplace safety by preventing accidents related to equipment failure.
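A minimal rule-based sketch of the three indicator types above, assuming one reading per machine with temperature, vibration and production output. The thresholds and the 20% output-drop rule are hypothetical placeholders; real limits would come from equipment specifications and historical data.

```python
from dataclasses import dataclass

@dataclass
class Reading:
    temp_c: float
    vibration_mm_s: float
    output_units_per_h: float

# Hypothetical thresholds, for illustration only.
TEMP_LIMIT_C = 80.0
VIBRATION_LIMIT_MM_S = 7.0
OUTPUT_DROP_FRACTION = 0.2  # flag a >20% drop versus the baseline rate

def early_warnings(reading, baseline_output):
    """Return the names of the early-warning indicators this reading triggers."""
    warnings = []
    if reading.temp_c > TEMP_LIMIT_C:
        warnings.append("high_temperature")
    if reading.vibration_mm_s > VIBRATION_LIMIT_MM_S:
        warnings.append("high_vibration")
    if reading.output_units_per_h < (1 - OUTPUT_DROP_FRACTION) * baseline_output:
        warnings.append("output_drop")
    return warnings

print(early_warnings(Reading(72.0, 3.0, 98.0), baseline_output=100.0))  # []
print(early_warnings(Reading(85.0, 8.0, 75.0), baseline_output=100.0))
# ['high_temperature', 'high_vibration', 'output_drop']
```

Returning named indicators instead of a single true/false flag lets maintenance teams see which signal fired and prioritize accordingly.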

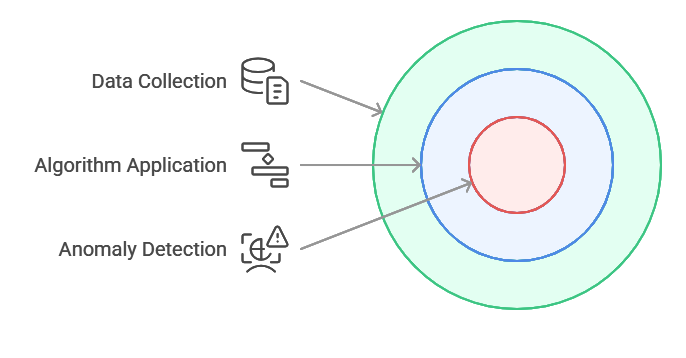

5.2. Pattern Recognition

Pattern recognition involves analyzing data to identify trends or anomalies that may indicate potential failures. This technique is increasingly used in various fields, including machine learning, data analytics, and predictive maintenance.

- How Pattern Recognition Works:

- Data Collection: Organizations collect data from various sources, such as sensors, logs, and user interactions. This data serves as the foundation for pattern recognition.

- Algorithm Application: Advanced algorithms analyze the collected data to identify patterns or trends. Machine learning models can be trained to recognize normal operating conditions and flag deviations.

- Anomaly Detection: When the system detects a pattern that deviates from the norm, it raises an alert. This can indicate a potential failure that requires further investigation.

- Applications of Pattern Recognition:

- Predictive Maintenance: By recognizing patterns in equipment performance, organizations can predict when maintenance is needed, reducing unexpected breakdowns.

- Quality Control: In manufacturing, pattern recognition can help identify defects in products by analyzing production data, ensuring higher quality standards.

- Fraud Detection: In finance, pattern recognition is used to detect fraudulent activities by identifying unusual transaction patterns.

- Benefits of Pattern Recognition:

- Enhanced Decision-Making: By providing insights into potential failures, pattern recognition aids in informed decision-making.

- Increased Efficiency: Organizations can optimize operations by addressing issues before they escalate, leading to improved productivity.

- Data-Driven Insights: Pattern recognition leverages data analytics to provide actionable insights, enhancing overall operational effectiveness.
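The "learn normal conditions, then flag deviations" loop can be illustrated with a deliberately simple statistical baseline instead of a trained machine learning model: estimate the mean and standard deviation of a sensor during known-normal operation, then flag new readings more than `k` standard deviations away. The single vibration feature, the roughly Gaussian behavior and `k = 4` are all assumptions of this sketch.

```python
import numpy as np

rng = np.random.default_rng(1)
# Hypothetical vibration readings recorded during known-normal operation.
normal = rng.normal(loc=2.0, scale=0.1, size=500)

# "Training": learn what normal looks like (mean and spread).
mu, sigma = normal.mean(), normal.std()

def flag_deviations(readings, mu, sigma, k=4.0):
    """Indices of readings more than k standard deviations from the baseline."""
    z = np.abs((np.asarray(readings) - mu) / sigma)
    return np.flatnonzero(z > k).tolist()

new_readings = [2.05, 1.98, 2.10, 2.9, 2.01]
print(flag_deviations(new_readings, mu, sigma))  # [3]
```

Raising `k` reduces false alarms at the cost of detecting deviations later; ML-based pattern recognition replaces the single mean and spread with a learned model of normal behavior across many signals.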

In conclusion, failure detection mechanisms, particularly early warning indicators and pattern recognition, play a vital role in maintaining operational efficiency and safety across various industries. By implementing these failure detection mechanisms, organizations can proactively address potential failures, leading to significant cost savings and improved performance. At Rapid Innovation, we leverage our expertise in AI to develop tailored solutions that enhance these mechanisms, ensuring our clients achieve greater ROI through improved operational resilience and efficiency. For more information on how we can assist you, check out our computer vision software development services.

5.3. Anomaly Detection

Anomaly detection is a critical process in various fields, including cybersecurity, finance, and healthcare. It involves identifying patterns in data that do not conform to expected behavior. This technique is essential for early detection of potential issues, fraud, or system failures, enabling organizations to respond proactively.

- Definition: Anomaly detection refers to the identification of rare items, events, or observations that raise suspicions by differing significantly from the majority of the data.

- Techniques: Common methods include statistical tests (such as z-scores), outlier detection algorithms, machine learning models, and clustering techniques, all of which can be tailored to specific industry needs.

- Applications:

- Fraud detection in banking transactions, where Rapid Innovation's AI solutions can analyze transaction patterns to flag suspicious activities in real-time.

- Intrusion detection in network security, using machine learning to identify unusual access patterns or network traffic that may indicate a breach.

- Monitoring patient health data for unusual patterns, allowing healthcare providers to intervene before critical issues arise. The same techniques can also flag data quality problems, such as faulty or missing readings.

- Importance: Early detection of anomalies can prevent significant losses and enhance decision-making processes, ultimately leading to greater ROI for organizations. Open-source tooling, such as the outlier detection estimators in Python's scikit-learn library, can streamline this process.

- Challenges:

- High false positive rates can lead to unnecessary investigations, which can be mitigated through advanced model training and refinement.

- The need for large datasets to train models effectively, a challenge that Rapid Innovation addresses by leveraging synthetic data generation techniques.

- Variability in data can complicate the detection process, necessitating continuous model updates and monitoring, particularly for time-series data, where normal behavior can drift over time.
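For time-series data, a fixed baseline can go out of date. A common alternative compares each new point against the mean and standard deviation of a trailing window. The sketch below assumes evenly spaced numeric samples. The window size and threshold are illustrative, and raising the threshold is one simple lever against the high false positive rates noted above. `rolling_anomalies` is a hypothetical helper, not a library function.

```python
import statistics
from collections import deque
from typing import Iterable, Iterator


def rolling_anomalies(
    series: Iterable[float], window: int = 5, threshold: float = 3.0
) -> Iterator[tuple[int, float]]:
    """Yield (index, value) for points that deviate sharply from the trailing window."""
    history: deque[float] = deque(maxlen=window)
    for i, value in enumerate(series):
        if len(history) == window:
            mean = statistics.mean(history)
            stdev = statistics.stdev(history)
            # A perfectly flat window has zero spread, so the z-score is undefined;
            # this sketch simply skips such points.
            if stdev > 0 and abs(value - mean) / stdev > threshold:
                yield i, value
        # Every point, anomalous or not, enters the window; old points fall out.
        history.append(value)


vibration = [1.0, 1.1, 0.9, 1.0, 1.05, 0.95, 3.0, 1.0]  # illustrative RMS vibration values
print(list(rolling_anomalies(vibration)))  # prints [(6, 3.0)]
```

Because the window slides forward, the baseline adapts to slow drift while still reacting to sudden spikes.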

5.4. Failure Classification

Failure classification is the process of categorizing failures based on their characteristics and causes. This is crucial for understanding the nature of failures and implementing effective solutions.

- Definition: Failure classification involves analyzing failures to determine their type, severity, and potential impact on operations.

- Types of Failures:

- Hard failures: Complete system breakdowns requiring immediate attention.

- Soft failures: Partial failures that may not halt operations but can degrade performance.

- Methods:

- Root cause analysis (RCA) to identify underlying issues.

- Failure mode and effects analysis (FMEA) to assess potential failure modes.

- Benefits:

- Improved maintenance strategies by understanding failure patterns, which can be enhanced through AI-driven predictive analytics.

- Enhanced safety by identifying critical failure points, allowing organizations to prioritize interventions.

- Cost reduction through targeted interventions, ultimately leading to improved operational efficiency.

- Challenges:

- Data collection can be time-consuming and complex, a challenge that Rapid Innovation can help streamline through automated data gathering solutions.

- Classifying failures accurately requires expertise and experience, which our team of specialists provides.

- Continuous monitoring is necessary to keep classification systems updated, ensuring that organizations remain resilient against new failure modes.
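FMEA commonly ranks failure modes by a Risk Priority Number (RPN). The RPN is the product of three ratings, severity, occurrence, and detection, each usually scored from 1 to 10. A higher detection rating means the failure is harder to detect. The sketch below assumes that convention. The failure modes and ratings are made-up placeholders, and the hard/soft label is a simple illustrative rule based on whether the failure halts operation, not a standard.

```python
from dataclasses import dataclass


@dataclass
class FailureMode:
    name: str
    severity: int          # 1 (negligible) to 10 (catastrophic)
    occurrence: int        # 1 (rare) to 10 (almost certain)
    detection: int         # 1 (almost certainly detected) to 10 (undetectable)
    halts_operation: bool  # True for a hard failure, False for a soft failure

    @property
    def rpn(self) -> int:
        # Risk Priority Number used in FMEA to rank failure modes.
        return self.severity * self.occurrence * self.detection

    @property
    def failure_class(self) -> str:
        return "hard" if self.halts_operation else "soft"


def rank_failure_modes(modes: list[FailureMode]) -> list[FailureMode]:
    # Highest RPN first, so the riskiest failure modes are addressed first.
    return sorted(modes, key=lambda m: m.rpn, reverse=True)


modes = [
    FailureMode("Motor bearing seizure", severity=9, occurrence=3, detection=4, halts_operation=True),
    FailureMode("Coolant pump degradation", severity=5, occurrence=6, detection=5, halts_operation=False),
    FailureMode("Sensor calibration drift", severity=4, occurrence=7, detection=2, halts_operation=False),
]
for m in rank_failure_modes(modes):
    print(f"{m.name}: RPN={m.rpn} ({m.failure_class} failure)")
```

Note that a soft failure can outrank a hard one. In this example, the frequent, hard-to-detect pump degradation scores higher than the rarer bearing seizure.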

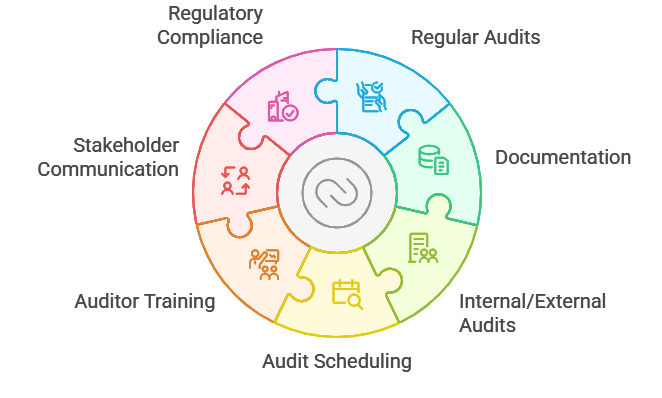

5.5. Risk Assessment

Risk assessment is a systematic process of evaluating potential risks that could negatively impact an organization. It is essential for effective risk management and decision-making.

- Definition: Risk assessment involves identifying, analyzing, and evaluating risks to determine their potential impact and likelihood.

- Steps in Risk Assessment:

- Risk identification: Recognizing potential risks that could affect the organization.

- Risk analysis: Evaluating the likelihood and impact of identified risks.

- Risk evaluation: Comparing estimated risks against risk criteria to determine significance.

- Importance:

- Helps organizations prioritize risks based on their potential impact, enabling more strategic resource allocation.

- Facilitates informed decision-making, ultimately enhancing operational resilience.

- Enhances compliance with regulations and standards, reducing the risk of penalties and reputational damage.

- Tools and Techniques:

- Qualitative assessments for subjective evaluation.

- Quantitative assessments for numerical analysis.

- Risk matrices to visualize risk levels, which can be integrated into AI-driven dashboards for real-time monitoring.

- Challenges:

- Dynamic environments can lead to rapidly changing risk profiles, necessitating agile risk management strategies.

- Subjectivity in risk evaluation can result in inconsistencies, which can be minimized through standardized AI models.

- Requires ongoing monitoring and updating to remain effective, a service that Rapid Innovation can provide through continuous support and system enhancements.
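The risk matrix mentioned above is often reduced to a likelihood-times-impact score. This sketch assumes a 5-by-5 matrix, with both factors rated from 1 to 5. The band boundaries are illustrative assumptions that each organization sets for itself: scores up to 4 are low, up to 12 are medium, and anything higher is high. The risks listed are placeholders.

```python
def risk_score(likelihood: int, impact: int) -> int:
    """Score a risk on a 5x5 matrix; both inputs are ratings from 1 to 5."""
    if not (1 <= likelihood <= 5 and 1 <= impact <= 5):
        raise ValueError("likelihood and impact must be between 1 and 5")
    return likelihood * impact


def risk_level(score: int) -> str:
    # Illustrative band boundaries; adjust to the organization's risk criteria.
    if score <= 4:
        return "low"
    if score <= 12:
        return "medium"
    return "high"


# Risk identification: each risk with its (likelihood, impact) ratings.
risks = {
    "Unplanned line stoppage": (4, 5),
    "Spare-part supplier delay": (3, 3),
    "Minor sensor outage": (2, 1),
}
# Risk analysis and evaluation: score each risk and list the most significant first.
evaluated = sorted(
    ((name, risk_score(lik, imp)) for name, (lik, imp) in risks.items()),
    key=lambda item: item[1],
    reverse=True,
)
for name, score in evaluated:
    print(f"{name}: score={score}, level={risk_level(score)}")
```

The same scores can feed a dashboard heat map, with the three bands mapped to colors.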

5.6. Prediction Confidence Scoring

Prediction confidence scoring is a critical aspect of machine learning and data analytics that helps users understand the reliability of predictions made by models. This scoring system quantifies the certainty of a model's output, allowing stakeholders to make informed decisions based on the level of confidence associated with each prediction. Confidence scores are typically expressed as probabilities, ranging from 0 to 1. A higher score indicates greater confidence in the prediction, while a lower score suggests uncertainty. This scoring can be particularly useful in applications such as finance, healthcare, and marketing, where decisions based on predictions can have significant consequences.

Incorporating prediction confidence scoring into machine learning models can enhance their usability by:

- Providing transparency: Users can see how confident the model is in its predictions, which can help in assessing risk.

- Enabling better decision-making: Stakeholders can prioritize actions based on the confidence level of predictions.

- Improving model performance: By analyzing confidence scores, data scientists can identify areas where the model may need improvement or retraining.

For example, in a healthcare setting, a model predicting patient outcomes might provide a confidence score of 0.85 for a specific diagnosis. If the model is well calibrated, this high score indicates a strong likelihood that the prediction is correct, allowing healthcare professionals to act with greater assurance. Conversely, a score of 0.45 would suggest caution, prompting further investigation before making treatment decisions.
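Many classifiers produce raw scores (logits) that are converted into probabilities with the softmax function. The largest resulting probability is then often reported as the confidence score. The sketch below assumes such a model and routes predictions using a hypothetical threshold of 0.7, mirroring the "act at 0.85, investigate at 0.45" example above. The labels and logits are placeholders.

```python
import math


def softmax(logits: list[float]) -> list[float]:
    # Subtract the maximum logit for numerical stability before exponentiating.
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict_with_confidence(logits: list[float], labels: list[str]) -> tuple[str, float]:
    # Return the most probable label together with its probability (the confidence score).
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return labels[best], probs[best]


def route(confidence: float, threshold: float = 0.7) -> str:
    # Hypothetical policy: act on confident predictions, review uncertain ones.
    return "act" if confidence >= threshold else "investigate further"


label, conf = predict_with_confidence([2.0, 0.5, -1.0], ["bearing fault", "misalignment", "normal"])
print(label, round(conf, 2), route(conf))  # prints bearing fault 0.79 act
```

Softmax probabilities are only trustworthy as confidence estimates if the model is reasonably well calibrated. Checking calibration on held-out data is therefore a sensible step before relying on any fixed threshold.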

6. Real-time Monitoring System

A real-time monitoring system is essential for organizations that rely on continuous data analysis and immediate feedback. This system allows businesses to track performance metrics, detect anomalies, and respond to changes in real time, ensuring that they remain agile and competitive. Real-time monitoring systems can be applied across various industries, including finance, manufacturing, and e-commerce. They use technologies such as IoT devices, cloud computing, and big data analytics to gather and process data as it arrives. The benefits of implementing a real-time monitoring system include enhanced operational efficiency, improved customer satisfaction, and proactive risk management.

Key components of a real-time monitoring system include:

- Data collection: Continuous data gathering from various sources, such as sensors, user interactions, and transaction logs.

- Data processing: Immediate analysis of incoming data to identify trends, patterns, and anomalies.

- Alerting mechanisms: Automated notifications to stakeholders when specific thresholds are met or exceeded, enabling quick responses to potential issues.

By leveraging a real-time monitoring system, organizations can make data-driven decisions that enhance their overall performance and responsiveness to market changes.
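The three components listed above (data collection, data processing, and alerting) can be sketched as a small streaming loop. This version makes three assumptions:

- Readings arrive one at a time from an iterable, which stands in for a sensor feed or message queue.
- Readings are smoothed with an exponentially weighted moving average (EWMA) to suppress noise.
- A `notify` callback fires once when the smoothed value crosses a threshold.

The `alpha` and `threshold` values and the `notify` callback are illustrative, not part of any particular product.

```python
from typing import Callable, Iterable


def monitor(
    readings: Iterable[float],
    threshold: float,
    notify: Callable[[int, float], None],
    alpha: float = 0.3,
) -> None:
    """Smooth a stream of readings with an EWMA and alert when it crosses a threshold."""
    smoothed = None
    alerting = False
    for i, value in enumerate(readings):  # data collection
        # Data processing: EWMA gives recent readings more weight than older ones.
        smoothed = value if smoothed is None else alpha * value + (1 - alpha) * smoothed
        # Alerting: notify once when the threshold is crossed, not on every sample,
        # and re-arm only after the smoothed value falls back below the threshold.
        if smoothed > threshold and not alerting:
            notify(i, smoothed)
            alerting = True
        elif smoothed <= threshold:
            alerting = False


alerts: list[tuple[int, float]] = []
monitor([50, 52, 51, 80, 85, 90, 60, 50], threshold=65, notify=lambda i, v: alerts.append((i, v)))
print(alerts)
```

Smoothing delays the alert by a sample or two. In exchange, a single noisy spike does not page anyone.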

6.1. Dashboard Design

Dashboard design is a crucial element of any real-time monitoring system, as it serves as the primary interface for users to visualize and interact with data. An effective dashboard provides a clear, concise overview of key performance indicators (KPIs) and other relevant metrics, enabling users to quickly assess the state of their operations. A well-designed dashboard should prioritize usability and accessibility, ensuring that users can easily navigate and interpret the information presented. Key features of an effective dashboard include:

- Intuitive layout: Organizing information logically to facilitate quick understanding.

- Visualizations: Utilizing charts, graphs, and other visual elements to represent data clearly.

- Customization: Allowing users to tailor the dashboard to their specific needs and preferences.

When designing a dashboard, consider the following best practices:

- Identify the target audience: Understand who will be using the dashboard and what information is most relevant to them.

- Focus on key metrics: Highlight the most important KPIs that align with organizational goals.

- Ensure real-time updates: Provide users with the latest data to support timely decision-making.

By implementing these design principles, organizations can create dashboards that not only enhance data visibility but also empower users to make informed decisions based on real-time insights.

At Rapid Innovation, we specialize in integrating these advanced AI capabilities into your business processes, ensuring that you achieve greater ROI through informed decision-making and enhanced operational efficiency. Our expertise in machine learning and real-time data analytics positions us as a trusted partner in your journey towards digital transformation. For tailored solutions, explore our custom AI model development services and learn more about our AI agent market trend predictor.

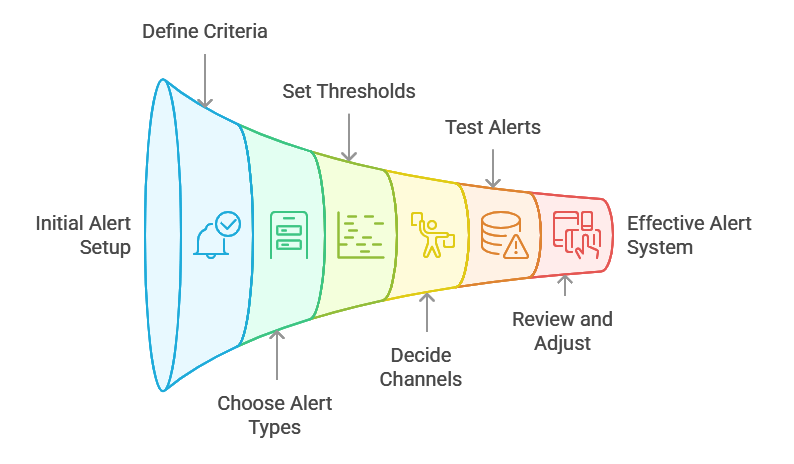

6.2. Alert Configuration

Alert configuration is a critical aspect of system monitoring and management. It involves setting up notifications that inform administrators when specific events occur or thresholds are reached within a system. Proper alert configuration ensures that issues are identified and addressed promptly, minimizing downtime and maintaining system performance.

- Define alert criteria: Establish clear parameters for what constitutes an alert. This could include error rates, response times, or resource usage levels.

- Choose alert types: Determine the types of alerts needed, such as critical, warning, or informational alerts. Each type should have a different level of urgency and response protocol.

- Set thresholds: Configure thresholds for alerts based on historical data and expected performance. This helps in avoiding false positives and ensures that alerts are meaningful.

- Notification channels: Decide on the channels through which alerts will be sent, such as email, SMS, or integrations with collaboration and incident-management tools like Slack or PagerDuty.

- Test alerts: Regularly test the alert system to ensure that notifications are sent correctly and received by the intended recipients.

- Review and adjust: Continuously review alert configurations to adapt to changing system requirements and performance metrics.
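The configuration steps above can be captured as data plus a small evaluation function. This sketch assumes each rule names a metric, a warning threshold, a critical threshold, and the channels to notify at each severity. Metric names, threshold values, and channel names are placeholders. Actually delivering the notification (email, SMS, Slack, PagerDuty) is deliberately left out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AlertRule:
    metric: str
    warning: float                  # threshold for a "warning" alert
    critical: float                 # threshold for a "critical" alert
    channels: dict[str, list[str]]  # severity -> notification channels


RULES = [
    AlertRule("error_rate_pct", warning=1.0, critical=5.0,
              channels={"warning": ["email"], "critical": ["sms", "pagerduty"]}),
    AlertRule("p95_latency_ms", warning=300, critical=1000,
              channels={"warning": ["slack"], "critical": ["slack", "pagerduty"]}),
]


def evaluate(metrics: dict[str, float], rules: list[AlertRule]) -> list[tuple[str, str, list[str]]]:
    """Return (metric, severity, channels) for every rule whose threshold is reached."""
    alerts = []
    for rule in rules:
        value = metrics.get(rule.metric)
        if value is None:
            continue  # metric not reported in this sample
        if value >= rule.critical:
            alerts.append((rule.metric, "critical", rule.channels["critical"]))
        elif value >= rule.warning:
            alerts.append((rule.metric, "warning", rule.channels["warning"]))
    return alerts


print(evaluate({"error_rate_pct": 2.3, "p95_latency_ms": 1200}, RULES))
```

Keeping rules as plain data makes the "test alerts" and "review and adjust" steps easier. Feeding synthetic metric values into `evaluate` verifies routing without waiting for a real incident.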

6.3. Performance Metrics

Performance metrics are essential for evaluating the efficiency and effectiveness of a system. They provide quantitative data that helps in understanding how well a system is functioning and where improvements can be made.

- Key performance indicators (KPIs): Identify the most relevant KPIs for your system, such as uptime, response time, and transaction throughput.

- Data collection: Implement tools and processes for collecting performance data. This can include application performance monitoring (APM) tools, log analysis, and user feedback.

- Benchmarking: Compare performance metrics against industry standards or historical data to identify areas for improvement.

- Visualization: Use dashboards and visual reports to present performance metrics in an easily digestible format. This aids in quick decision-making and trend analysis.

- Regular reviews: Schedule regular reviews of performance metrics to ensure that the system is meeting its goals and to identify any potential issues early on.
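A sketch of computing three of the KPIs named above (uptime, response time, and throughput) from raw monitoring data. It assumes you already have a list of health-check results and per-request latencies for a fixed observation window. The sample data is made up. The 95th-percentile latency uses Python's `statistics.quantiles`, which is one of several reasonable percentile definitions.

```python
import statistics


def uptime_pct(health_checks: list[bool]) -> float:
    # Share of health checks that succeeded, as a percentage.
    return 100.0 * sum(health_checks) / len(health_checks)


def p95(latencies_ms: list[float]) -> float:
    # quantiles(n=100) returns the 99 cut points; index 94 is the 95th percentile.
    return statistics.quantiles(latencies_ms, n=100)[94]


def throughput_per_s(request_count: int, window_s: float) -> float:
    # Average requests handled per second over the observation window.
    return request_count / window_s


checks = [True] * 287 + [False] * 1  # e.g. one check every 5 minutes for 24 hours
latencies = [float(ms) for ms in range(10, 210, 2)]  # 100 sample latencies, 10-208 ms
print(f"uptime={uptime_pct(checks):.2f}%")
print(f"p95={p95(latencies):.1f} ms")
print(f"throughput={throughput_per_s(18_000, 60):.0f} req/s")
```

Percentiles such as p95 are usually more informative than the mean for response time. A small share of very slow requests can hurt users while barely moving the average.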

6.4. Resource Utilization

Resource utilization refers to how effectively a system uses its available resources, such as CPU, memory, disk space, and network bandwidth. Monitoring resource utilization is vital for optimizing performance and ensuring that systems run efficiently.
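A minimal utilization check using only the Python standard library. It reads disk usage with `shutil.disk_usage`. On Unix-like systems it also reads the 1-minute load average with `os.getloadavg`, divided by the CPU count as a rough CPU saturation signal. The 80% disk limit and the load limit of 1.0 per CPU are assumptions. Memory and network metrics usually need a third-party library such as psutil or an OS-specific interface.

```python
import os
import shutil


def utilization_pct(used: float, total: float) -> float:
    # Fraction of a resource in use, expressed as a percentage.
    return 100.0 * used / total if total else 0.0


def resource_snapshot(path: str = "/") -> dict[str, float]:
    usage = shutil.disk_usage(path)  # named tuple: total, used, free (bytes)
    snapshot = {"disk_pct": utilization_pct(usage.used, usage.total)}
    # os.getloadavg is only available on Unix-like systems.
    if hasattr(os, "getloadavg"):
        load_1min, _, _ = os.getloadavg()
        snapshot["load_per_cpu"] = load_1min / (os.cpu_count() or 1)
    return snapshot


def over_limit(snapshot: dict[str, float], disk_limit_pct: float = 80.0) -> list[str]:
    # Hypothetical policy: flag disk usage above the limit and load above 1.0 per CPU.
    flags = []
    if snapshot["disk_pct"] > disk_limit_pct:
        flags.append("disk")
    if snapshot.get("load_per_cpu", 0.0) > 1.0:
        flags.append("cpu")
    return flags


snap = resource_snapshot()
print(snap, over_limit(snap))
```

Sampling a snapshot like this on a schedule, and storing the results, provides the historical data needed to set the alert thresholds discussed in the previous sections.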