1. Introduction

This introduction sets the stage for understanding the transformative potential of artificial intelligence (AI) in business. It provides the context and background needed for the sections that follow, explains why leveraging AI technologies matters for achieving business goals in today's competitive landscape, and outlines the detailed exploration ahead. For more insights, you can explore the potential of business AI.

1.1. Purpose and Objectives

The purpose and objectives section clarifies the intent behind the discussion. It outlines what the reader can expect to learn and the goals that the content aims to achieve. This section is crucial for setting clear expectations and guiding the reader through the material.

- Define the primary purpose of the content, which is to illustrate how Rapid Innovation can enhance business efficiency and effectiveness through AI solutions, especially for companies adopting AI technologies.

- Outline specific objectives that will be addressed, such as demonstrating case studies where clients achieved greater ROI through our services.

- Highlight the importance of the topic in practical applications, emphasizing how AI can streamline operations, improve decision-making, and drive innovation.

- Encourage reader engagement by emphasizing the benefits of understanding the material, particularly how it can lead to actionable insights for their own business strategies.

Clearly stating the purpose and objectives helps readers appreciate the value of the information presented and see how it applies to their own experience or knowledge base.

1.2. Key Features and Benefits

Understanding the key features and benefits of a system is crucial for evaluating its effectiveness and suitability for specific needs. Here are some of the standout features and their corresponding benefits:

- User-Friendly Interface:

- Simplifies navigation and enhances user experience, allowing users to focus on their tasks rather than struggling with the system.

- Reduces the learning curve for new users, enabling quicker onboarding and productivity.

- Scalability:

- Adapts to growing data and user demands without compromising performance, ensuring that businesses can scale operations seamlessly.

- Supports business expansion and increased workload, allowing organizations to grow without the need for significant system overhauls.

- Integration Capabilities:

- Seamlessly connects with existing tools and software, such as payroll and HR platforms, ensuring that businesses can leverage their current technology investments.

- Facilitates data sharing and improves workflow efficiency, leading to enhanced collaboration across teams.

- Robust Security Measures:

- Protects sensitive data through encryption and access controls, safeguarding against data breaches and unauthorized access.

- Ensures compliance with industry regulations, helping organizations avoid legal penalties and maintain customer trust.

- Real-Time Analytics:

- Provides immediate insights into performance metrics, enabling businesses to react swiftly to changing conditions.

- Aids in informed decision-making and strategic planning, allowing organizations to optimize operations and drive growth.

- Customizability:

- Allows users to tailor features to meet specific requirements, ensuring that the system aligns with unique business processes.

- Enhances user satisfaction and operational efficiency, as teams can work in a way that best suits their needs.

- 24/7 Support:

- Offers assistance at any time, ensuring minimal downtime and quick resolution of issues.

- Builds trust and reliability among users, fostering a positive relationship with the system.

These features collectively contribute to improved productivity, cost savings, and enhanced operational efficiency, making the system a valuable asset for organizations looking to achieve greater ROI.

1.3. System Overview

A comprehensive system overview provides insight into the functionality and purpose of the system. This section outlines the core components and their roles:

- Core Functionality:

- The system is designed to streamline processes and automate tasks, serving as a central hub for data management and analysis, which is essential for informed decision-making.

- User Roles and Permissions:

- Different user roles are defined to ensure appropriate access levels, enhancing security and accountability within the system, which is critical for maintaining data integrity.

- Data Management:

- Centralized storage allows for easy access and retrieval of information, supporting data integrity and consistency across the platform, which is vital for operational efficiency.

- Reporting Tools:

- Built-in tools for generating reports and visualizations facilitate performance tracking and strategic insights, enabling organizations to measure success and identify areas for improvement.

- Mobile Accessibility:

- Enables users to access the system from various devices, increasing flexibility and productivity for remote work, which is increasingly important in today’s work environment.

- Feedback Mechanism:

- Allows users to provide input for continuous improvement, ensuring the system evolves to meet user needs and remains relevant in a fast-paced business landscape.

This overview highlights how the system functions as an integrated solution, enhancing collaboration and efficiency across various departments.

2. Architecture Overview

The architecture of a system is fundamental to its performance and scalability. Here’s a breakdown of the architecture components:

- Client-Server Model:

- The system operates on a client-server architecture, where clients request services from servers. Centralizing resources on the server side simplifies resource management and makes it possible to balance load across multiple servers, which is essential for optimal performance.

- Microservices Architecture:

- Breaks down the application into smaller, independent services, which makes updates and maintenance easier and promotes agility and responsiveness to market changes.

- Database Layer:

- A centralized database management system (DBMS) stores the data, enforces data integrity, and supports complex queries, which is crucial for data-driven decision-making.

- API Layer:

- Provides a set of protocols for communication between different software components, enhancing integration capabilities with third-party applications, which is vital for a cohesive tech ecosystem.

- Security Layer:

- Implements various security protocols to protect data and user access, including firewalls, encryption, and authentication mechanisms, ensuring robust protection against cyber threats.

- User Interface Layer:

- The front-end design that users interact with focuses on usability and accessibility to enhance user experience, making it easier for teams to engage with the system.

- Cloud Infrastructure:

- Utilizes cloud services for hosting and scalability, offering flexibility in resource allocation and cost management, which is essential for modern businesses looking to optimize their IT expenditures.

This architecture overview illustrates how the system is structured to ensure reliability, security, and performance, making it suitable for diverse applications and helping organizations achieve their business goals efficiently and effectively.

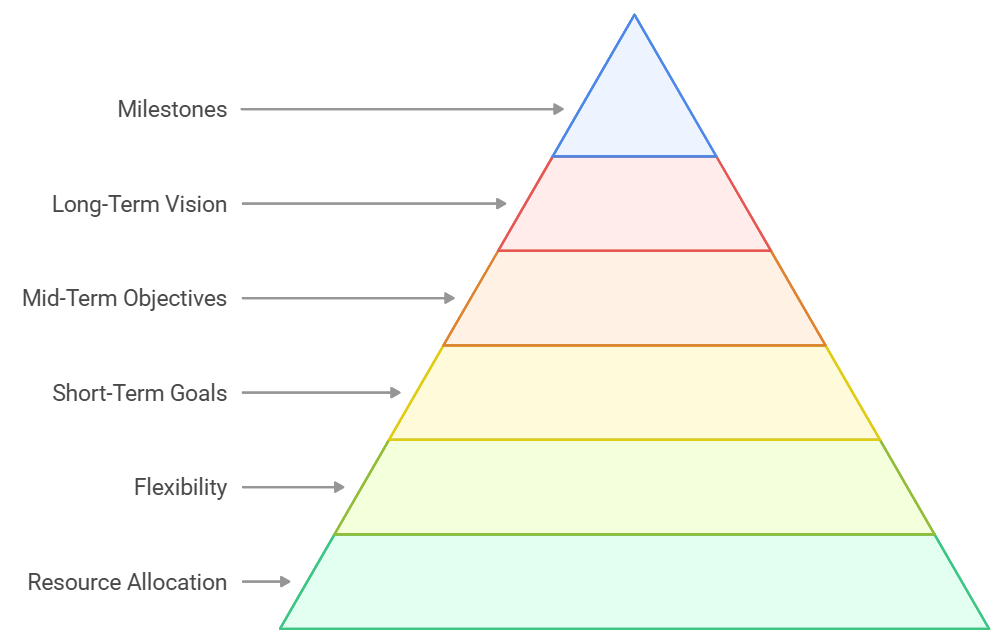

Refer to the image above for a visual representation of the system's architecture and its key features and benefits.

2.1. System Components

Understanding the system components is crucial for grasping how a system operates effectively. Each component plays a specific role in ensuring the overall functionality and efficiency of the system.

- Hardware: This includes physical devices such as servers, computers, and networking equipment. Hardware is essential for processing data and running applications, enabling businesses to leverage AI solutions that require substantial computational power.

- Software: Software components consist of applications and operating systems that manage hardware resources and provide user interfaces. This can include everything from system software to application software tailored for specific tasks, such as AI algorithms that drive decision-making processes. This category also encompasses components of an operating system, which are vital for the overall functionality of the system.

- Database: A database is a structured collection of data that allows for easy access, management, and updating. It serves as the backbone for data storage and retrieval in any system, particularly important for AI applications that rely on large datasets for training and inference.

- Network: The network component facilitates communication between different system components. It includes both wired and wireless connections that enable data transfer and connectivity, ensuring that AI models can access real-time data for analysis and predictions.

- User Interface: This is the point of interaction between users and the system. A well-designed user interface enhances user experience and ensures that users can efficiently navigate the system, which is vital for the adoption of AI solutions in business processes.

- Security: Security components protect the system from unauthorized access and data breaches. This includes firewalls, encryption, and authentication mechanisms, which are essential for safeguarding sensitive data used in AI applications.

- Components of the Linux Operating System: Understanding the components of the Linux operating system is also crucial, as Linux is widely used in server environments and for running AI applications.

- Components of the Windows Operating System: Similarly, the components of the Windows operating system are important for businesses that rely on Windows-based systems for their operations.

- Kernel Components: The kernel components are fundamental to the operating system, managing system resources and facilitating communication between hardware and software.

- Components of System Software: These components are essential for the operation of system software, which provides a platform for running application software.

- VMware Components: For businesses utilizing virtualization, understanding VMware components is important for managing virtual environments effectively.

- Components of a System: Overall, the components of a system encompass all the elements that work together to ensure functionality and efficiency. For more insights on enhancing AI and machine learning models, you can explore data annotation services.

2.2. Data Flow

Data flow refers to the movement of data within a system, illustrating how information is processed and transferred between components. Understanding data flow is essential for optimizing system performance and ensuring data integrity.

- Input: Data enters the system through various input methods, such as user input, sensors, or external data sources. This initial stage is critical for gathering the necessary information for processing, especially in AI systems that require diverse data inputs for accurate predictions.

- Processing: Once data enters the system, it undergoes processing, which can involve calculations, data manipulation, or transformation to convert raw data into meaningful information. This step is where AI algorithms analyze data to generate insights that drive business decisions.

- Storage: Processed data is then stored in databases or data warehouses, allowing for easy retrieval and management of information for future use. Efficient data storage is crucial for AI applications that need to access historical data for training and validation.

- Output: After processing and storage, the data is presented in a usable format. This can include reports, visualizations, or direct user feedback, depending on the system's purpose. AI-driven outputs can provide actionable insights that enhance business strategies.

- Feedback Loop: A feedback loop is essential for continuous improvement, allowing users to provide input on the output, which can lead to adjustments in processing or data collection methods. This iterative process is vital for refining AI models and improving their accuracy over time.
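The five stages above can be sketched as a minimal in-memory pipeline. This is an illustrative sketch under stated assumptions, not the system's actual implementation: the class `DataPipeline` and its methods are hypothetical, storage is a plain list standing in for a database or warehouse, and "processing" is a simple threshold flag standing in for an AI model.

```python
from dataclasses import dataclass, field


@dataclass
class DataPipeline:
    """Hypothetical pipeline: input -> processing -> storage -> output -> feedback."""

    threshold: float = 100.0                        # processing parameter tuned by feedback
    storage: list = field(default_factory=list)     # stand-in for a database or warehouse

    def ingest(self, raw: dict) -> dict:
        # Input: reject records that lack the fields processing needs.
        if "id" not in raw or "value" not in raw:
            raise ValueError(f"incomplete record: {raw}")
        return {"id": raw["id"], "value": float(raw["value"])}

    def process(self, record: dict) -> dict:
        # Processing: turn a raw value into information (here, a simple flag).
        return {**record, "flagged": record["value"] > self.threshold}

    def run(self, raw_records: list) -> str:
        for raw in raw_records:
            self.storage.append(self.process(self.ingest(raw)))   # Storage
        # Output: a short report in a usable format.
        flagged = [r["id"] for r in self.storage if r["flagged"]]
        return f"{len(flagged)} of {len(self.storage)} records flagged: {flagged}"

    def record_feedback(self, false_positive_ids: set) -> None:
        # Feedback loop: when users reject flags, raise the threshold to the highest
        # rejected value so similar records are no longer flagged.
        rejected = [r["value"] for r in self.storage if r["id"] in false_positive_ids]
        if rejected:
            self.threshold = max(self.threshold, max(rejected))
```

Records processed after `record_feedback` use the adjusted threshold, which is the iterative refinement the feedback-loop bullet describes; a real system would retrain or recalibrate a model rather than move a single number.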

2.3. Integration Points

Integration points are critical junctures where different systems or components interact and exchange data. Effective integration is vital for ensuring seamless operation and enhancing overall system functionality.

- APIs (Application Programming Interfaces): APIs serve as bridges between different software applications, allowing them to communicate and share data. They are essential for integrating third-party services and enhancing system capabilities, particularly in AI ecosystems that require collaboration between various tools and platforms.

- Middleware: Middleware acts as a facilitator between different applications or services, enabling them to work together. It can manage data exchange, communication, and transaction processing, ensuring that AI systems can operate cohesively within a larger IT infrastructure.

- Data Integration Tools: These tools help consolidate data from various sources into a unified view, which is essential for businesses that rely on data from multiple systems to make informed decisions. In the context of AI, having a comprehensive data view is crucial for training robust models.

- Cloud Services: Integration with cloud services allows for scalable storage and processing capabilities, which is particularly useful for businesses looking to leverage cloud computing for flexibility and cost-effectiveness. AI applications often benefit from cloud resources to handle large-scale data processing and model training.

- User Interfaces: Integration points also include user interfaces that allow users to interact with multiple systems seamlessly. A well-integrated user interface can enhance user experience and productivity, making it easier for teams to utilize AI insights effectively.

- Monitoring and Analytics: Integration points often include monitoring tools that track system performance and data flow. Analytics can provide insights into how well the integration is functioning and identify areas for improvement, which is essential for maintaining the effectiveness of AI solutions in achieving business goals.
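To make the data-integration point concrete, here is a minimal Python sketch that consolidates customer records from two hypothetical sources (a CRM export and a billing API payload) into one unified view keyed by email. The source field names and the "newest non-empty value wins" merge rule are assumptions for illustration; real integration tools add schema mapping, conflict policies, and incremental sync.

```python
def unify(crm_rows: list[dict], billing_rows: list[dict]) -> dict[str, dict]:
    """Consolidate records from two (hypothetical) sources into one view keyed by email."""
    # Map each source's own field names onto one shared schema.
    mapped = [
        {"email": r["Email"], "name": r.get("FullName"), "updated": r["LastModified"]}
        for r in crm_rows
    ] + [
        {"email": r["customer_email"], "plan": r.get("plan"), "updated": r["updated_at"]}
        for r in billing_rows
    ]

    unified: dict[str, dict] = {}
    # ISO-8601 date strings sort chronologically, so older records are applied first
    # and newer non-empty values overwrite them.
    for rec in sorted(mapped, key=lambda r: r["updated"]):
        key = rec["email"].strip().lower()          # normalize the join key
        view = unified.setdefault(key, {"email": key})
        for field_name, value in rec.items():
            if field_name not in ("email", "updated") and value:
                view[field_name] = value
        view["updated"] = rec["updated"]            # last applied record is the newest
    return unified
```

Normalizing the join key (trimming and lowercasing the email) matters as much as the merge itself: without it, the same customer would appear twice in the "unified" view.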

Refer to the image for a visual representation of the system components discussed in section 2.1.

2.4. Scalability Considerations

Scalability is a critical aspect of any system, especially in the context of modern applications that handle large volumes of data and user interactions. When designing a scalable system, several factors must be taken into account:

- Horizontal vs. Vertical Scaling: Horizontal scaling involves adding more machines or nodes to distribute the load, while vertical scaling means upgrading existing hardware. Horizontal scaling is often preferred for cloud-based applications due to its flexibility and cost-effectiveness.

- Load Balancing: Implementing load balancers helps distribute incoming traffic evenly across multiple servers, ensuring no single server becomes a bottleneck. This enhances performance and reliability, especially during peak usage times.

- Microservices Architecture: Adopting a microservices architecture allows different components of an application to scale independently. If one service experiences high demand, it can be scaled without affecting the rest of the system, which is why this approach is common in highly scalable system designs.

- Database Scalability: Choosing the right database technology is crucial. NoSQL databases, for example, can handle large volumes of unstructured data and often scale horizontally more easily than traditional SQL databases. Techniques like sharding and replication can also improve database performance and availability, which makes the choice of data layer a key decision in scalable system design.

- Caching Strategies: Implementing caching mechanisms can significantly reduce the load on databases and improve response times. Tools like Redis or Memcached can store frequently accessed data in memory, allowing for faster retrieval.

- Monitoring and Auto-Scaling: Continuous monitoring of system performance helps identify bottlenecks and areas for improvement. Auto-scaling features in cloud services can automatically adjust resources based on current demand, maintaining performance without manual intervention. This is particularly important for developers designing scalable systems.
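The caching strategy above is often implemented as the cache-aside pattern: check the cache first, fall back to the database on a miss, then populate the cache with a time-to-live (TTL). The Python sketch below uses an in-process dictionary as a stand-in for Redis or Memcached and a hypothetical `load_from_db` callable as the slow data source; with Redis, the same flow would use its GET command and SET with an expiry.

```python
import time
from typing import Any, Callable, Optional


class TTLCache:
    """In-memory stand-in for Redis/Memcached: entries expire after ttl_seconds."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock                       # injectable clock makes expiry testable
        self._data: dict[str, tuple[float, Any]] = {}

    def get(self, key: str) -> Optional[Any]:
        entry = self._data.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self.clock() >= expires_at:           # expired: evict and report a miss
            del self._data[key]
            return None
        return value

    def set(self, key: str, value: Any) -> None:
        self._data[key] = (self.clock() + self.ttl, value)


def get_with_cache_aside(cache: TTLCache, key: str, load_from_db: Callable[[str], Any]) -> Any:
    """Cache-aside read: try the cache; on a miss, load from the database and cache it."""
    value = cache.get(key)
    if value is None:
        value = load_from_db(key)                # slow path: hits the database
        cache.set(key, value)
    return value
```

The TTL bounds how stale a cached value can get; choosing it is a trade-off between database load and freshness. Note that in this sketch a stored `None` is indistinguishable from a miss.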

At Rapid Innovation, we leverage these scalability considerations to help our clients build robust systems that can grow with their business needs. For instance, by implementing a microservices architecture, we enable clients to scale specific components of their applications independently, leading to improved performance and reduced costs. This approach has resulted in significant ROI for our clients, as they can efficiently manage resources and respond to changing market demands, which is a fundamental aspect of scalability in system design.
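As a worked example of the auto-scaling point, many autoscalers use a proportional rule. The Kubernetes Horizontal Pod Autoscaler, for instance, computes desired replicas as ceil(currentReplicas × currentMetric / targetMetric). The sketch below applies that rule with minimum and maximum bounds and a 10% tolerance band to avoid constant resizing; the function name, defaults, and tolerance value are assumptions for illustration, not settings from a particular service.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 20,
                     tolerance: float = 0.1) -> int:
    """Proportional scaling rule: replica count scales with observed load / target load."""
    if current_replicas <= 0 or target_metric <= 0:
        raise ValueError("current_replicas and target_metric must be positive")
    ratio = current_metric / target_metric
    # Inside the tolerance band, keep the current size to avoid flapping.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds.
    return max(min_replicas, min(max_replicas, desired))
```

For example, 4 replicas averaging 90% CPU against a 60% target gives a ratio of 1.5, so the rule asks for ceil(4 × 1.5) = 6 replicas; at 63% the ratio is within the tolerance band and the count stays at 4.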

3. Core Components

Understanding the core components of a system is essential for effective design and implementation. These components work together to ensure the system operates smoothly and efficiently. Key core components include:

- User Interface (UI): The UI is the front-end part of the application that users interact with. It should be intuitive and responsive to enhance user experience.

- Application Logic: This component contains the business logic that processes user requests and interacts with the database. It is crucial for ensuring that the application behaves as expected.

- Database: The database stores all the data required by the application. Choosing the right database type (SQL vs. NoSQL) is vital for performance and scalability.

- APIs: Application Programming Interfaces (APIs) allow different components of the system to communicate with each other. Well-designed APIs facilitate integration with third-party services and enhance functionality.

- Security Layer: A robust security layer protects the application from unauthorized access and data breaches. This includes authentication, authorization, and encryption measures.

- Infrastructure: The underlying infrastructure, whether on-premises or cloud-based, supports the deployment and operation of the application. It includes servers, networking, and storage solutions.

3.1. Content Ingestion Engine

The content ingestion engine is a vital component of any system that deals with large volumes of data. It is responsible for collecting, processing, and storing content from various sources. Key aspects of a content ingestion engine include:

- Data Sources: The engine should be capable of ingesting data from multiple sources, such as APIs, databases, and file systems. This flexibility allows for a more comprehensive data collection strategy.

- Data Processing: Once data is ingested, it often requires processing to ensure it is in a usable format. This may include data cleaning, transformation, and enrichment.

- Real-time vs. Batch Processing: Depending on the application’s needs, the ingestion engine can support real-time processing for immediate data availability or batch processing for efficiency.

- Scalability: The ingestion engine must be designed to scale as data volumes grow. This can involve distributed streaming and processing tools such as Apache Kafka or Apache Flink, which are widely used in scalable system designs.

- Error Handling: Robust error handling mechanisms are essential to ensure that data ingestion processes can recover from failures without losing data.

- Monitoring and Logging: Implementing monitoring and logging capabilities allows for tracking the performance of the ingestion engine and identifying potential issues early.

- Integration with Storage Solutions: The engine should seamlessly integrate with various storage solutions, whether cloud-based or on-premises, to ensure that ingested data is stored efficiently and securely.
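The batching and error-handling points above can be sketched as a small ingestion loop that retries transient failures with exponential backoff and routes batches that still fail to a dead-letter list instead of dropping them. This is a simplified illustration: `write_batch` is a hypothetical sink (in practice a Kafka producer, database, or object store), `TransientError` is a hypothetical exception type, and the retry count and delays are arbitrary.

```python
import time
from typing import Callable, Iterable


class TransientError(Exception):
    """Hypothetical error type for failures worth retrying (timeouts, throttling)."""


def ingest(records: Iterable[dict], write_batch: Callable[[list], None],
           batch_size: int = 100, max_retries: int = 3,
           base_delay: float = 0.5, sleep: Callable[[float], None] = time.sleep) -> list:
    """Write records in batches; return batches that still failed after all retries."""
    dead_letters: list = []
    batch: list = []

    def flush(current: list) -> None:
        for attempt in range(max_retries + 1):
            try:
                write_batch(current)
                return
            except TransientError:
                if attempt == max_retries:
                    dead_letters.append(list(current))   # keep the data for later replay
                    return
                sleep(base_delay * 2 ** attempt)         # backoff: 0.5s, 1s, 2s, ...

    for record in records:
        batch.append(record)
        if len(batch) >= batch_size:
            flush(batch)
            batch = []
    if batch:                                            # flush the final partial batch
        flush(batch)
    return dead_letters
```

Keeping failed batches in a dead-letter store, rather than discarding them, is what lets ingestion "recover from failures without losing data": the batches can be inspected and replayed once the downstream system is healthy.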

By focusing on these aspects, organizations can build a powerful content ingestion engine that supports their data-driven initiatives effectively. At Rapid Innovation, we assist clients in developing such engines, ensuring they can harness their data for actionable insights and improved decision-making, ultimately driving greater ROI. This aligns with our commitment to scalability and system design for developers, ensuring they have the tools and knowledge to create scalable solutions.

Refer to the image for a visual representation of the scalability considerations discussed above.

3.2. Feature Extraction Module

The Feature Extraction Module is a critical component in various applications, particularly in machine learning, computer vision, and natural language processing. This module is responsible for identifying and extracting relevant features from raw data, which can then be used for further analysis or processing.

- Purpose:

- To transform raw data into a structured format that can be easily analyzed.

- To enhance the performance of machine learning models by providing them with the most relevant information.

- Techniques Used:

- Statistical methods: These include mean, variance, and standard deviation calculations to summarize data characteristics.

- Signal processing: Techniques like Fourier transforms or wavelet transforms are used to extract features from time-series data.

- Image processing: Methods such as edge detection, histogram analysis, and texture analysis are employed to extract features from images in computer vision tasks.

- Text analysis: Natural language processing (NLP) techniques like tokenization, stemming, and vectorization are used to extract features from text data.

- Applications:

- In image recognition, features such as shapes, colors, and textures are extracted to identify objects.

- In speech recognition, features like pitch, tone, and frequency are analyzed to understand spoken language; mel-frequency cepstral coefficients (MFCCs) are a widely used example.

- In sentiment analysis, features from text data help determine the emotional tone behind words.
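Two of the techniques listed above, statistical summaries and text vectorization, can be sketched with the Python standard library alone. The function names are hypothetical, the tokenizer is a deliberately naive "lowercase and keep runs of letters" rule without stemming, and production systems would typically use libraries such as NumPy or scikit-learn instead.

```python
import re
import statistics
from collections import Counter


def statistical_features(values: list[float]) -> dict[str, float]:
    """Summarize a numeric series with mean, variance, and standard deviation."""
    return {
        "mean": statistics.mean(values),
        "variance": statistics.pvariance(values),   # population variance
        "std_dev": statistics.pstdev(values),       # population standard deviation
    }


def bag_of_words(documents: list[str]) -> tuple[list[str], list[list[int]]]:
    """Tokenize documents and turn each into a term-count vector over a shared vocabulary."""
    tokenized = [re.findall(r"[a-z]+", doc.lower()) for doc in documents]
    vocabulary = sorted({token for tokens in tokenized for token in tokens})
    vectors = []
    for tokens in tokenized:
        counts = Counter(tokens)
        vectors.append([counts[term] for term in vocabulary])
    return vocabulary, vectors
```

Both functions turn variable-shaped raw input into fixed-length numeric vectors, which is the form most machine learning models expect.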

At Rapid Innovation, we leverage the Feature Extraction Module to help clients optimize their data processing workflows, leading to improved model accuracy and ultimately greater ROI. This includes both classical feature extraction techniques and deep learning models that learn features directly from data. For a more in-depth understanding of these processes, you can read about pattern recognition in machine learning.
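For the signal-processing technique listed above, a Fourier transform turns a window of time-series samples into frequency components whose magnitudes can serve as features. The sketch below computes a naive discrete Fourier transform (O(n²), for illustration only; real pipelines would use an FFT such as `numpy.fft.rfft`) and returns the dominant frequency. The function names, sample rate, and test signal are assumptions.

```python
import cmath
import math


def dft_magnitudes(samples: list[float]) -> list[float]:
    """Magnitudes of the discrete Fourier transform for bins 0..n/2 (naive O(n^2) version)."""
    n = len(samples)
    mags = []
    for k in range(n // 2 + 1):
        total = sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(samples))
        mags.append(abs(total))
    return mags


def dominant_frequency(samples: list[float], sample_rate: float) -> float:
    """Frequency (Hz) of the strongest non-DC component: a simple spectral feature."""
    mags = dft_magnitudes(samples)
    k = max(range(1, len(mags)), key=lambda i: mags[i])   # skip bin 0 (the mean / DC offset)
    return k * sample_rate / len(samples)
```

Bin k corresponds to k × sample_rate / n hertz, which is why the returned frequency depends on both the sample rate and the window length.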

3.3. Matching Algorithm

The Matching Algorithm is essential for comparing and identifying similarities between different sets of data. This algorithm plays a vital role in various fields, including image recognition, biometric identification, and recommendation systems.

- Purpose:

- To determine the degree of similarity or difference between two or more data sets.

- To facilitate decision-making processes based on the comparison results.

- Types of Matching Algorithms:

- Exact matching: This method looks for identical matches between data sets, often used in database queries.

- Fuzzy matching: This approach allows for approximate matches, accommodating minor differences or errors in data.

- Machine learning-based matching: Algorithms like k-nearest neighbors (KNN) or support vector machines (SVM) are used to classify and match data based on learned patterns.

- Applications:

- In facial recognition systems, matching algorithms compare facial features to identify individuals.

- In e-commerce, recommendation systems use matching algorithms to suggest products based on user preferences and behavior.

- In plagiarism detection, algorithms compare text documents to identify similarities and potential copying.
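The difference between exact and fuzzy matching can be shown with Python's standard-library `difflib`, whose `SequenceMatcher.ratio()` returns a similarity score between 0 and 1. The 0.85 threshold and the lowercase/whitespace normalization are assumptions chosen for illustration; dedicated fuzzy-matching libraries and domain-specific thresholds are common in practice.

```python
from difflib import SequenceMatcher


def exact_match(query: str, candidates: list[str]) -> list[str]:
    """Exact matching: only identical strings qualify."""
    return [c for c in candidates if c == query]


def fuzzy_match(query: str, candidates: list[str],
                threshold: float = 0.85) -> list[tuple[str, float]]:
    """Fuzzy matching: keep candidates whose similarity meets the threshold, best first."""
    def normalize(s: str) -> str:
        return " ".join(s.lower().split())   # ignore case and extra whitespace

    scored = [(c, SequenceMatcher(None, normalize(query), normalize(c)).ratio())
              for c in candidates]
    matches = [pair for pair in scored if pair[1] >= threshold]
    return sorted(matches, key=lambda pair: pair[1], reverse=True)
```

With the query "John Smith", exact matching finds only the identical string, while fuzzy matching also accepts a misspelling such as "Jon Smith" and a differently cased, double-spaced "john  smith", and still rejects a different name such as "Jane Smyth".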

Rapid Innovation employs advanced Matching Algorithms to enhance our clients' systems, ensuring they can make informed decisions quickly and accurately, thus maximizing their operational efficiency and ROI.
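For the machine-learning-based matching described above, a k-nearest-neighbors matcher can be sketched in a few lines: represent each item as a feature vector, score candidates by cosine similarity, and return the top k. The item names and vectors are made up for illustration, and the brute-force scan suits only small catalogs; large systems typically rely on approximate nearest-neighbor indexes.

```python
import heapq
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means the same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def k_nearest(query: list[float], catalog: dict[str, list[float]],
              k: int = 2) -> list[tuple[str, float]]:
    """Brute-force k-nearest-neighbor search: score every item, keep the k most similar."""
    scored = ((name, cosine_similarity(query, vec)) for name, vec in catalog.items())
    return heapq.nlargest(k, scored, key=lambda pair: pair[1])
```

In a recommendation setting, `query` would be a vector describing a user's preferences and `catalog` the product vectors; the returned neighbors are the suggested items.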

3.4. Cross-Platform Adapter Framework

The Cross-Platform Adapter Framework is designed to facilitate communication and interoperability between different software platforms and systems. This framework is crucial in today's diverse technological landscape, where applications often need to work seamlessly across various environments.

- Purpose:

- To enable different systems to communicate and share data effectively, regardless of their underlying technologies.

- To reduce development time and costs by providing a standardized approach to integration.

- Key Features:

- Abstraction: The framework abstracts the complexities of different platforms, allowing developers to focus on functionality rather than compatibility.

- Scalability: It supports the integration of multiple systems, making it easier to expand and adapt as business needs change.

- Flexibility: The framework can accommodate various protocols and data formats, ensuring smooth communication between disparate systems.

- Applications:

- In enterprise environments, the framework allows different software applications to work together, enhancing productivity and efficiency.

- In mobile app development, it enables apps to function across different operating systems, such as iOS and Android.

- In cloud computing, the framework facilitates the integration of cloud services with on-premises systems, providing a unified experience for users.
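The abstraction and flexibility features above amount to the adapter pattern: callers depend on one common interface, and each adapter translates a platform's native format into it. The Python sketch below is an illustration under assumptions; the `Order` type, the `OrderSource` interface, the two adapters, and their payload formats are hypothetical and do not describe a specific product's API.

```python
import csv
import io
import json
from dataclasses import dataclass
from decimal import Decimal
from typing import Protocol


@dataclass(frozen=True)
class Order:
    """Platform-neutral representation the rest of the system works with."""
    order_id: str
    total_cents: int


class OrderSource(Protocol):
    """Common interface every (hypothetical) platform adapter implements."""
    def fetch_orders(self) -> list[Order]: ...


class JsonApiAdapter:
    """Adapts a hypothetical cloud service that returns JSON with decimal totals."""

    def __init__(self, payload: str):
        self.payload = payload

    def fetch_orders(self) -> list[Order]:
        data = json.loads(self.payload, parse_float=Decimal)   # avoid float rounding on money
        return [Order(o["id"], int(o["total"] * 100)) for o in data["orders"]]


class CsvExportAdapter:
    """Adapts a hypothetical on-premises system that exports CSV with totals in cents."""

    def __init__(self, text: str):
        self.text = text

    def fetch_orders(self) -> list[Order]:
        reader = csv.DictReader(io.StringIO(self.text))
        return [Order(row["ORDER_NO"], int(row["AMOUNT_CENTS"])) for row in reader]


def combined_revenue(sources: list[OrderSource]) -> int:
    """Business logic written once against the common interface."""
    return sum(order.total_cents for source in sources for order in source.fetch_orders())
```

Adding a new platform means writing one more adapter; `combined_revenue` and any other business logic stay unchanged, which is where the reduced integration cost comes from.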

At Rapid Innovation, our Cross-Platform Adapter Framework empowers clients to achieve seamless integration across their technology stack, reducing costs and improving overall system performance, which translates to a higher return on investment.

Refer to the image for a visual representation of the Feature Extraction Module and its components.

3.5. Results Generator

The Results Generator is a crucial component in various applications, particularly in data analysis, machine learning, and content creation. It serves to transform raw data or inputs into meaningful outputs that can be easily interpreted and utilized, ultimately driving business efficiency and effectiveness.

- Generates insights from data: The Results Generator processes input data to produce actionable insights, making it easier for users to understand complex information. This capability allows businesses to make informed decisions quickly, leading to improved ROI. Data analytics and data analysis software can further enhance this process.

- Customizable output formats: Users can often customize the format of the results, whether they need charts, graphs, or textual summaries, enhancing usability. This flexibility ensures that stakeholders can access information in the way that best suits their needs, especially when using business analytics software.

- Integration with other tools: Many Results Generators can integrate with other software tools, allowing for seamless data flow and enhanced functionality. This integration helps organizations streamline their processes and maximize the value of their existing technology investments, particularly their data analytics tools.

- Real-time processing: Some advanced generators can provide results in real time, which is essential for applications requiring immediate feedback, such as financial trading or live data analysis. This capability enables businesses to respond swiftly to market changes and optimize their strategies, especially in predictive data analytics.

- User-friendly interface: A well-designed Results Generator features an intuitive interface that allows users to easily input data and interpret results without needing extensive technical knowledge. This accessibility empowers teams across the organization to use data effectively, fostering a data-driven culture.
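The customizable-output point can be sketched as one function that computes insights once and renders them in whichever format the stakeholder asks for. The function name, the input fields (`region`, `amount`), the supported formats (`text`, `json`, `csv`), and the "top region" insight are all assumptions for illustration.

```python
import csv
import io
import json
from collections import defaultdict


def generate_results(sales: list[dict], fmt: str = "text") -> str:
    """Aggregate raw sales rows by region and render the result as text, JSON, or CSV."""
    totals: dict[str, float] = defaultdict(float)
    for row in sales:
        totals[row["region"]] += row["amount"]
    if not totals:
        raise ValueError("no data to summarize")
    ranked = sorted(totals.items(), key=lambda item: item[1], reverse=True)

    if fmt == "json":       # machine-readable, e.g. for dashboards or other tools
        return json.dumps({"totals": dict(ranked), "top_region": ranked[0][0]})
    if fmt == "csv":        # spreadsheet-friendly
        buffer = io.StringIO()
        writer = csv.writer(buffer, lineterminator="\n")
        writer.writerow(["region", "total"])
        writer.writerows(ranked)
        return buffer.getvalue()
    if fmt == "text":       # human-readable summary
        lines = [f"{region}: {total:,.2f}" for region, total in ranked]
        return f"Top region: {ranked[0][0]}\n" + "\n".join(lines)
    raise ValueError(f"unsupported format: {fmt}")
```

Separating the computation (aggregation and ranking) from the rendering keeps every output format consistent, since all of them are derived from the same `ranked` result.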

4. Content Processing

Content Processing refers to the methods and technologies used to analyze, manipulate, and generate content from various sources. This process is vital in fields such as digital marketing, content management, and artificial intelligence.

Content Processing often involves natural language processing (NLP) techniques to analyze text data, extracting key themes, sentiments, and entities. It encompasses the processing of various content types, including images, videos, and audio, ensuring that all forms of media are effectively utilized. Many content processing systems automate repetitive tasks, such as data entry or content formatting, saving time and reducing human error. Additionally, Content Processing includes mechanisms for quality assurance, ensuring that the generated content meets specific standards and is free from errors. Effective content processing systems can scale to handle large volumes of data, making them suitable for businesses of all sizes, particularly those utilizing data and analytics tools.

4.1. Supported Content Types

Supported Content Types refer to the various formats and categories of content that a system or application can process. Understanding these types is essential for maximizing the effectiveness of content processing tools.

- Text: This includes articles, blogs, reports, and any written material. Text processing often involves grammar checks, sentiment analysis, and keyword extraction, which can be enhanced with AI-based data analysis.

- Images: Systems can process image files (JPEG, PNG, GIF) for tasks such as image recognition, tagging, and enhancement.

- Audio: Supported audio formats (MP3, WAV, AAC) can be analyzed for transcription, sentiment analysis, and sound quality improvement.

- Video: Video content (MP4, AVI, MOV) can be processed for editing, summarization, and metadata extraction, which can be improved with metadata analysis.

- Structured data: This includes databases and spreadsheets, which can be analyzed for trends, patterns, and insights, particularly through database analytics tools.
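A processing system typically routes each input to a handler based on its content type. The sketch below uses Python's standard `mimetypes` module to guess a type from the file name and map it to one of the categories listed above; the task names are hypothetical, and relying on file extensions alone is a simplifying assumption, since robust systems also inspect the file contents.

```python
import mimetypes

# Hypothetical processing tasks per content category, mirroring the list above.
TASKS = {
    "text": ["grammar_check", "sentiment", "keywords"],
    "image": ["recognition", "tagging"],
    "audio": ["transcription", "sentiment"],
    "video": ["summarization", "metadata_extraction"],
    "structured": ["trend_analysis"],
}

# Table-like MIME types are checked first so that CSV is not treated as plain text.
STRUCTURED_TYPES = {
    "text/csv",
    "text/comma-separated-values",
    "application/json",
    "application/vnd.ms-excel",
    "application/vnd.openxmlformats-officedocument.spreadsheetml.sheet",
}


def classify(filename: str) -> str:
    """Map a file name to a content category using its guessed MIME type."""
    mime, _encoding = mimetypes.guess_type(filename)
    if mime is None:
        raise ValueError(f"cannot determine content type of {filename!r}")
    if mime in STRUCTURED_TYPES:
        return "structured"
    major = mime.split("/")[0]                 # e.g. "image" from "image/png"
    if major in ("text", "image", "audio", "video"):
        return major
    raise ValueError(f"unsupported content type {mime!r} for {filename!r}")


def plan_processing(filenames: list[str]) -> dict[str, list[str]]:
    """Return the processing tasks each file would be routed to."""
    return {name: TASKS[classify(name)] for name in filenames}
```

Raising an error for unknown or unsupported types, rather than guessing, makes gaps in content-type support visible instead of silently mis-processing files.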

By understanding the capabilities of the Results Generator, Content Processing, and Supported Content Types, users can leverage these tools to enhance their data analysis and content creation efforts and, with Rapid Innovation's expertise in AI for data analytics and business intelligence, achieve better business outcomes.

4.2. Content Normalization

Content normalization is a crucial process in data management and information retrieval. It involves standardizing content to ensure consistency and improve the quality of data across various platforms. This process is essential for effective data analysis, search engine optimization (SEO), and user experience.

- Ensures uniformity in data formats, making it easier to process and analyze.

- Helps in removing duplicates and irrelevant information, enhancing data quality.

- Facilitates better indexing by search engines, improving visibility and ranking.

- Supports various content types, including text, images, and videos, ensuring they are all treated uniformly.

- Enhances the ability to compare and contrast different data sets, leading to more informed decision-making.
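To make the idea concrete, here is a minimal Python sketch of text normalization followed by de-duplication. It assumes that the desired canonical form is Unicode NFKC, case folding, and collapsed whitespace; real pipelines usually add domain-specific rules (date formats, units, encodings). The function names are illustrative, not part of any specific product.

```python
import re
import unicodedata


def normalize_text(text: str) -> str:
    """Return a canonical form of `text` suitable for comparison and indexing."""
    # NFKC folds compatibility characters (e.g. full-width letters, ligatures)
    text = unicodedata.normalize("NFKC", text)
    # casefold() is a more aggressive lower() intended for caseless matching
    text = text.casefold()
    # Collapse runs of whitespace (spaces, tabs, newlines) into a single space
    text = re.sub(r"\s+", " ", text)
    return text.strip()


def deduplicate(records: list[str]) -> list[str]:
    """Keep the first record for each distinct normalized form, preserving order."""
    seen: set[str] = set()
    unique: list[str] = []
    for record in records:
        key = normalize_text(record)
        if key not in seen:
            seen.add(key)
            unique.append(record)
    return unique


if __name__ == "__main__":
    docs = ["Hello   World", "hello world\n", "ＨＥＬＬＯ world", "Goodbye"]
    print(deduplicate(docs))  # ['Hello   World', 'Goodbye']
```

The original records are returned unchanged; only the normalized key is used for comparison, so no information is lost from the stored content.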

By implementing content normalization, organizations can streamline their data management processes, leading to improved efficiency and effectiveness in their operations. At Rapid Innovation, we leverage advanced AI algorithms to automate and optimize content normalization, ensuring that our clients achieve greater ROI through enhanced data quality and operational efficiency. For more information on how we can assist you, check out our content discovery workflow and our AI business automation solutions.

4.3. Metadata Extraction

Metadata extraction is the process of retrieving and organizing metadata from various content sources. Metadata is essential as it provides context and information about the data, making it easier to manage and utilize effectively.

- Involves identifying key attributes such as title, author, date, and keywords associated with the content.

- Enhances searchability by allowing users to find relevant information quickly.

- Supports content categorization, making it easier to organize and retrieve data.

- Plays a vital role in SEO by providing search engines with essential information about the content.

- Can be automated using various tools and technologies, saving time and reducing human error.
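As one example of automated extraction, the sketch below pulls the title and named meta tags (author, date, keywords, and similar) out of an HTML page using only Python's standard-library HTMLParser. It assumes the source is HTML that declares metadata in meta tags; PDFs, images, and office documents need format-specific parsers. The class and function names are hypothetical.

```python
from html.parser import HTMLParser


class MetadataExtractor(HTMLParser):
    """Collect the <title> text and named <meta> tags from an HTML document."""

    def __init__(self) -> None:
        super().__init__()
        self.metadata: dict[str, str] = {}
        self._title_parts: list[str] = []
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        if tag == "title":
            self._in_title = True
        elif tag == "meta":
            attributes = dict(attrs)
            # Covers <meta name="author" ...> and Open Graph-style <meta property="og:title" ...>
            key = attributes.get("name") or attributes.get("property")
            content = attributes.get("content")
            if key and content is not None:
                self.metadata[key.lower()] = content.strip()

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False
            # Title text may arrive in several chunks; join them once the tag closes
            self.metadata["title"] = "".join(self._title_parts).strip()

    def handle_data(self, data):
        if self._in_title:
            self._title_parts.append(data)


def extract_metadata(html: str) -> dict[str, str]:
    """Return lower-cased metadata keys (title, author, date, keywords, ...) mapped to values."""
    parser = MetadataExtractor()
    parser.feed(html)
    parser.close()
    return parser.metadata
```

Keywords come back as a single comma-separated string; splitting them into a list is a natural next step before indexing.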

Effective metadata extraction can significantly improve content management systems, leading to better user experiences and more efficient data retrieval. Rapid Innovation employs cutting-edge AI techniques to automate metadata extraction, enabling our clients to enhance their content discoverability and drive higher engagement rates.

4.4. Content Fingerprinting

Content fingerprinting is a technique used to identify and track digital content across various platforms. This method creates a unique identifier or "fingerprint" for each piece of content, allowing for easy recognition and management.

- Helps in detecting duplicate content, which is crucial for maintaining content integrity and SEO.

- Enables copyright protection by tracking the usage of original content across the web.

- Assists content recommendation systems by recognizing the same item across sources and re-uploads, so that user behavior and preferences are attributed to the right content.

- Supports content verification by confirming that a piece of content has not been altered since it was fingerprinted.

- Can be applied to various content types, including text, audio, and video, making it a versatile tool.
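For text, a fingerprint can be as simple as a cryptographic hash of normalized content (for exact duplicates) combined with word "shingles" compared by Jaccard similarity (for near duplicates). The Python sketch below shows both under those assumptions; large-scale systems typically approximate the shingle comparison with MinHash or SimHash, and audio or video fingerprinting relies on perceptual features rather than raw bytes. The function names are illustrative.

```python
import hashlib
import re


def exact_fingerprint(text: str) -> str:
    """SHA-256 of lightly normalized text: identical content yields an identical ID."""
    canonical = re.sub(r"\s+", " ", text.lower()).strip()
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def shingles(text: str, k: int = 3) -> set[str]:
    """Set of overlapping k-word sequences used for near-duplicate detection."""
    words = re.findall(r"\w+", text.lower())
    if len(words) < k:
        # Very short texts become a single shingle (or none if empty)
        return {" ".join(words)} if words else set()
    return {" ".join(words[i:i + k]) for i in range(len(words) - k + 1)}


def near_duplicate_score(a: str, b: str, k: int = 3) -> float:
    """Jaccard similarity of the two shingle sets; 1.0 means the same shingles."""
    sa, sb = shingles(a, k), shingles(b, k)
    if not sa and not sb:
        return 1.0
    return len(sa & sb) / len(sa | sb)
```

The exact fingerprint is cheap to store and index, while the shingle score catches lightly edited copies that a hash alone would miss.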

By utilizing content fingerprinting, organizations can protect their intellectual property, enhance user engagement, and improve overall content management strategies. At Rapid Innovation, we integrate content fingerprinting solutions that empower our clients to safeguard their assets while maximizing their content's reach and impact.

4.5. Version Control

Version control is a critical component in software development and data management, allowing teams to track changes, collaborate effectively, and maintain the integrity of their projects. It provides a systematic way to manage changes to code, documents, and other digital assets.

- Collaboration: Multiple team members can work on the same project simultaneously without overwriting each other's contributions. This is achieved through branching and merging strategies, which Rapid Innovation leverages to enhance team productivity and streamline workflows. Systems such as Git and Apache Subversion (SVN) are commonly used for this purpose.

- History Tracking: Version control systems maintain a history of changes, enabling developers to revert to previous versions if necessary. This is particularly useful for debugging and understanding the evolution of a project, ensuring that Rapid Innovation's clients can maintain high-quality standards throughout the development process. Both Git and SVN record a complete revision history that can be inspected and restored.

- Backup and Recovery: Because every committed change is stored, version control systems make it easy to recover earlier versions or accidentally deleted work. This is crucial for Rapid Innovation's clients, as it minimizes downtime and protects valuable intellectual property. Version control does not replace backups, however: in Git every clone carries the full history, while a central SVN repository should itself be backed up, for example with svnadmin dump or svnadmin hotcopy.

- Code Review: Many version control workflows support code reviews, where team members comment on changes before they are merged into the main codebase. This enhances code quality and fosters knowledge sharing, aligning with Rapid Innovation's commitment to delivering robust and reliable solutions. With Git, reviews typically happen on hosting platforms, for example through pull requests on GitHub or merge requests on GitLab.

- Integration with CI/CD: Version control integrates seamlessly with Continuous Integration and Continuous Deployment (CI/CD) pipelines, automating testing and deployment processes. Rapid Innovation utilizes this integration to ensure that clients can deploy updates quickly and efficiently, maximizing their return on investment. Triggering CI/CD pipelines automatically on Git commits, pull requests, or tags is common practice.

Popular version control systems include Git, Subversion (SVN), and Mercurial. Git, in particular, has become the industry standard due to its distributed nature and robust feature set, while centralized systems such as SVN remain in use by teams that prefer a single authoritative repository.

5. Matching Engine

A matching engine is a core component of trading platforms, e-commerce sites, and various applications that require the pairing of buyers and sellers or the alignment of similar items. It plays a crucial role in ensuring efficient transactions and user satisfaction.

- Functionality: The matching engine processes incoming orders and matches them with existing orders based on predefined criteria, such as price and time. Rapid Innovation can develop customized matching engines tailored to specific business needs, enhancing operational efficiency.

- Speed and Efficiency: High-performance matching engines can handle thousands of transactions per second, making them essential for high-frequency trading environments. Rapid Innovation's expertise in AI allows us to optimize these engines for maximum throughput and minimal latency.

- Order Types: It supports various order types, including market orders, limit orders, and stop orders, allowing users to execute trades according to their strategies. Our solutions ensure that clients can implement complex trading strategies effectively.

- Market Data: The engine continuously updates market data, providing users with real-time information on prices and available orders. Rapid Innovation can integrate advanced analytics to help clients make informed decisions based on real-time data.

- Scalability: A well-designed matching engine can scale to accommodate increasing transaction volumes, ensuring that performance remains consistent as user demand grows. Rapid Innovation focuses on building scalable solutions that grow with our clients' businesses.

Matching engines are vital in sectors like finance, where they facilitate the buying and selling of stocks, and in e-commerce, where they help connect buyers with products.
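The price-time priority rule described above can be illustrated with a small limit-order book. This is a minimal Python sketch, assuming only limit orders, a single instrument, and float prices; production engines use integer price ticks, support more order types, and are heavily optimized for latency. The Order and OrderBook names are hypothetical.

```python
import heapq
import itertools
from dataclasses import dataclass


@dataclass
class Order:
    order_id: str
    side: str        # "buy" or "sell"
    price: float     # limit price
    quantity: int


class OrderBook:
    """Minimal limit-order book that matches with price-time priority."""

    def __init__(self) -> None:
        # Heaps hold (priority, arrival_seq, order); the unique seq means Orders are never compared
        self._bids: list = []  # best (highest) bid first, via negated price
        self._asks: list = []  # best (lowest) ask first
        self._seq = itertools.count()

    def submit(self, order: Order) -> list[tuple[str, str, float, int]]:
        """Match `order` against the opposite side, rest any remainder, return the fills."""
        if order.side == "buy":
            book, crosses = self._asks, lambda resting: resting.price <= order.price
        elif order.side == "sell":
            book, crosses = self._bids, lambda resting: resting.price >= order.price
        else:
            raise ValueError(f"unknown side {order.side!r}")

        fills = []
        while order.quantity > 0 and book and crosses(book[0][2]):
            resting = book[0][2]
            traded = min(order.quantity, resting.quantity)
            # Trades execute at the resting order's price (it was in the book first)
            fills.append((order.order_id, resting.order_id, resting.price, traded))
            order.quantity -= traded
            resting.quantity -= traded
            if resting.quantity == 0:
                heapq.heappop(book)

        if order.quantity > 0:  # unfilled remainder rests in the book
            seq = next(self._seq)
            if order.side == "buy":
                heapq.heappush(self._bids, (-order.price, seq, order))
            else:
                heapq.heappush(self._asks, (order.price, seq, order))
        return fills
```

Heaps keep the best price at the top, and the arrival counter breaks ties so that, at the same price, the earlier order fills first.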

5.1. Similarity Metrics

Similarity metrics are mathematical measures used to quantify how alike two or more items are. They are essential in various fields, including machine learning, data mining, and information retrieval, particularly in applications like recommendation systems and clustering.

- Types of Similarity Metrics:

- Cosine Similarity: Measures the cosine of the angle between two non-zero vectors, often used in text analysis to determine document similarity.

- Euclidean Distance: Calculates the straight-line distance between two points in a multi-dimensional space, commonly used in clustering algorithms.

- Jaccard Index: Evaluates the similarity between two sets by dividing the size of the intersection by the size of the union, useful in comparing binary data.

- Applications:

- Recommendation Systems: Similarity metrics help suggest products or content to users based on their preferences and behaviors. Rapid Innovation can implement these systems to enhance user engagement and drive sales for our clients.

- Clustering: In unsupervised learning, similarity metrics are used to group similar data points together, aiding in pattern recognition. Our expertise in AI allows us to develop sophisticated clustering algorithms that provide actionable insights.

- Image Recognition: Similarity metrics can compare image features to identify similar images or classify them into categories, enabling innovative applications in various industries.

- Choosing the Right Metric: The choice of similarity metric depends on the nature of the data and the specific application. For instance, cosine similarity is often preferred for text represented as term or embedding vectors, because it compares direction rather than magnitude and is therefore less sensitive to document length, while Euclidean distance is often used for numerical data on comparable scales. Rapid Innovation assists clients in selecting and implementing the most effective metrics for their unique needs.

Understanding and implementing effective similarity metrics can significantly enhance the performance of algorithms and systems that rely on data comparison and analysis, ultimately leading to greater ROI for our clients.
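The three metrics listed above translate directly into code. The following is a plain-Python sketch with no external dependencies; libraries such as NumPy or SciPy provide vectorized equivalents for large datasets. Treating two empty sets as identical in the Jaccard function is a convention chosen here, not a universal rule.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between a and b: (a . b) / (|a| * |b|)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for zero vectors")
    return sum(x * y for x, y in zip(a, b)) / (norm_a * norm_b)


def euclidean_distance(a: list[float], b: list[float]) -> float:
    """Straight-line distance; math.dist raises ValueError if dimensions differ."""
    return math.dist(a, b)


def jaccard_index(a: set, b: set) -> float:
    """Size of the intersection divided by size of the union (1.0 for two empty sets)."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)
```

Note that [1, 2, 3] and [2, 4, 6] have cosine similarity 1 (same direction) yet a non-zero Euclidean distance, which is exactly why the choice of metric matters.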

5.2. Machine Learning Models

Machine learning models are algorithms that enable computers to learn from data and make predictions or decisions without being explicitly programmed. These models are essential in various applications, including natural language processing, image recognition, and predictive analytics. At Rapid Innovation, we leverage these models, including convolutional neural networks and random forests, to help our clients achieve their business goals efficiently and effectively, ultimately driving greater ROI.

- Types of machine learning models:

- Supervised learning: Involves training a model on labeled data, where the outcome is known. Examples include regression and classification tasks. For instance, we have assisted a healthcare client in developing a supervised learning model to predict patient outcomes, leading to improved treatment plans and reduced costs. Techniques such as linear regression and logistic regression, both available in libraries like scikit-learn, are commonly used in this category.

- Unsupervised learning: Involves training a model on unlabeled data to identify patterns or groupings. Clustering and dimensionality reduction are common techniques. A retail client benefited from our unsupervised learning approach to segment customers, enabling targeted marketing strategies that increased sales. Restricted Boltzmann machines are an example of unsupervised learning models we utilize.

- Reinforcement learning: Involves training a model to make decisions by rewarding desired actions and penalizing undesired ones, often used in robotics and game playing. We have implemented reinforcement learning solutions for clients in logistics, optimizing their supply chain operations and reducing delivery times.

- Key components of machine learning models:

- Data: The quality and quantity of data significantly impact model performance. Larger, more representative datasets generally lead to better generalization. Rapid Innovation emphasizes data quality, ensuring our clients have the right data to train their models effectively.

- Features: Selecting the right features is crucial for model accuracy, and feature engineering can further improve performance. We work closely with clients to identify and engineer features that drive better model outcomes.

- Algorithms: Various algorithms, such as decision trees, neural networks, support vector machines, and ensemble methods, can be employed based on the problem type. Our team at Rapid Innovation stays current with the latest approaches, from linear regression in scikit-learn to gradient-boosted models such as XGBoost, to provide clients with solutions tailored to their needs.

- Applications of machine learning models:

- Healthcare: Predicting patient outcomes and diagnosing diseases.

- Finance: Fraud detection and credit scoring.

- Marketing: Customer segmentation and personalized recommendations.
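To show what "learning from labeled data" means in the simplest case, here is a Python sketch of supervised learning: ordinary least squares for a single feature, fitted in closed form. In practice one would use a library implementation such as scikit-learn's LinearRegression; this dependency-free version only illustrates the fit-then-predict cycle, and the function names are illustrative.

```python
def fit_linear_regression(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Fit y = slope * x + intercept by ordinary least squares (one feature)."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need at least two paired observations")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # slope = covariance(x, y) / variance(x); the 1/n factors cancel
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x values must not all be identical")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    # The fitted line always passes through the point of means
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(model: tuple[float, float], x: float) -> float:
    """Apply the learned parameters to a new, unlabeled input."""
    slope, intercept = model
    return slope * x + intercept


if __name__ == "__main__":
    model = fit_linear_regression([1, 2, 3, 4], [3, 5, 7, 9])  # labeled training data
    print(model, predict(model, 10))  # (2.0, 1.0) 21.0
```

Training produces parameters from labeled examples; prediction reuses those parameters on inputs whose outcome is unknown, which is the same split that more complex models follow.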

5.3. Pattern Recognition

Pattern recognition is a branch of machine learning that focuses on identifying patterns and regularities in data. It plays a vital role in various fields, including computer vision, speech recognition, and data mining. Rapid Innovation utilizes pattern recognition techniques to help clients extract valuable insights from their data, enhancing decision-making processes.

- Types of pattern recognition:

- Statistical pattern recognition: Involves using statistical methods to classify data based on its features. This approach often requires a training set to build a model. We have successfully implemented statistical pattern recognition for clients in finance to detect fraudulent transactions.

- Structural pattern recognition: Focuses on the arrangement of data and relationships between elements. It is commonly used in image and shape recognition. Our work in this area has enabled clients in manufacturing to automate quality control processes.

- Syntactic pattern recognition: Involves using grammar-based approaches to recognize patterns, often applied in natural language processing. We have developed NLP solutions for clients in customer service, improving response accuracy and customer satisfaction.

- Key techniques in pattern recognition:

- Feature extraction: Identifying and selecting relevant features from raw data to improve classification accuracy.

- Classification: Assigning labels to data based on learned patterns. Common classifiers include k-nearest neighbors, neural networks, and support vector machines.

- Clustering: Grouping similar data points together without prior labeling, useful for exploratory data analysis.

- Applications of pattern recognition:

- Image processing: Facial recognition and object detection.

- Speech recognition: Converting spoken language into text.

- Medical diagnosis: Identifying diseases from medical images.
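Classification with k-nearest neighbors, one of the classifiers named above, fits in a few lines. The sketch below assumes numeric feature vectors, Euclidean distance, and a majority vote; real systems usually scale features first and use spatial indexes instead of sorting every training point. The function name is hypothetical.

```python
import math
from collections import Counter


def knn_classify(train, query, k=3):
    """Predict a label for `query` by majority vote of its k nearest neighbours.

    `train` is a list of (feature_vector, label) pairs; distance is Euclidean.
    """
    if not 1 <= k <= len(train):
        raise ValueError("k must be between 1 and the number of training examples")
    # Sort training examples by distance to the query and keep the k closest
    nearest = sorted(train, key=lambda example: math.dist(example[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    # most_common() lists tied labels in first-seen order, so ties go to the closer neighbour
    return votes.most_common(1)[0][0]
```

Because k-NN stores the training data instead of learning parameters, it is easy to update but gets slower as the dataset grows.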

5.4. Fuzzy Matching

Fuzzy matching is a technique used to identify similarities between data entries that may not be identical but are close enough to be considered a match. This approach is particularly useful in data cleaning, record linkage, and natural language processing. Rapid Innovation employs fuzzy matching techniques to help clients maintain clean and accurate datasets, which is crucial for effective decision-making.

- Key concepts in fuzzy matching:

- Similarity measures: Various algorithms, such as Levenshtein distance and Jaccard index, quantify how similar two strings or data entries are.

- Thresholds: Setting a similarity threshold helps determine whether two entries should be considered a match. This threshold can be adjusted based on the specific application.

- Techniques for fuzzy matching:

- Tokenization: Breaking down strings into smaller components (tokens) to facilitate comparison.

- Soundex and Metaphone: Algorithms that convert words into phonetic representations, allowing for matching based on pronunciation rather than spelling.

- N-grams: Dividing strings into overlapping sequences of n characters, which can help identify similarities in longer texts.

- Applications of fuzzy matching:

- Data deduplication: Identifying and merging duplicate records in databases.

- Search engines: Improving search results by matching queries with similar terms.

- Customer relationship management: Linking customer records that may have variations in spelling or formatting.
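A common way to combine a similarity measure with a threshold is to normalize Levenshtein edit distance into a 0 to 1 score. The Python sketch below assumes case-insensitive comparison and an illustrative threshold of 0.8, which in practice should be tuned on labeled pairs from the target data. Function names are hypothetical.

```python
def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character insertions, deletions, or substitutions."""
    if len(a) < len(b):
        a, b = b, a  # keep the dynamic-programming row as short as possible
    previous = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        current = [i]
        for j, cb in enumerate(b, start=1):
            current.append(min(
                previous[j] + 1,               # deletion
                current[j - 1] + 1,            # insertion
                previous[j - 1] + (ca != cb),  # substitution (free if characters match)
            ))
        previous = current
    return previous[-1]


def similarity(a: str, b: str) -> float:
    """Edit distance scaled to [0, 1], where 1.0 means identical strings."""
    if not a and not b:
        return 1.0
    return 1 - levenshtein(a, b) / max(len(a), len(b))


def is_match(a: str, b: str, threshold: float = 0.8) -> bool:
    """Treat two entries as the same record if their similarity meets the threshold."""
    return similarity(a.casefold().strip(), b.casefold().strip()) >= threshold
```

For example, "Jon Smith" and "John Smith" differ by one insertion, giving a similarity of 0.9, so they would be linked at this threshold.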

At Rapid Innovation, we are committed to helping our clients harness machine learning (from decision trees to deep learning models), pattern recognition, and fuzzy matching to achieve their business objectives and maximize their return on investment.

5.5. Confidence Scoring

Confidence scoring is a critical component in various applications, particularly in machine learning and artificial intelligence. It quantifies the certainty of a model's predictions, helping users understand the reliability of the results.

- Confidence scores are typically expressed as a probability between 0 and 1 (often displayed as a percentage), indicating how confident the model is in its prediction.

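- A higher confidence score suggests a more reliable prediction, while a lower score indicates uncertainty. This holds only if the model is well calibrated; many models produce over- or under-confident scores that need calibration before they can be read as probabilities.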

- This scoring system is essential in fields like finance, healthcare, and autonomous vehicles, where decisions based on predictions can have significant consequences.

- For instance, in fraud detection, a confidence score can help determine whether a transaction is legitimate or suspicious.

- Users can set thresholds for action based on these scores, allowing for more informed decision-making.
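Threshold-based decisions can be sketched as follows: raw model scores (logits) are turned into probabilities with softmax, and the top probability selects an action band. The thresholds (0.9 and 0.6) and the action names are hypothetical placeholders; in a real deployment they would be chosen from calibrated scores and the cost of each kind of error.

```python
import math


def softmax(logits: list[float]) -> list[float]:
    """Convert raw model scores into probabilities that sum to 1."""
    m = max(logits)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def route_prediction(labels, logits, auto_threshold=0.9, review_threshold=0.6):
    """Return (label, confidence, action), choosing the action by confidence band."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    confidence = probs[best]
    if confidence >= auto_threshold:
        action = "auto_accept"    # hypothetical: act without human involvement
    elif confidence >= review_threshold:
        action = "human_review"   # hypothetical: queue for an analyst
    else:
        action = "reject"         # hypothetical: too uncertain to use
    return labels[best], confidence, action
```

In a fraud-detection setting, for instance, a "legit" prediction at 0.98 confidence could be approved automatically, while one at 0.73 would be sent to an analyst.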

Incorporating confidence scoring into applications enhances transparency and trust in AI systems. It allows users to gauge the risk associated with a particular prediction and adjust their actions accordingly. At Rapid Innovation, we leverage confidence scoring to help our clients make data-driven decisions, ultimately leading to greater ROI by minimizing risks and optimizing operational efficiency. Additionally, our ChatGPT integration services can further enhance the capabilities of your applications by incorporating advanced AI functionalities.

6. Platform Integration

Platform integration refers to the process of connecting different software applications and systems to work together seamlessly. This is crucial for businesses looking to streamline operations and improve efficiency.

- Effective platform integration allows for data sharing between systems, reducing redundancy and improving accuracy.

- It can enhance user experience by providing a unified interface for accessing multiple services.

- Integration can be achieved through various methods, including middleware, APIs, and web services.

- Businesses can benefit from real-time data access, enabling quicker decision-making and responsiveness to market changes.

- A well-integrated platform can lead to cost savings by automating processes and reducing manual intervention.

Successful platform integration requires careful planning and execution. Organizations must consider compatibility, data security, and user training to ensure a smooth transition. Rapid Innovation specializes in platform integration, ensuring that our clients can maximize their technology investments and achieve their business goals efficiently.

6.1. API Specifications

API specifications define how different software components should interact with each other. They serve as a blueprint for developers, outlining the methods, data formats, and protocols required for integration.

- Clear API specifications are essential for ensuring that different systems can communicate effectively.

- They typically include details such as endpoints, request and response formats, authentication methods, and error handling.

- Well-documented APIs facilitate easier integration, allowing developers to understand how to use them without extensive trial and error.

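- Common API styles include REST, SOAP, and GraphQL, each with its own strengths and use cases. Their interfaces are typically described in machine-readable form: OpenAPI documents for REST, WSDL for SOAP, and a schema for GraphQL.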

- Adhering to industry standards in API design can enhance interoperability and reduce integration time.

By providing clear API specifications, organizations can foster collaboration between teams and third-party developers, leading to more innovative solutions and improved functionality. At Rapid Innovation, we ensure that our API specifications are robust and user-friendly, enabling our clients to integrate their systems seamlessly and drive greater business value.

6.2. Authentication Methods

Authentication methods are crucial for ensuring that only authorized users can access a system or application. Various techniques are employed to verify user identities, each with its own strengths and weaknesses.

- Username and Password: The most common method, where users create a unique username and password combination. However, this method is vulnerable to attacks like phishing and brute force.

- Multi-Factor Authentication (MFA): This adds an extra layer of security by requiring two or more verification methods. For example, a user may need to enter a password and then confirm their identity through a text message or an authenticator app such as Microsoft Authenticator. MFA significantly reduces the risk of unauthorized access.

- Biometric Authentication: This method uses unique biological traits, such as fingerprints or facial recognition, to authenticate users. While highly secure, it raises privacy concerns and requires specialized hardware.

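- OAuth and OpenID Connect: OAuth 2.0 is an authorization framework that lets an application access resources on a user's behalf, and OpenID Connect adds an identity layer on top of it so that users can sign in with existing accounts from trusted providers (like Google or Facebook). This simplifies the login process but relies on third-party services.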

- Token-Based Authentication: In this method, users receive a token after logging in, which they must present for subsequent requests. This is commonly used in APIs and web applications, enhancing security by not requiring the user to send their credentials repeatedly.

- Passwordless Login: This emerging method allows users to authenticate without a password, often using biometrics or one-time codes sent to their devices.

- Knowledge-Based Authentication: This method requires users to answer specific questions that only they should know. Because such answers can often be guessed or found online, it works best as a supplementary check rather than a primary factor.
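Token-based authentication can be illustrated with a signed, expiring token built from Python's standard library. This is a simplified sketch of the idea behind formats such as JWT, not a replacement for them: it assumes a single server-side secret (SECRET_KEY is a hypothetical placeholder that would normally come from a secret store) and HMAC-SHA256 signatures. Production systems should use a vetted library and a standard token format.

```python
import base64
import hashlib
import hmac
import json
import time

SECRET_KEY = b"replace-with-a-random-server-side-secret"  # hypothetical placeholder


def _b64(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def _unb64(text: str) -> bytes:
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def _sign(payload: str) -> str:
    return _b64(hmac.new(SECRET_KEY, payload.encode("ascii"), hashlib.sha256).digest())


def issue_token(user_id: str, ttl_seconds: int = 900, now=None) -> str:
    """Create 'payload.signature' where the payload carries the user ID and expiry time."""
    issued = time.time() if now is None else now
    claims = {"sub": user_id, "exp": issued + ttl_seconds}
    payload = _b64(json.dumps(claims).encode("utf-8"))
    return f"{payload}.{_sign(payload)}"


def verify_token(token: str, now=None):
    """Return the user ID if the token is authentic and unexpired, otherwise None."""
    try:
        payload, signature = token.split(".")
    except ValueError:
        return None  # malformed token
    # compare_digest avoids leaking information through timing differences
    if not hmac.compare_digest(signature, _sign(payload)):
        return None
    claims = json.loads(_unb64(payload))
    current = time.time() if now is None else now
    if current >= claims["exp"]:
        return None  # expired
    return claims["sub"]
```

After login the client sends the token with each request instead of its password; the server only needs the secret to check it, and any change to the payload invalidates the signature.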

At Rapid Innovation, we understand the importance of robust authentication methods in safeguarding your applications. By implementing advanced authentication techniques, such as Azure multi-factor authentication and biometric authentication, we help clients enhance security, reduce the risk of data breaches, and ultimately achieve greater ROI through increased user trust and satisfaction. For more information on setting up Microsoft MFA, see https://aka.ms/mfasetup. Additionally, our expertise extends to hybrid exchange development to further enhance your application's capabilities.

6.3. Rate Limiting

Rate limiting is a technique used to control the amount of incoming and outgoing traffic to or from a network. It helps protect systems from abuse and ensures fair usage among users.

- Preventing Abuse: Rate limiting can mitigate the risk of denial-of-service (DoS) attacks by limiting the number of requests a user can make in a given timeframe. This helps maintain system performance and availability.

- User Experience: By implementing rate limits, systems can prevent users from overwhelming the server, ensuring that all users have a smooth experience. This is particularly important for APIs, where excessive requests can lead to slow response times.

- Types of Rate Limiting:

- Global Rate Limiting: Applies limits across all users, ensuring that the total number of requests does not exceed a certain threshold.

- User-Based Rate Limiting: Limits the number of requests per user, which helps prevent a single user from monopolizing resources.

- IP-Based Rate Limiting: Restricts the number of requests from a specific IP address, useful for blocking malicious actors.

- Implementation Strategies: Rate limiting can be implemented using various algorithms, such as token bucket or leaky bucket, which determine how requests are processed based on predefined limits.
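The token bucket algorithm mentioned above is compact enough to sketch in full. In this Python version, a bucket holds up to `capacity` tokens, refills at `rate` tokens per second, and each request spends one token; keeping one bucket per key gives user-based or IP-based limiting. The class names and the injectable clock are illustrative choices, and a multi-server deployment would typically keep bucket state in a shared store rather than process memory.

```python
import time


class TokenBucket:
    """Allows bursts up to `capacity` requests, refilling at `rate` tokens per second."""

    def __init__(self, capacity: float, rate: float, clock=time.monotonic) -> None:
        self.capacity = capacity
        self.rate = rate
        self._clock = clock  # injectable clock makes the limiter easy to test
        self._tokens = capacity
        self._last = clock()

    def allow(self, cost: float = 1.0) -> bool:
        now = self._clock()
        # Refill in proportion to elapsed time, never beyond capacity
        self._tokens = min(self.capacity, self._tokens + (now - self._last) * self.rate)
        self._last = now
        if self._tokens >= cost:
            self._tokens -= cost
            return True
        return False


class KeyedRateLimiter:
    """One bucket per key (user ID or client IP) for user- or IP-based limiting."""

    def __init__(self, capacity: float, rate: float, clock=time.monotonic) -> None:
        self.capacity = capacity
        self.rate = rate
        self._clock = clock
        # Grows with the number of keys; production code would evict idle buckets
        self._buckets: dict[str, TokenBucket] = {}

    def allow(self, key: str) -> bool:
        bucket = self._buckets.get(key)
        if bucket is None:
            bucket = self._buckets[key] = TokenBucket(self.capacity, self.rate, self._clock)
        return bucket.allow()
```

A rejected request would usually receive an HTTP 429 (Too Many Requests) response. The capacity controls how large a burst is tolerated, while the rate sets the sustained throughput.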

At Rapid Innovation, we leverage rate limiting strategies to ensure that your applications remain resilient and responsive, even under heavy load. This not only protects your infrastructure but also enhances user experience, leading to improved customer retention and higher ROI.

6.4. Error Handling

Effective error handling is essential for maintaining a robust and user-friendly application. It involves anticipating potential errors and implementing strategies to manage them gracefully.

- User-Friendly Messages: Instead of displaying technical jargon, applications should provide clear and concise error messages that help users understand what went wrong and how to resolve it. This enhances user experience and reduces frustration.

- Logging Errors: Keeping a log of errors is vital for diagnosing issues and improving system performance. Logs should capture relevant details, such as the error type, timestamp, and user actions leading up to the error.

- Graceful Degradation: In the event of an error, systems should aim to continue functioning at a reduced capacity rather than failing completely. This approach ensures that users can still access essential features even when some components are down.

- Validation and Sanitization: Implementing input validation and sanitization can prevent many errors from occurring in the first place. By ensuring that user inputs meet expected formats and constraints, applications can avoid common pitfalls like SQL injection or buffer overflow attacks; for SQL injection in particular, parameterized queries should be used in addition to validation.

- Retry Mechanisms: For transient errors, such as network timeouts, implementing retry mechanisms can improve user experience. This allows the system to attempt the operation again after a brief pause, often resolving temporary issues without user intervention.

- Monitoring and Alerts: Setting up monitoring tools to track error rates and performance metrics can help identify issues before they escalate. Alerts can notify developers or system administrators of critical errors, enabling prompt resolution.
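Retries for transient errors are commonly combined with exponential backoff and random jitter, so that many clients do not retry in lockstep, and each failed attempt is logged. The Python sketch below assumes a hypothetical TransientError exception marks retryable failures; which errors are safe to retry (and whether the operation is idempotent) depends on the application. The sleep function is injectable for testing, and make_flaky is a demo helper only.

```python
import logging
import random
import time

logger = logging.getLogger(__name__)


class TransientError(Exception):
    """Hypothetical marker for retryable failures such as timeouts."""


def retry_with_backoff(operation, max_attempts=4, base_delay=0.5, max_delay=8.0, sleep=time.sleep):
    """Call operation(); on TransientError, wait with exponential backoff and try again."""
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()
        except TransientError as exc:
            if attempt == max_attempts:
                raise  # retries exhausted: let the caller handle or report the error
            # Backoff ceiling doubles each attempt (0.5 s, 1 s, 2 s, ...) up to max_delay;
            # sleeping a random fraction of it ("full jitter") spreads out retries
            delay = min(max_delay, base_delay * 2 ** (attempt - 1))
            logger.warning("attempt %d/%d failed: %s; retrying", attempt, max_attempts, exc)
            sleep(random.uniform(0, delay))


def make_flaky(failures, result):
    """Demo helper: a callable that raises TransientError `failures` times, then returns `result`."""
    calls = 0

    def operation():
        nonlocal calls
        calls += 1
        if calls <= failures:
            raise TransientError(f"simulated timeout #{calls}")
        return result

    return operation
```

Permanent errors (for example, invalid input) are deliberately not caught, so they surface immediately instead of being retried.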

At Rapid Innovation, we prioritize effective error handling to ensure that your applications remain reliable and user-friendly. By implementing comprehensive error management strategies, we help clients minimize downtime and enhance overall system performance, leading to greater operational efficiency and ROI.

6.5. Platform-Specific Adapters

Platform-specific adapters are essential components in software architecture that facilitate communication between different systems or platforms. They act as intermediaries, translating requests and responses between various applications, ensuring seamless integration and functionality.

- Purpose of Platform-Specific Adapters:

- Enable interoperability between diverse systems.

- Simplify the integration process by handling platform-specific protocols and data formats.

- Enhance the overall user experience by providing consistent functionality across platforms.

- Types of Adapters:

- API Adapters: Connect different APIs, allowing them to communicate effectively.

- Database Adapters: Facilitate interaction between applications and various database systems.

- Messaging Adapters: Enable communication between different messaging systems or protocols.

- Benefits:

- Reduces development time by providing pre-built solutions for common integration challenges.

- Improves maintainability by isolating platform-specific code from the core application logic.

- Enhances scalability by allowing systems to evolve independently.

- Considerations:

- Ensure that adapters are designed to handle the specific requirements of each platform.

- Regularly update adapters to accommodate changes in APIs or platform specifications.

- Monitor performance to avoid bottlenecks in data transfer.
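The adapter idea can be shown with a small example: the core application builds one platform-neutral message, and each adapter translates it into the format a particular platform expects. Both target formats below (a chat service and an email API) are hypothetical, and network calls are omitted so that the translation step stays visible.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass


@dataclass
class Notification:
    """Platform-neutral message used by the core application."""
    recipient: str
    subject: str
    body: str


class NotificationAdapter(ABC):
    """Interface the core application depends on; one subclass per platform."""

    @abstractmethod
    def to_payload(self, note: Notification) -> dict:
        ...


class ChatAdapter(NotificationAdapter):
    """Hypothetical chat platform expecting {"channel": ..., "text": ...}."""

    def to_payload(self, note: Notification) -> dict:
        return {"channel": note.recipient, "text": f"*{note.subject}*\n{note.body}"}


class EmailAdapter(NotificationAdapter):
    """Hypothetical email API expecting a recipient list, subject, and body."""

    def to_payload(self, note: Notification) -> dict:
        return {"to": [note.recipient], "subject": note.subject, "body": note.body}


ADAPTERS: dict[str, NotificationAdapter] = {"chat": ChatAdapter(), "email": EmailAdapter()}


def dispatch(platform: str, note: Notification) -> dict:
    """Core logic stays platform-agnostic; only the adapter knows the wire format."""
    try:
        adapter = ADAPTERS[platform]
    except KeyError:
        raise ValueError(f"no adapter registered for platform {platform!r}") from None
    return adapter.to_payload(note)
```

Supporting a new platform, or absorbing a change in an existing platform's API, means adding or editing one adapter class without touching the core logic.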

At Rapid Innovation, we leverage platform-specific adapters to streamline integration processes for our clients, ensuring that their systems communicate effectively and efficiently. This not only accelerates development timelines but also enhances the overall functionality of their applications, leading to greater ROI. For custom solutions, consider our custom AI development services.

7. Performance Optimization

Performance optimization is a critical aspect of software development that focuses on improving the efficiency and speed of applications. It involves various techniques and strategies to ensure that applications run smoothly and can handle increased loads without degradation in performance.

- Key Areas of Focus:

- Code Efficiency: Writing clean, efficient code that minimizes resource consumption.

- Resource Management: Effectively managing memory, CPU, and other resources to prevent leaks and bottlenecks.

- Load Balancing: Distributing workloads evenly across servers to enhance responsiveness and reliability.

- Techniques for Optimization:

- Profiling: Use profiling tools to identify performance bottlenecks in the application.

- Asynchronous Processing: Implement asynchronous operations to improve responsiveness and reduce wait times.

- Minification: Reduce the size of files (like CSS and JavaScript) to decrease load times.

- Benefits of Performance Optimization:

- Improved user satisfaction due to faster load times and smoother interactions.

- Increased application reliability and uptime.

- Enhanced scalability, allowing applications to handle more users and data.
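Asynchronous processing pays off most for I/O-bound work. The Python sketch below uses asyncio with a hypothetical fetch_record coroutine, whose asyncio.sleep stands in for a database or HTTP call, to compare awaiting calls one by one against running them concurrently with asyncio.gather. Actual gains depend on the workload; CPU-bound code needs processes or native extensions instead. To find where time is spent before optimizing, Python's standard cProfile module is a common starting point.

```python
import asyncio
import time


async def fetch_record(record_id: int, latency: float = 0.1) -> dict:
    """Hypothetical I/O-bound call; asyncio.sleep simulates network latency."""
    await asyncio.sleep(latency)
    return {"id": record_id}


async def fetch_sequential(ids) -> list:
    # Each call waits for the previous one: total time is roughly len(ids) * latency
    return [await fetch_record(i) for i in ids]


async def fetch_concurrent(ids) -> list:
    # gather runs the coroutines concurrently and returns results in input order
    return await asyncio.gather(*(fetch_record(i) for i in ids))


def timed(coro_fn, ids):
    """Run one of the fetchers and return (results, elapsed seconds)."""
    start = time.perf_counter()
    result = asyncio.run(coro_fn(ids))
    return result, time.perf_counter() - start


if __name__ == "__main__":
    for fn in (fetch_sequential, fetch_concurrent):
        _, elapsed = timed(fn, range(10))
        print(f"{fn.__name__}: {elapsed:.2f}s")
```

With ten simulated calls of 0.1 s each, the sequential version takes about a second while the concurrent one finishes in roughly the time of a single call.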

At Rapid Innovation, we prioritize performance optimization to ensure that our clients' applications not only meet but exceed user expectations. By implementing tailored optimization strategies, we help businesses achieve higher efficiency and better resource utilization, ultimately leading to increased ROI.

7.1. Caching Strategies

Caching strategies are techniques used to store frequently accessed data in a temporary storage area, allowing for quicker retrieval and reduced load on the primary data source. Effective caching can significantly enhance application performance and user experience.

- Types of Caching:

- In-Memory Caching: Stores data in memory for rapid access, either within the application process or in a dedicated in-memory store such as Redis or Memcached.

- Browser Caching: Utilizes the user's browser to store static resources, reducing the need for repeated requests to the server.

- Content Delivery Network (CDN) Caching: Distributes cached content across multiple servers worldwide, improving access speed for users regardless of their location.

- Best Practices for Caching:

- Cache Invalidation: Implement strategies to ensure that stale data is updated or removed from the cache.

- Cache Size Management: Monitor and manage cache size to prevent excessive memory usage.

- Data Granularity: Determine the appropriate level of granularity for cached data to balance performance and resource usage.

- Benefits of Caching:

- Reduced latency and faster response times for users.

- Decreased load on backend systems, leading to improved overall performance.

- Cost savings by minimizing the need for additional server resources.
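Cache invalidation and size management can both be seen in a small in-process cache. This Python sketch assumes time-based expiry (TTL) plus least-recently-used eviction when a size cap is reached, with an explicit invalidate() for when the underlying data changes. The class name and defaults are illustrative; shared caches such as Redis implement the same ideas across servers.

```python
import time
from collections import OrderedDict


class TTLCache:
    """In-process cache with a size cap (LRU eviction) and per-entry expiry (TTL)."""

    def __init__(self, max_entries: int = 1024, ttl_seconds: float = 60.0, clock=time.monotonic):
        self.max_entries = max_entries
        self.ttl = ttl_seconds
        self._clock = clock
        self._data = OrderedDict()  # key -> (expires_at, value); order tracks recency

    def get(self, key, default=None):
        item = self._data.get(key)
        if item is None:
            return default
        expires_at, value = item
        if self._clock() >= expires_at:
            del self._data[key]  # stale entry: invalidate lazily on read
            return default
        self._data.move_to_end(key)  # mark as most recently used
        return value

    def set(self, key, value) -> None:
        self._data[key] = (self._clock() + self.ttl, value)
        self._data.move_to_end(key)
        if len(self._data) > self.max_entries:
            self._data.popitem(last=False)  # evict the least recently used entry

    def invalidate(self, key) -> None:
        """Explicitly drop an entry, e.g. after the underlying record changes."""
        self._data.pop(key, None)
```

For pure functions without expiry needs, Python's built-in functools.lru_cache decorator covers the size-capped case with no extra code.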

By implementing platform-specific adapters, optimizing performance, and utilizing effective caching strategies, Rapid Innovation empowers developers to create robust applications that deliver exceptional user experiences while maintaining efficiency and scalability. Our expertise in these areas ensures that our clients achieve their business goals effectively and efficiently, maximizing their return on investment.

7.2. Database Optimization

Database optimization is crucial for enhancing the performance and efficiency of database systems. It involves various techniques aimed at improving the speed of data retrieval and ensuring that the database operates smoothly under varying loads. At Rapid Innovation, we leverage our expertise in AI to implement advanced database optimization strategies that can lead to significant improvements in operational efficiency and return on investment (ROI).

- Indexing: Creating indexes on frequently queried columns can significantly speed up data retrieval. Proper indexing reduces the amount of data the database engine needs to scan, leading to faster query responses and improved user experience.

- Query Optimization: Analyzing and rewriting SQL queries can lead to better execution plans. Commands such as EXPLAIN show how the database intends to execute a query, making it easier to spot slow steps like full table scans. Our AI-driven tools can automate this analysis, helping keep your database queries optimized for performance.

- Normalization: Structuring the database to reduce redundancy and improve data integrity can enhance performance. However, over-normalization can lead to complex queries, so a balance is necessary. We assist clients in finding the right structure that maximizes efficiency without compromising on data integrity.

- Caching: Implementing caching strategies, such as in-memory databases or query caching, can reduce the load on the database by storing frequently accessed data in memory. Our solutions can intelligently manage caching based on usage patterns, further enhancing performance.

- Regular Maintenance: Routine tasks like updating statistics, rebuilding indexes, and purging old data can keep the database running efficiently. We provide automated maintenance solutions that ensure your database remains optimized without manual intervention. For more information on how AI can enhance these processes, check out our article on IT resource optimization.
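To show how indexing and EXPLAIN work together, the sketch below uses SQLite through Python's standard `sqlite3` module. SQLite is an assumption made so the example runs anywhere; PostgreSQL and MySQL provide `EXPLAIN` with different output formats. The `orders` table and the index name are hypothetical. The example prints the query plan before and after an index is added on the filtered column.

```python
import sqlite3


def query_plan(conn: sqlite3.Connection, sql: str, params=()) -> list:
    """Return the human-readable detail lines of SQLite's EXPLAIN QUERY PLAN."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return [row[-1] for row in rows]  # the last column holds the plan description


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)")
conn.executemany(
    "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
    [(i % 100, i * 1.5) for i in range(1000)],
)

sql = "SELECT total FROM orders WHERE customer_id = ?"
before = query_plan(conn, sql, (42,))  # no index yet: full table scan

# Index the frequently filtered column, then re-check the plan.
conn.execute("CREATE INDEX idx_orders_customer ON orders(customer_id)")
after = query_plan(conn, sql, (42,))  # now an index search

print("before:", before)
print("after: ", after)
```

The exact wording of the plan varies between SQLite versions. The change from a `SCAN` to a `SEARCH ... USING INDEX` is the signal to look for.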

7.3. Load Balancing

Load balancing is a technique used to distribute workloads across multiple servers or resources to ensure no single server becomes overwhelmed. This is essential for maintaining high availability and reliability in applications. Rapid Innovation employs sophisticated load balancing strategies that enhance application performance and user satisfaction.

- Traffic Distribution: Load balancers can distribute incoming traffic evenly across multiple servers, preventing any single server from becoming a bottleneck. Our AI algorithms can predict traffic patterns and adjust distribution dynamically.

- Failover Support: In case one server fails, load balancers can redirect traffic to other operational servers, ensuring continuous service availability. This minimizes downtime and enhances user trust in your services.

- Scalability: Load balancing allows for easy scaling of applications. As demand increases, additional servers can be added to the pool without disrupting service. Our solutions facilitate seamless scaling, ensuring that your infrastructure can grow with your business.

- Session Persistence: Some applications require session persistence, where a user is consistently directed to the same server. Load balancers can manage this through sticky sessions, ensuring a smooth user experience.

- Health Monitoring: Load balancers continuously monitor the health of servers and can automatically reroute traffic away from any server that is underperforming or down. Our monitoring tools provide real-time insights, allowing for proactive management of server health.
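The sketch below combines three of the behaviors listed above: round-robin traffic distribution, failover driven by health status, and sticky sessions. It is a simplified, in-process model and not the API of any real load balancer. The class, its methods and the server names are hypothetical, and the health checker that calls `mark` is assumed to exist elsewhere.

```python
import hashlib
from itertools import count
from typing import Dict, List, Optional


class LoadBalancer:
    """Toy load balancer: round robin over healthy servers, optional sticky sessions."""

    def __init__(self, servers: List[str]):
        self.servers = list(servers)
        self.healthy: Dict[str, bool] = {s: True for s in servers}
        self._counter = count()

    def mark(self, server: str, is_healthy: bool) -> None:
        # Called by an external health checker when a server goes down or recovers.
        self.healthy[server] = is_healthy

    def _pool(self) -> List[str]:
        pool = [s for s in self.servers if self.healthy[s]]
        if not pool:
            raise RuntimeError("no healthy servers available")
        return pool

    def pick(self, session_id: Optional[str] = None) -> str:
        pool = self._pool()  # failover: unhealthy servers are never selected
        if session_id is not None:
            # Sticky session: the same session ID maps to the same server
            # as long as the healthy pool does not change.
            digest = hashlib.sha256(session_id.encode()).digest()
            return pool[int.from_bytes(digest[:8], "big") % len(pool)]
        # Round robin: rotate through the healthy servers in order.
        return pool[next(self._counter) % len(pool)]
```

Hashing modulo the pool size reassigns many sessions whenever a server joins or leaves. Production systems often use cookies or consistent hashing instead, which keeps most sessions on their original server.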

7.4. Resource Management

Resource management involves efficiently allocating and utilizing resources such as CPU, memory, storage, and network bandwidth in a computing environment. Effective resource management is vital for optimizing performance and minimizing costs. At Rapid Innovation, we utilize AI-driven resource management solutions to help clients achieve optimal performance while maximizing ROI.

- Resource Allocation: Properly allocating resources based on application needs can prevent resource contention and ensure that critical applications have the necessary resources to function optimally. Our AI models analyze usage patterns to recommend the best allocation strategies.

- Monitoring Tools: Utilizing monitoring tools can provide insights into resource usage patterns, helping identify bottlenecks and areas for improvement. We offer advanced monitoring solutions that leverage AI to predict future resource needs.

- Virtualization: Implementing virtualization technologies allows for better resource utilization by running multiple virtual machines on a single physical server, maximizing hardware efficiency. Our virtualization strategies help clients reduce costs while improving performance.

- Auto-scaling: In cloud environments, auto-scaling can dynamically adjust resources based on current demand, ensuring that applications have the right amount of resources at all times. Our solutions ensure that scaling is both efficient and cost-effective.

- Cost Management: Regularly reviewing resource usage can help identify underutilized resources, allowing organizations to optimize costs by scaling down or reallocating resources as needed. We provide tools that automate this review process, ensuring that your organization remains agile and cost-efficient.
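Auto-scaling decisions are often expressed as target tracking. The replica count is scaled by the ratio of observed utilization to target utilization, rounded up, then clamped to configured bounds. This is similar to the formula used by the Kubernetes Horizontal Pod Autoscaler. The function below is a hedged sketch of that idea. Its name, defaults and tolerance band are illustrative assumptions, not any platform's actual settings.

```python
import math


def desired_replicas(current: int, current_util: float, target_util: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Target-tracking scale decision: ceil(current * current_util / target_util), clamped."""
    ratio = current_util / target_util
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to target: keep the current size to avoid flapping.
        return current
    desired = math.ceil(current * ratio)
    # Clamp so scaling never drops below the floor or exceeds the budget ceiling.
    return max(min_replicas, min(max_replicas, desired))
```

For example, 4 replicas at 90% CPU against a 60% target scale out to 6. The same 4 replicas at 15% scale in to 1, which is the cost-management case of releasing underutilized capacity.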

By partnering with Rapid Innovation, clients can leverage our expertise in database optimization strategies, load balancing, and resource management to achieve their business goals efficiently and effectively, ultimately leading to greater ROI.

7.5. Monitoring and Metrics

Monitoring and metrics are essential components of any successful project or system. They provide insights into performance, help identify areas for improvement, and ensure that objectives are being met. Effective project monitoring metrics can lead to better decision-making and enhanced outcomes, ultimately driving greater ROI for your organization.

- Define Key Performance Indicators (KPIs): Establish clear KPIs that align with your project goals. These indicators should be measurable and relevant to your objectives, allowing for precise tracking of progress and success.

- Use Real-Time Data: Implement tools that allow for real-time data collection and analysis. This enables quick responses to any issues that may arise, ensuring that your project remains on track and aligned with business objectives.

- Regular Reporting: Create a schedule for reporting metrics to stakeholders. This keeps everyone informed and engaged in the project's progress, fostering collaboration and transparency.

- Analyze Trends: Look for patterns in the data over time. Understanding trends can help predict future performance and inform strategic decisions, allowing for proactive adjustments that enhance project outcomes.

- Adjust Strategies: Use the insights gained from monitoring to adjust strategies as needed. Flexibility is key to responding to changing circumstances, ensuring that your project remains relevant and effective.

- Tools and Software: Utilize monitoring tools and software that can automate data collection and reporting. This reduces manual effort and increases accuracy, freeing up resources for more strategic initiatives.

- Stakeholder Involvement: Involve stakeholders in the monitoring process. Their feedback can provide valuable insights and foster a sense of ownership, enhancing commitment to project success.
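Turning KPIs into something a tool can check automatically usually means giving each KPI a measurable value and a threshold. The sketch below computes a nearest-rank percentile over a window of latency samples and reports which KPIs breach their thresholds. The KPI names, threshold values and sample data are hypothetical and chosen only for illustration.

```python
import math
from dataclasses import dataclass
from typing import Dict, List, Sequence


@dataclass
class KPI:
    name: str
    threshold: float
    higher_is_worse: bool = True  # e.g. latency (higher is worse) vs availability (lower is worse)


def percentile(values: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile of a window of samples."""
    if not values:
        raise ValueError("no samples")
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def evaluate(measurements: Dict[str, float], kpis: List[KPI]) -> List[str]:
    """Return one alert message per KPI whose measured value breaches its threshold."""
    alerts = []
    for kpi in kpis:
        value = measurements[kpi.name]
        breached = value > kpi.threshold if kpi.higher_is_worse else value < kpi.threshold
        if breached:
            alerts.append(f"{kpi.name}={value} breaches threshold {kpi.threshold}")
    return alerts


# Hypothetical window of request latencies in milliseconds.
latencies = [120, 95, 180, 400, 110, 130, 105, 98, 250, 101]
measurements = {
    "p95_latency_ms": percentile(latencies, 95),
    "error_rate": 0.005,
    "availability": 0.998,
}
kpis = [
    KPI("p95_latency_ms", 300),
    KPI("error_rate", 0.01),
    KPI("availability", 0.999, higher_is_worse=False),
]
for alert in evaluate(measurements, kpis):
    print(alert)
```

Running the same evaluation on a schedule, and storing its results, provides both the regular reports and the history needed for trend analysis.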

8. Implementation Guide

An implementation guide serves as a roadmap for executing a project or system. It outlines the steps necessary to achieve successful implementation, ensuring that all team members are aligned and informed.

- Define Objectives: Clearly outline the objectives of the implementation. This provides a focus for the entire team and helps measure success.

- Develop a Timeline: Create a detailed timeline that includes all phases of the implementation process. This helps keep the project on track and ensures timely completion.

- Assign Roles and Responsibilities: Clearly define who is responsible for each task. This prevents confusion and ensures accountability.

- Resource Allocation: Identify the resources needed for implementation, including personnel, technology, and budget. Ensure that these resources are available and allocated appropriately.

- Risk Management: Develop a risk management plan to identify potential challenges and outline strategies to mitigate them. This proactive approach can save time and resources.

- Training and Support: Provide training for team members to ensure they understand their roles and the tools they will be using. Ongoing support is also crucial for addressing any issues that arise.

- Communication Plan: Establish a communication plan to keep all stakeholders informed throughout the implementation process. Regular updates foster transparency and collaboration.

8.1. Setup Requirements

Setup requirements are the foundational elements needed to initiate a project or system. Understanding these requirements is crucial for a smooth implementation process.

- Hardware Requirements: Identify the necessary hardware components, such as servers, computers, and networking equipment. Ensure that these meet the specifications needed for optimal performance.

- Software Requirements: Determine the software applications and tools required for the project. This includes operating systems, databases, and any specialized software.

- Network Configuration: Plan the network configuration to ensure seamless connectivity. This includes setting up firewalls, routers, and any necessary security measures.

- User Access: Define user access levels and permissions. This ensures that team members have the appropriate access to perform their tasks while maintaining security.

- Data Migration: If applicable, outline the process for migrating existing data to the new system. This includes data cleansing and validation to ensure accuracy.

- Compliance and Security: Ensure that all setup requirements comply with relevant regulations and security standards. This protects sensitive information and maintains trust.

- Testing Environment: Establish a testing environment to validate the setup before going live. This allows for troubleshooting and adjustments without impacting the main system.
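The data cleansing and validation step in a migration can be sketched as a pass that normalizes each record and sets aside any record that fails a rule, so it is not loaded silently. The schema (`id`, `email`) and the rules below are hypothetical. A real migration would derive them from the target system's constraints.

```python
from typing import Dict, Iterable, List, Tuple

REQUIRED_FIELDS = ("id", "email")  # hypothetical target schema


def cleanse_and_validate(rows: Iterable[Dict[str, str]]) -> Tuple[List[Dict[str, str]], List[str]]:
    """Split incoming rows into cleaned valid rows and human-readable error messages."""
    valid: List[Dict[str, str]] = []
    errors: List[str] = []
    seen_ids = set()
    for i, raw in enumerate(rows):
        # Cleansing: trim stray whitespace from every string field.
        row = {k: (v.strip() if isinstance(v, str) else v) for k, v in raw.items()}
        missing = [f for f in REQUIRED_FIELDS if not row.get(f)]
        if missing:
            errors.append(f"row {i}: missing {', '.join(missing)}")
            continue
        row["email"] = row["email"].lower()  # normalize for consistent lookups
        if "@" not in row["email"]:
            errors.append(f"row {i}: invalid email {row['email']!r}")
            continue
        if row["id"] in seen_ids:
            errors.append(f"row {i}: duplicate id {row['id']}")
            continue
        seen_ids.add(row["id"])
        valid.append(row)
    return valid, errors
```

Running this in the testing environment first shows how many records need manual attention before the production cutover.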

By focusing on monitoring and metrics, following a structured implementation guide, and understanding setup requirements, organizations can enhance their project outcomes and ensure successful execution. Rapid Innovation is committed to helping clients navigate these processes effectively, leveraging AI-driven insights to maximize ROI and achieve business goals efficiently.

8.2. Configuration

Configuration is a critical step in the software development lifecycle, ensuring that the application is set up correctly to meet user requirements and system specifications. Proper configuration can significantly enhance performance, security, and usability, ultimately leading to greater ROI for our clients.

- Identify system requirements: Understand the hardware and software prerequisites for the application, ensuring that the infrastructure is optimized for AI workloads.

- Set up environment variables: Configure necessary environment variables that the application will use during execution, facilitating seamless integration with AI models and services.

- Database configuration: Ensure that the database connections are correctly set up, including user permissions and connection strings, to support efficient data retrieval and storage for AI applications.

- Application settings: Adjust application settings such as API keys, service endpoints, and feature flags to align with the deployment environment, ensuring that AI functionalities are accessible and operational.

- Security configurations: Implement security measures, including firewalls, encryption, and access controls to protect sensitive data, particularly important in AI applications that handle personal or proprietary information.

- Performance tuning: Optimize configurations for performance, such as adjusting memory allocation and thread management, to enhance the responsiveness of AI algorithms and improve user experience.

- Documentation: Maintain clear documentation of all configuration settings for future reference and troubleshooting, which is essential for ongoing support and optimization of AI solutions.
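A common way to apply several of these points together is to load all environment variables, connection strings, API keys and feature flags into one validated configuration object at startup. A missing setting then fails fast instead of surfacing later at runtime. The sketch below assumes hypothetical variable names (`DATABASE_URL`, `MODEL_ENDPOINT`, `API_KEY`, `FEATURE_BATCH_INFERENCE`, `WORKER_THREADS`). It also hides the API key from the object's printed form, which keeps it out of logs.

```python
import os
from dataclasses import dataclass, field
from typing import Mapping

REQUIRED_VARS = ("DATABASE_URL", "MODEL_ENDPOINT", "API_KEY")  # hypothetical names


@dataclass(frozen=True)
class AppConfig:
    database_url: str
    model_endpoint: str
    api_key: str = field(repr=False)  # excluded from repr so it is not logged by accident
    enable_batch_inference: bool = False
    worker_threads: int = 4


def _parse_bool(value: str) -> bool:
    return value.strip().lower() in {"1", "true", "yes", "on"}


def load_config(env: Mapping[str, str] = os.environ) -> AppConfig:
    """Build and validate the configuration, failing fast on missing or invalid values."""
    missing = [name for name in REQUIRED_VARS if not env.get(name)]
    if missing:
        raise KeyError(f"missing required settings: {', '.join(missing)}")
    threads = int(env.get("WORKER_THREADS", "4"))
    if threads < 1:
        raise ValueError("WORKER_THREADS must be >= 1")
    return AppConfig(
        database_url=env["DATABASE_URL"],
        model_endpoint=env["MODEL_ENDPOINT"],
        api_key=env["API_KEY"],
        enable_batch_inference=_parse_bool(env.get("FEATURE_BATCH_INFERENCE", "false")),
        worker_threads=threads,
    )
```

Because `load_config` accepts any mapping, tests can pass a plain dictionary instead of modifying the real process environment. Each deployment environment supplies its own values without code changes.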

8.3. Deployment Steps

Deployment is the process of making an application available for use. It involves several steps to ensure that the application is correctly installed and operational in the target environment, maximizing the effectiveness of AI solutions.

- Prepare the deployment environment: Ensure that the target environment meets all system requirements and is ready for the application, particularly for AI workloads that may require specific hardware configurations.

- Build the application: Compile the application code and package it into deployable units, such as binaries or containers, to streamline the deployment of AI models and services.

- Transfer files: Move the application files to the target server or cloud environment using secure transfer methods, ensuring that AI components are securely deployed.

- Configure the application: Apply the necessary configuration settings specific to the deployment environment, enabling the application to leverage AI capabilities effectively. This may include using an application deployment tool like AWS OpsWorks or AWS Elastic Beanstalk.

- Database migration: Execute any database migrations or updates required for the application to function correctly, ensuring that data is structured to support AI analytics.

- Start services: Launch the application services and ensure that all components are running as expected, particularly those related to AI processing. This could involve deploying a Heroku app or using Azure DevOps to deploy an Azure WebJob.

- Monitor deployment: Use monitoring tools to track the deployment process and identify any issues that arise, allowing for quick resolution and minimizing downtime. Deployment tracking is essential in this phase.

- Rollback plan: Have a rollback plan in place to revert to the previous version in case of deployment failure, safeguarding the integrity of AI applications.
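The "monitor, then roll back on failure" steps can be expressed as a small piece of deployment logic. The sketch keeps a history of known-good releases, activates the new version, runs a health check, and restores the previous version if the check fails. The `activate` and `health_check` hooks are hypothetical placeholders. In practice they might switch a container image tag or load balancer target, or probe a health endpoint. This is not the interface of Elastic Beanstalk, Heroku or Azure DevOps.

```python
from typing import Callable, List, Optional


class Deployer:
    """Tracks known-good releases and reverts to the last one if a health check fails."""

    def __init__(self, activate: Callable[[str], None], health_check: Callable[[str], bool]):
        self.activate = activate          # hypothetical hook: make a version live
        self.health_check = health_check  # hypothetical hook: verify the live version works
        self.history: List[str] = []      # successfully deployed versions, newest last

    @property
    def current(self) -> Optional[str]:
        return self.history[-1] if self.history else None

    def deploy(self, version: str) -> bool:
        previous = self.current
        self.activate(version)
        if self.health_check(version):
            self.history.append(version)
            return True
        # Rollback plan: restore the last known-good version, if there is one.
        if previous is not None:
            self.activate(previous)
        return False
```

Recording only versions that pass the health check guarantees that the rollback target is always one that has been verified in this environment.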

8.4. Testing Procedures

Testing is an essential phase in the software development process, ensuring that the application functions as intended and meets quality standards. A well-structured testing procedure can help identify bugs and improve user satisfaction, particularly in AI-driven applications.

- Unit testing: Test individual components or functions of the application to ensure they work correctly in isolation, including AI algorithms and models.